JamieTheAnything

Member, 44 posts


JamieTheAnything started following Precision 7550 & Precision 7750 owner's thread

-

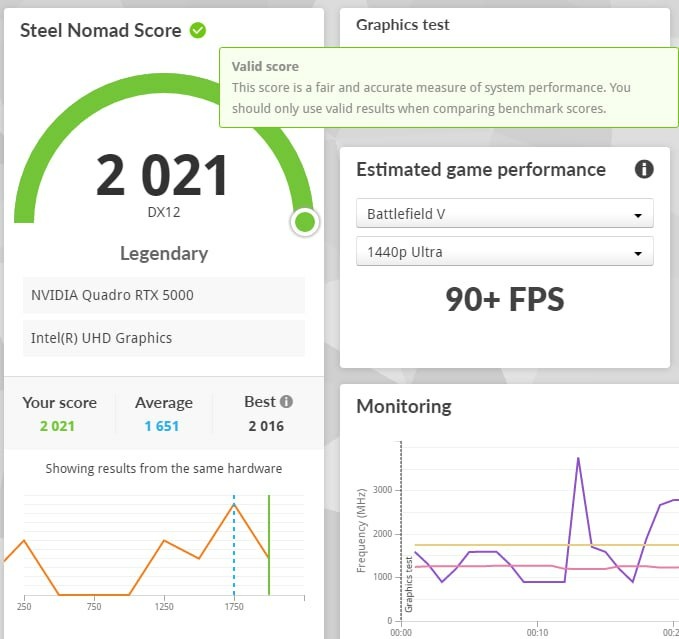

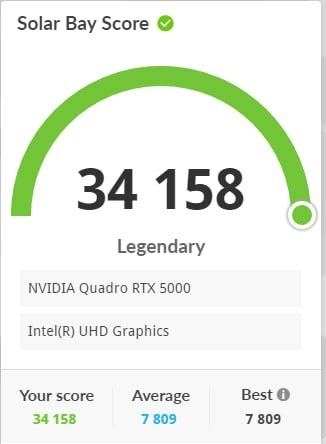

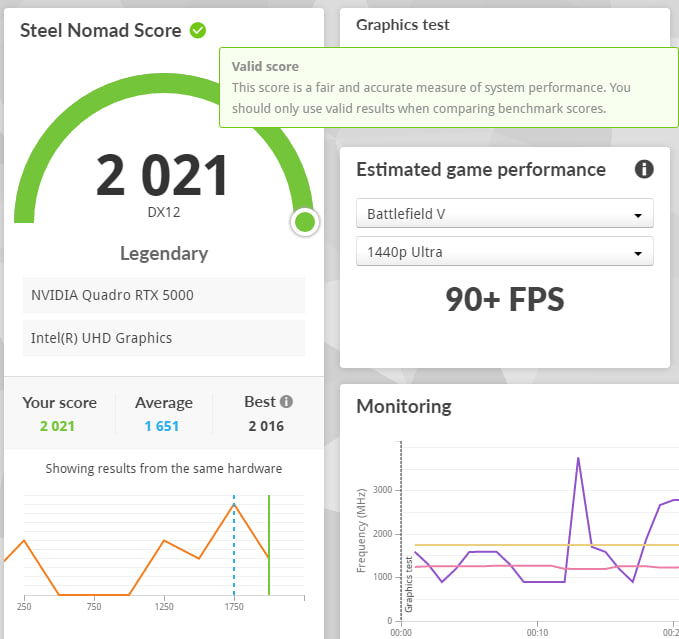

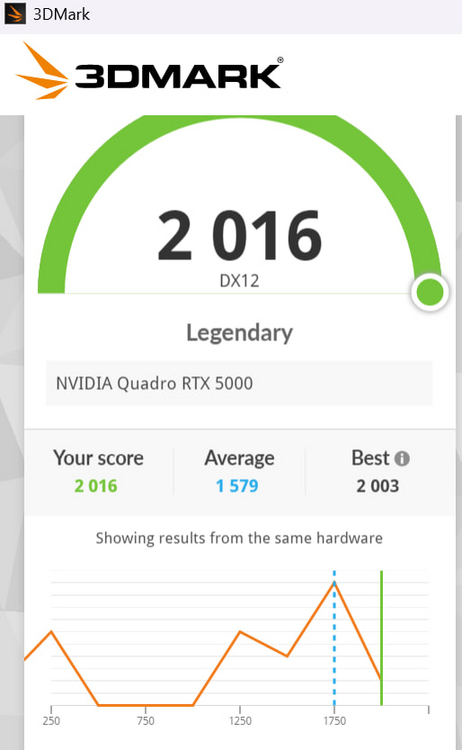

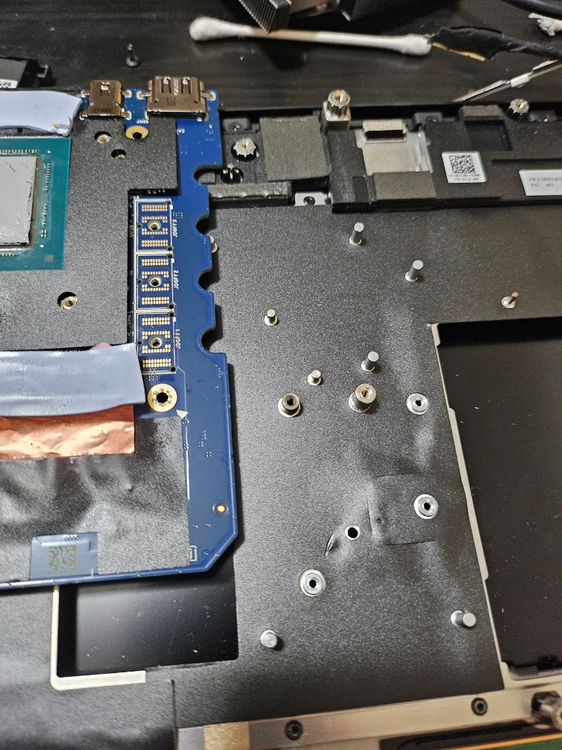

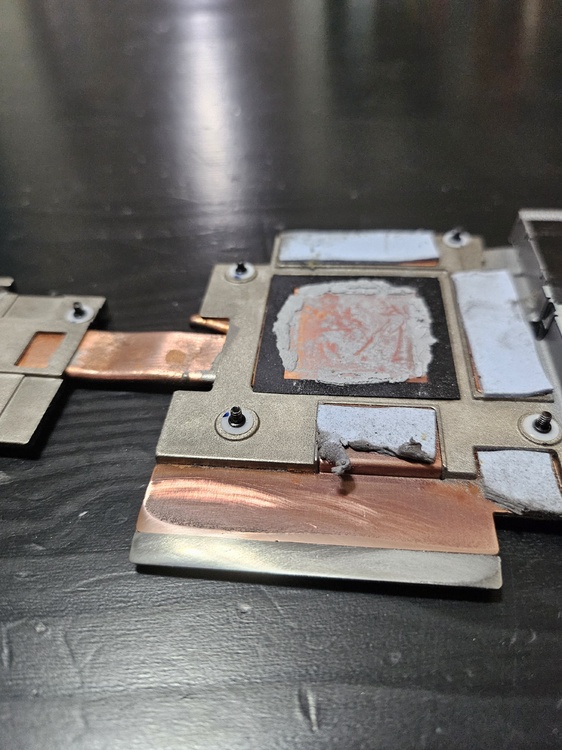

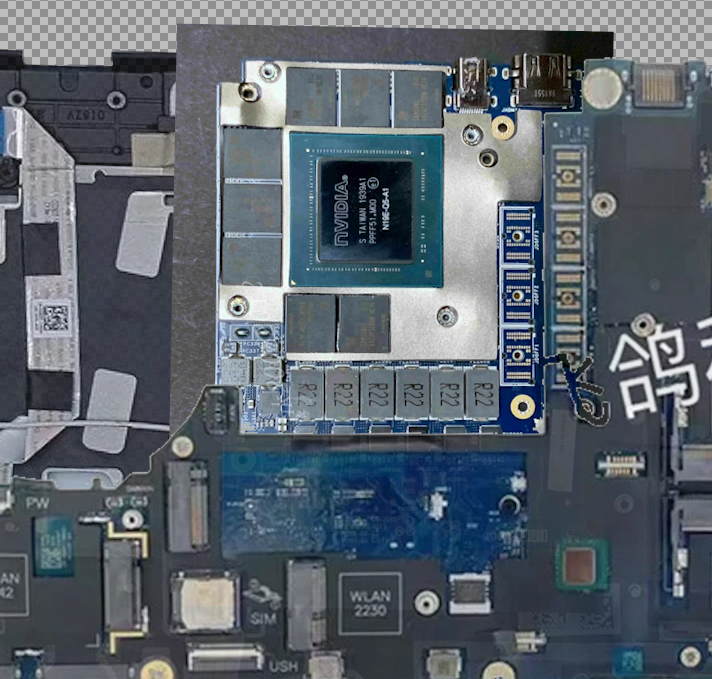

I put in 3 copper shims, though I think only one would have been enough. Each one is 0.2 mm, so the stack is roughly 0.6 mm right now. However, I was seeing much better results with just one 0.2 mm shim, because of the losses from stacking thermal paste->shim->thermal paste too many times. In any case, I'm glad it's working properly and no longer throttling, even with the 105 W BIOS. 3DMark results are in the screenshots. Please also note that you will have to remove this post for the GPU to fit.
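A rough way to see why fewer paste layers helped is to treat each layer as a flat conduction resistance, R = t / (k·A), in series. Stacking n shims means n + 1 paste layers, and paste conducts far worse than copper. The following is a minimal sketch under loudly stated assumptions: the conductivities, the 0.05 mm paste bond line, and the 20 mm x 20 mm contact area are hypothetical values, not measurements from this card. It also assumes every paste layer squeezes to the same thickness and ignores interface contact resistance.

```python
# Idealized 1-D series conduction model. All material and geometry values are
# assumptions for illustration, not measurements from this laptop.
K_COPPER = 400.0       # W/(m*K), typical for pure copper
K_PASTE = 5.0          # W/(m*K), assumed mid-range thermal paste
T_SHIM = 0.2e-3        # m, 0.2 mm shim thickness (from the post)
T_PASTE = 0.05e-3      # m, assumed bond-line thickness of each paste layer
AREA = 0.02 * 0.02     # m^2, hypothetical 20 mm x 20 mm contact area


def layer_resistance(thickness_m: float, k: float, area_m2: float) -> float:
    """Conduction resistance of one flat layer, R = t / (k * A), in K/W."""
    return thickness_m / (k * area_m2)


def stack_resistance(num_shims: int) -> float:
    """Total resistance of paste -> shim -> paste ... -> paste.

    n stacked shims are separated and capped by n + 1 paste layers.
    Interface contact resistance is ignored.
    """
    r_copper = num_shims * layer_resistance(T_SHIM, K_COPPER, AREA)
    r_paste = (num_shims + 1) * layer_resistance(T_PASTE, K_PASTE, AREA)
    return r_copper + r_paste


if __name__ == "__main__":
    power_w = 105.0  # the 105 W BIOS power limit mentioned in the post
    for n in (1, 3):
        r = stack_resistance(n)
        print(f"{n} shim(s): {r:.4f} K/W, about {r * power_w:.1f} K rise at {power_w:.0f} W")
```

With these assumed numbers, the three-shim stack has roughly twice the resistance of the single-shim stack. Almost all of the increase comes from the two extra paste layers, not from the copper itself.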

-

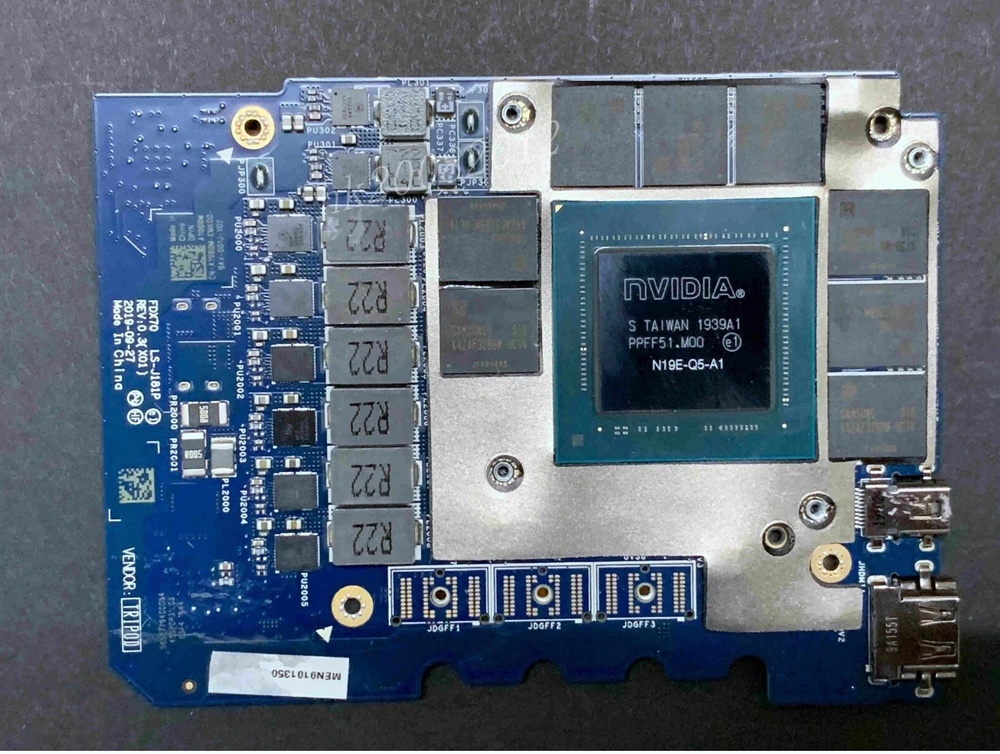

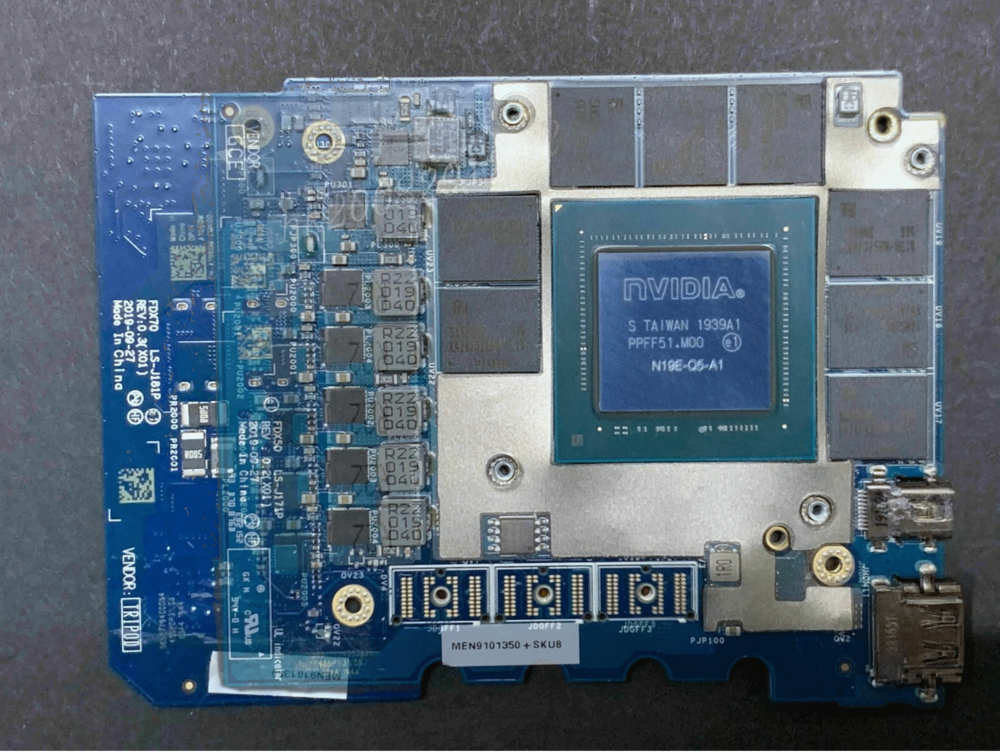

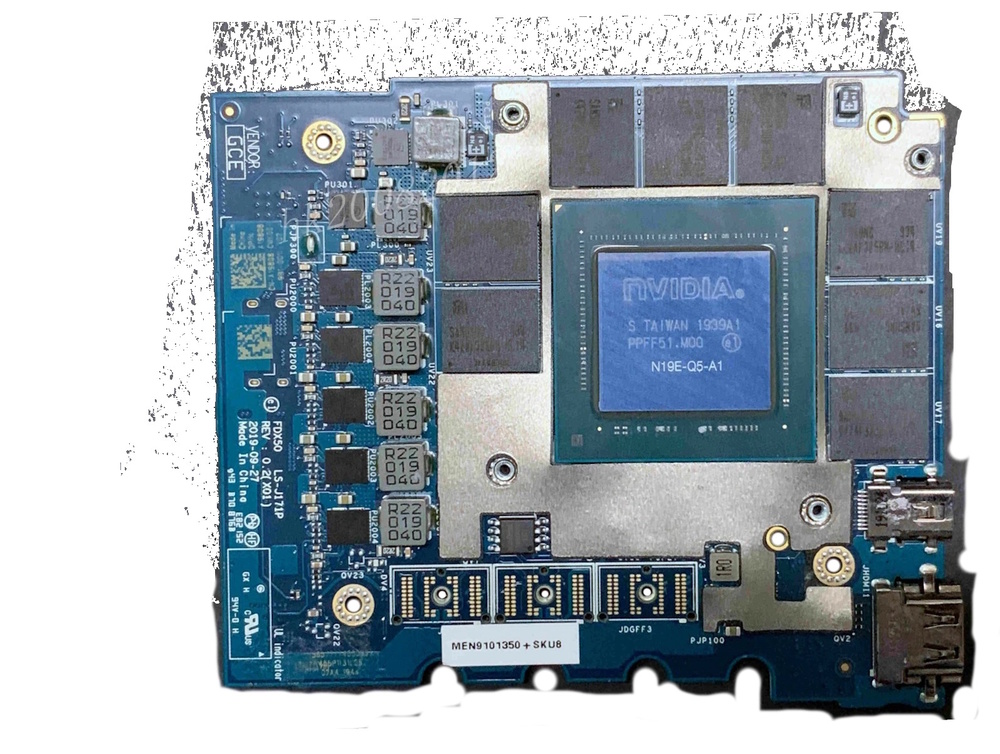

So, uhhh, developments: this card runs too damn hot, and I think there is less than a 1 mm gap between the heatsink and the GPU die. However, I can fix this using the copper foil I was using for the MOSFETs. Even with the Max-Q vBIOS flashed onto the card and the power limited to 85 W, it couldn't keep the hotspot from creeping past 90 °C. Past that point it throttles down to 300-700 MHz, which makes certain games unplayable.

-

Alright, an update on the whole project: I'm going to use a very thin 0.2 mm sheet of copper to bridge the heatsink, the inductors, and the MOSFETs. On the inductor side, the order from top to bottom is Heatsink->Paste->Copper shim->Putty->Inductors. On the MOSFET side, I'm using a Motherboard->Putty->Shim->Paste->MOSFET sandwich. Hopefully this will keep the poor MOSFETs from dumping all of their heat into the motherboard, while also ensuring that the MOSFETs get the "quickest" path to the heatsink.

-

I would recommend a thermal repaste along with replacing the thermal pads with thermal putty. It seems like your machine might not have the entire die in contact with the heatsink. At idle it shouldn't be at 100 °C. Even when stressed it may reach 100 °C, but it should still hold around 4.2-4.3 GHz all-core under load (Optimized fan preset). I have yet to repaste my 7550 since getting it, so it should still be on the original paste, and that has been my experience. Fresh, better paste should improve things dramatically. Most CPUs with cracked, dried-out paste show exactly what you're experiencing, so repasting and also replacing the pads for the CPU with putty would be your best bet for getting things under control thermally.

-

In short, a little of the underside of the palm rest (the part that faces up when you're looking at the bare GPU) would have to be trimmed to make room for the MOSFETs/PD of the non-Max-Q version. However, the non-Max-Q version is the same length as the P5000 that was supposed to fit the 7540/7550. As far as I can tell, it's just the longer of the two versions. The width is the same, and only one screw mount (the one that secures it to the palm rest) is different. Other than that, the palm rest and heatsink modifications are all that's needed. The heatsink has to be flattened out, since the "dip" for the MOSFETs will be in the wrong place. Then you add a shim or a small piece of aluminum to connect the MOSFETs to the 7550 heatsink. Since they both share the same BIOS, there shouldn't be a whitelist conflict.