Posts posted by Reciever

-

-

24 minutes ago, tps3443 said:

I’m amazed how fast that 10850K is against the 5800X3D. Just gotta say it!

Oh yeah for sure, if anyone on 10th Gen @ 5GHz with high speed memory was thinking about swapping to the 5800X3D, it wouldn't make too much sense from a general standpoint.

The 5800X3D will be my daily driver so I can put the 10850K into quad CrossFire / quad SLI benching in the winter.

-

4 minutes ago, Mr. Fox said:

I found the TV series equally slow and boring. I forced myself to watch the first 3 or 4 episodes and just couldn't get into it. My wife likes it. I find it difficult to not fall asleep watching them.

It didn't help that the writers actually hated the IP. Fans have rejected the show at this point, including Henry Cavill, and I believe it's canceled.

Anyways, the thread for 2700X / 5800X3D / 10850K has been made.

-

Citations

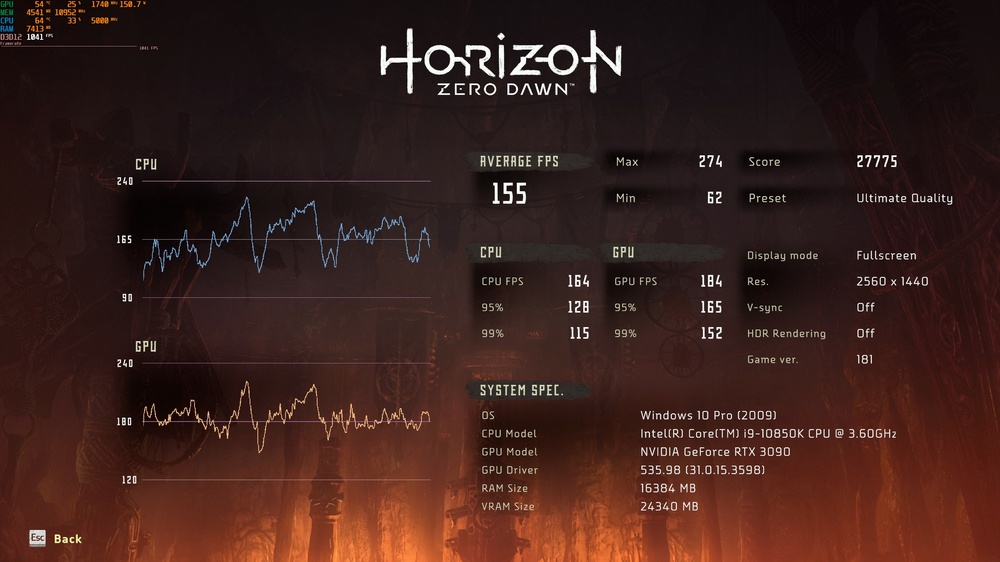

Horizon: Zero Dawn

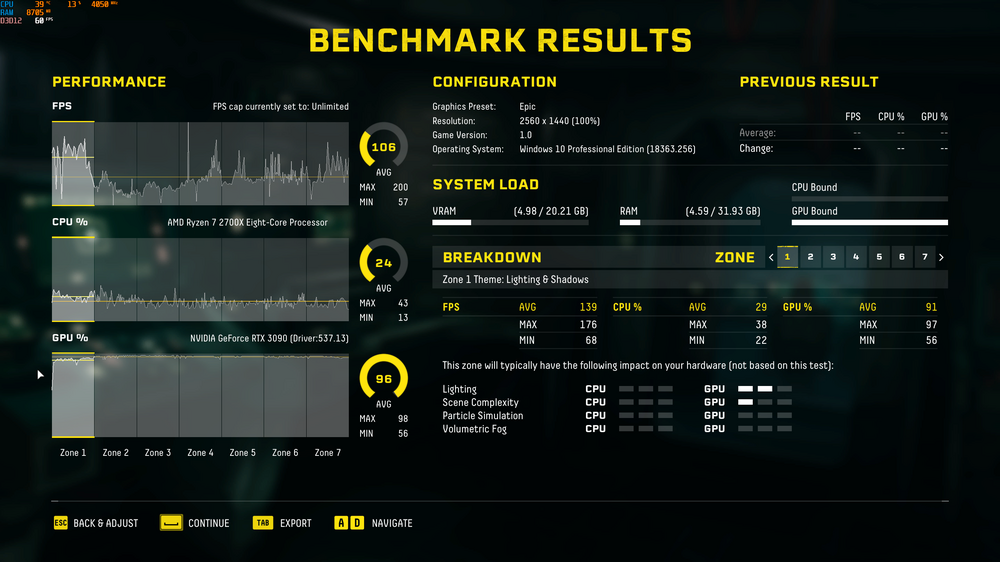

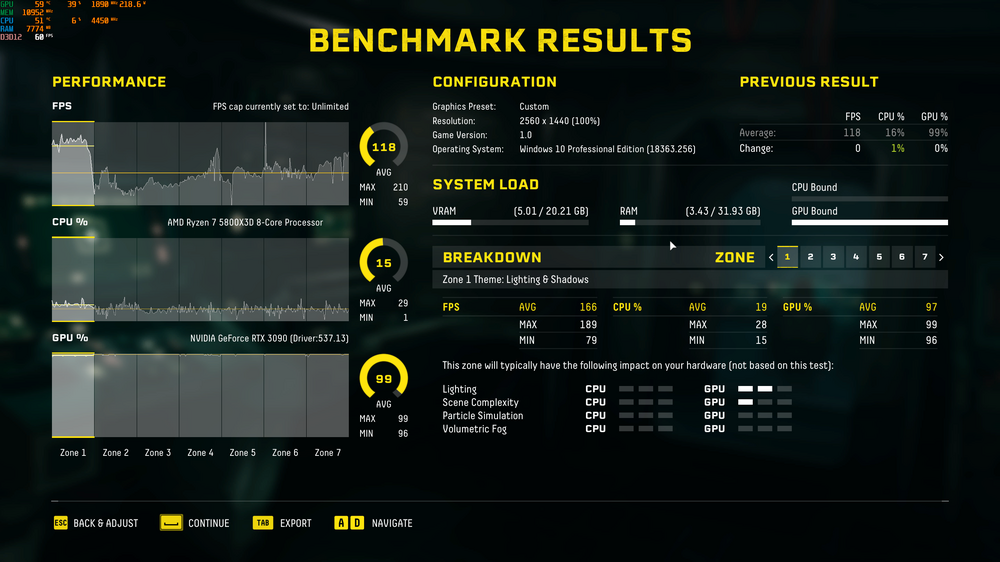

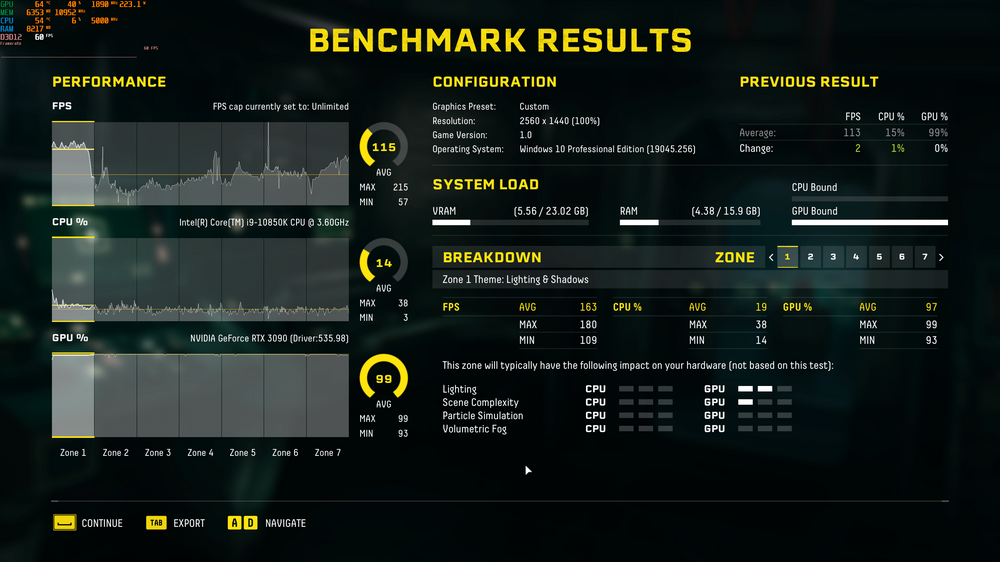

Returnal

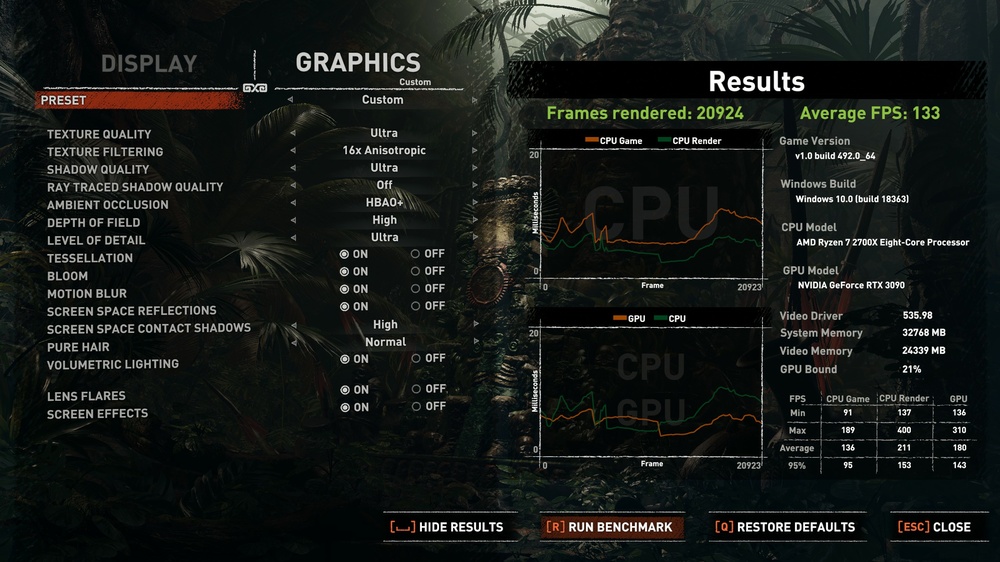

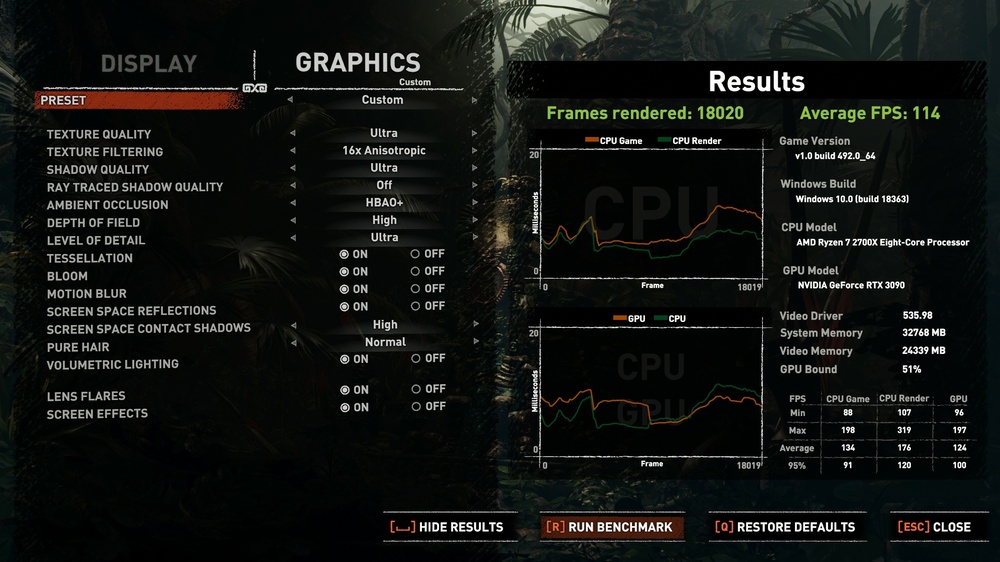

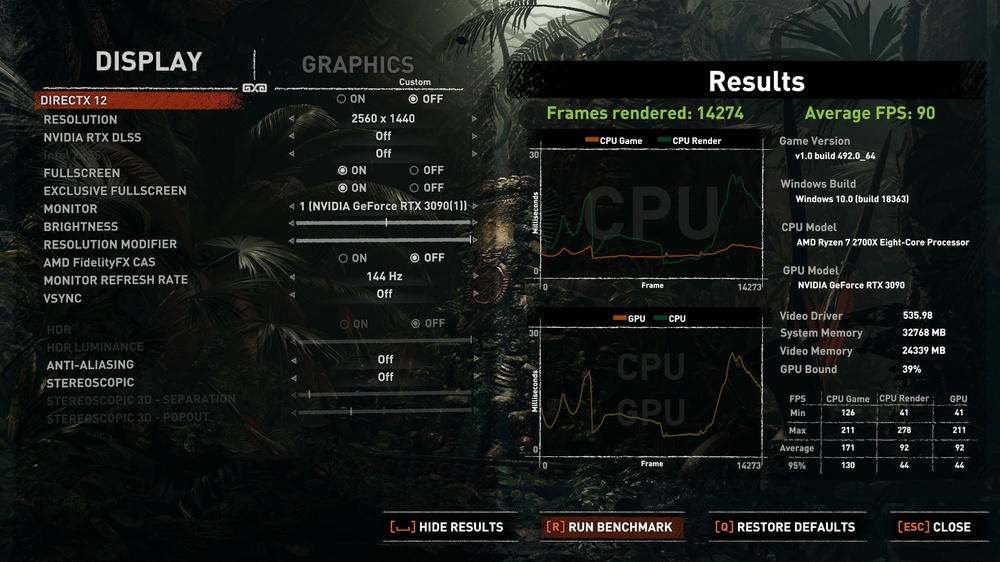

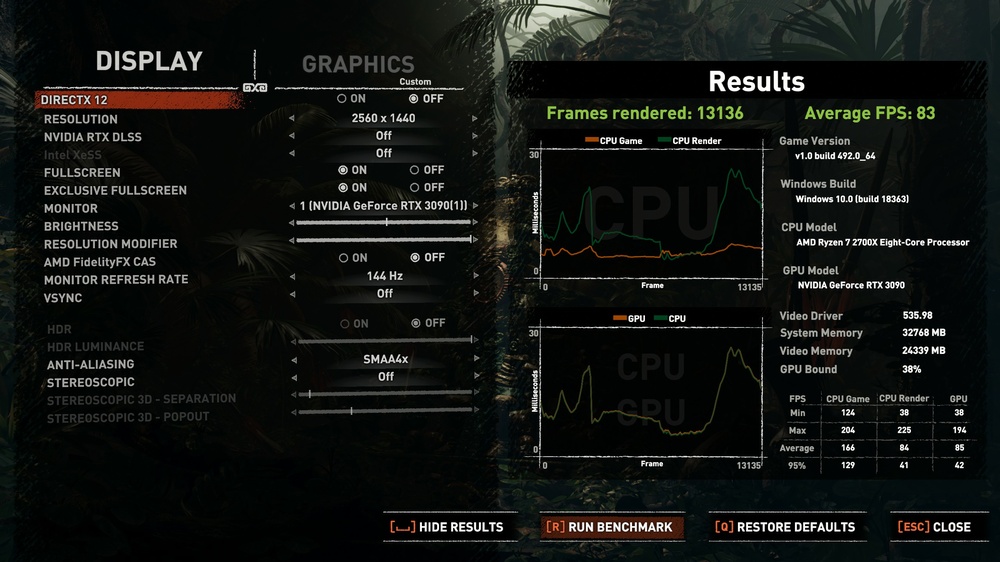

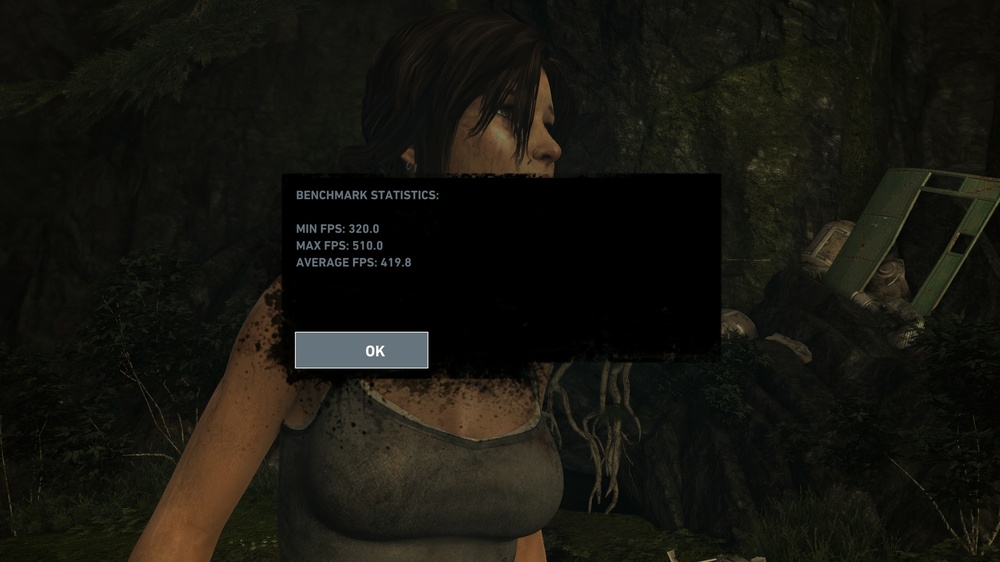

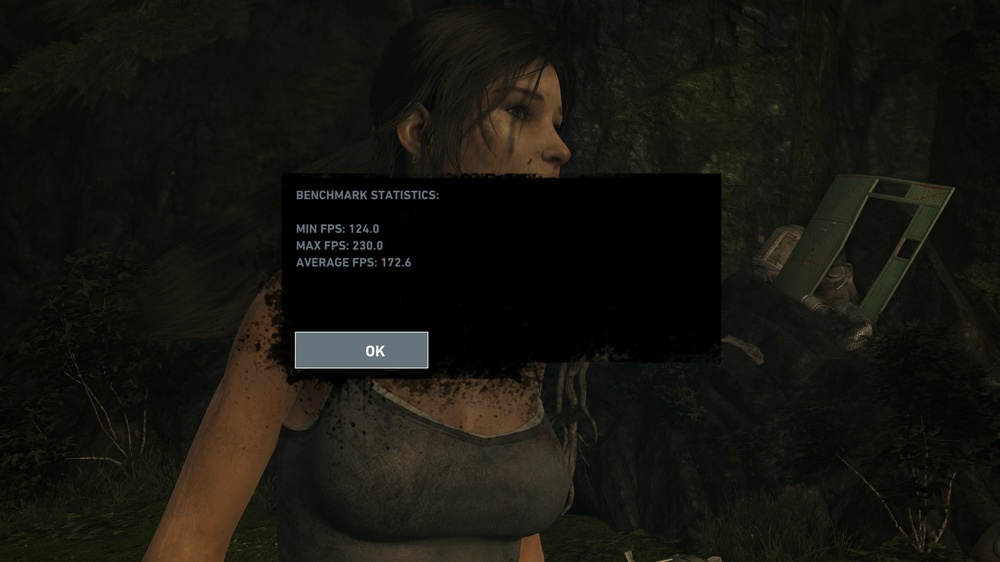

Rise of the Tomb Raider

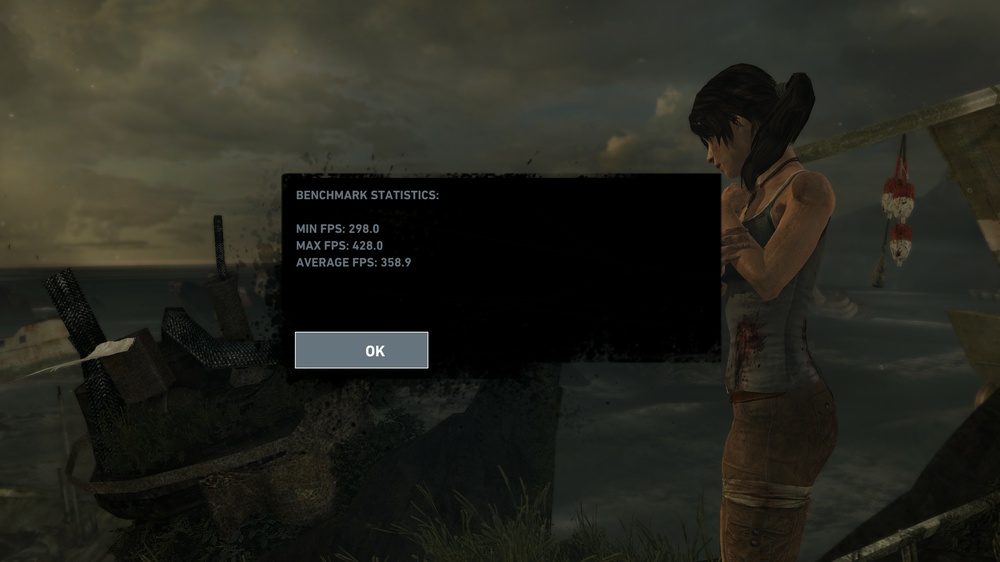

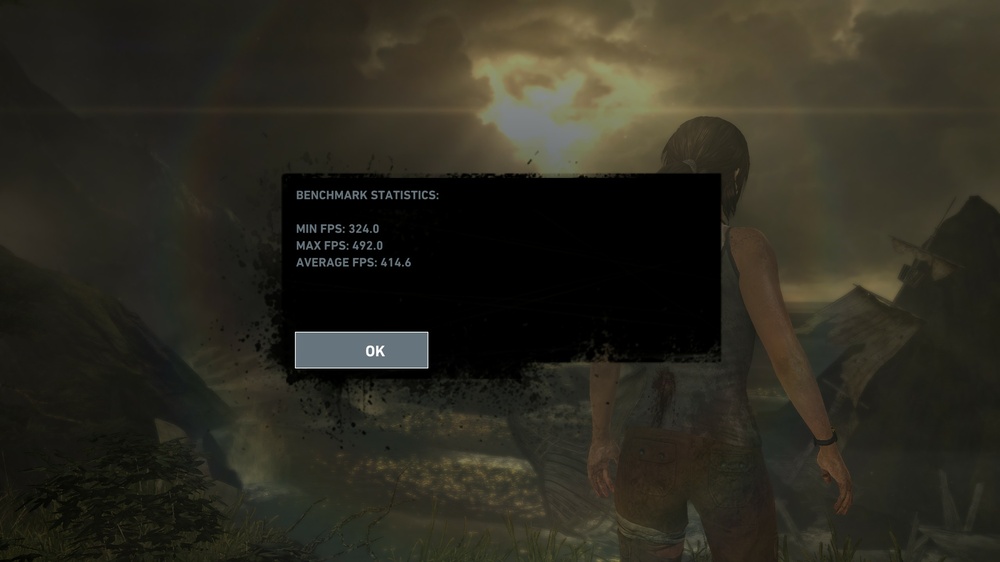

Shadow of the Tomb Raider

Shadow of the Tomb Raider - RT Enabled @ Ultra

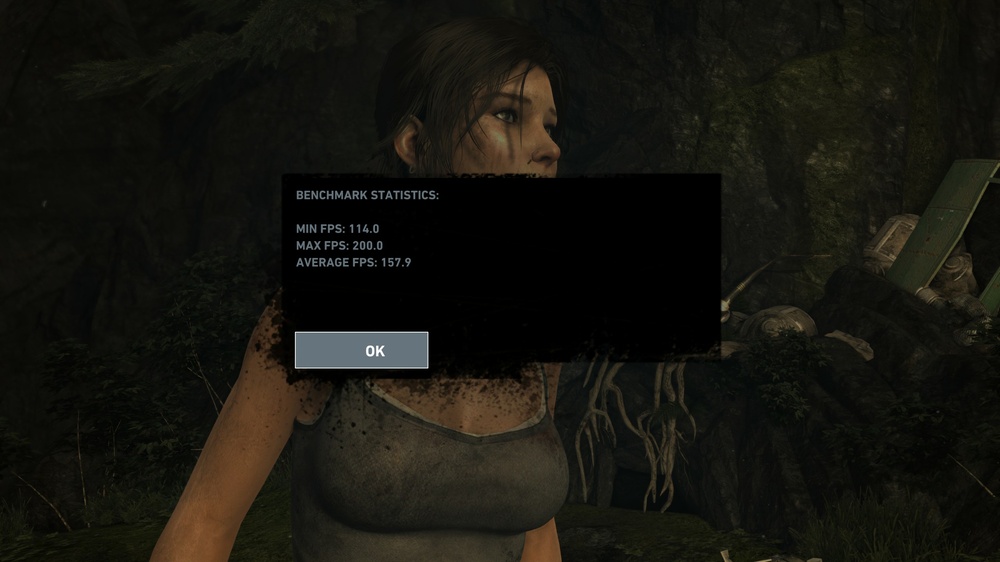

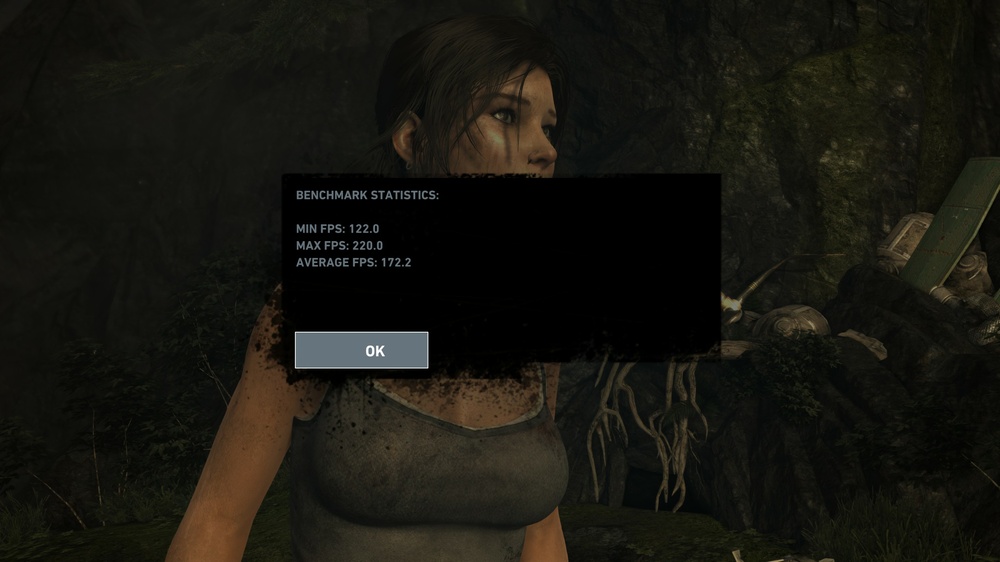

Tomb Raider

Shadow of War

-

Good Evening everyone!

So, to give some context for this upgrade I am going to have to rewind a bit. I had just finished doing another modification to the Alienware 17 R1. I had already lost my sound due to BCLK tampering, and now the screen started flickering when the GPU was screwed down ever so slightly too tight. Frustrated and tired of tearing down the system, I decided to make my way to MicroCenter.

It appears this was the perfect time to buy a new system, as AMD 3xxx had dropped so pricing on the 2700X was dirt cheap, and with the deals they have for buying a motherboard together with the CPU it worked out to about 160 USD for the 2700X. I did splurge a bit on the X570 ITX board, with the idea that I would eventually buy whatever the top-of-the-line chip was at the end of the line for AM4. I waited a bit, as the pricing really sucked in comparison to when I purchased the 2700X; recently the price dropped to 270. Hence, here we are. Oh, and the 10850K makes a cameo appearance as well!

Here are the specs for all systems involved.

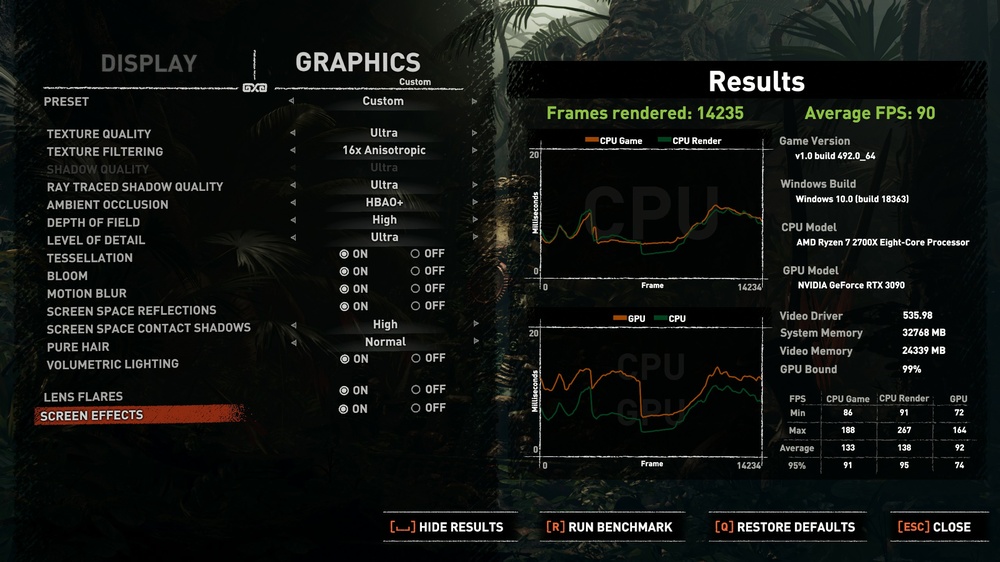

2700X @ 4.0GHz w/ PA 120 SE w/3x Nidec Servo 2150RPM

32GB DDR4 3200MHz (XMP)

RTX 3090 +100/+1200/@119PL

2560x1440 VSYNC OFF

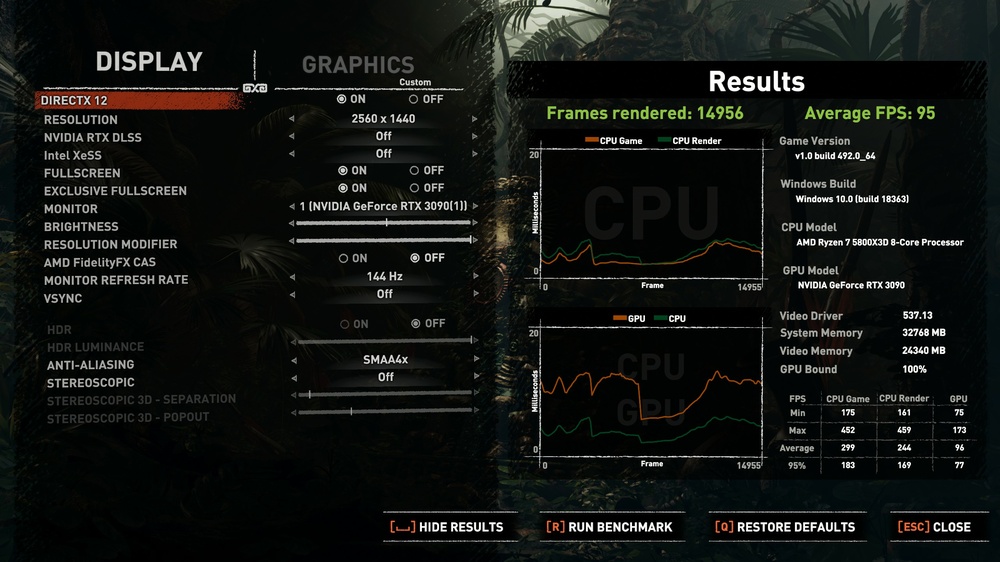

5800X3D @ 4.450GHz w/ PA 120 SE w/3x Nidec Servo 2150RPM

32GB DDR4 3200MHz (XMP)

RTX 3090 +100/+1200/@119PL

2560x1440 VSYNC OFF

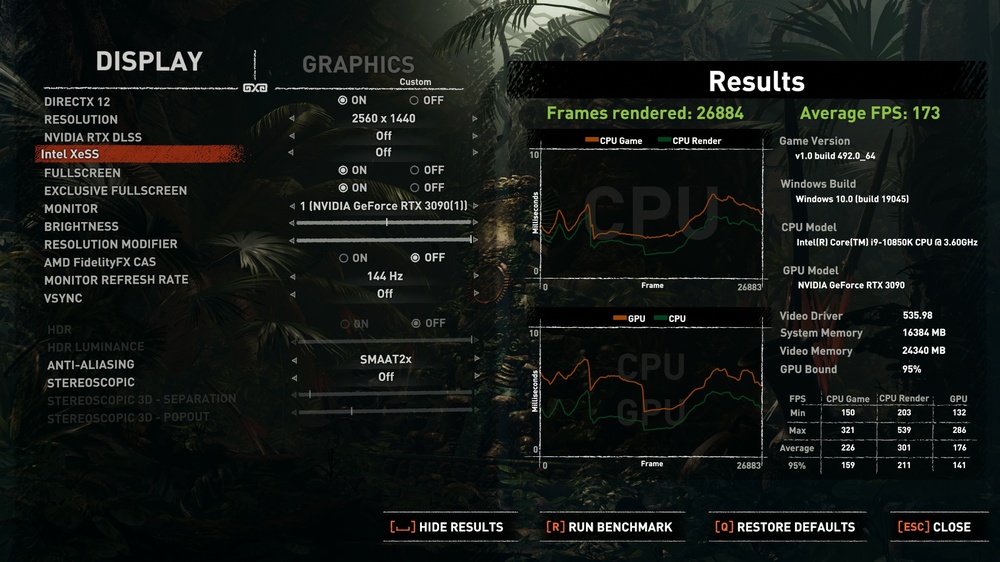

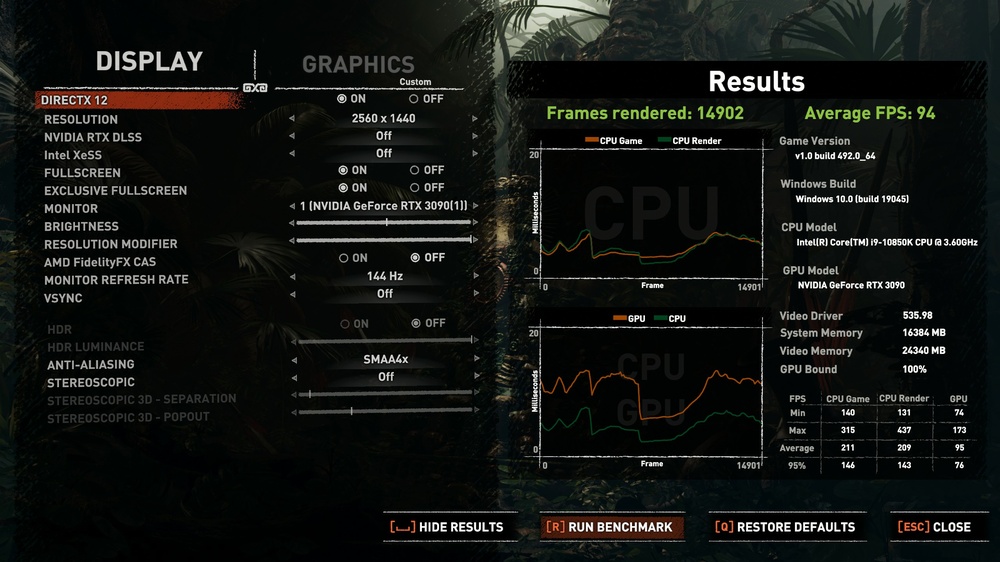

10850K @ 5.0GHz w/ NH-D15 w/Arctic 140mm P14

16GB DDR4 4400MHz

RTX 3090 +100/+1200/@119PL

2560x1440 VSYNC OFF

Now on to some benchmarks!

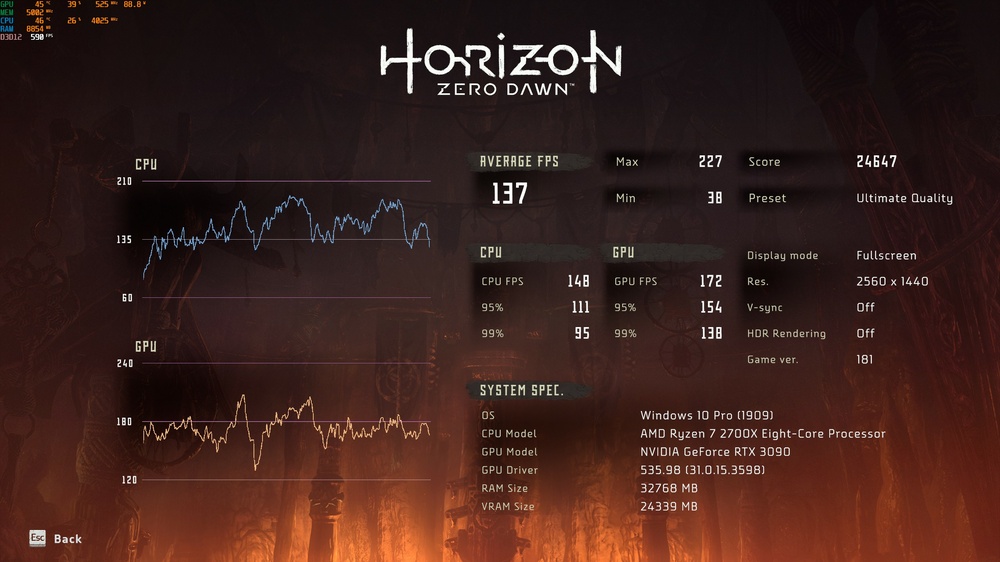

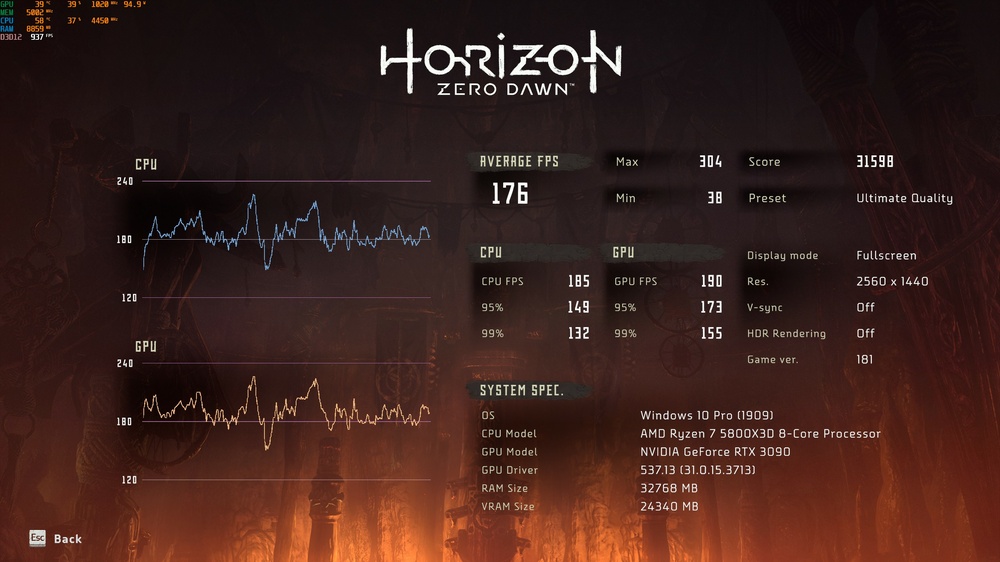

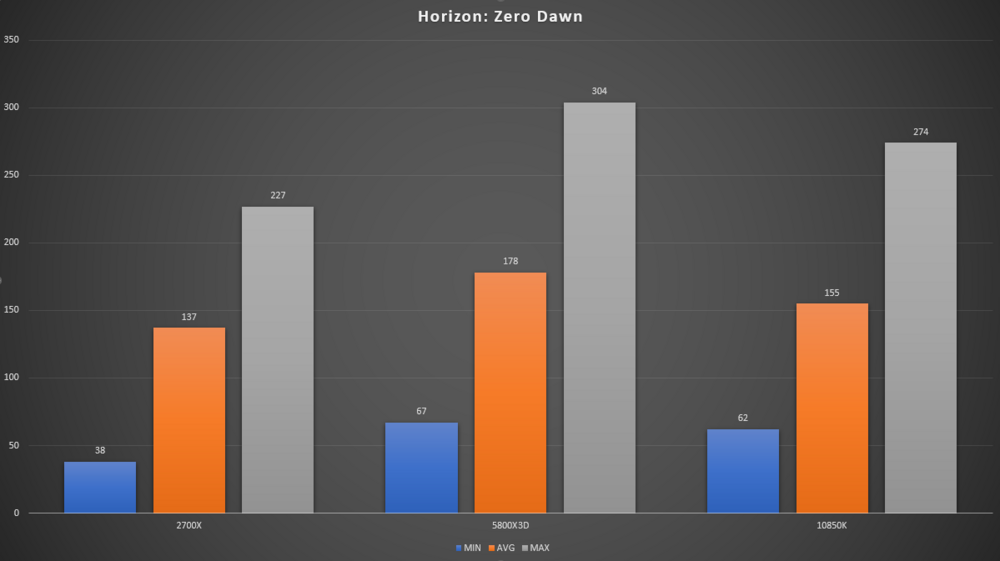

Horizon: Zero Dawn had a rough launch, but I enjoyed playing it regardless, as I resisted the temptation to buy a PS4 and was "rewarded" with a PC release. I think it's in better shape now, and I might replay it soon.

From the above data, the 2700X does "just fine" in Horizon: Zero Dawn. However, the benchmark does show that it drops to 38 FPS, which is pretty noticeable on a 144Hz panel. It doesn't happen often, but once a pattern is noted, it's hard not to notice it. The 5800X3D gets a healthy bump across the min/avg/max readings and also surpasses the tuned 10850K. It's not enough to justify switching platforms, but it is noteworthy.
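To put the 38 FPS dip in perspective against a 144Hz panel, here is a rough Python sketch of the frame-time arithmetic. The helper names are made up for illustration, and it only uses the two numbers mentioned above (38 FPS and 144Hz).

```python
def frame_time_ms(rate: float) -> float:
    """Milliseconds per frame (or per refresh) at the given rate."""
    return 1000.0 / rate


def refreshes_per_frame(fps: float, refresh_hz: float) -> float:
    """How many panel refresh intervals a single rendered frame spans."""
    return refresh_hz / fps


low_fps = 38    # minimum reported by the benchmark on the 2700X
panel_hz = 144  # panel refresh rate mentioned in the post

print(f"{frame_time_ms(low_fps):.1f} ms per frame at {low_fps} FPS")
print(f"{frame_time_ms(panel_hz):.1f} ms per refresh at {panel_hz}Hz")
print(f"one frame spans about {refreshes_per_frame(low_fps, panel_hz):.1f} refreshes")
```

At the low point a frame hangs around for roughly 26 ms while the panel refreshes about every 7 ms, so the same frame is shown for nearly four refreshes in a row, which is why the dip stands out.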

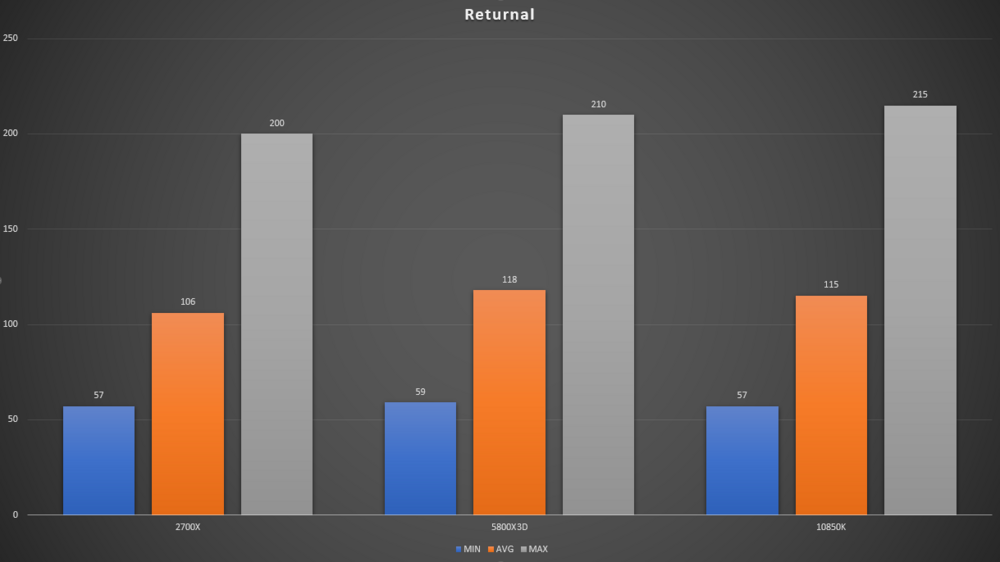

Returnal - This was a new title in my rotation, and I ended up enjoying it more than I suspected I would. A challenging game that punishes you for failing and rewards you for not failing. There is no succeeding in this game.

This title doesn't seem to benefit much from either the 5800X3D or the 10850K. There are definite gains, but not enough to justify jumping to either platform. It appears to be a mostly GPU-bound title.

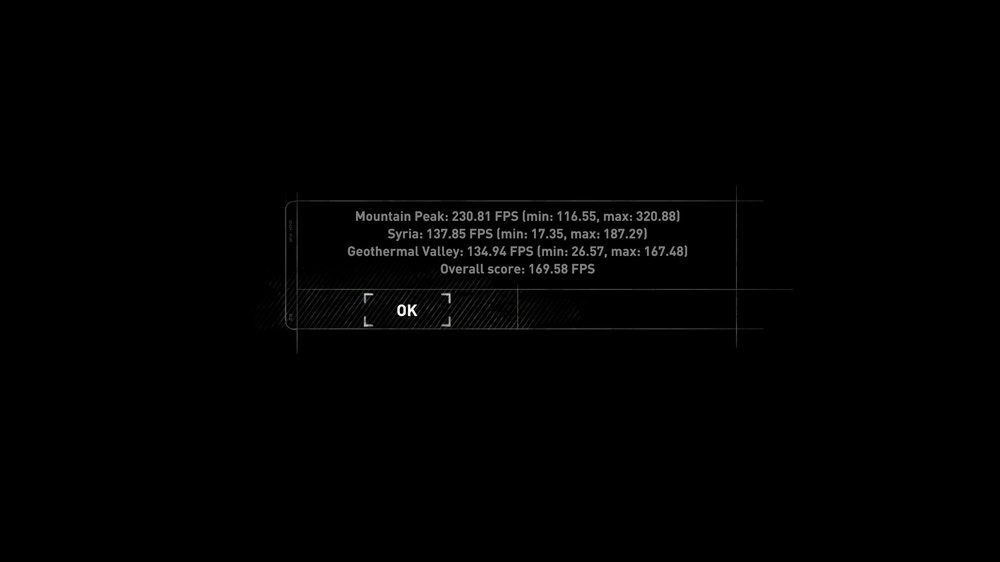

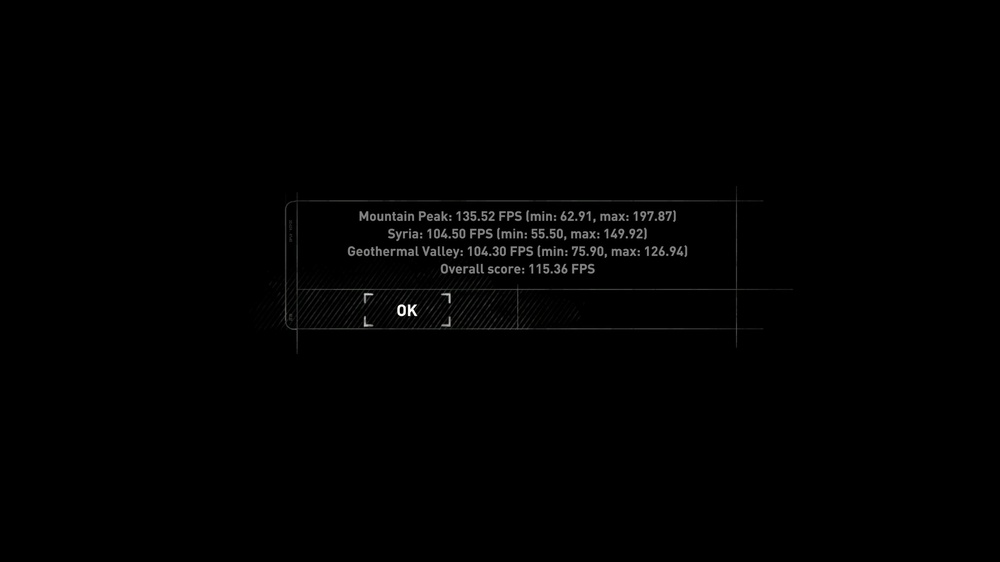

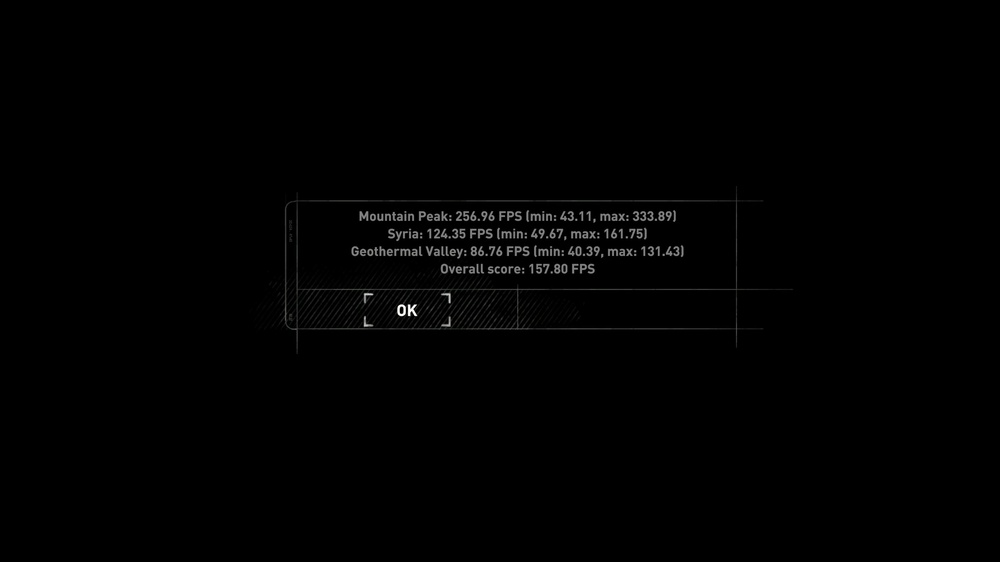

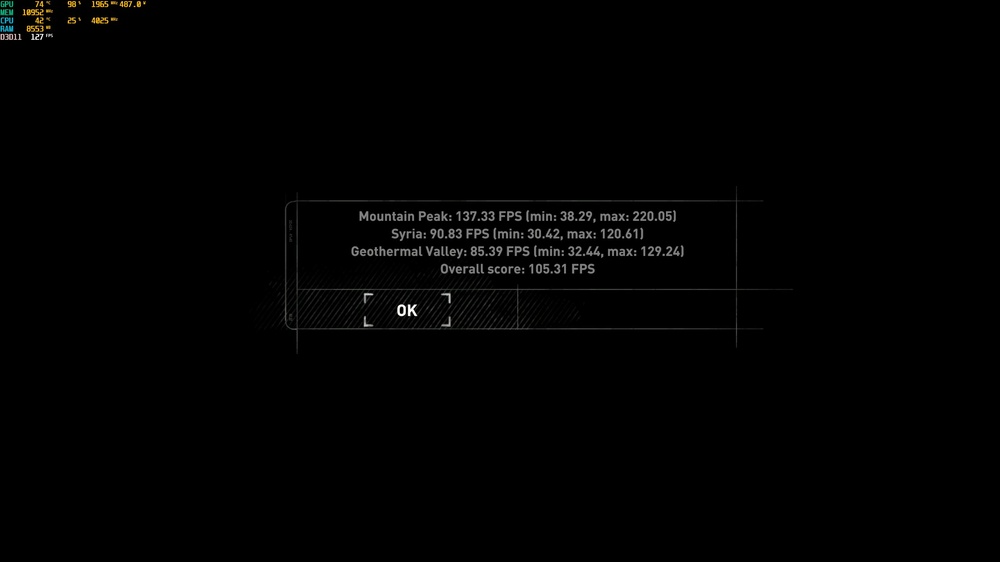

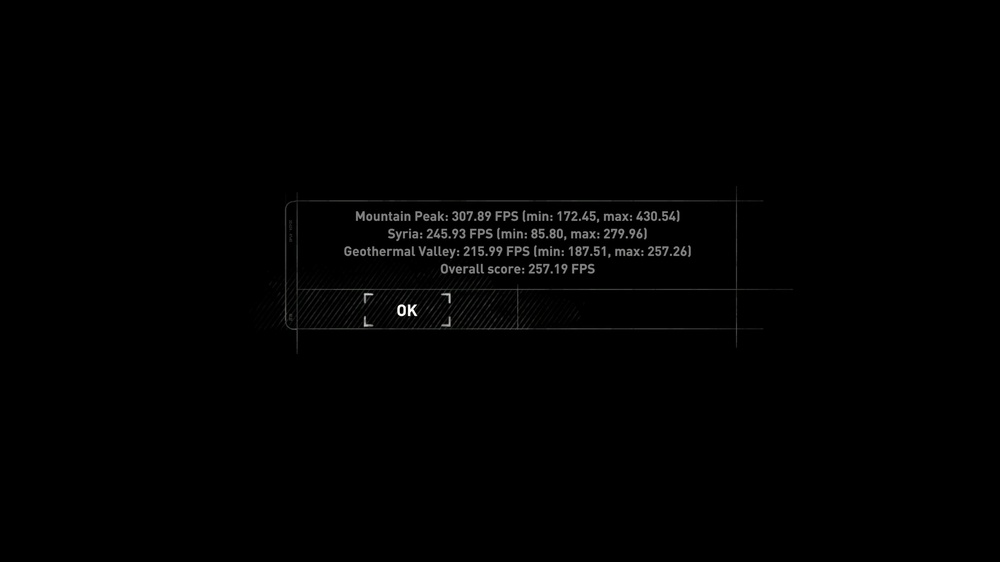

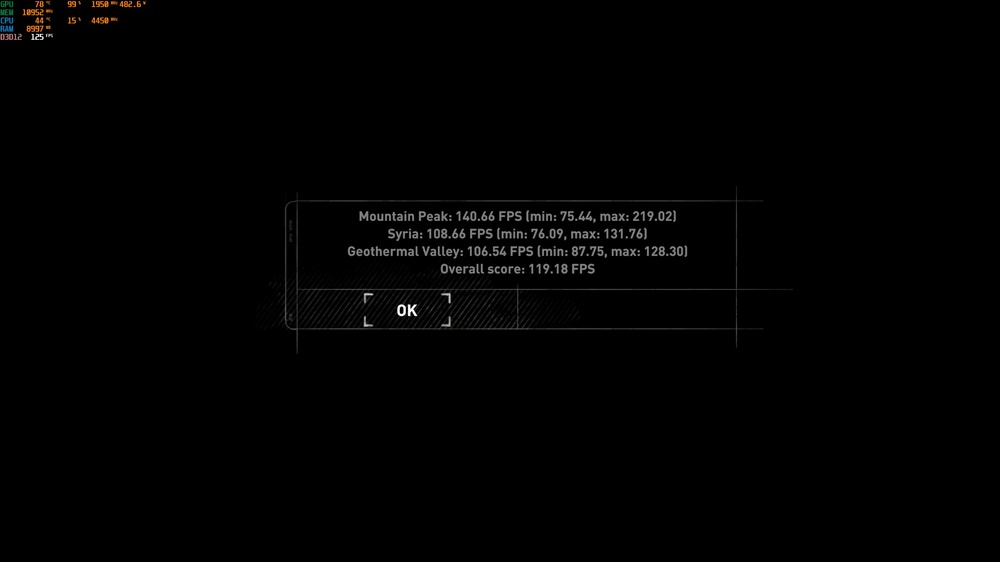

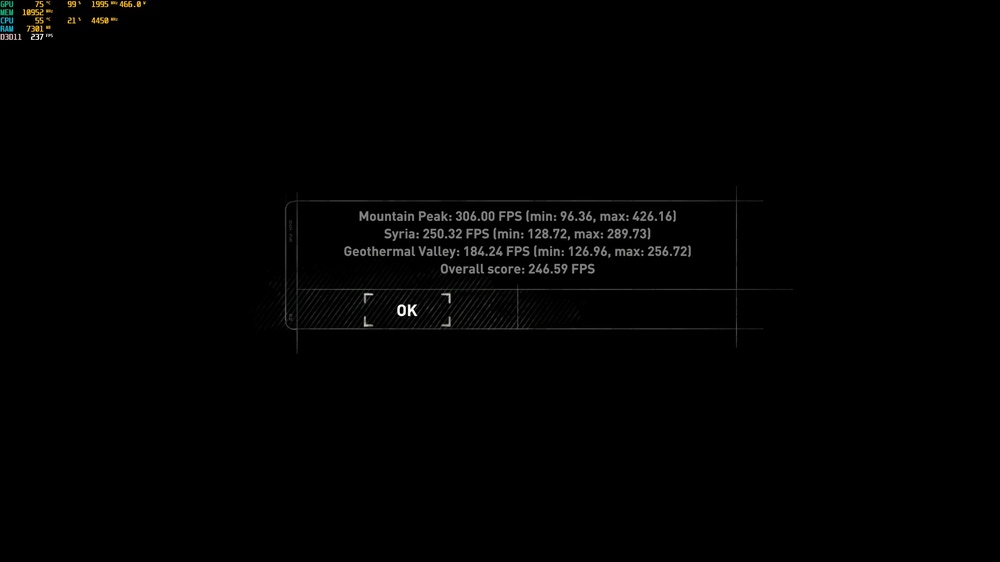

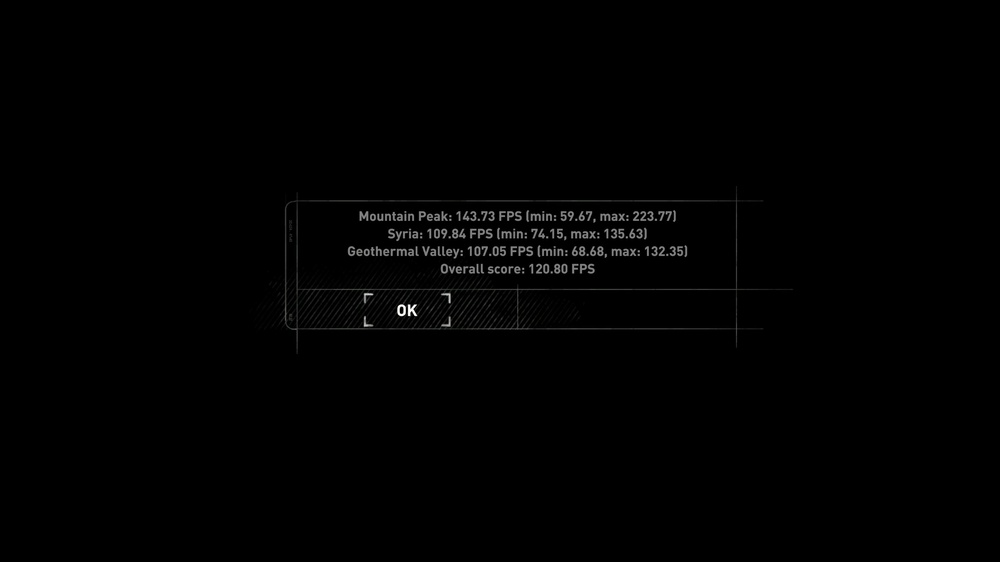

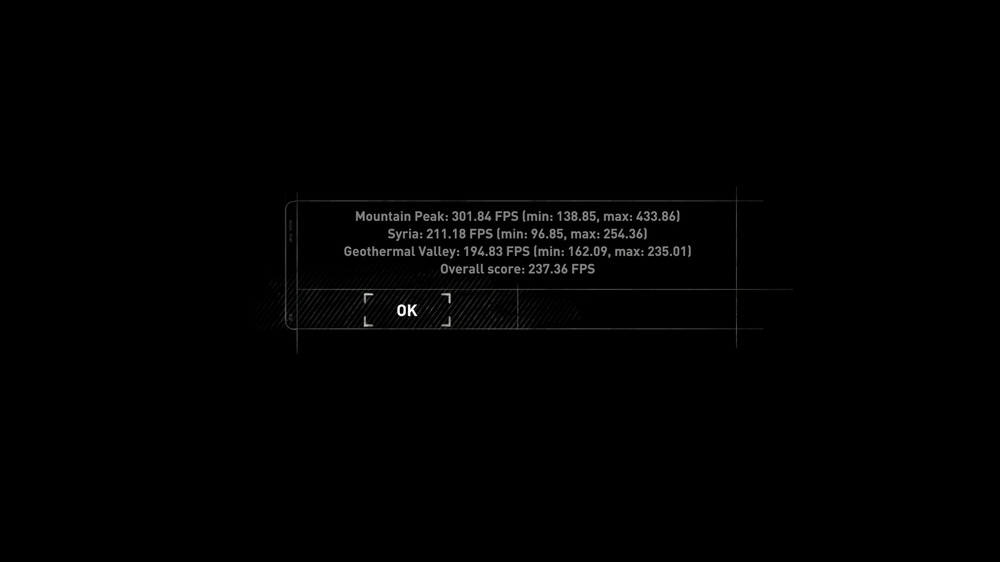

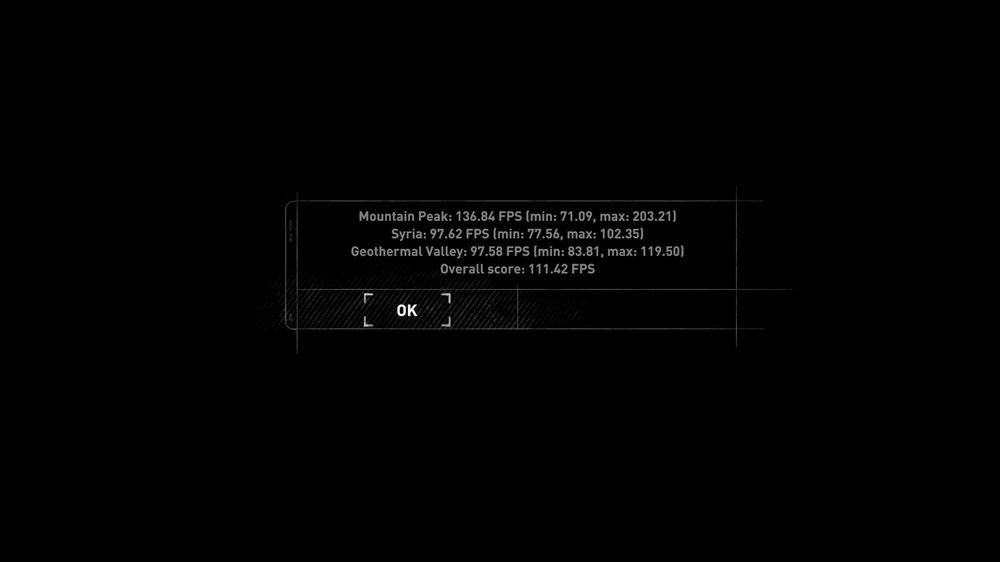

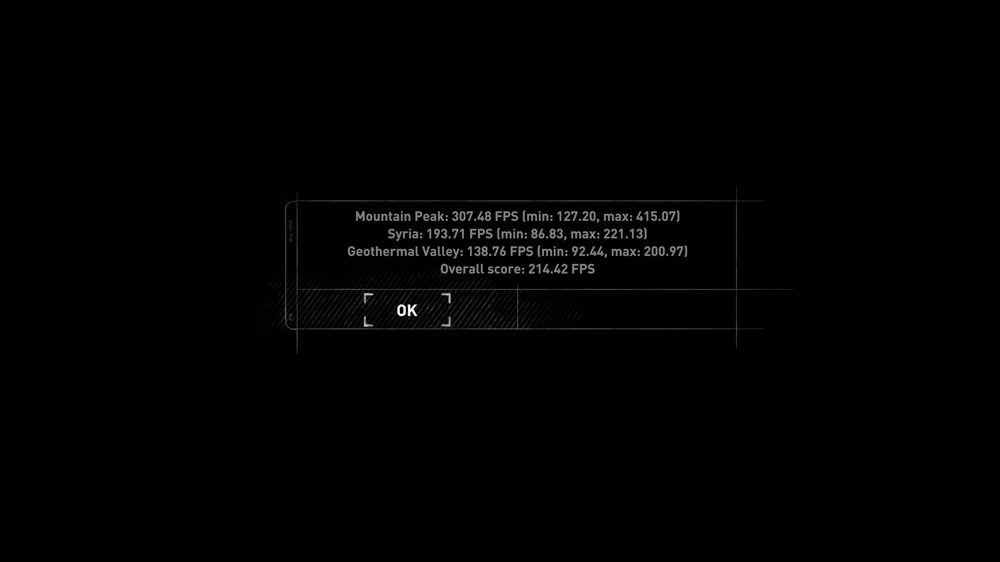

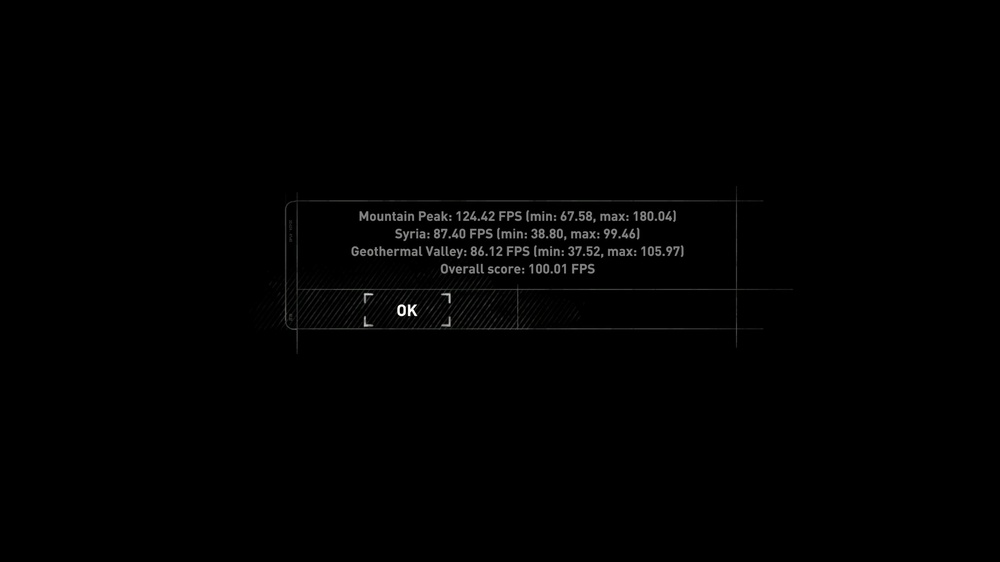

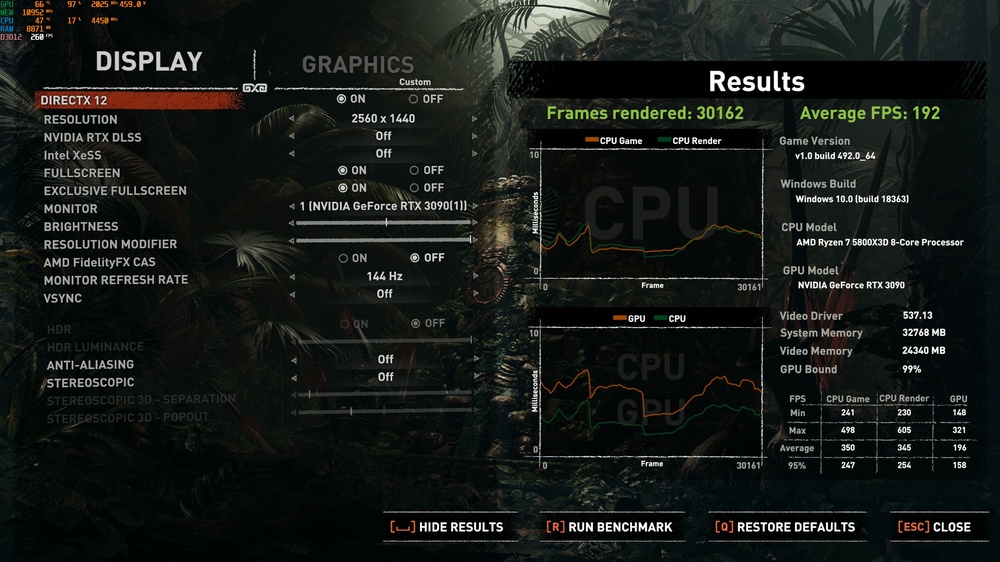

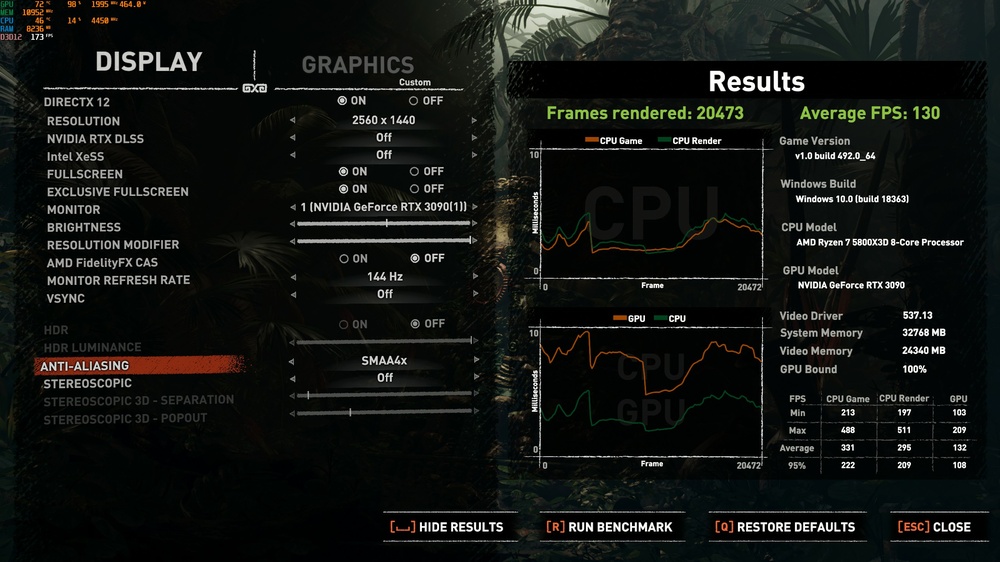

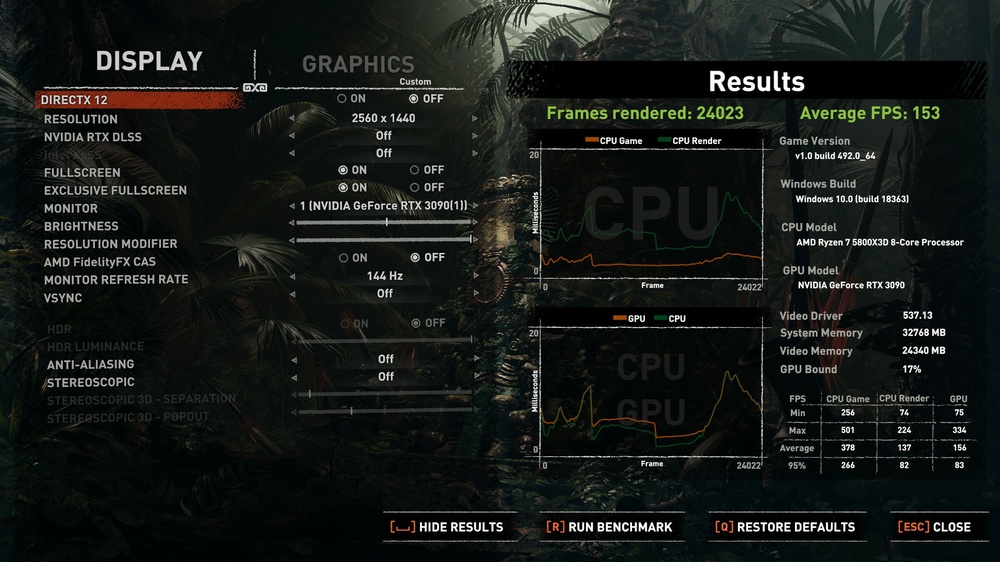

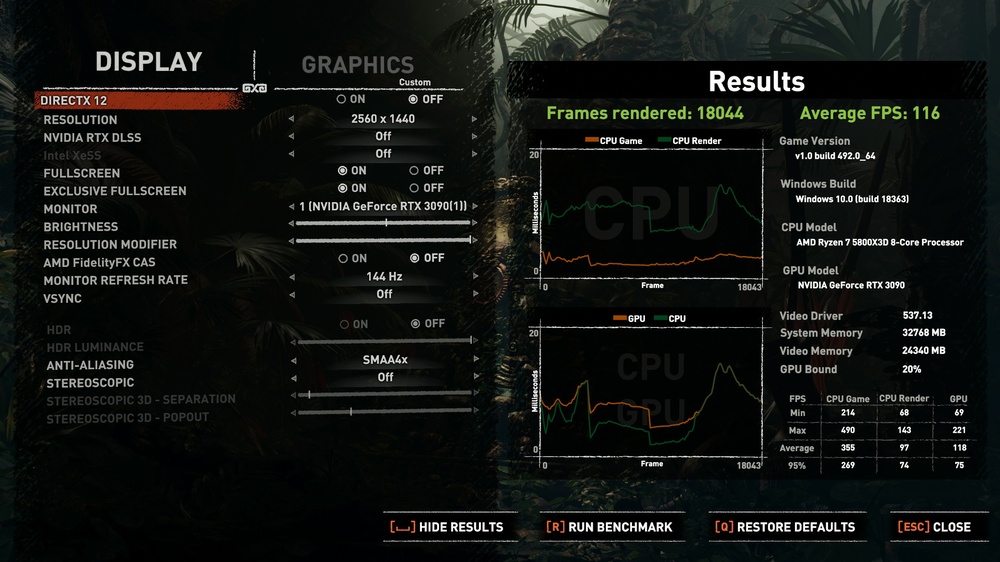

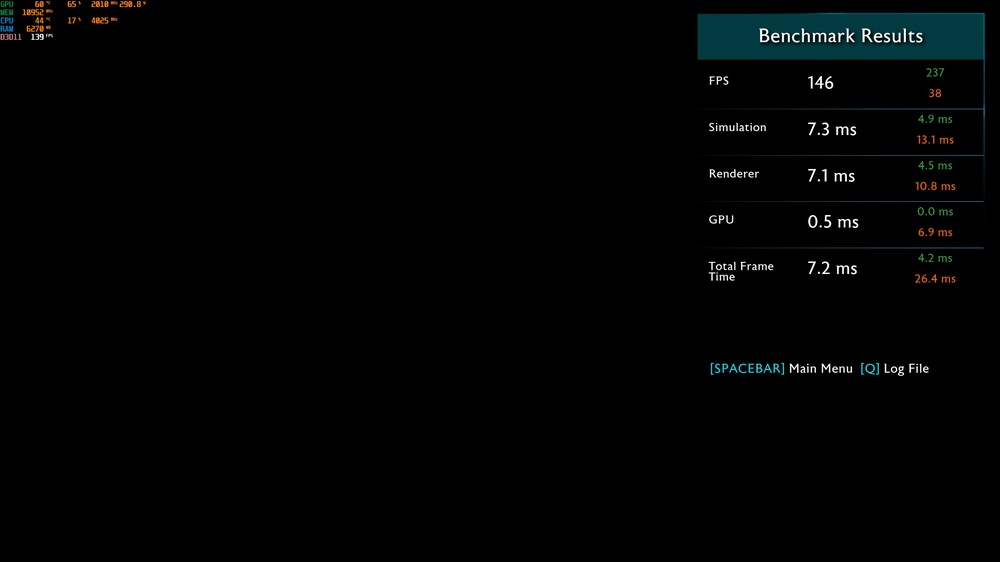

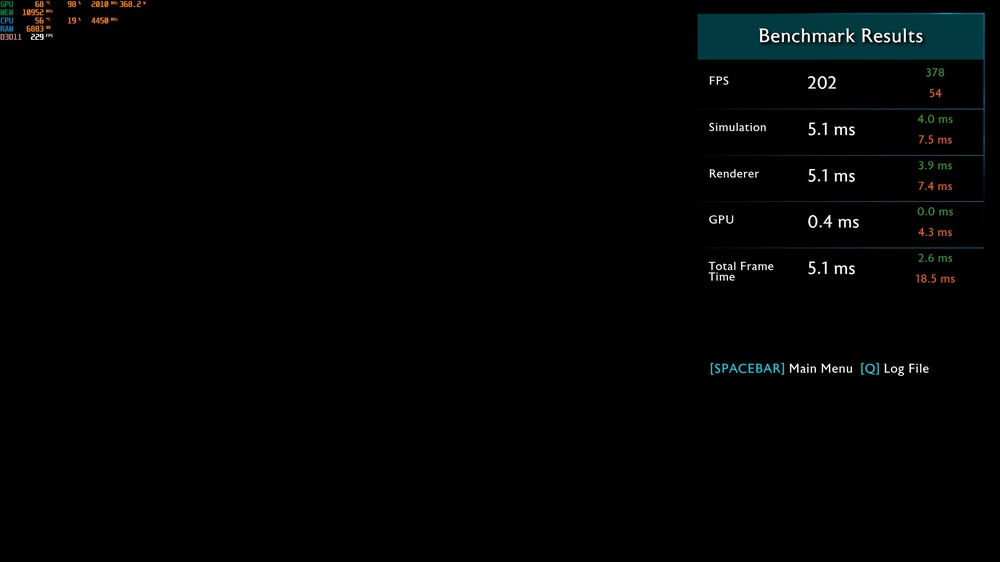

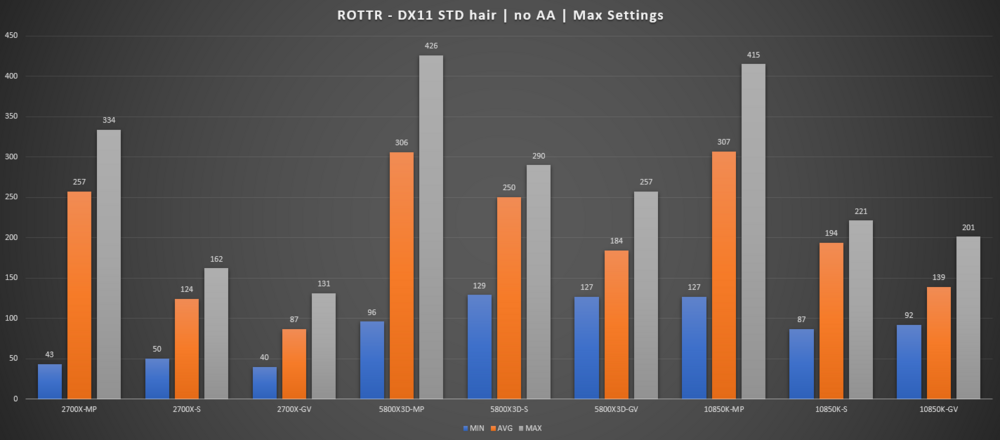

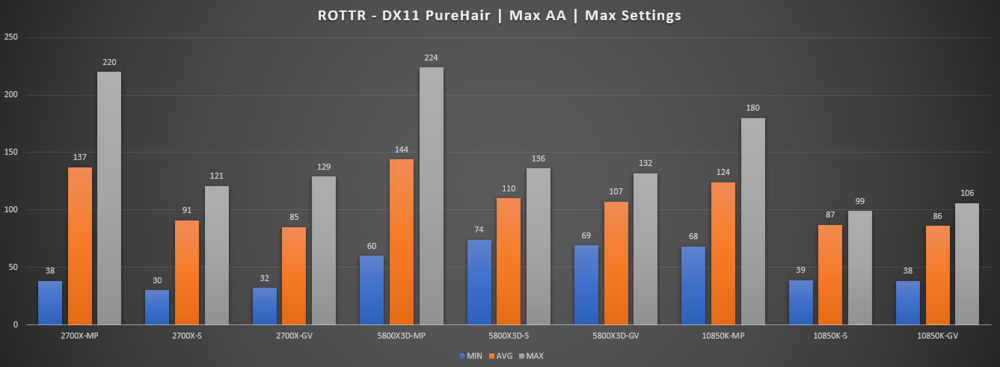

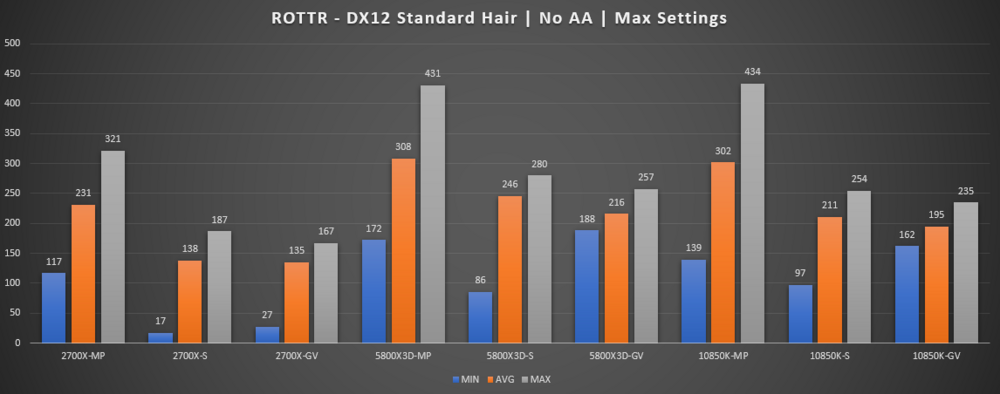

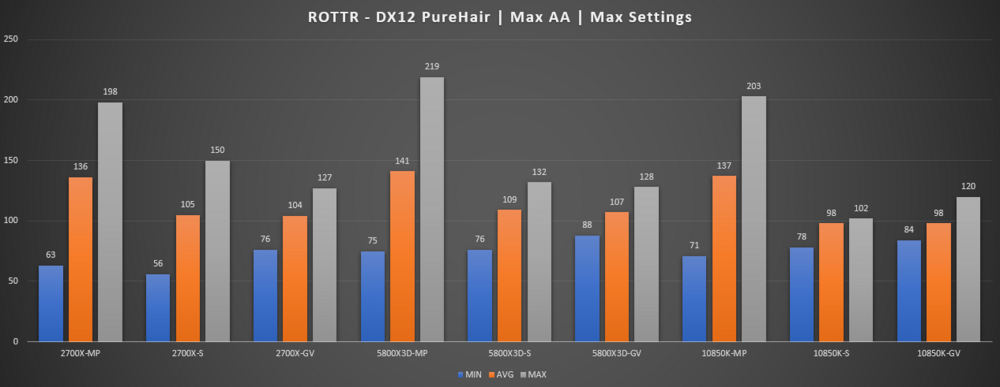

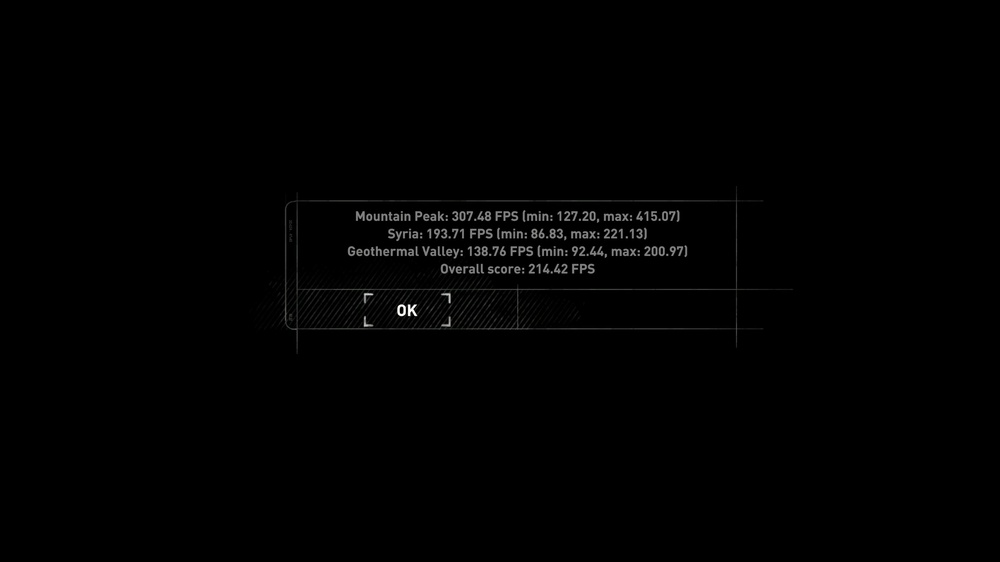

Rise of the Tomb Raider - I've enjoyed the reboot TR games. For some reason I stopped playing Shadow of the Tomb Raider, but I might pick it up again. I didn't know beforehand, but it appears that Tomb Raider is one of the titles where L3 cache plays a larger role. As a result I took more time with this title. The next 4 graphs will be ROTTR at the various settings noted in each graph.

ROTTR goes through 3 areas to capture FPS data. I abbreviated each run on the charts to simplify how it's presented. STD is shorthand for Standard, and AA is shorthand for Anti-Aliasing.

-MP = Mountain Peak

-S = Syria

-GV = Geothermal Valley

and continuing...

Not sure why the 2700X seemed to be just taking a dirt nap under DX12 with lighter settings. The lows were abysmal; perhaps it has to do with the run still loading. That being said, when you crank up the settings across the board a pattern more or less emerges. The demands on the hardware increase with each run, save for the last graph.

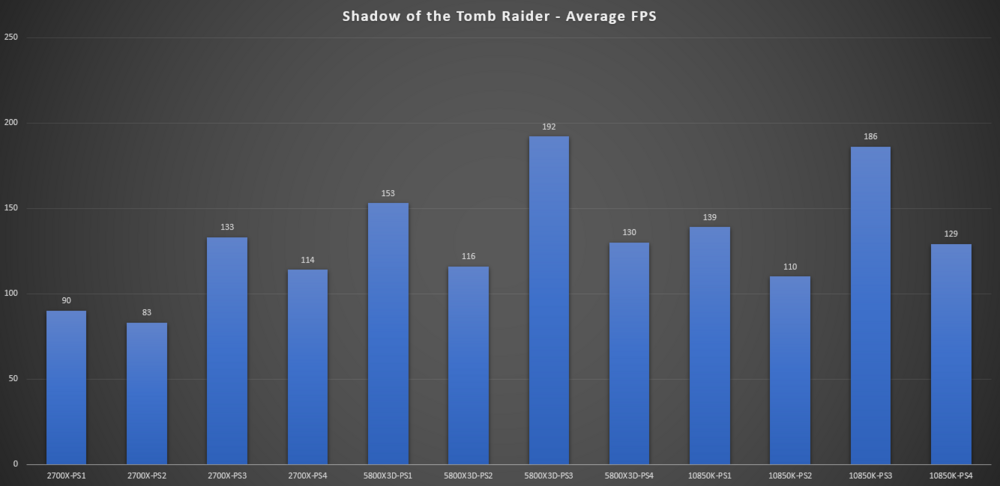

Shadow of the Tomb Raider - Average FPS

I'm not quite sure how to present all the data from the SOTTR benchmarks, so I just went with the averages. I still have the screenshots, so if people let me know I can edit and re-submit them. Also, I followed the same presets as before, in this order.

PS1 - DX11 | Max Settings | No AA | Low hair

PS2 - DX11 | Max Settings | Max AA | Max hair

PS3 - DX12 | Max Settings | No AA | Low hair

PS4 - DX12 | Max Settings | Max AA | Max hair

Also, I didn't grab the full sweep of data for the 10850K. I'll add that in later if people want. EDIT: Went back and re-ran the benchmarks to finish this chart. Citations will be provided shortly.
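For anyone wanting to collapse repeated runs into one average per CPU and preset before charting, a minimal Python sketch could look like the one below. The FPS values are made-up placeholders, not results from this thread, and the function name is just for illustration.

```python
from collections import defaultdict
from statistics import mean

# (cpu, preset, average FPS of one run).
# NOTE: these FPS values are placeholders, NOT measured results.
runs = [
    ("2700X", "PS1", 100.0),
    ("2700X", "PS1", 104.0),
    ("5800X3D", "PS1", 150.0),
    ("5800X3D", "PS1", 154.0),
]


def average_by_cpu_and_preset(rows):
    """Group runs by (cpu, preset) and return the mean FPS of each group."""
    grouped = defaultdict(list)
    for cpu, preset, fps in rows:
        grouped[(cpu, preset)].append(fps)
    return {key: mean(values) for key, values in grouped.items()}


summary = average_by_cpu_and_preset(runs)
for (cpu, preset), fps in sorted(summary.items()):
    print(f"{cpu:8} {preset}: {fps:.1f} FPS")
```

Each (CPU, preset) pair ends up as one number, which maps directly onto one bar in a chart like the one above.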

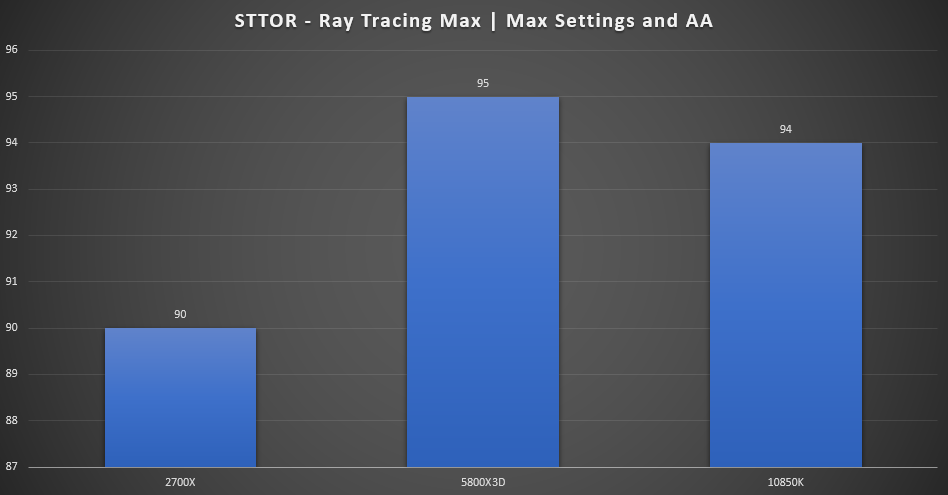

Shadow of the Tomb Raider - Ray Tracing Enabled

Don't let this bar chart give an incorrect impression of RT performance; the FPS range between all systems is 5 FPS. I wanted to see if there would be much impact on performance between the CPUs with RT on. There is a difference, but within this range it would be hard to make an argument for any of the processors.

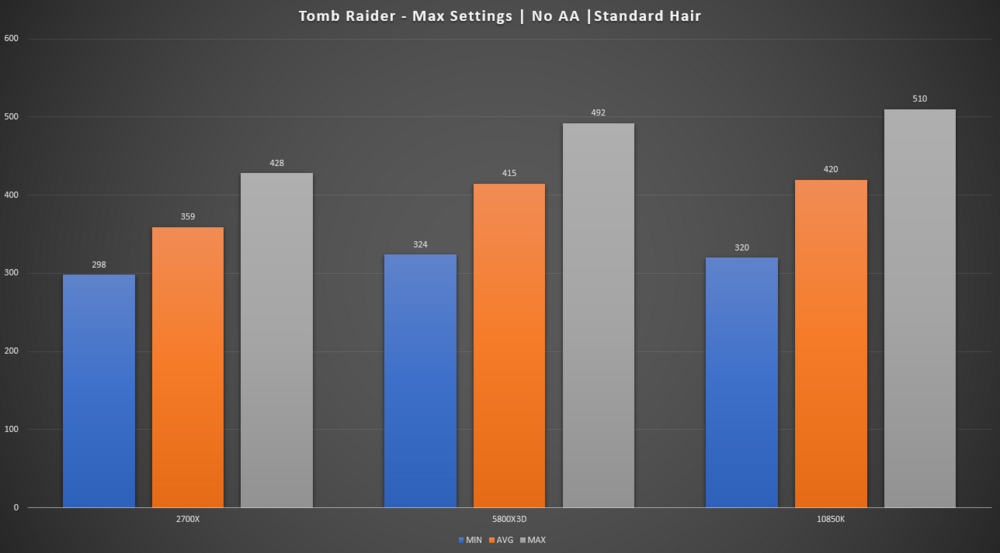

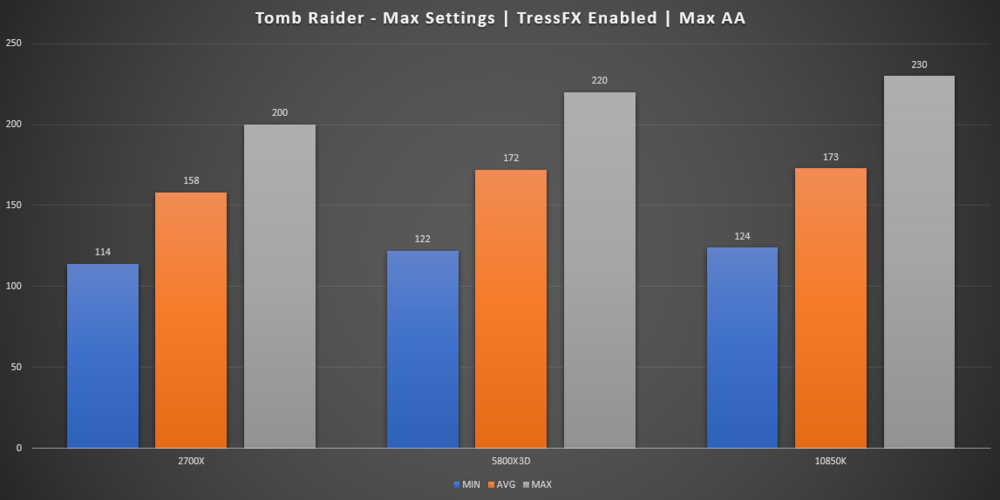

Tomb Raider

Tomb Raider (2013) is quite dated by today's standards. That being said, I like to play it from time to time. One thing I usually take from this game is why I don't often care for new graphics features: above you can see the performance penalty of adding TressFX and AA. No setting should cost you 200 FPS. Performance is in line with what you would expect; however, this title seems to favor the brute-force method of the 10850K over the 5800X3D, albeit not by much.

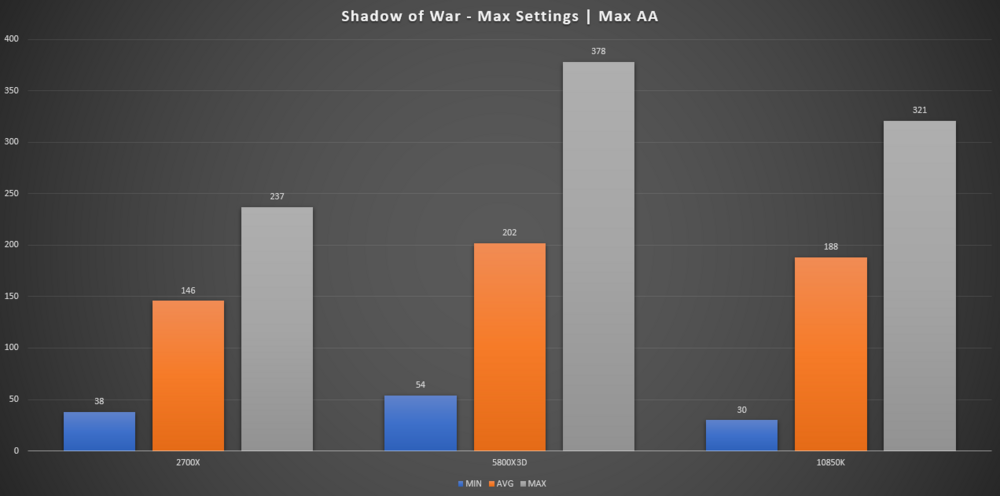

Shadow of War is one of those titles that somehow punched above its weight class. While some elements of the story were just ridiculous, the gameplay was quite enjoyable. This title does seem to favor the 5800X3D, particularly in minimum FPS. Perhaps the bad minimums for the 2700X and 10850K are a result of the start of the benchmark, as for the short while that I did play I didn't see choppy FPS. It is what it is though.

The 5800X3D is an overall improvement over the 2700X, but if you are using the 10850K with some decent RAM, there really isn't any justifiable reason to switch platforms. If you are sitting on an older AM4 motherboard with standard RAM, the 5800X3D is very tempting in comparison to the 2700X.

I'm getting tired, I'll add more as I gather it together.

-

23 minutes ago, Mr. Fox said:

Did you try bumping up the memory voltage? XMP profiles are often not stable because their default voltage is too low and if you tighten some of the timings things will come unraveled unless you increase the voltage. I would start at 1.500V and see if you can dial in settings you want. Once you do, slowly start decreasing the voltage until it begins acting up, then go back up about 0.020-0.025V.

I did quite a bit of reading last night and this morning. It looks like there are multiple factors in play for memory overclocking on AM4.

First, the kit itself. People and reviewers failed to hit even 3400MHz on this kit. Even the timings barely move without hitting instability. I'm not too bent out of shape over it; I was just seeing what I could get from them, but for a daily driver system 32GB was more what I wanted. Everything else would've just been a bonus.

Second, AM4 motherboards seem to have issues when applying XMP as well, chiefly where the SoC voltage ramps up for reasons yet to be discerned. You have to go back and set the voltage manually. Once this is done, memory OC generally becomes viable. However, I then run into the issues above.

It's fine. It's a bummer, sure, but I will keep the 4400MHz kit in the 10850K system; benching doesn't have much need for more than 16GB, whereas a daily driver system will feel that limitation.

I am gathering the data into an Excel spreadsheet so I can make some bar charts. I had to re-run some benchmarks since I made some clerical errors. Hopefully tomorrow I can have this posted and move on to working on my professional development.
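For reference, the voltage procedure quoted at the top of this post (start at 1.500V, step down until it acts up, then go back up about 0.020-0.025V) can be written out as a small Python sketch. This is only an illustration of the manual process: `is_stable` stands in for whatever stress test you run by hand, and the 5 mV step and 1.350V floor are assumptions, not values from the quote.

```python
def find_daily_voltage(is_stable, start_v=1.500, step_v=0.005,
                       floor_v=1.350, margin_v=0.020):
    """Step down from start_v until is_stable fails, then add margin_v back.

    is_stable is a hypothetical callback (voltage in volts -> bool).
    step_v and floor_v are assumed values for illustration only.
    """
    def to_mv(volts):
        # Work in whole millivolts to avoid floating point drift.
        return round(volts * 1000)

    step_mv, floor_mv, margin_mv = to_mv(step_v), to_mv(floor_v), to_mv(margin_v)
    mv = to_mv(start_v)
    if not is_stable(mv / 1000):
        return None  # even the starting voltage is unstable
    while mv - step_mv >= floor_mv:
        mv -= step_mv
        if not is_stable(mv / 1000):
            # First voltage that acts up: go back up by the margin.
            return (mv + margin_mv) / 1000
    return mv / 1000  # never misbehaved above the floor


# Simulated kit that needs a bit under 1.420V (made-up threshold).
print(find_daily_voltage(lambda volts: volts >= 1.4175))
```

With the simulated threshold the walk-down first fails at 1.415V, so the sketch settles on 1.435V. On real hardware each `is_stable` call is a reboot plus a stability test, so this is a checklist more than something to automate.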

-

3 hours ago, electrosoft said:

I believe the term you are looking for is "Performance Enthusast." 🤣

Having played some more of it, I am now referring to it as Starfield 76 aka Fallout in Space aka Starfall aka StarOut aka FallField.....as it looks feels and plays just like Fallout 4 and 76.

I'm waiting to see if any of the frame render or loot/NPC bugs rear their ugly heads.

Tempering your expectations, the vast majority of GPUs down to even a 3060 are enough to actually play. We're just a group of jaded hardware enthusiasts.....

As for Nvidia, they are perpetually in cash grab mode 24/7/365 😞

I'm so happy you rectified the situation Papu! How many pairs of Crocs did you have to trade in for that wonderful upgrade in quality of life? 😁

Looks like 4.5GHz might just not be in the cards, can't even POST at 101.00 BCLK.

Also looks like the RAM kit I bought for this system forever ago is a dud. It does XMP, but that's about it.

-

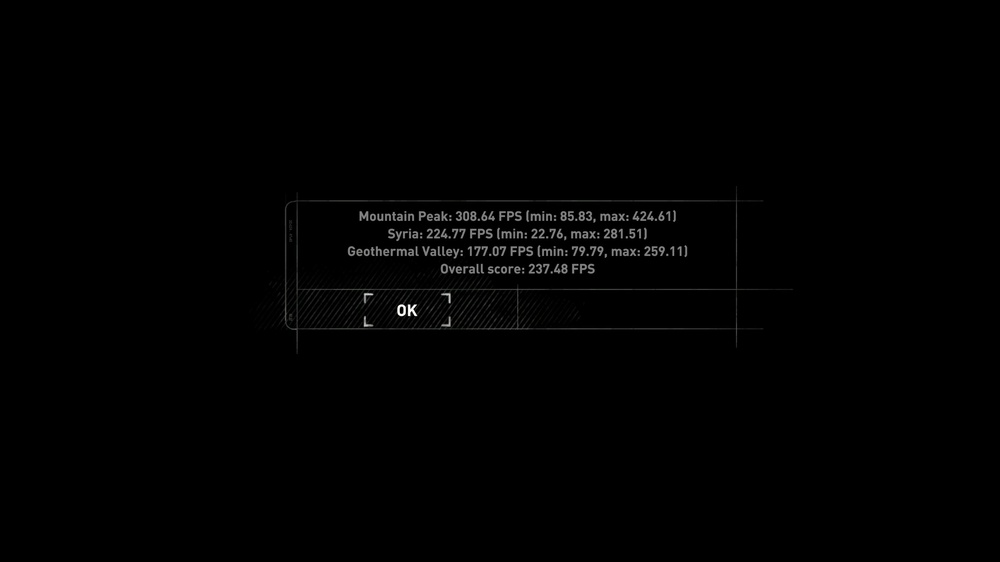

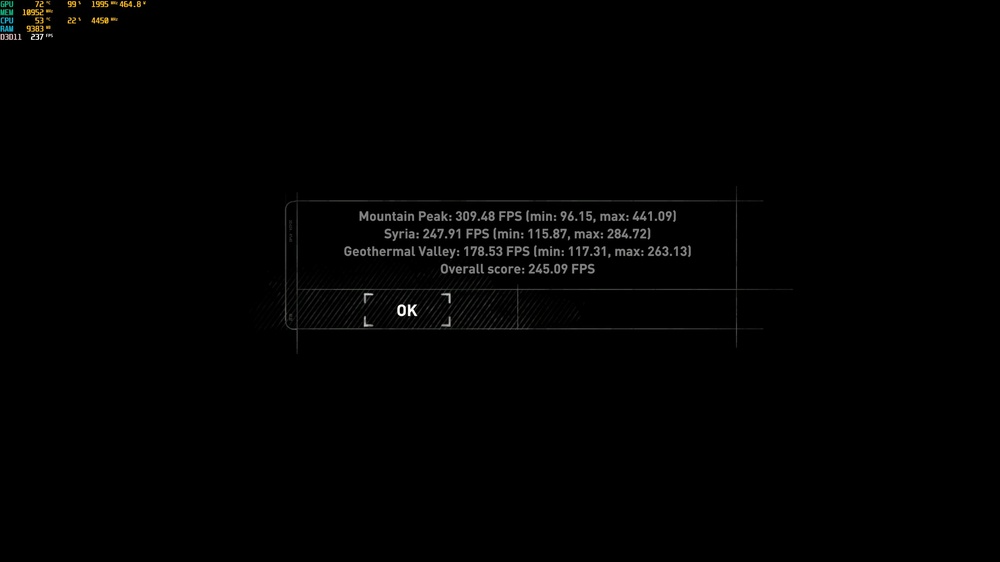

Just added an update to my last post; the tuning seems to have a profound effect on its performance. Probably the last thing would be BCLK tuning to eke out a bit more MHz from the CPU. The idiot in me wants 4.5GHz, not 4.450GHz.

-

Got the 5800X3D installed. I wanted to do more tests, but I don't want to take too much time away from the certification training that I have been doing the past couple of weeks. I think I have about a half dozen titles.

With no tweaks made to the BIOS settings besides max fans and 3200MHz on memory, the gains versus the 2700X are quite substantial. That being said, at the moment it does fall behind the 10850K @ 5.0GHz / 4400MHz DDR4.

So, I will need to do a bit of reading tonight on how best to tune the chip to maintain peak performance, and will likely follow up in the AM.

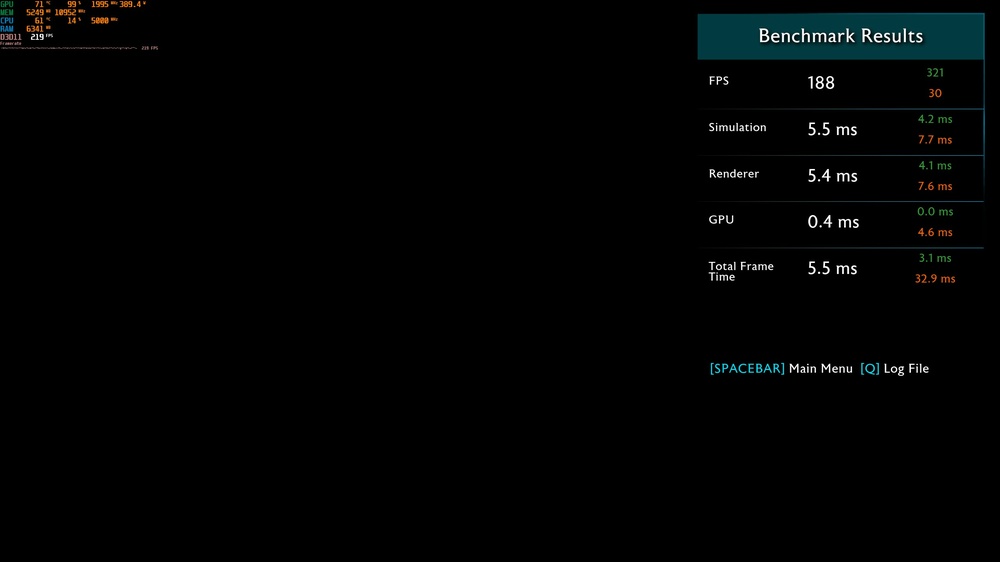

Just a quick comparison on one of the games I like to play every so often.

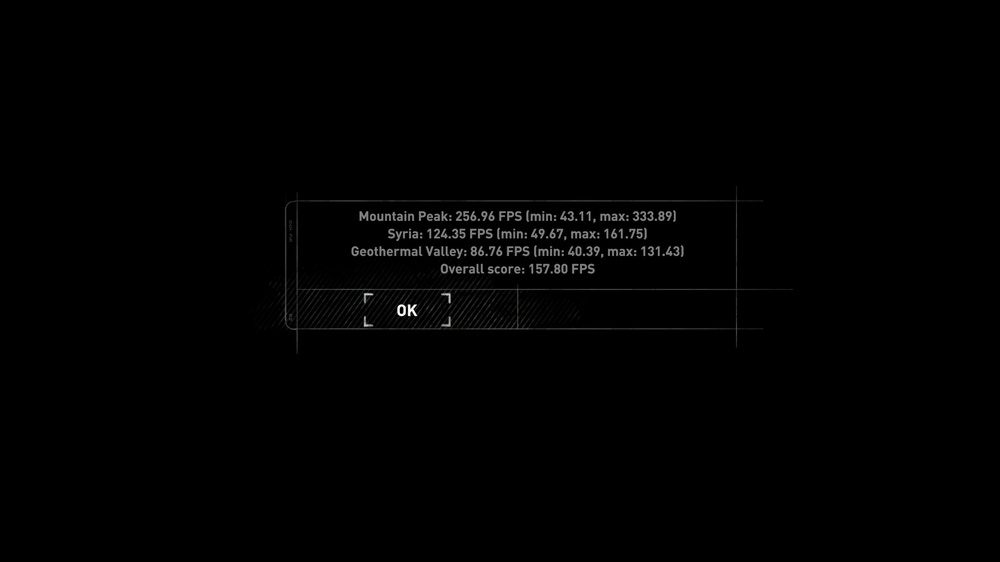

Rise of the Tomb Raider | DX11 | AA off | Standard Hair | Otherwise Max Settings

10850K @ 5.0GHz | DDR4 4400MHz

2700X @ 4.0GHz | DDR4 3200MHz

5800X3D @ Stock | DDR4 3200MHz

Might be a bad test, or a bad stock tune by the motherboard, who knows. Peaks are higher than the 10850K but the minimums are bad by comparison. That being said, it's still double, or more than double, the 2700X.

Anyways, some reading is in order before documenting more benchmarks.

NEW TEST EDIT:

5800X3D @ 4.450GHz | DDR4 3200MHz | Resizable BAR Enabled | PBO2 Tuner -30

Quite an improvement, the min/max/avg now handily surpass the 10850K in 2/3 zones in the benchmark under the above settings. Things are getting interesting!

-

2 hours ago, ryan said:

I wouldn't go to burger King and ask the manager a question about engineering. But hey you never know.

Gpu utilization is low because the cpu is the bottleneck due to the way it was programmed. Everyone that has the game will experience this. Crysis should be doing the same thing as the 2nd and third. They did not account for future 64 core cpus

I'm not asking the manager. Clearly no one from CryEngine is here to discuss, so I'm talking to their customers to see if their experience at Burger King is the same as mine.

42 minutes ago, jaybee83 said:

what res are u testing it at? try and bump it up to the max. also set your gpu back to full stock, see if that does anything for the gpu utilization. if it does, might indeed be a cpu bottleneck potentially caused by the unoptimized engine.

its at least capable to scaling up to 6 cores / 12 threads....

2560x1440

I'm guessing this is just what you can expect from Crysis 3. In Crysis 1 Remaster the utilization is maxed out pretty consistently. I haven't tried Crysis 2 yet. Not sure if I have it lol

I moved on to get some testing going for the 2700X, as I want to install the 5800X3D lol. Gathering some data first is hurting my soul XD

-

Yeah, vendor reps are not permitted to talk about their competition here, per the forum rules iirc.

It turns the conversation into a toxic playground instead of the friendly competitive nature that I personally enjoy. That goes for any vendor: they are free to support their products, but "x is better than y" is left for members to discuss.

-

I enjoy SFF for my daily driver. Depending on how much I am trying to pack into it, it can be as fun as when I was modding my Alienware 17 R1. For benching it wouldn't make much sense at all.

-

Welcome to the Forum!

-

41 minutes ago, poprostujakub said:

Well, as far as it won't need a lot of work with terminal I can try it.

I'm a refugee from NBR but thanks 🙂

There is no BIOS that supports newer cards, I checked this first...

P180HM is the prettiest laptop I've ever seen, so I had to have it 😉

Well, another update - with HD8970M it doesn't even light up the screen and after one minute it shuts off, so no commmunication to KBC. It's sad, because W7170M's are very cheap...

Yeah, it has to be able to read the sensors it wants or it turns off the system. Obviously Clevo cards have a better chance of working, but MSI is usually the next best option in terms of at least POSTing and letting you boot up.

When another member on NBR (can't remember the username) tried to get some newer cards working in the M4600, they were only able to get some cards working under Linux, as Windows would crash or lock up. Same drill for a couple of the other Dell Precision systems. No idea if that will hold any water for the P180HM though. Worth a try.

-

I should have the 4980HQ sitting around my house somewhere. It worked in the M6800 but not in the PC I originally wanted to use it with (Alienware 17 R1).

-

1 hour ago, Mr. Fox said:

It's too funny how our brains work. It's a fault that I love and hope never gets fixed, LOL. A picture is worth 1,000 words. As you can see, this monopolizes my desk. If I can not feel like I am going insane using one 4K display (TBD) I might actually be able to put my computer next to the monitor on my desk instead of on a microwave cart next to it.

OK. I feel better now. A cluttered workspace is better than a cluttered mind. 🤣

That's quite the command center... wow, 36 controlled from one station. Crazy. What kind of KVM switch can do that?

On another note, rubbish designed by an idiot (like this) makes it hard to take a brand seriously. This proprietary abortion is about as stupid as an Alienware and equally disgusting to look at.

Recent drama aside... what Linus does best... purpose-driven entertainment.

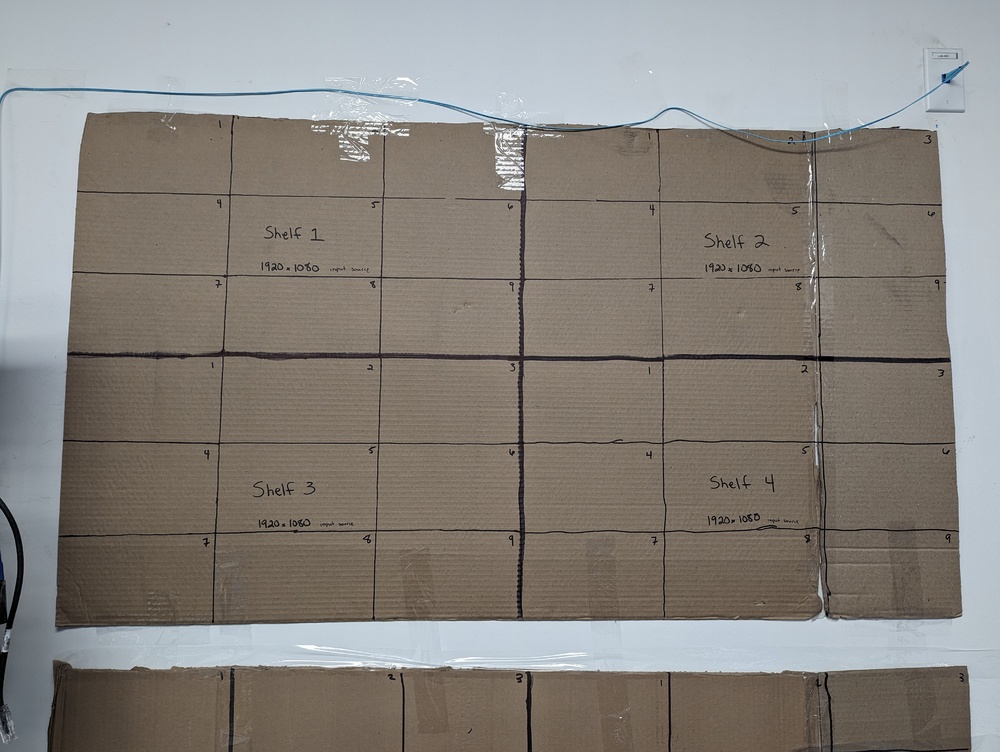

I don't use KVMs anymore, too limiting. Great for granular stuff if needed though. I use a multiviewer, which basically takes multiple inputs and crops them into one display.

What I use to control all of them are USB synchronizers, kind of a flimsy product but it gets the job done.

My home station right now is a bit of a mess, mostly because I am running 2 desktops, benchmarking one while I work on some certifications on the other until that is wrapped up. I can finally start doing some SLI/Xfire benchmarks when winter hits.

-

This is how it was set up when I started working here: 2x16 KVMs. It works fine. The problem is you can only view 2 systems at a time, and if you lose signal, or never had it when booting, you have to cycle the system, reseat cables, or try another cable, as the signal doesn't return when re-plugging with the system live.

This method allows me to use 9 systems per monitor, totaling 36 on this cart. With the synchronizer and QMK I can run all production at the same time. Instead of producing 2 at a time, I now run 36 in real time. Think CCTV monitors and how they crop a display, but for PCs.

This mock-up is to see if there is enough physical real estate to make sense of the approach. A 4K HDTV is cheaper than four 1080p monitors these days. Basically, you take the prior setup and add another "multiviewer" in front of it so that everything outputs to a single panel.

If everything is wired up appropriately, I could scale up to hundreds of systems from one keyboard with no remote software and no network dependencies, and even simplify processes with QMK.

I'm not doing Photoshop or sending emails off these things, that would be hell lol. This is just provisioning, Autopilot, SCCM/MDT deployments, things of that nature. So I just need to see enough to make sure I am making the right configurations for each client.

-

22 minutes ago, jaybee83 said:

i have a nice comparison between my workspace at the office and at home: 2x27inch 1080p monitors vs. 1x32inch 4K display.

to be totally honest: with regards to available workspace, those two are more or less the same to me (the 4k has higher gamut and brightness so nicer picture 😋 and less head movement back n forth due to just one display to focus on)

haha i loved that comment 😁 sorry to hear about that "lost order" bud...fingers crossed all goes well!

lulz here we have a full crapton of enthusiasts basically always going overboard with everything...enter bro @Reciever giving overkill a completely new meaning 😂 65 inch 4K displays for work, dayum! hahaha

I'll snap some pictures of my mockups for you guys in a bit lol

-

9 minutes ago, Mr. Fox said:

I agree in terms of picture quality, but is a super easy to tell the difference in screen real estate. Going from 1080p to 1440p is a massive leap and 4K even more so. As long as you leave the scaling to 100% (where it belongs) a 4K screen is literally the equivalent of four 1080p monitors.

I'm working on converting all my work displays to 65" 4K HDTVs; it's cheaper than replacing/adding monitors at my volume. Plus it also gives some eye candy for when they shop out the room to future clients. I would basically be feeding 4x 1080p multiviewers (each splitting a screen between 9 systems) into one 4K multiviewer (= 36 systems). I also have devices that allow me to control all the PCs at the same time via 1 keyboard.

I really need to take a course or something on cable management though. Routing your cables neatly in a single PC is fine of course, but when you have 4 cables per PC, that's 144 cables on a single "cart" or wireframe shelf. I even have QMK for semi-automating what I need for each of my projects.

Looking forward to 4K :)
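The numbers in this post (9 systems per 1080p multiviewer, 4 of those into one 4K multiviewer, 4 cables per PC) work out as in the quick Python sketch below. The constant and function names are made up for illustration, and the 100-system example at the end is a hypothetical, not an actual deployment.

```python
import math

SYSTEMS_PER_MULTIVIEWER = 9  # one 1080p multiviewer tiles 9 systems
MULTIVIEWERS_PER_4K = 4      # four 1080p multiviewers feed one 4K multiviewer
CABLES_PER_PC = 4            # cables per PC, as stated in the post


def systems_per_display() -> int:
    """Systems visible on a single 4K display."""
    return SYSTEMS_PER_MULTIVIEWER * MULTIVIEWERS_PER_4K


def cables_per_cart() -> int:
    """Cables to route when one cart is fully populated."""
    return systems_per_display() * CABLES_PER_PC


def displays_needed(total_systems: int) -> int:
    """4K displays required to view total_systems at once."""
    return math.ceil(total_systems / systems_per_display())


print(systems_per_display())  # 36
print(cables_per_cart())      # 144
print(displays_needed(100))   # 3 (100 is a hypothetical system count)
```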

-

30 minutes ago, KING19 said:

Exactly.

It makes it seems like GN is trying to milk all the attention they gotten from all of this. They need to go back to making their normal content and be better. Hope this crap never happens again!.

Don't know that I can agree without having seen the video. GN does like to make a few jokes at the other party's expense, but most of the time it's at those that refused to acknowledge the problem.

I don't want for either group to be completely sanitized of humanity. That's how you get videos made by someone from HR.

-

2 hours ago, electrosoft said:

Steve had basically issued their own version of LTTs "Here's the Plan" video which was a mix of very VERY indepth policy and testing structures (along with future plans) but it was a tad tone deaf and moreso terrible timing.

I said it before and I'll say it again. GN should have just dropped that first analysis of LTTs testing methodology along with the prototype shenanigans and left it at that.

That's fair, GN tends to do that sort of thing periodically. I don't know about the tone-deaf portion since I didn't see it, but the timing is definitely bad, since it would be seen as an additional swipe at LTT (regardless of whether that's true or not). It's fine in my book to criticize, but if the party in question has accepted it, spent time on reflection, and is re-entering the market, then you need to allow them to get up.

-

Didn't watch it either, what's going on?

-

8 minutes ago, Raiderman said:

USPS "currently awaiting for package"...haha they have until tomorrow. Never been this disappointed with Newegg. Ordered on the 19th, supposedly shipped on the 21st. I have always received my orders in 3 to 4 business days, with full tracking available. This is stupid.

Sounds like you are getting the Amazon treatment I usually get.

-

For any further inquiries regarding price, please reach out over PM.

Thanks everyone!

-

2700X to 5800X3D upgrade! With a 10850K Cameo!

in Desktop Hardware

Posted

Citations post added as the second post so that people can view the screenshots if they want.

If anything is amiss I can easily rerun benchmarks for the 5800X3D and 10850K. If 2700X benches are needed, that will probably wait until I have a bundle of tasks to do, as I will have to swap the CPU.