MyPC8MyBrain

Member · Posts: 685

Everything posted by MyPC8MyBrain

-

Is Vegas "tropical" enough? These weren’t cherry-picked images. I simply went back to my older posts and selected the most recent ones I had shared. You’re free to read the thread from my first post onward; there are plenty of screenshots covering idle, load, and various test conditions.

As for ambient: yes, I understand exactly what it means. Those measurements were taken under specific conditions that I documented at the time: BIOS Cool mode, Windows Power Saver, and a controlled environment. When switching to Ultimate Performance, I explicitly noted the +10°C increase. None of this was hidden or misrepresented.

Regarding efficiency: I never claimed that Alder Lake's architectural efficiency exceeds Arrow Lake's. My point was strictly about thermal behaviour under mobile constraints. Architectural efficiency on paper and real-world thermal behaviour in a confined chassis are not the same thing, and they often diverge. Your interpretation mixes two separate discussions:

- Silicon-level efficiency (input power -> compute output)
- Thermal behaviour and sustained performance within a mobile cooling budget

The former favors the newer architecture. The latter depends heavily on bin quality, power limits, chassis design, and thermal headroom, which is the context of my earlier comments. So let’s keep the discussion technical and grounded in actual mobile behaviour rather than assumptions about what someone does or doesn’t understand.

-

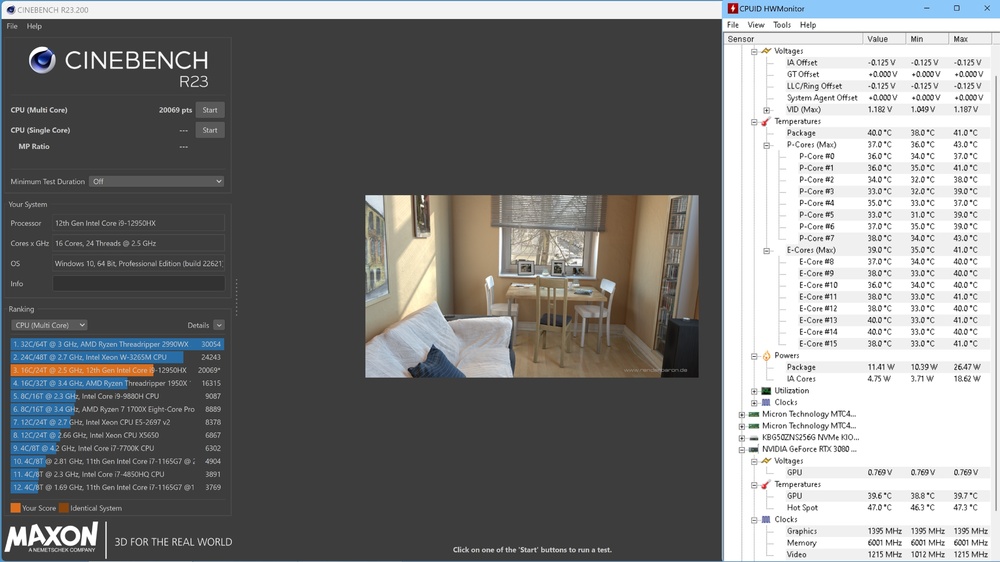

Wooohat… you don’t believe in fairy tales? 😮 Then maybe you’ll believe the i9-12950HX running cooler and more efficiently than your brand-new Core Ultra 9 285HX? (Images of my own system attached, since you think numbers like these belong in storybooks.) Surface temps, idle temps, and CB23 results were all posted in this forum long before the current generation even existed. Nothing new, nothing fabricated.

None of this was “luck of the draw.” It took 3 months, 6 replacement units, and refusing to accept canned responses from entry-level techs who weren’t even aware of these thermal behaviors. I escalated repeatedly, every time with technical data they couldn’t refute. Only after that did the correct unit arrive. Had that final system not performed the way it should out of the box, I was ready to walk away from Dell altogether, and I’ve been buying Precision systems since the M60 era in ’02–’03. This isn’t my first rodeo.

So no, these numbers aren’t fairy tales. They’re what happens when you understand the platform, strip out the inefficiencies, and hold Dell accountable for delivering a properly binned unit.

-

My personal experience with multiple high-end Dell Precisions confirms this extreme variation:

- 6 bad units: three 7670s and three 7770s, all idling at 90°C+ and throttling easily.
- 1 golden unit: the final replacement, with the same model and the same flagship CPU, ran at ambient +2°C at idle. That proves a low-voltage, low-heat chip does exist in the bin, but the average chip is a thermal disaster.

The high temperatures we’re seeing aren’t just an unavoidable side effect of efficiency; they’re largely the result of wider binning tolerances. In other words, the chips span a much broader quality range, which leads to higher heat output on the weaker bins. Intel smooths that out by raising the allowed thermal ceiling (higher Tjmax), so everything appears normal under sustained load. I can dive deeper into my theory, but that’s the short version.

I’ve been busy dealing with life since 2022, and I stopped tracking the fine-grained CPU landscape right around the time 13th gen showed up. To make matters worse, Intel introduced ‘Undervolt Protection,’ which blocked ThrottleStop undervolting and shut the door on the one tool that made laptop tuning predictable. So yes, I missed some of the incremental changes, but the fundamentals haven’t shifted: hotter silicon, wider bins, higher Tjmax, and fewer user controls.

True, with a distinction. Yes, the scheduler will push the CPU/GPU to Tjmax regardless of paste quality when the heatsink’s thermal capacity is this limited. But that doesn’t mean the paste is irrelevant. Your operational thermal buffer matters. If the system is already idling in the 80–90°C range because the interface material isn’t performing well, then half of your thermal window is already gone before the real workload even starts. That window should ideally begin only a few degrees above ambient, not 40–50°C higher. Starting that close to Tjmax means you hit the ceiling almost instantly, which forces the system into aggressive throttling long before it should.
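To put rough numbers on the "operational thermal buffer" idea, here is a minimal Python sketch. It assumes a 25°C room and a 100°C Tjmax purely for illustration (check your own CPU's spec sheet for the real Tjmax); `thermal_headroom` is a hypothetical helper, not part of any tool.

```python
def thermal_headroom(idle_c: float, tjmax_c: float, ambient_c: float) -> tuple[float, float]:
    """Return (degrees left before Tjmax, fraction of the ambient-to-Tjmax window used at idle)."""
    window = tjmax_c - ambient_c
    if window <= 0:
        raise ValueError("Tjmax must be above ambient")
    headroom = tjmax_c - idle_c
    used = (idle_c - ambient_c) / window
    return headroom, used


if __name__ == "__main__":
    AMBIENT_C = 25.0  # assumed room temperature
    TJMAX_C = 100.0   # assumed Tjmax, for illustration only
    for label, idle in [("bad unit", 90.0), ("golden unit", AMBIENT_C + 2)]:
        headroom, used = thermal_headroom(idle, TJMAX_C, AMBIENT_C)
        print(f"{label}: {headroom:.0f}°C headroom, {used:.0%} of the window used at idle")
```

Under these assumptions, the unit idling at 90°C has already used roughly 87% of its thermal window before any work starts, versus about 3% for the unit idling at ambient +2°C.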

-

Ooh wow, 105°C Tjmax 🤯 That can’t be good for the silicon die or for overall performance. Ignore Intel’s attempt to cover up their cheap edge-of-wafer dies; that much heat is still excessive and indicates one of two things: either the cooling is insufficient for the 285HX in that chassis, or the CPU needs repasting and reseating. Another user seeing similar temps is not an indicator that this is OK! It just means you both have a similar issue. Last round I went through 6 replacements before they finally sent a unit with a proper CPU. Before that I was idling at 90°C+ out of the box and was ready to just give up on Dell; with a good CPU I was idling out of the box at 2–4 degrees above ambient. (It’s documented somewhere in the 7670/7770 owners thread, and that CPU was nowhere near the 285HX in efficiency.)

-

While I’m already on a roll… what happened to the Xeon option in the new lineup? And we’re still stuck with no real keyboard upgrade path on the flagship models. You basically need to buy a teenager’s Alienware if you want a proper keyboard or a non-ISV GPU out of the box. And yes, I’ll say it outright: I miss the old clicky keys, and everyone in the office can deal with it. If they offered a lever that advanced the paper like a typewriter, I’d probably add that too just for the experience. 😅

-

A bit of context on ISV GPUs, ECC, and why these distinctions made sense in the past but hold very little value today, especially in mobile systems.

Historically, ISV-class GPUs (Quadro/RTX Pro) ran with ECC VRAM enabled by default, and you couldn’t override it. Their drivers were built for long-duration, mission-critical workloads, so clocks were intentionally conservative. This made sense for environments where any calculation error could have financial or legal consequences: think Wall Street, CAD/CAM shops, or regulated verticals.

Back in the early 2000s, Precision mobile workstations were literally the only laptops offering system-level ECC memory, and ISV certification actually mattered. The software ecosystem was a mess: standards were looser, OpenGL implementations varied wildly, and ISV drivers guaranteed predictable behavior across entire application suites. That was the right tool for that era.

Today? The landscape is completely different:

- Modern applications and frameworks self-validate, self-correct, and handle error states internally.
- DDR5 (and GDDR7 on the GPU side) includes on-die ECC that corrects bit errors inside the memory chips themselves, along with improved signal-integrity features.
- Driver ecosystems are mature and unified; the old instability that justified ISV certification is largely gone.
- Even system ECC memory is increasingly redundant for most mobile workflows.

The big reality check: in mobile platforms, the theoretical advantage of ISV GPUs (sustained, stable clocks) simply cannot manifest. Modern mobile thermals hit the ceiling long before ISV tuning makes a difference, and Pro and non-Pro GPUs throttle the same way once the chassis saturates. That endurance advantage only shows up on full desktop cards with massive cooling budgets.

That leaves one actual, modern-day benefit: legacy OpenGL pipelines. Outside of that niche, ISV certification brings almost nothing to the table, desktop or mobile.

Bottom line: ISV certification made sense 15–20 years ago. Today, especially in mobile workstations, it’s a legacy checkbox with minimal practical value. Non-Pro GPUs offer the same real-world performance and, in many cases, better flexibility for modern workflows.

-

Bingo. 🙂 This may come as a shock, but people like us do exist, Dell. Our workflows cannot run on ISV-certified GPU drivers. ISV certification adds nothing to what we do, and none of our end users run ISV drivers. We validate our environments and new releases exclusively using non-ISV drivers. And as several members here have already pointed out, we need a business-class system that we can also game on after hours. I don’t see how that contradicts the workstation designation. Do they really expect us to buy an Alienware just to play games after work? Or should we simply settle for the limitations? Which one is it, Dell?

-

I get that (I do the same myself), but I still need to run 4K workflows, and the option simply needs to be there. This is supposed to be a workstation, and productivity should never be treated as optional. For years we had full configurability, down to choosing whether or not to include a webcam in the bezel. Now we’re stuck with the same IPS panel generation after generation. It’s not as if Dell lacks compatible displays; they have an entire lineup they could fit here. Yet the flagship productivity platform doesn’t even get a touch option, let alone a modern high-resolution panel. Removing basics like a 4K option sends the message: here’s the flagship, be happy we still give you 2K. That just doesn’t sit right.

And I hear you on Raptor Lake being dated, loud and clear. The 16 is still a valid system, but the lack of any non-ISV GPU option makes it a poor fit for many workflows. Not everyone runs ISV-bound workloads, and Dell’s current configuration approach essentially signals that this entire segment is now reserved exclusively for ISV-certified use cases. This is exactly what I mean when I say the platform feels compromised at every turn. Dell and its Precision line used to stand for an uncompromising frontier.

What makes it even more of an oxymoron is that Dell isn’t struggling financially; the company was bought back by its original owners and is in a strong position. This overhaul wasn’t driven by necessity; things were working well. It feels like optimization for the sake of optimization, done simply because they can rather than because the platform needed it, unless the goal is to turn every model into a flat, disposable pizza-tray design you’d grab at a 7-Eleven.

-

The 18-inch model increased screen size without increasing resolution. Where is the 4K option, or even any panel choice beyond IPS? Meanwhile, the 16-inch model gains a few features over the 18 but drops the 4th NVMe bay. I'm also not sure what you’re comparing the new 16’s thermals against; in my experience, packing this much dense hardware into a smaller chassis leaves far less thermal headroom than the larger 17-inch-and-up designs. And since Dell removed all modularity from Alienware, there’s no longer any option on either platform to order a non-ISV GPU like we used to.

-

I have the MB16250, MB18250, AA16250, and AA18250 all spec’d to the max, but I still can’t bring myself to pull the trigger on any of them. Every one of these new units feels compromised in one way or another. The MB16250 is probably the least compromised, but based on past experience its thermals are still weaker than the MB18250's, and that one comes with the odd tall screen ratio I’m not sold on.

I also looked at the AA16250 and AA18250. The hardware baseline is solid, but Dell removed every bit of modularity these platforms used to have. That alone is a huge step backward. And the configuration pages now look unprofessional and borderline insulting. The way they structure component options feels deliberate, almost as if they assume no one notices. For example, they list a single 16GB or 32GB module supposedly running in “dual-channel,” yet they offer no 2×8GB or 2×16GB options, not even a basic single 8GB stick. Everything is pushed toward unnecessary upgrades and more expensive configurations that absolutely don’t need to be forced. Or they intentionally omit the top Intel CPU options from the AA16250/AA18250 lineup entirely. How does that make any sense, except as ‘going green’ on their own cost structure? What a sad state of affairs. For the first time ever, I’m seriously considering buying the discontinued 7780 instead.
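For context on the "dual-channel" label: a DDR5 SODIMM splits its 64-bit data bus into two 32-bit subchannels, which may be why a single module gets described that way, but its theoretical peak bandwidth is still that of one 64-bit channel. The sketch below compares one module against two; the 5600 MT/s speed is an assumed example, real-world efficiency is ignored, and `peak_bandwidth_gbs` is a hypothetical helper.

```python
def peak_bandwidth_gbs(mt_per_s: int, modules: int, bytes_per_module: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal).

    Assumes each DDR5 SODIMM provides one 64-bit (8-byte) data path,
    internally split into two 32-bit subchannels, with one module per channel.
    """
    if modules < 1:
        raise ValueError("need at least one module")
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s
    return mt_per_s * bytes_per_module * modules / 1000


if __name__ == "__main__":
    SPEED = 5600  # MT/s, an assumed example speed
    print(f"1 x module : {peak_bandwidth_gbs(SPEED, 1):.1f} GB/s")
    print(f"2 x modules: {peak_bandwidth_gbs(SPEED, 2):.1f} GB/s")
```

At the assumed speed this works out to about 44.8 GB/s for one module and 89.6 GB/s for two, which is why a 2×8GB or 2×16GB option would matter for bandwidth-sensitive work.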

-

https://ollama.com/library/llama3.1

Minimal requirements for Llama 3.1 8B (quantized):
- VRAM (GPU memory): ≥ 8 GB
- System RAM: ≥ 16 GB

If you are interested in the larger, more powerful Llama 3.1 variants, the requirements scale up dramatically, moving them out of the range of most standard PCs:
- Llama 3.1 70B: ≈ 35–50 GB VRAM
- Llama 3.1 405B: ≥ 150 GB VRAM

Hardware support: https://docs.ollama.com/gpu

Compute capability (CC): https://developer.nvidia.com/cuda-gpus
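A quick way to sanity-check numbers like these is the usual rule of thumb: weight memory ≈ parameter count × bits per weight ÷ 8. This is a minimal sketch under that assumption; the 4-bit figure is an assumed quantization level (real quant formats use slightly more), the result covers weights only (no KV cache or runtime overhead), and `weight_memory_gb` is a hypothetical helper.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights alone, in GB (decimal).

    Excludes KV cache, activations and runtime overhead, so real usage is higher.
    """
    # (params_billion * 1e9 params) * (bits / 8 bytes) / 1e9 bytes-per-GB
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    BITS = 4  # assumed ~4-bit quantization
    for size in (8, 70, 405):
        print(f"Llama 3.1 {size}B: ~{weight_memory_gb(size, BITS):.1f} GB of weights")
```

At 4 bits per weight the 70B weights alone come to about 35 GB, matching the low end of the range above; longer context (KV cache) and runtime overhead push the total higher.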

-

@Easa The issues regarding NVMe and SSD drive failures, including data loss, have been widely reported and linked primarily to a specific Windows update.

The suspected update: The update most frequently cited in connection with the drive problems is KB5063878, a security update for Windows 11 version 24H2 (though some reports also mention earlier updates and Windows versions).

Symptoms and conditions: Users reported that after installing the update, drives (NVMe and some SATA SSDs/HDDs) could disappear from the system, fail to boot, or show as RAW/corrupted, leading to data loss. This issue was often observed:
- during or after large, continuous write loads (e.g., transfers over 50GB);
- especially if the drive was more than 60% to 80% full;
- on systems with specific SSD controllers (particularly Phison, though other brands were mentioned).
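If you want a quick pre-flight check before a big copy, this hypothetical Python helper flags the two conditions users reported. The 50GB and 60% thresholds come straight from those reports and are heuristics, not documented limits; the check uses only `shutil.disk_usage` from the standard library and cannot detect whether the update is installed or which SSD controller you have.

```python
import shutil

# Heuristic thresholds taken from the user reports above, not official limits.
LARGE_WRITE_BYTES = 50 * 10**9  # "transfers over 50GB"
FILL_WARN_FRACTION = 0.60       # "more than 60% to 80% full"


def transfer_risk(total: int, used: int, transfer: int) -> list[str]:
    """Return the reported risk conditions a planned write would hit (empty list if none)."""
    reasons = []
    if transfer > LARGE_WRITE_BYTES:
        reasons.append("large continuous write")
    if (used + transfer) / total > FILL_WARN_FRACTION:
        reasons.append("drive will be more than 60% full")
    return reasons


def check_drive(path: str, transfer: int) -> list[str]:
    """Hypothetical helper: apply transfer_risk to the drive that holds `path`."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    return transfer_risk(usage.total, usage.used, transfer)


if __name__ == "__main__":
    print(check_drive(".", 80 * 10**9))
```

For example, `transfer_risk(1000 * 10**9, 500 * 10**9, 120 * 10**9)` returns `["large continuous write", "drive will be more than 60% full"]`; splitting the copy into smaller batches or freeing space first would clear both flags.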

-

Wooohat... manual fan control? Has the world gone mad? That is so pedestrian in an era of self-driving cars. Solid, basic, working technology belongs in the 2000s and earlier, when things weren't built with hidden agendas and corner cutting in mind just so a bunch of deadbeat execs can buy another weekend house. And don't anyone dare say they didn't do something like this in their time.

-

This is mainly related to advanced battery health management features designed to prolong the battery's lifespan. The "native" way to override this is to use the Primarily AC Use mode in Dell Optimizer or Dell Power Manager, or to manually set a Custom charge start/stop limit (e.g., start at 50%, stop at 100%) so the laptop runs almost exclusively on AC power when plugged in, thus preserving the battery's lifespan.

Dell Optimizer or Dell Power Manager: look for a section related to Battery Information or Battery Settings. You will find options designed to maximize battery lifespan for users who are always plugged in. The key settings are:

- "Primarily AC Use": designed specifically for users who mostly operate the computer while plugged into an external power source. It automatically lowers the maximum charge threshold (e.g., to around 80%, or a value set by Dell) so the battery stops charging and the system runs on AC power.
- "Custom": gives you the most control. Manually set the percentage at which the battery will Start Charging (e.g., 30%) and the percentage at which it will Stop Charging (e.g., 100%). Result: if the battery is above 30% and the laptop is plugged in, it will not start a new charge cycle; the system runs entirely on the AC adapter and the battery stays at its current level (acting only as an emergency UPS/buffer for peak loads).

System BIOS/UEFI: use this to set the behavior at the hardware/firmware level, or if you do not have the Dell software installed.
- Find the primary battery charge configuration: look for an option like Primary Battery Charge Configuration or Custom Charge Settings.
- Set the thresholds: you can usually set the Start Charge and Stop Charge percentages directly in the BIOS. Setting Stop Charge to a lower value (e.g., 80%) will force the system to run on AC power once that level is reached, achieving more or less the behavior you are after.
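The start/stop thresholds behave like a simple hysteresis band. This minimal Python sketch models that rule as described above; it is an assumed simplification for illustration, not Dell's actual firmware logic, and `next_charging_state` is a hypothetical name.

```python
def next_charging_state(level: int, charging: bool, start: int, stop: int) -> bool:
    """Simplified custom-charge rule (an assumption, not Dell's firmware logic):
    start charging when the level falls below `start`, stop once it reaches `stop`,
    and otherwise keep doing whatever the charger was already doing."""
    if not 0 <= start < stop <= 100:
        raise ValueError("expected 0 <= start < stop <= 100")
    if level >= stop:
        return False
    if level < start:
        return True
    return charging


if __name__ == "__main__":
    START, STOP = 30, 100  # the Custom example from the post
    charging = False
    for level in (65, 40, 29, 50, 99, 100, 90):
        charging = next_charging_state(level, charging, START, STOP)
        print(f"{level:3d}% -> {'charging' if charging else 'on AC only, not charging'}")
```

The band between the two thresholds is what keeps a plugged-in battery idle instead of constantly topping up: at 65% or 40% nothing happens, charging only kicks in below 30% and then continues until it reaches the stop value.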

-

If you’re plugged into wall power, you can try disconnecting the battery so it’s no longer part of the system’s power management loop. It might be worth experimenting with a clean OS installation while the battery is disconnected, so the system builds its initial power profile more like a desktop. After setup, reconnect the battery so it functions more as a UPS-style backup rather than part of the active power delivery chain. I’ve seen similar behavior before, so this approach might help mitigate the performance throttling effect under heavy load. It’s also possible there’s a low-level BIOS flag (not exposed in the standard interface) that could toggle this behavior natively — Dell loves burying those.

-

@win32asmguy Interesting. In theory, a standard SODIMM in a system that supports CSODIMM should still work, just at a lower speed with CKD disabled. Many high-end laptops that support CSODIMM still ship with standard SODIMMs, while the underlying architecture is future-proofed with CSODIMM support. My guess is this is some intentional nonsense by Dell to secure the memory-upgrade monopoly they started with CAMM modules.

-

After collecting feedback here and watching some of the review videos, I think I can tentatively justify some of the new design choices. That’s not to say I like them—but the more exposed aluminum look may be part of the thermal strategy. From everyone’s reports, thermals seem solid, even if no one can pinpoint exactly why. My guess is that the exposed chassis is acting as a better heat conductor than the old plastic brackets. This is speculation based on what we’ve seen so far.

-

I was looking at the brief specs for Intel® Core™ HX-Series processors (14th gen). Interestingly, the Core Ultra desktop processor shows a 192GB limit with only 2 memory channels, while the Core Ultra mobile 285HX lists a 256GB limit, still with 2 memory channels. CAMM's 4-channel support is still up in the air outside of Dell's marketing bubble; until CPUs ship with 4 IMCs (integrated memory controllers), CAMM is pointless at the moment. Mobile platforms with these processors will never be able to utilize CAMM's 4 channels, since the CPU is soldered to the board. And since the premise of CAMM is quad channel, the technology as currently implemented is moot.