Everything posted by Ashtrix

-

The way AMD's new CPUs work is pretty radical. Whatever cooler you use, the chip will always boost until it hits 95C. That is fine on an AIO, a midrange air cooler or a high-performance Noctua. The real point of discussion, and the one I want to hear about, is that the cooler will be dealing with high temps 24/7, not how TPU said the CPU is going to melt down and throttle. We already knew from AMD's presentation, reviews and slides that Zen 4 will always hit 95C no matter what.

The point @Mr. Fox mentioned is also a very important observation: how will the parts hold up when they run this hot all the time, a.k.a. slow cooking? It means the HSF is always running at full tilt, be it the fans or the whole vapor chamber / heat pipe apparatus doing its thing at max.

Silicon is silicon. Unless there's some significant change in the chemical composition of TSMC N5 silicon, I am not sure how the Zen 4 lineup can be 100% fine running at 95C 24/7.

I can recall one thing: remember Nvidia? They said GDDR6X was rated for 100C+, with a max temp of 113C or something as per Micron datasheets, so the VRAM heating up on the back of the 3090 was supposedly all OK.

We know BAE Systems makes RAD (radiation-hardened) products geared for space: high heat, high radiation, extreme cold. Not every chip is certified for that class. Space-grade tech is not even near the latest lithography node; it is on 200nm to 150nm process technology. The RAD5500 is their latest chip, and it's on a 64-bit PowerPC uArch. Just food for thought.

-

There are many reasons why AMD went with a thicker IHS. One is the LGA1718 backplate and ILM retention system. Intel's LGA1700 has a CPU / mobo bending problem, about which Intel said nothing beyond calling it intended, and the only fix is an aftermarket DIY part. AMD's IHS is strong and thick. The second reason is cooler compatibility. AMD's setup is also rock stable, with zero flex on the motherboard PCB, the CPU PCB + IHS and the backplate, as tested by Igor's Lab.

der8auer's method won't be easy for 99% of users. He is using direct-die cooling plus a delid with custom LM on top, and AMD's entire ILM and backplate are removed as well. So how does that impact the long-run mechanical side of an AM5 board in use? Nobody knows. If we watch his i9 11900K video, it also saw a similar reduction in temperatures, because that was direct die too. Also note how Ryzen 5000 delidding is a total failure; we even saw one chip get wasted in the process here in the Benchmark thread, and that was performed by Rockit themselves.

There are various factors, especially AMD's MCM design: heat from the 6nm IO die and the 5nm Zen 4 chiplets has to spread naturally, without one die's heat transfer influencing the other and ruining performance. All in all it's design engineering from AMD on all fronts: thermodynamics, mechanical stress, the MCM factor and more. I really cannot see AMD failing at designing such a high-tech component; they also make the world's fastest HPC / datacenter x86 processors, which are ofc LGA only. I personally think his method is strictly for OC and extreme enthusiasts, not a realistic option for retail.

The Curve Optimizer is a cool feature for taming the chip, but Optimum Tech's video uses a -30 offset, which is unrealistic by monumental proportions. If we look at Zen 3 behavior with Curve Optimizer in the OCN threads, there's a swath of reports of it being unstable even at -15 to -20, and on the high-core-count processors, individual per-core CO offsets plus stability testing = ultra work. Add CoreCycler too. I would say a mild voltage offset undervolt along with a custom TDP trimmed by 10-20W would be the better approach. A lot of silicon, be it CPU or GPU, ships with higher voltage than it needs so that the whole distribution of chips holds nominal boost at default settings, so finding that best spot would improve things... I could be wrong, since it's just my thinking.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

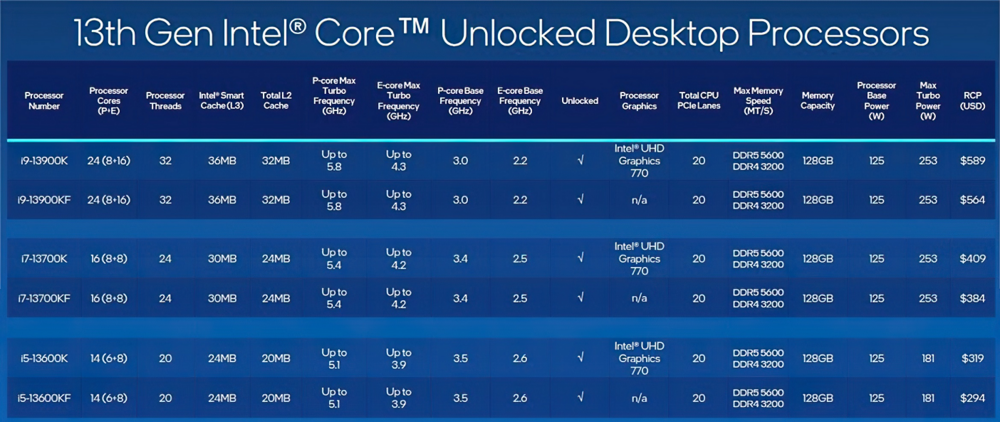

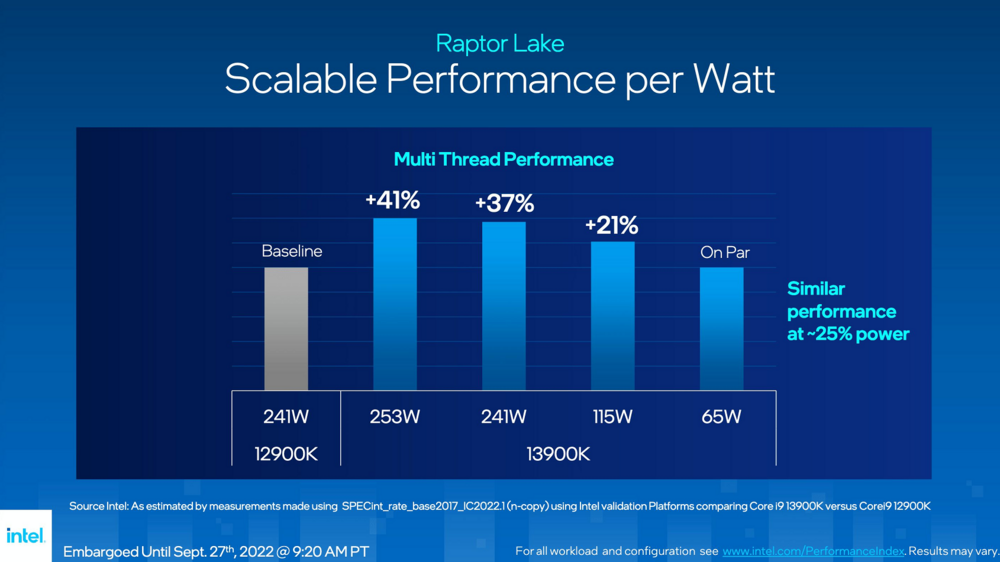

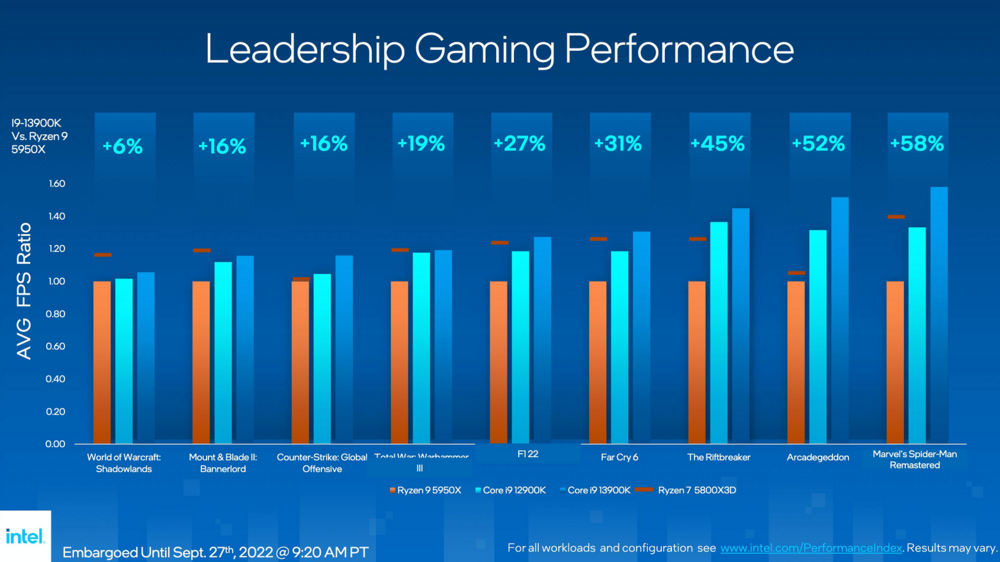

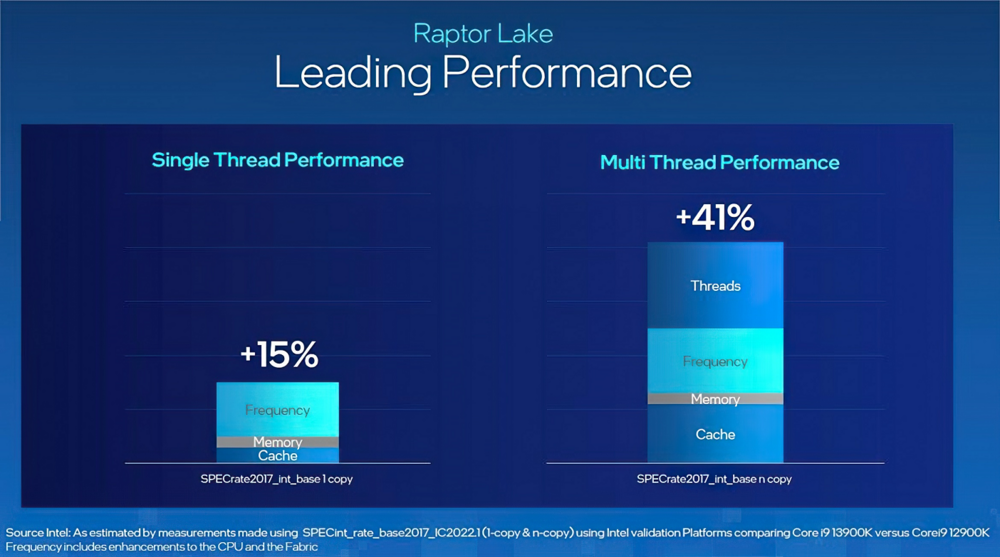

Intel announced the new Raptor Lake processors. The rumored 5.8GHz ST boost is confirmed, the IMC is improved for sure, and there are more E cores across the whole stack for MT. Clocks are up as well, and there are more chipset lanes on the mobos for I/O.

Surprisingly, Newegg's pricing was higher than the MSRP Intel is launching with today. My guess is a last-minute pricing change, since I think they want to take all the potential sales from AMD's Zen 4 platform. Pricing-wise Intel is heavily undercutting Zen 4; the i9 is significantly cheaper than AMD's R9. I guess they are now going to retake the ST lead from Zen 4, while in MT I think AMD might lead.

To be really honest there is not much in it for gaming performance. Zen 4 and RPL will fight hard, even though both are just mild improvements from each camp this round. Realistically, these high-end processors are for those on pre-9th-gen parts.

Overall, for anyone who wants to lock in and buy right now, the Intel Z790 platform could be a good deal, but note the potential EOL of LGA1700. AM5 should outlast the current Intel socket on that end, although AMD's BIOS and QA are still up in the air and they need to prove themselves.

It looks like Intel is also offering that new 65W option, which AMD did with the Zen 4 Ryzen 7000 series as well, due to the high heat density of these modern x86 processors.

The inevitable top-binned i9 13900KS seems to be coming in Q1 2023 with a 6.0GHz out-of-box clock, either as a Zen 4 X3D counter for bragging rights or just because of yields. Gotta see. I think anyone with an existing 12900K who wants to upgrade should skip the 13900K and wait for the KS bin, because that will be the ultimate final chip for LGA1700. As per AnandTech, AMD wanted to get 6GHz on Zen 4 but apparently could not hit it, so it looks like Intel will be first to that magical figure out of the box on a retail chip. Those P cores look mighty powerful. I really wish we got a 10C20T variant instead of this big.LITTLE nonsense, which I really despise.

There is also no improvement on the ILM side of Z790. They probably did not want to break HS and cooler compatibility, so reinforcing the LGA1700 socket and CPU is still a DIY task. Below is the slide deck. Also, probably the last of its kind? The EVGA Z790 DARK, and yeah, all the Z790 boards are released as well.

-

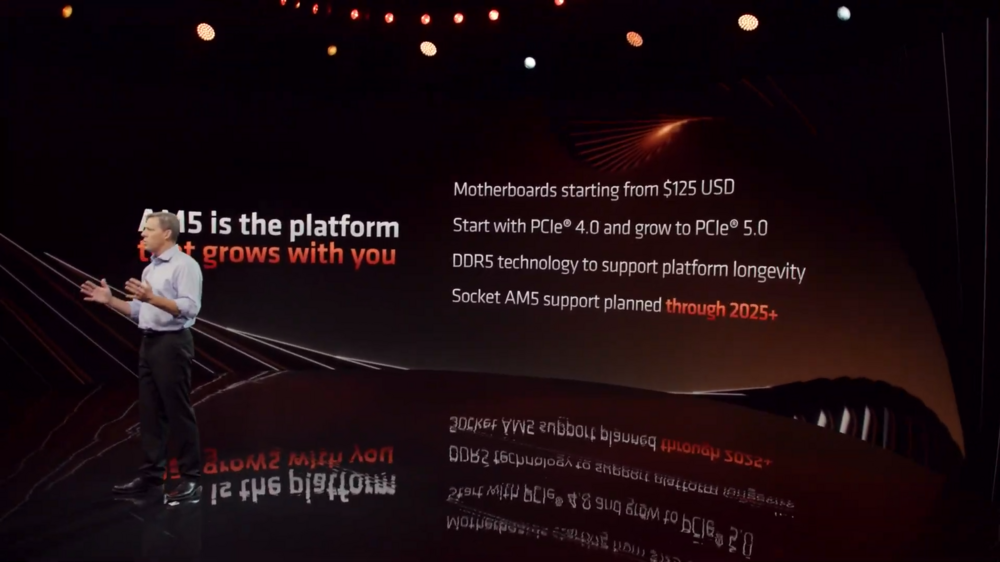

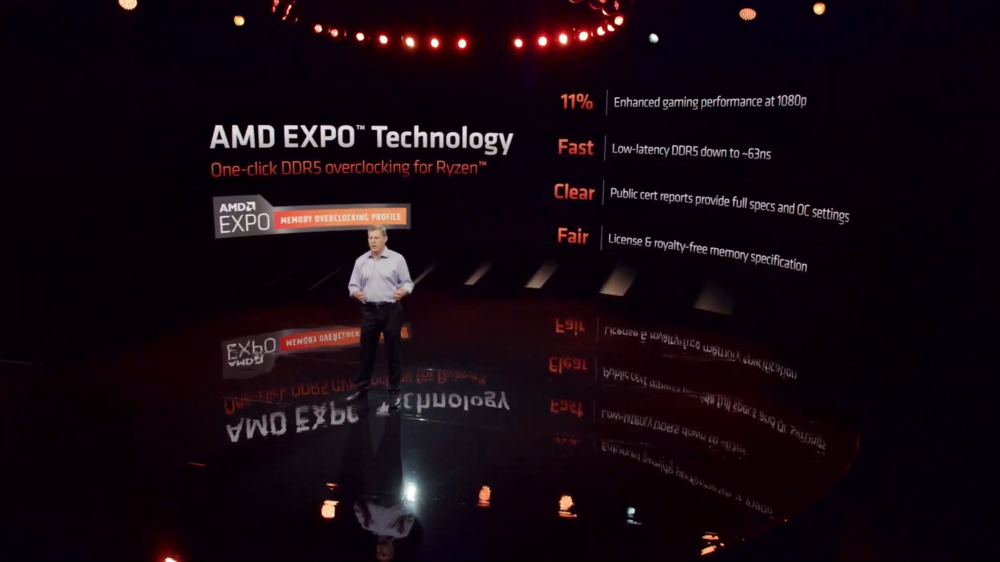

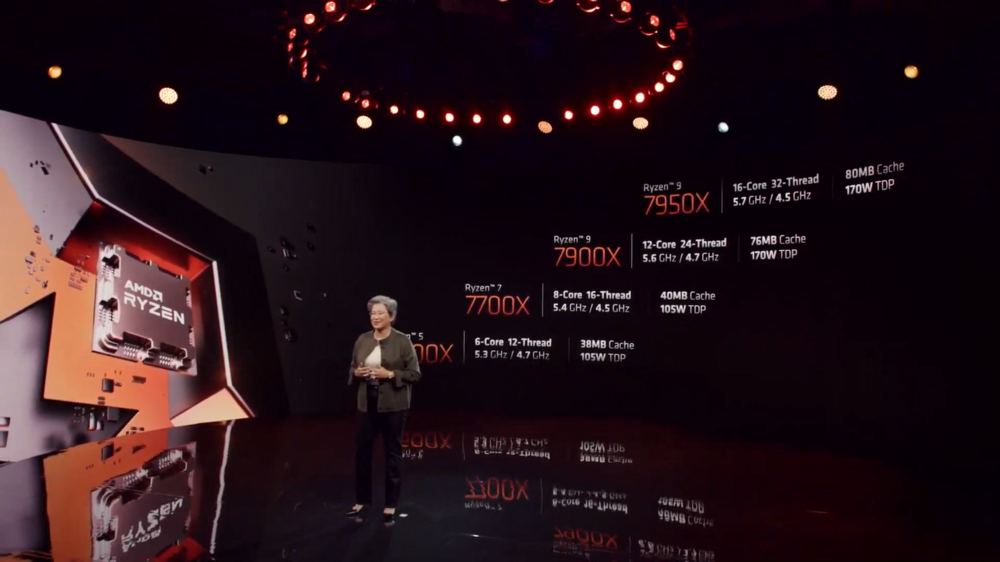

Crossposting from the Benchmark thread. My thoughts on the new Zen 4 launch. Thanks for your time.

Okay, I went through AT, GN and Hardware Unboxed, and this is a good chip on many fronts with some downsides as well. Overall it is a platform that acts as the launchpad for AM5, given that Zen 4 X3D will come next year alongside Meteor Lake, and Zen 5 in 2024 with a new chipset but the same AM5 socket, against Intel with Nova Lake or Lunar Lake or whatever.

Infinity Fabric and Memory

AMD's IF clock is now totally decoupled, and this is a huge thing. The older Zen 3 had a 1:1:1 ratio across three variables: Infinity Fabric clock (Fclk), memory controller clock (Uclk, the IMC) and memory clock (DRAM). Zen 4 moves to IF being totally Auto with Uclk : Memclk at 1:1, so there are just two variables. With IF fully independent, the IO die's IF interconnect shouldn't crap out like on previous Zen processors, a.k.a. the trash USB and other I/O issues, because Fclk is left untouched now. That's my understanding.

So the memory setup is now Auto:1:1. With a DDR5 kit of up to 6000MHz you get IF at 2000MHz (Auto), and both the memory clock and the IMC clock (Uclk) at 2400MHz to 3000MHz depending on the kit. EXPO DDR5 6000MHz is the sweet spot; going faster switches to the 1:2 ratio, which is a memory performance hit. So it's best to stick with EXPO at the 1:1 ratio and OC from there.

Also, AMD is not running in Gear 2 like Intel. The slight difference between Intel and AMD is that Intel had the uncore totally unrelated to the IMC until CML, but with RKL and ADL the IMC-to-DRAM ratio is now geared. AMD decoupled the IF in a similar fashion to CML. Very good thing.

Note that DDR5 here is the same as on Intel: it's the JEDEC standard, and 4-DIMM DDR5 = not great, as we all know. That's just how DDR5 is designed.

IO Die

It moved to TSMC 6nm from GloFo's horrible 12nm. Maximum power on the IOD is now heavily reduced, which lets the Zen 4 CCDs hit max clocks. As per AMD, the IF also runs at a higher speed than on Zen 3, so it's low power again but at a high clock.
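To put numbers on the Fclk : Uclk : Memclk relationship from the Infinity Fabric and Memory part above, here is a minimal sketch. The function name and its parameters are made up for illustration, the 2000MHz Fclk is just the Auto figure quoted in this post, and the point where a board flips to 1:2 is not modeled, so the ratio is passed in by hand.

```python
def zen4_memory_clocks(ddr5_rating, uclk_ratio=1, fclk_mhz=2000):
    """Return (fclk, uclk, memclk) in MHz for a DDR5 kit.

    ddr5_rating: kit speed as marketed, e.g. 6000 for "DDR5 6000MHz".
    uclk_ratio:  1 for Uclk:Memclk 1:1, 2 for the slower 1:2 mode.
    fclk_mhz:    Infinity Fabric clock; 2000 is the Auto figure quoted
                 in the post, treated here as a fixed assumption.
    """
    if uclk_ratio not in (1, 2):
        raise ValueError("uclk_ratio must be 1 (1:1) or 2 (1:2)")
    # DDR transfers twice per clock, so the real clock is half the rating.
    memclk = ddr5_rating / 2
    # In 1:2 mode the memory controller runs at half the memory clock.
    uclk = memclk / uclk_ratio
    return fclk_mhz, uclk, memclk


if __name__ == "__main__":
    for rating, ratio in [(4800, 1), (6000, 1), (7000, 2)]:
        fclk, uclk, memclk = zen4_memory_clocks(rating, ratio)
        print(f"DDR5-{rating} at 1:{ratio} -> "
              f"Fclk {fclk} MHz, Uclk {uclk:.0f} MHz, Memclk {memclk:.0f} MHz")
```

The 4800 and 6000 rows reproduce the 2400MHz to 3000MHz range mentioned above, and the 7000 row shows why dropping to 1:2 hurts: the memory controller ends up slower than it was at 6000 in 1:1.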
The iGPU is a weak 2CU RDNA2 part, BUT it even has DP2.0 alongside HDMI2.1, and DP2.0 is something the Ada Lovelace RTX 40 series doesn't have ROFLMAO. So expect RDNA3 to have DP2.0 and HDMI2.1. It also has native USB-C output, a great option to be honest. Plus it does both decode and encode for H264 and H265, which is a huge win for NAS/NUC boxes built on AMD Zen 4 parts.

Chipset

Improved, especially vs Intel: the backplate reinforcement for the LGA socket prevents any bending. Speaking of I/O, the improvements are nice: PCIe5.0 x16 for PEG on X670E and B650E just like Alder Lake, plus room for 2x PCIe5.0 NVMe if manufacturers want it, and a lot of I/O options like Z690. But the issue, as I already mentioned here previously, is that the downlink is PCIe4.0 x4 this round, basically the same as X570. I am 100% sure AMD will launch a new X770 platform with a PCIe5.0 downlink alongside Zen 5. The re-drivers for PCIe5.0 are probably expensive right now, as it hasn't even debuted in the HPC/exascale/datacenter markets (Xeon is delayed and Genoa is not yet released), so the BOM would be high if they mandated a PCIe5.0 x4 downlink for the chipset.

The daisy-chained chipset system needs to prove itself. AMD says it keeps the I/O density off the CPU and lets the chipset handle it. There's also BIOS Flashback at the chipset/CPU level this time. And many do not even care about bandwidth; they will populate all the slots anyway.

The unfortunate part is that 8x SATA is going away. The chipset has changed a bit, and it looks like there are barely any 8x SATA boards left: only ASRock is making them for both Intel and AMD, and even ASUS dropped them. EVGA is another one that adds an external SATA controller for 2 more ports, going from 6 to 8. Shame.

CPU

AVX512 beast. Flat out there's no competition here: the 7950X totally dominates Intel's 11900K, and the 12900K is absent since AVX512 is fused off from the factory on the KS revisions and newer 12th-gen chips. AMD delivers a total knockout in AVX512, and hopefully RPCS3 will see superior gains across all their processors.
Plus there are no AVX offsets like Intel needs, where the chip downclocks to keep from overheating, because AMD is using 2x 256-bit units for AVX512. So no offset, nothing, just pure performance.

Clock speed is a HUGE boost vs Zen 3. The older chips barely crossed 5GHz, while this 16C32T 7950X sits at 5GHz all-core boost. Ultra high performance with no big.LITTLE garbage at play. ST boost goes to 5.7GHz (Raptor Lake will have 5.8GHz for its TVB). The tradeoff is high temperatures and cooling requirements for all Ryzen 7000 Raphael processors. Even the 7600X gets to 5.4GHz all-core.

The IPC boost matches AMD's marketing, no shenanigans. Based on the SPEC and Cinebench scores at AT, Ryzen 7000 seems to be the king of all processors.

Gaming performance is right there. It doesn't dominate by a huge lead; it beats the 12900K in some games and loses in others. The 7600X, on the other hand, consistently beats the 5800X flat out: 6C12T > 8C16T. This is what I wanted to see from RKL when Intel went and cut 2C4T off, but it did not work out there. AMD pulls it off here. I can see the 7900X totally beating the 5950X across all workloads even though the Zen 3 part has 4C8T more. The 5800X3D still stays champ in many gaming benches though lmao.

TJmax is now 95C for Zen 4 Ryzen 7000 Raphael, with 115C as the OC limit. It looks like the tolerance of the new TSMC N5 is very high. Too hot a CPU. It's 250W now; AMD kicked the tree-hugging BS to the curb. I like it. Let the x86 processor do its work and don't castrate it. But ofc seeing a CPU run at 90C all the time is a bit on the heavy side. The voltage, however, is a very normal 1.0-1.2V now, unlike Zen 3; maybe that's a good thing, as at least you don't have high voltage AND high temps. ASUS also has an SP rating for AMD Ryzen 7000, but does it even matter when the CPUs run this hot? I think the top of the R9 stack will always get a high SP rating.

Final Thoughts

No big.LITTLE BS drama.
Full proper cores and very high MT performance; the 7600X is a killer chip. They managed to fix a lot of technical issues, though some remain to be seen in public hands (platform stability). Chipset bandwidth is a bummer, but I do not think many even give a single F. I doubt many even know about chipset DMI and lane bifurcation on the mobos; if they did, HEDT would not be dead.

Cooling

AMD axed all that power and temp nonsense and let the CPU stretch its legs properly, just like Intel. BUT the downside is that, unlike their old CPUs, these need real beefy cooling, especially the top-end parts. Just like Intel's i9 series, the Ryzen 9 needs a big AIO to maximize its clocks and performance. On the AMD side, though, even the Ryzen 5 now demands high-end cooling, as it also hits 90C+ on an AIO. That is somewhat problematic for air cooler people and maybe ITX owners. So basically, even at entry level you must get a big cooler; you have lost the choice of pairing a cheaper cooler with a cheaper SKU. Intel is not like this: an i7 12700K won't run as hot as a 7700X, and an i5 12600K won't run as hot as a 7600X. That's something.

Overclocking is probably non-existent and dead given how hot the processors are; we can barely push, I guess. How far can we even push when it already hits 95C out of the box, on a 360mm AIO at that? Like Intel's 11th and 12th gen, this is very, very dense on heat. It's basically not possible to OC much on this platform. With Intel, if you push it goes over 300W but the temps are controllable; here they are not...

Memory is also dependent on the 1:1 ratio mode, so if you hit 7000MHz the ratio will drop and we will have to see how it fares. With Intel it's all Gear 2. Pay more, try more and get HWBot points, but tbh no real performance boost worth having will come out of DDR5 this early in its life, from either camp.

ST and MT performance

The Ryzen 7000 (Raphael) Zen 4 design is the new IPC king.
On MT, AMD parts have had extreme performance like always. Maybe Raptor Lake will match it by a hair and pull ahead with an OC at an extra 100W and some fun OCing, but that's it; I do not see Intel winning MT by a huge margin on Intel 7 (the 10nm node) anymore. I think RPL will beat this on ST with its higher L2, DLVR and clocks.

Gaming is just not a strong suit for the top-end 7950X, but it is for the 7700X and 7600X. So it's an add-on bonus: you do not lose anything in gaming, and as a total package you get a good, strong gaming processor, which applies to the entire stack. In RPCS3 I can see these totally dominating thanks to AVX512 without any offsets. EDIT - Intel still leads in RPCS3 lol, I cannot understand how that is possible. TPU has it covered with RDR on RPCS3 emulation.

The downsides are high platform cost, X670E segmentation and high CPU cost like Intel, which to be honest is expected with DDR5 and PCIe5.0. The smaller % uplift vs Ryzen 5000 is another thing. Essentially, Zen 3 owners have not much growth to look at and little need to upgrade. But the IO die itself is a win now; those crappy USB issues should be gone, from my understanding.

Future Proofing

New buyers should wait until next year and buy when prices are cut, as it isn't worth much right now; same for GPUs. The platform is a new launch with longevity up to Zen 5, vs Intel's 2-CPUs-per-socket system. LGA1700 is done once Raptor Lake launches, so even if the 13600K adds more E cores, the platform is gone and maxes out at the 13900K. With an AMD Ryzen 7600X you can upgrade to 2-3 more SKUs down the line, but the price is paying more to AMD up front than for an Intel Z690 / Z790 platform.
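For anyone wondering what the PCIe4.0 x4 chipset downlink complaint above means in raw numbers, here is a rough back-of-the-envelope sketch. The helper name is my own, and it only accounts for the per-lane transfer rate and the 128b/130b line encoding used since PCIe3.0; packet and protocol overhead are ignored, so real-world throughput is lower.

```python
# Per-lane transfer rate in GT/s for the PCIe generations discussed here.
GT_PER_SECOND = {3: 8.0, 4: 16.0, 5: 32.0}

# PCIe 3.0 and later carry 128 payload bits in every 130 bits on the wire.
ENCODING_EFFICIENCY = 128 / 130


def pcie_bandwidth_gb_s(gen, lanes):
    """Approximate one-direction link bandwidth in GB/s (protocol overhead ignored)."""
    gigabits_per_lane = GT_PER_SECOND[gen] * ENCODING_EFFICIENCY
    return gigabits_per_lane / 8 * lanes


if __name__ == "__main__":
    for gen, lanes, label in [
        (4, 4, "chipset downlink this round"),
        (5, 4, "hypothetical PCIe5.0 downlink"),
        (5, 16, "PEG slot on X670E / B650E"),
    ]:
        print(f"PCIe{gen}.0 x{lanes} ({label}): "
              f"{pcie_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

So everything hanging off the chipset shares a bit under 8 GB/s each way, and a PCIe5.0 x4 downlink would double that.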

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Not an issue, but is it that I shouldn't post here, or do you just want the info cross-posted? I think most of the folks who care about new products and post about them are in the benchmark thread, and this habit of mine of writing pages of essays comes from the old NBR thread. Well, I can cut down on words, but I think some of the information is useful. Edit: Done, thanks.

-


*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

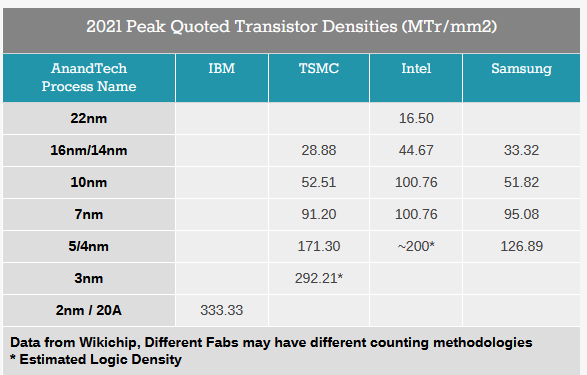

I think, to be fair, Intel 7 a.k.a. Intel 10nm is very dense vs the TSMC N7 node. Look at the chart: 10nm is the same as 7nm ofc, because Intel renamed it, and given the bad rep Intel got for almost a decade of 10nm delays, they probably just do not want that name associated with them anymore lol. I think the node is not great for outside products the way TSMC's fabs are, where almost everyone wants to make their chips, because Intel probably still builds its nodes only for its own processors. Maybe that's why Intel ARC is not made on that node and is done on TSMC N6 instead.

Coming to the AMD side, remember how Ryzen 5000 ran at 1.4V? Insanely high voltage on all Ryzen 5000 parts for under-5GHz clocks. No wonder the damn IO die was crapping out with BS issues and a lot of stability drama. The 5800X3D is fixed at 1.3V though. Ryzen 7000 now runs at 1.2V at much higher frequency too, with 5GHz+ boost clocks, thanks to TSMC N5 (a high-performance custom N5 for AMD, to be precise). I think that's a real improvement from AMD, to be honest. But for OC? I doubt anything is going to change. Their CPUs will probably still need PBO2 with XFR and cannot lock a high clock like Intel processors, so they are worse for OC and manual tuning. Time will tell though, especially with that 5.7GHz boost clock on Ryzen 7000 parts.

Intel will move to Intel 4 in 2023. Unlike the 14nm++++++ era, they cannot keep doing that anymore. Damn Intel, they butchered Rocket Lake on purpose. I wonder whether they plan to launch Meteor Lake with yet another chipset, Z890, or not. It's too frequent if you ask me, a new motherboard and new processor every year lol... I also wonder about AMD's Zen 4 X3D; maybe both will release.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

From what I know... Apologies for the long post.

First, about game AA technologies...

We used to have MSAA, Multi-Sample AA. It takes multiple samples per pixel and blends them down into the resolution we set, which is why it is ultra hardcore taxing on GPUs; it is a close relative of the super sampling technique. It does not lower image quality in any way. Only super high quality.

Nowadays almost all games use TAA, Temporal Anti-Aliasing. It reuses data from previous frames, often with effects such as ambient occlusion, shadows and lighting rendered at reduced quality. And TAA causes a blur / vaseline effect that reduces picture quality, especially in motion.

Modern Gaming and AA

Modern games cannot implement MSAA for 2 particular reasons.

The first reason is the lighting. With the rendering approach called "Forward Rendering", if we have a ton of lights on the polygons in the game and apply them all in one pass, the GPU hit is massive, because each and every light source has its data evaluated together with the textures, GFX effects etc. at the same time, so adding multisampling on top costs a lot. So developers moved away from Forward Rendering to Deferred Rendering, which has a separate rendering path and applies the lighting in later passes over the final scene, and that setup does not pair well with MSAA.

The second reason is that TAA lets developers target the trash consoles easily, as it doesn't need the heavy GPU and CPU power that MSAA does. And thus MSAA is dead now.

A notable game that destroys so many of today's AAA titles without TAA, and is 10 years old: Crysis 3. Crytek offered MSAA along with Nvidia's TXAA, which combines MSAA with a temporal filter. Still, MSAA is the best option in the game. Digital Foundry was drooling over the new Crysis Demaster Trilogy, and guess what? All of the trash Demasters have TAA baked in, which the originals never had.
In motion MSAA destroys TAA without a single question, and with its super post-processing and extreme tessellation the original Crysis 3 looks way, way better, not to mention the other massive downgrades in the new console ports. The old Crysis trilogy was built for PC first, which is why Crysis 2 and 3 melted the PS3 and X360 and the PS4 and XB1 never got them. And the modern Demasters? They run on Switch LOL.

Many modern games have TAA baked in, and you cannot remove it; if you do, the game breaks its reflections, lighting, LOD effects etc. One of those is Metro Exodus, so we have to accept the low-res TAA tech no matter what.

Resident Evil 2 and Resident Evil 3 do not force TAA, which is why the games can look super crisp. However, they later added the RE Engine upgrades from RE8 Village, which makes TAA mandatory because RE8 has it on top of RT. Not only were the games ruined with TAA, they also took a massive downgrade in ambient occlusion and lighting effects.

Red Dead Redemption 2 suffers from a horrible TAA implementation that blurs out all the beautiful vistas, and it cannot be removed at all. A massive shame, since it is the best-looking game with a real game inside it, unlike many that try (CP2077, not a game). They officially added FSR2.0 recently, after a community mod had added it first.

DOOM 2016 has an SMAA implementation while DOOM Eternal uses TAA. This is why DOOM 2016 looks much, much better in fidelity than Eternal, since id Software ditched idTech 6's SMAA for idTech 7's TAA. Since the DOOM topic came up, there's a mod called Carmack's Reshade for DOOM 2016, and man, that thing looks BALLS TO THE WALL solid. Give it a shot: the sharpening is so well done that it clears up the game and adds an insane oomph factor.

Control was used a lot in RT demos, especially with DLSS to fix the perf hit RT causes. But guess what? We can remove TAA via the game .exe itself and enjoy a sharper, crisper image at native resolution.
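To show where the TAA blur in motion comes from compared with a plain supersample resolve, here is a toy sketch on a single row of pixels. Both function names are my own. The TAA part is only the basic history blend; real implementations also reproject the history with motion vectors and clamp it, which is left out here on purpose.

```python
def supersample_resolve(samples, factor):
    """Average each group of `factor` high-res samples into one output pixel."""
    return [
        sum(samples[i:i + factor]) / factor
        for i in range(0, len(samples), factor)
    ]


def taa_accumulate(frames, alpha=0.1):
    """Blend every new frame into a running history buffer.

    out = alpha * current + (1 - alpha) * history
    A small alpha smooths edges well but keeps old frames around longer.
    """
    history = list(frames[0])
    for frame in frames[1:]:
        history = [alpha * cur + (1 - alpha) * old
                   for cur, old in zip(frame, history)]
    return history


if __name__ == "__main__":
    # Supersampling: 8 samples rendered for a 4 pixel row, averaged in pairs.
    print(supersample_resolve([0, 0, 1, 1, 1, 0, 0, 0], factor=2))

    # TAA: one bright pixel moving right across three frames.
    moving_dot = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    print(taa_accumulate(moving_dot, alpha=0.5))
```

In the moving dot case the final row still carries brightness at the two positions the dot already left, which is the ghosting / smearing trail. The supersample resolve only ever looks at the current frame, so it has nothing to smear.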
Enter DLSS and FSR DLSS (Deep Learning Super Sampling) Closed Source Proprietary - v1.0 did Temporal Upscaling it uses motion vectors with Sharpening pass on the image which uses TAA, DLSS improves that image because it technically removes that blur which TAA introduces BUT it is only worth on 4K since at 4K the 1080P is used and upscaled to 4K, for 1080P Nvidia uses a very low res picture and then applies the Temporal upscaling. Then the DLSS had all that Ghosting / Shimmering after effects which most of them got improved by 2.x now. Since it is closed source, needs Nvidia to run the game on the servers and generate the data which the RTX GPUs use when the game is loaded through Driver using that nvgx.dll and there's some DLL mods too, like old games which did not get improved DLSS versions they can use the newer DLSS found in the new games as the dll is distributed through the game files and TPU hosts those files. There are ton of presets here - DLSS Performance, Quality, Balanced etc Now in 2021-2022, AMD started FSR (Fidelity FX Super Resolution) 100% Open Source - v1.0 but it did not need any special Tensor Cores or anything on the GPU silicon. It was not Temporal either, it used Spatial Upscaling only. Temporal upscaling factors in the Motion Vector data so the Spatial Upscaler FSR1.0 was not good only helped very poor performing cards. Then AMD upgraded it with FSR2.0 which uses Temporal Upscaling, this does not even use TAA it flatout "Replaces" TAA. Also it has some issues with very thin lines it is improving (RDR2 FSR2.0 has power lines becoming thick). They fixed some more issues with ghosting, shimmering and etc with latest FSR2.1 so it's constantly evolving and open for all GPUs across all ecosystems. Same like DLSS this is also useful only at 4K resolution not at 1440P/1080P since again it is ultimately upscaling only. Same like DLSS FSR also has presets - Quality, Performance, Balanced etc So what AMD did ? 
No GPU gimmick cores, a.k.a. Nvidia's chest-thumping Tensor Cores mumbo jumbo, and it flat out punched Nvidia's proprietary DLSS2.x to the ground. There's no particular visual fidelity difference anymore between FSR2.x and DLSS2.x, even in motion, since FSR now uses temporal data and therefore motion vector data as well. So Nvidia comes up with Frame Insertion to counter, lol. There are also a lot of game comparisons at TPU that we can look at. Again, AMD did it without any proprietary technology: all games are getting FSR2.0 mods, the RPCS3 emulator has included it in its code, and the Xenia X360 emulator has added it to its code base too.

DLSS3.x

As mentioned in many of the videos posted here, Nvidia is adding totally new fake frame data. Unlike the old upscaling techniques, this adds new data: say there are frames 1, 2, 3, 4 in total; with DLSS3.x, frames 2 and 3 are not real rendered images. They interpolate from the motion vector data and the image and add a frame, which causes lag and an input latency spike. So they added Nvidia Reflex to DLSS3.x. Reflex is available for almost all GPUs; it adds some overhead on the CPU but reduces frame time latency.

How is Nvidia claiming reduced CPU load when you have more FPS? That is not how it works: the more FPS there is, the more CPU work needs to be done, which is the exact reason we bench at 1080P to measure CPU performance and not at 4K, where it's GPU dependent as we all know. So how does Nvidia say no CPU hit? Because there's no frame rendering, lol; it's a "fake frame" created by their so-called Tensor cores. This causes artifacts, latency spikes and other unwanted effects whose extent we do not know yet, and we do not know how the picture quality fares.
Nvidia is not only upscaling TAA data, now they are adding fake data too, all exclusive to the RTX 40 series, claiming Ada has the OFA (Optical Flow Accelerator) even though it's present on Ampere. If they added DLSS3.x frame generation to Ampere, it would nuke the 4080 cards without question. This is gimping on purpose, and it essentially kills DLSS2.x now. DLSS3.x will run on Ampere and Turing, but the Frame Interpolation doesn't work on the old cards.

Conclusion

As you already mentioned, many people simply consume such gimmicks. Not many will think this deeply about the tech; they simply accept the low-quality BS called upscaling. That is how Nvidia was able to convince everyone about their "DLSS Magic" and keep them buying Nvidia only: buy a $1600 card, use upscaling, lol. Fantastic. This is just like how BGA cancer is overrunning the real PC, and how Microsoft's strategy is fine by many. But probably because the new 3.x Frame Interpolation kicks Ampere users to the curb, a lot more people are speaking up, and adding fake frame data is also too much on the nose.

Just look at that Cyberpunk 2077 RT Overdrive mode, lmao: 21FPS at 4K, install the magical upscaler with fake frames, you get 120FPS and everyone claps. At this point why even buy mirrorless or DSLR cameras, high-quality 1" sensor cameras or IMAX Arri Alexa cameras? Just use a smartphone sensor and apply AI, the so-called "Computational Photography", or buy a 2K OLED, use an upscaler and get away with it, right? To me that's how all these upscaling technologies sound (both FSR and DLSS; hey, at least one is free) that have polluted the PC space.

As @Clamibot said, instead of those gimmick tricks more CUDA cores would have done a much better job. Taking a 50%+ perf hit for this so-called RT and then implementing upscaling seems counterintuitive, especially when we talk about PC, which is famous for fidelity. Nvidia spews "8K Gaming" when in reality it's not 8K but an upscaled image.
RT is nowhere near as great as rasterization, especially when we see old games destroying new ones in pure art style. Look at Batman Arkham Knight: it's from 2015 and looks absolutely stunning next to so many of the garbage games we have. I would rather use DSR (Dynamic Super Resolution), an awesome feature from Nvidia: render the game at a higher resolution and display it at your native display resolution. Not only that, they upgraded it. It's called DLDSR (Deep Learning Dynamic Super Resolution), and it uses the Tensor Cores to make the image look even better when it's scaled down to the native display resolution after rendering at the higher one. Neither of these is an upscaler, which is why they are superior: they improve the native resolution image. -
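To put numbers on the "at 4K the 1080P is used" point and on DSR rendering above native, here is a rough sketch. The per-axis scale factors are the ones AMD publishes for the FSR 2 quality modes; DLSS presets are similar but not identical, so treat this as FSR-only. The DSR part assumes the factor shown in the driver is a pixel-count multiplier (so 4.00x means 2x per axis). Function names are made up for illustration.

```python
import math

# Per-axis scale factors published for the FSR 2 quality modes.
FSR2_SCALE = {
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
    "Ultra Performance": 3.0,
}

def fsr2_render_resolution(out_w, out_h, mode):
    """Internal render resolution the upscaler starts from (hypothetical helper)."""
    scale = FSR2_SCALE[mode]
    return round(out_w / scale), round(out_h / scale)

def dsr_render_resolution(native_w, native_h, factor):
    """DSR renders ABOVE native; assumes 'factor' multiplies the pixel count."""
    per_axis = math.sqrt(factor)
    return round(native_w * per_axis), round(native_h * per_axis)

if __name__ == "__main__":
    for out in [(3840, 2160), (2560, 1440), (1920, 1080)]:
        for mode in FSR2_SCALE:
            print(out, mode, "->", fsr2_render_resolution(*out, mode))
    print("DSR 4.00x on 1080P ->", dsr_render_resolution(1920, 1080, 4.0))
```

At 4K, Performance mode starts from a full 1080P frame, while at 1080P the same mode starts from only 960x540, which is why upscaling holds up far worse at lower output resolutions.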

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Nvidia's CEO goes even further in defending the garbage move. He says Moore's Law is dead. Yeah sure, lol. Have a look at the American ETFs and the NASDAQ and NYSE markets: as if people will rush out to buy when they can barely feed themselves. He will say any sort of BS and lies to sell this, lol. Best part? Intel says Moore's Law is alive (Aug 2022 article).

Mr. Ngreedia Green Goblin got those Ampere GAxxx chips for peanuts on Samsung's 8N node, which is a custom 10nm node. And who else used it? NOBODY except Nvidia: unlimited supply and half the cost of TSMC 7N wafers. That guy knew mining would be booming, which is why he fitted Ampere with GDDR6X and its custom PAM4 signalling for extreme memory performance, and even that is cheaper than GDDR6. All of that was possible because of Moore's Law. He got everything for cheap. Maybe that's one of the reasons GA102 was shared across all the variants from 3080 to 3090. But he thought the 3080 with GA102 for $699 was his greatest mistake. He has fixed it now: AD104 goes into an xx80 GPU, like GP104 in the Pascal era (the 1080 was GP104), but do note the 1080Ti is a GP102.

Also, if you look at the pricing of existing Ampere stock, the cards are not being slashed anymore; the prices are fixed now. Probably to save the AIBs, because if Nvidia axes more off the MSRP of these Ampere cards, MSI, GB, EVGA, ASUS, Gainward, Palit, Zotac, Galax etc. will actually make a loss on their thin margins. So he kept the pricing intact, took the 3090Ti as the pricing baseline and applied it to RTX40:

3090Ti: ~$1150
4080 12GB: $900-1000. It either loses by 15-25% in raster or matches, or gains using the fake frame tech, so it is priced lower.
4080 16GB: 25% faster than the 3090Ti in raster and 25% more expensive.
4090: 50-60% faster in raster from what they have shown, 2-4X with the fake frame tech, 60% more cost.

*Raster performance is from Nvidia-picked titles: RE8, AC Valhalla, The Division 2.
**All that after he unceremoniously charged 2X THE COST going from RTX3080 to RTX3090 for a 15% max perf difference, which is now normalized.
Expect the 4090Ti MSRP to be normalized for the RTX5000 cards. People need to vote with their wallets. AMD adjusted pricing a bit. Although the 6900XT is literally the same as the 6800XT, they are better on pricing: the 6800XT fights a 3090, beats it in some titles, and it's $600 right now. Shame people do not buy them because of garbage DLSS and RT. Ironic, since DLSS 2.x is dead now thanks to FSR2.0 AND DLSS3.x, a double whammy. Going by current Ampere and RDNA2 stock, we are in for a GPU overstock slump. I wonder how AMD will respond with RDNA3 prices. They clearly have 2 choices: use the golden chance and knock Nvidia off by severely undercutting, OR reduce prices a bit and once again not gain much market share. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

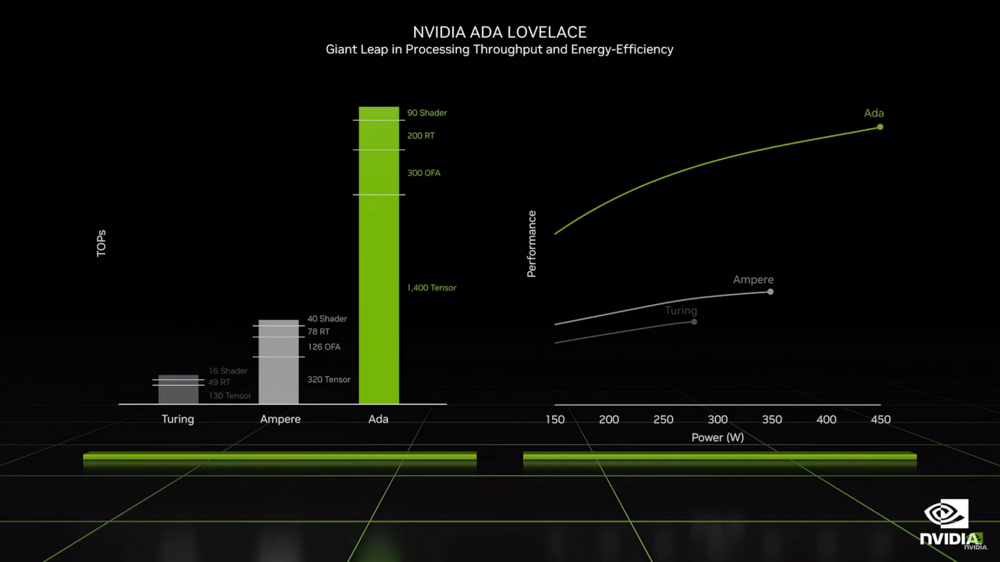

The 4090, a cut AD102 die with ~16000 CUDA cores, is fast at 450W, with AIBs offering up to 500W+ on the new 3090Ti-based PCB design, which fixes all the flaws of the 3090. The gen-on-gen performance is good, but not the 2X (i.e. 100%) Nvidia claims. Looking at RE8 and AC Valhalla on the slide I mentioned, the 4090 is more like 50-60% ahead, which is not small to be honest and a good improvement. It is also packing roughly 50% more CUDA cores than the 3090/Ti, 16384 vs 10752. It looks like Ampere 2.0 with the SER (Shader Execution Reordering) HW feature, new RT cores and new Tensor cores. And the 4X is just blatant DLSS3.x fake frame addition, which doesn't work on Ampere at all. If they made it work on Ampere, that 4080 12GB card would be DOA, as it is already only within margin of the 3090Ti in RE8, a title that has RT and no DLSS3.x. It's $900 (no FE, only AIB, so add a $100+ premium), so the existing $1000 MSRP 3090Ti FE/AIB beats a brand-new 40 series card that also has a low 12GB of VRAM. Same story with the cores: AD104 has 7680 CUDA cores vs 10752 on GA102. That's close to 30% fewer cores for almost similar performance, sometimes losing, which makes sense to me and cements that Ampere 2.0 thought.

Now, AMD RDNA3 *might* match a 4090 in rasterization with the 7900XT, just going by their RDNA2 execution. Both DOOM Eternal and Red Dead Redemption 2 run way faster on the AMD RDNA2 Radeon 6900XT than on the Nvidia 3090Ti, although at 4K AMD's lead is not that big, and with the 6950XT the gap closes. But Nvidia has its ace ready for the absolute king card of this gen: the full AD102 die with 18432 CUDA cores (the upcoming 4090Ti, $2000 MSRP, 600W), which is about 2000 cores more than the 4090. For comparison, the 3090 had ~10500 CUDA cores and the 3090Ti ~10750, just ~250 more, barely an improvement because the 3090 was already almost a full die. So they have a better backup plan against AMD this round. As for RT, I doubt AMD will have a competing option, and for the DLSS3 fake frame insertion technique, no idea, lol.
Speaking purely from a marketing POV, AMD might lose because DLSS3 frame interpolation makes up Nvidia's 2-4X claim (absolute BS, but it lines up with the garbage rumors that easily claimed a 2X advantage for Nvidia). However, RDNA3 has a new matrix math engine called WMMA. It could be a counter to RT or to DLSS, or something else entirely, like the SER that Nvidia announced for Ada.

As for the TSMC 4N that Nvidia announced, it is not a big node jump, bro Papu (TSMC's own website states flat out that 3nm is the next full node shift after 5N). It's 5N with a few changes per Nvidia's specifications, just like AMD's Ryzen 7000 uses a high-performance TSMC 5N, and that is already showing its performance domination below. We do not know that part for Radeon yet, but Radeon 7000 RDNA3 uses MCDs (chiplet dies for cache), which are cheaper to make and scale better, on top of a huge cache with lower power requirements than Nvidia. Nvidia made a massive node jump from Samsung 8N (10nm) to TSMC 4N (5nm), vs AMD going from 7N on RDNA2 to 5N on RDNA3.

AMD Ryzen 9 7950X breaks four HWBOT world records with AIO cooler

Also, I do not like these new 4000 series 4-slot cards. The 3.5-slot AIB 3090 and Ti cards already ruin the mobo PCIe slots for us. On PCIe3.0 mobos we barely get an extra 3.0x4 slot and some x1 slots; with 3.x a.k.a. 4-slot cards you are out of options and cannot use anything. I think only the FE cards are strictly 3-slot. For Ampere, the 3-slot FE existed only for the 3090/Ti. Not sure about the 4090. -
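A quick sanity check of the core-count comparisons in this post. The counts are the published CUDA core figures for each part; the helper name is just for illustration, and core count alone obviously ignores clocks and architecture changes.

```python
# Published CUDA core counts for the parts discussed in the post.
CUDA_CORES = {
    "RTX 3090": 10496,
    "RTX 3090 Ti": 10752,
    "RTX 4080 12GB (AD104)": 7680,
    "RTX 4090": 16384,
    "AD102 full die": 18432,
}

def pct_change(new, old):
    """Percentage change going from 'old' to 'new' (hypothetical helper)."""
    return (new - old) / old * 100.0

COMPARISONS = [
    ("RTX 4090", "RTX 3090 Ti"),
    ("RTX 4080 12GB (AD104)", "RTX 3090 Ti"),
    ("AD102 full die", "RTX 4090"),
    ("RTX 3090 Ti", "RTX 3090"),
]

if __name__ == "__main__":
    for new, old in COMPARISONS:
        delta = CUDA_CORES[new] - CUDA_CORES[old]
        print(f"{new} vs {old}: {delta:+d} cores "
              f"({pct_change(CUDA_CORES[new], CUDA_CORES[old]):+.1f}%)")
```

So the 4090 carries about 52% more cores than the 3090Ti, the 4080 12GB about 29% fewer, and a full AD102 would add 2048 cores (12.5%) over the 4090, a much bigger step than the 256 cores that separated the 3090 from the 3090Ti.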

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

After analyzing more of Nvidia's Ada debut, I found some interesting observations and mega BS. Let's look at the 4080 12GB (AD104) against the RTX3090Ti first, then DLSS3.0 and the rest.

See the graph and the games. In RE8 the "4070", let's call it what it is, has lower raster performance, and the new 4080 is just a bit faster. That game runs on RE Engine and is not even that heavy on the GPU, to be honest. AC Valhalla is unoptimized rubble with PentaDRM, but it is GPU heavy and CPU heavy too. Same thing repeats there. I honestly do not know much about WH40K; it shifts too much, so I'm going to ignore it. MSFS? That one is very weird, since the performance scaling is totally blown out here. So they have to be mixing DLSS and raster in these graphs, even in the first block. The second block, Portal RTX, Racer RTX and Cybermeme 2077 (ultra-unoptimized overrated nonsense), all use the new RT Overdrive, and if you watch their own video, which I will link below, the perf is crawling at 21FPS. Then suddenly with DLSS3.0 it jumps to around 100FPS, nearly 5X higher. So yeah, that's how they got 4X.

Now that's out of the way, let's talk DLSS3.0. Nvidia is saying the OFA is exclusive to Ada Lovelace, so DLSS3.0's new AI Frame Interpolation works only on Ada. The rest of the RTX GPUs, Turing and Ampere, will run base DLSS3.0, which is 2.x with maybe some improvements. But looking at the graph they posted themselves, the OFA exists in Ampere; it's in their own graph, see below. But yeah, it's only for Ada, lol.

They are also adding frames!! Fake frames, totally fake AI-generated interpolation. That is why they can get such massive FPS numbers and claim reduced CPU load. It's not even real, lol. No wonder there's no CPU load, as the GPU is directly injecting its fake data. That adds a latency hit, and to overcome it they made Nvidia Reflex standard in DLSS3.x to reduce the damn latency. The funny part is that if FPS is low even with DLSS, the latency will be even higher. And moreover, see that OFA (Optical Flow Accelerator)?
It's right there guys!! 128 OFA on Ampere vs 300 OFA on Ada. LYING through their teeth.

Now the game suite. Where is DOOM Eternal? Where's Metro Exodus? That is the real title for RT performance, because it has an RT-ONLY build called Enhanced Edition with a mandatory requirement of an RTX2060 with RT cores, since RTGI replaces all in-game lights with RT. It uses an older DLSS, and ofc the game was finished long ago so the devs will not update its DLSS2.x, but it could still be used to show the performance boost of so-called "Ray Tracing" and "DLSS", lol. Especially DLSS2.x on the new Ada vs Ampere. Nope, we do not get anything. I bet the reviewers won't show it either, since it's all DLSS3.x garbage marketing.

Next, where is Red Dead Redemption 2, the king of raster and photorealism, and on top of that a game with an insane level of gameplay and technology? Plus it has the latest FSR2.0 update (version 2.1 fixes even more, for FREE, on all GPUs) and DLSS2.x (FSR2.0 essentially knocks Nvidia's proprietary garbage to ashes). But yeah, no raster performance or DLSS performance showcase, since it's all hand-picked DLSS3.x titles, and old games won't get support anytime soon or will be abandoned totally. Along with Ampere.

Man, to be honest, I have really decided not to own this shamware company's products; they are really awful. Fake frames, gated DLSS (even if it's fake frames), an insane rip-off with the silicon tiers. Remember the 3090Ti has a $1000 MSRP now, so how can anyone compare against the defunct old 3090 price of $1500??? This 4090 should be $1200 tops, but now they are normalizing this garbage pricing.

Pricing and Others

Write it down: there will be an RTX4090 Ti no matter what, because that's what the full AD102 die is for, at $2000, and an RTX4080 Ti, again a full AD103 die, will slot in between at $1300-$1400. And the RTX5090 will go even higher, with a $2000 debut.
Maybe they will add DisplayPort 2.0 then, since this first batch of Ada cards does not have DP2.0. So much for MUH 8K Ray Tracing, lol, when 4K 144Hz itself is castrated by chroma subsampling. Dual 4K 144Hz? Nope, the low data rate of trash DP1.4a will slash the bitrate. The bonus is PCIe4.0 only. Yes, it doesn't really matter, but you are paying top dollar here. The mobos have PCIe5.0 SSD slots (even if they are useless to me: no storage space increase, only speed, which is worthless) and PCIe5.0 x16 and x8 slots, but nothing to use them with. SLI / NVLink is also dead; they removed it, so anyone using CUDA for ML can say goodbye to their NVLink SLI workloads and buy more certified CUDA through the RTX A series (rofl, they killed the Quadro brand). Thanks Nvidia, the way the customers are meant to be played. I just hope AMD kicks this new DLSS3.x to the curb in some way by optimizing FSR, like they did to DLSS2.x, which is now defunct, lmao, while FSR2.0 gets added by mods and even emulators. Fingers crossed, but in a duopoly you cannot really expect much to be on the consumer's side. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Nvidia shafted Ampere owners big time, and new owners at the same time as well, lol.

Quick takeaways (spec sheet link):

- TSMC 4N, not 5nm
- 500W is confirmed
- NVENC is barely improved: the encoder (now 8th gen) adds AV1 for streamers, and the decoder is the same 5th gen version.
- DLSS 3.x is only for the RTX40 series from the looks of it. Not confirmed yet, but the spec sheet clearly says so, so Ampere owners got shafted hard. RIP I guess. Anyway, I never liked this upscaling nonsense, especially since it looks worse at 1080P and 1440P, and FSR2.0 is moving forward very well. Ironic, isn't it? Nvidia owners relying on AMD's FSR2.0 more than on DLSS. I bet DLSS2.x is now EOL.
- Jensen says DLSS 3.x uses Ada Lovelace's new OFA (Optical Flow Accelerator), but in the perf charts the OFA exists in Ampere too, see below.

As I said earlier, this trash company is becoming like Apple. Pricing is horrendous. They jacked it up a LOT.

- RTX4090 $1600, 24GB G6X, AD102 (looks like it has NVLink on the side, not the top), $100 increase over the 3090. 2X raster over the 3090Ti, 4X in RT, 450W
- RTX4080 $1200, 16GB G6X, AD103, gimped new silicon vs the Ampere lineup but let's call it the real xx80. 450W again, 2X raster over the 3080Ti, 4X in RT, 320W
- RTX4080 $900, 12GB, AD104, gimped hard, fake xx80 lol, a 4070 rebrand. 285W. Probably similar perf as above; no idea, as their slides say it's the same.

A performance increase in raster is what I was after, and it looks good but also kind of expected. However, pricing is too high now, and AIB cards will add $100-200 on top, so an xx80 card will start at $1000 and go up to $1300+. No wonder EVGA left; with this ridiculous pricing they cannot stay alive and make money. At this rate RTX5000 Blackwell will have even worse pricing. By the 2030 Great Reset things will have become so much worse in all aspects that PC DIY might even look like owning some luxury 6-digit car. Unfortunate BS tbh. Also, are the new games even worth it?
With all the socio-political nonsense, not to me (the GTA VI plotline got leaked, and if that's true it's horrible propaganda; I hated that Mafia 3 garbage for this reason). I'm done, I'll stick with good old games. At best maybe 1 to 5 games in 2-3 years are worth playing. I do not have much hope in this industry, especially with that new GaaS model everyone wants on top. The RTX30 series will co-exist with these, but I think the 3080 will be gone and only the 3060 stays, starting again at $329. WTF, lol. There's no 4060, so Ngreedia Green Goblin is milking it to death. Awful business. You know, AMD could axe Nvidia totally by pricing their cards at least $250 lower, using their open source FSR2.x and improving on it, and giving more support to ROCm; then Nvidia's CUDA could be kicked to the curb for good. But I really do not think they will cut prices, even if that would make them super popular and win them market share. I hope for the best, or else the future of PC DIY looks much, much worse for tech that depreciates super fast. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

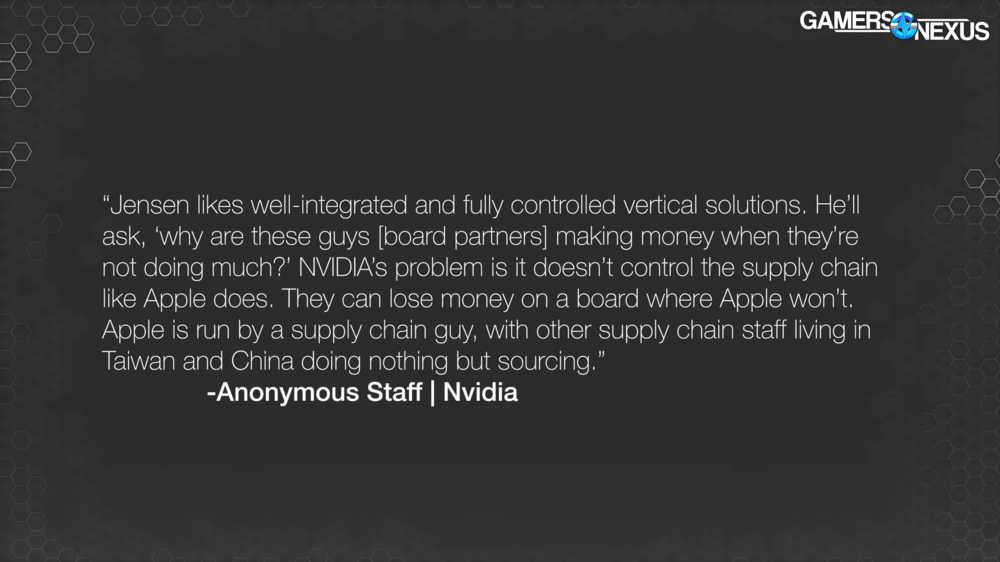

Look what the Green Goblin CEO thinks. Just like almost every other garbage-spewing company out there, they want to become like that horrendous anti-consumer corporation, Apple. Apple kicked Nvidia to the curb a long time back. They probably want that HPC money, so they tried to buy ARM and got beaten hard. Good. Not because ARM is great, but because Nvidia's business practices are bad. Now it's Apple-like vertical integration....

Well, I will go off on a bit of a tangent, but the core gist is the same. Look at Google: they axed Android's open source formula by crippling the AOSP apps and making the OS dependent on Play Store API blocks, and they nuked the filesystem and turned it into iOS. Samsung shamed Apple about the 3.5mm jack and the notch; now their tablets have notches, and there are no more chargers, SD cards etc. Microsoft? Surface is pure trash, with BGA thin-and-lights taking more of the spotlight, the Apple UI copied into dumbed-down trash for Windows 11, and even the Win32 shell downgraded to the point of no return. What's the latest? eSIM. The iPhone 14 in the USA doesn't have a physical SIM slot anymore. So basically, skipping parts and components and going fully vertical equals more greed. Not surprising that this is the new motto of Nvidia, who don't respect their own damn AIB partners.

Jensen Huang's famous quote: "The more you buy, the more you save". I respect the guy for building a company, but scamming the damn out of consumers is absolute trash behavior. The GTX970 VRAM fiasco with its 3.5GB. Heck, the 3080 was gimped pretty badly on the VRAM front as well. How about the RTX 2070 getting a low-end silicon cut, only to be replaced the next year by the 2070S with the actual silicon grade? Ampere 3090 cards failing in New World, that garbage MMO from Amazon (thanks to garbage power delivery on all 3090 cards, only the Ti fixes it, per Buildzoid; wish I had watched the video before), the cancerous Falcon firmware to protect the vBIOS, MXM axed and replaced by trash Max-Q filth. What not. No proper drivers for Linux on top.
GN's video is pretty much spot on from start to end. Good video by Steve. EVGA tried a lot during the mining craze and went way overboard by ordering too much, and now we are post GPU crash with inflation at 9% in America, where EVGA primarily operates, unlike ASUS, MSI and GB. Nvidia, on the other hand: absolute trash practices. Remember the 3080Ti price? Until the last minute nobody expected the $200 markup. Add LHR, and an extra-VRAM DLC type of scheme for the upcoming 40 series. Also, EVGA cannot simply make Radeon 7000 cards, because that needs a ton of testing and QC plus a lot of work. EVGA's AM4 X570 board was also too late to market. No wonder they are taking this stance. And Kingpin is leaving as well. TiN leaving already put a dent in EVGA, imo; the xdevs website pages for Z490 and up do not exist at all. Shame that a respectable company is leaving the market. This will have a huge impact on them. The mobo business is so small now that they might not even prioritize it much; the Z790 DARK is probably already designed. I hope AMD does better marketing and PR and beats this company so that its market share drops and it starts to think better. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Thank you very much, brother Fox. I will try a few tricks and let you know.... About the weird HDMI behavior on this board: the moment I turn on CSM, HDMI won't work through either the iGPU or the PEG. Something is blocking it.

Also, from the little time I have spent with the ASUS BIOS and MSI Click BIOS 5: I like ASUS, it's way too good, and I think that is why many still buy ASUS. I have never used EVGA, but whenever I watched Luumi's videos, they really do make a SOLID BIOS. The only unfortunate parts I noticed are that their BIOS updates do not come at the pace of ASUS, the Z590 basically cannot run 10th gen properly on their boards, and their damn LED BIOS code indicators on Z490 and Z590 were prone to failure, even on X570 (they only changed it with Z690). If that was not the case I would have bought the Z490 DARK the moment you had it up for sale 🙁 I believe TiN leaving them is a big negative. EVGA also gives the option to shut off PCIe and SATA ports totally, every single one of them, even with physical damn switches on the motherboard, which is the best thing! Plus the neat little U.2 connector. I remember you even asked them to let us disable the DAMN INTEL ME, which they did!!

ASUS only lets you disable things to an extent, I think, not much at all. ASUS also has V_Latch for direct VCore reading without the whole shenanigans, plus OC switches. But their QC and other nonsense is really off-putting.

MSI Click BIOS 5 is super easy and everything is in there for the basics, but there's nothing to disable except USB ports and WiFi/BT, and their Advanced settings are just barebones vs ASUS's Advanced options. I think even my AW17's Insyde H2O has more settings. The BIOS switch is good, the Reset button works like the Flex key on ASUS, and the BIOS LED indicator is also nice, which ASUS has as well. But MSI has an 8-layer PCB with 2oz copper, unlike ASUS cheaping out with just 6 layers. For OC, I think they have plenty of options, like both EVGA and ASUS.
Thunderbolt 4 can also be disabled, but I just learnt that Intel's Maple Ridge TB4 controller automatically stops working with the CSM BIOS setting. So even if I dual boot Windows 10 on this board, both TB4 / USB-C ports on the rear I/O won't work and are useless, as CSM is needed for my Windows 7, or heck, for any MBR install. And ofc ReBAR needs UEFI only. I just remembered you are running Windows 10 LTSC on MBR too, is that correct? Truly sad how things are turning out on the tech side. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

So I hooked the machine up to my computer using HDMI input rather than setting up the whole monitor again. It gave codes A2 and A6 and no output; I was thinking the iGPU would do it. So I updated the BIOS, same thing. Then I suspected the DRAM, so I painfully removed the 2 DIMM sticks of the 2nd set with the DH15 still mounted. Same thing happened. Then I plugged in the GPU, the output worked and I was able to get into the BIOS. Once in the BIOS I enabled the CSM option to test Windows 7. Suddenly the HDMI output from the GPU showed nothing. That was all last night. This morning I plugged it into the monitor and it shows output properly. Not sure why HDMI is iffy vs DisplayPort with CSM; maybe I'm just seeing things wrong.

Now for Windows 7. It boots off any USB 2.0 or 3.0 port, detects the drive and starts Setup (the Win7 MBR thumbdrive I made is a USB2.0 device with all the xHCI USB drivers from MDL and the essential updates like the NVMe hotfix, the i225V LAN driver and the SHA256 update). But unfortunately none of the USB devices work once it loads the Windows 7 setup page. My early plan on the APEX was to use PS/2, since it has the port directly, and a SATA ODD on the external ASMedia SATA controller, since that has native ODD bootup for Windows 7. MSI doesn't have any external SATA controller and no PS/2 option, only 2x USB2.0 on the rear I/O. One more thing I noticed: in MSI Click BIOS 5, the Logitech combo KBM on the USB2.0 port lags badly. Yeah, this Z590 Ace board has 2x USB2.0 ports.

@Mr. Fox was able to successfully get Windows 7 on the Z690 Unify. Can I please know how you got it running? Did you use a PCIe to PS/2 card or a PCIe to USB2.0 card, or did the mobo directly allow you to install? I'm using a Logitech combo receiver for KB and mouse, no dice. Thank you. Also, I remember you always prefer using an ODD and then updating from there, so please suggest any tips. Selecting components is one thing, and building to your needs is another. The Windows 7 part really is very tedious.
But I want this since it's the last time that OS will ever run and for memories. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Thank you Mr. Fox, yeah, I was also thinking it's really the top max limit. I was checking the manual too.

Max overclocking frequency:
- 1DPC 1R: max speed up to 5600 MHz
- 1DPC 2R: max speed up to 4800+ MHz
- 2DPC 1R: max speed up to 4400+ MHz
- 2DPC 2R: max speed up to 4000+ MHz

By that table, 2 DIMMs per channel with dual rank tops out at 4000MHz, and I doubt any CPU can go beyond that rate. But I was really not sure, as many run just two DIMMs since that is best for OC too. I was just thinking that if it works, I could get 64GB of memory at low latency. -
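For anyone wanting to check their own kit against that table, here is a small sketch. The speeds are copied from the manual excerpt quoted above; the "+" entries are treated as "rated value, may go higher", and the function names are made up for illustration. It only compares against the board's rated figure and says nothing about what a particular CPU's IMC will actually hold.

```python
# (DIMMs per channel, ranks per DIMM) -> (rated max MHz, manual shows a "+")
# Values taken from the board manual excerpt quoted in the post.
MAX_OC_MHZ = {
    (1, 1): (5600, False),
    (1, 2): (4800, True),
    (2, 1): (4400, True),
    (2, 2): (4000, True),
}

def rated_max(dimms_per_channel, ranks):
    """Return the (speed, open_ended) pair for a population (hypothetical helper)."""
    return MAX_OC_MHZ[(dimms_per_channel, ranks)]

def within_rating(kit_mhz, dimms_per_channel, ranks):
    """True if the kit's XMP speed is at or below the board's rated figure."""
    speed, _open_ended = rated_max(dimms_per_channel, ranks)
    return kit_mhz <= speed

if __name__ == "__main__":
    # 4x16GB dual-rank sticks at 4000 MHz XMP = 2DPC 2R, right at the ceiling.
    print("2DPC 2R @ 4000:", within_rating(4000, 2, 2), rated_max(2, 2))
    # The same kit as 2x16GB = 1DPC 2R, with plenty of headroom.
    print("1DPC 2R @ 4000:", within_rating(4000, 1, 2), rated_max(1, 2))
```

A 4000 MHz kit in all four slots sits exactly on the rated 2DPC 2R figure, so it is inside the spec on paper but with no margin, whereas the two-stick setup has 800 MHz of headroom.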

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Need a word of advice from the experts here. Thanks in advance. On a Z590 board with 4 DIMM slots, will this work? I'm almost ready to install it as I type.

F4-4000C16D-32GTRSA, a 2x16GB kit. I have 2 kits; it's Samsung B-Die, 16-16-16-36 at 1.4V XMP.

DIMM A1, A2 - Channel 1
DIMM B1, B2 - Channel 2

MSI mentions I need to install the 1st stick in A2 and the 2nd stick in B2 to get full dual channel with XMP. My idea is to use both kits and populate all 4 DIMM slots. Can the 10900K IMC handle that much high-speed, high-capacity, low-latency, dual-rank DRAM at XMP with B-Die kits at 1.4V?

If yes, how should I populate the 2 kits? Either Kit X 1st stick in A1, 2nd stick in A2 and Kit Y 1st stick in B1, 2nd stick in B2, OR Kit X 1st in A2, 2nd in B2 and Kit Y 1st in A1, 2nd in B1?

If no, then I will just put one stick in A2 and the other in B2 from the same Kit X. Please advise. Thanks again. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

I'm guessing those are Noctua NF-A12x25. If so, they do offer them in black as a Chromax option (I think this was the most awaited fan for a Chromax black version; it only came out in 2021, and they have almost all the others in black iirc). They retail for $33, very expensive vs cheaper alternatives like the Arctic P12. However, the Noctua is heavier than the Arctic and its motor is bigger too. Plus there is a lot of padding / anti-vibration rubber on the Noctua. And Noctuas are made in Taiwan vs the rest in China.

I also realized late that those are the Industrial PPC 3000 RPM beasts, the Delta successors. Well, Noctua sells Chromax pads, the NA-SAVP1. I bought a ton of them for a color swap, since I won't tolerate any RGB fans and their garbage cabling BS along with the software. The only issue with their so-called "Sterrox" LCP material is that when I screw the fans to the chassis, the holes get very flaky and badly chewed up, as the screw threading removes a lot of plastic from the top surface of the hole. This happens both with the Noctua self-tapping screws that come with each fan and with case screws (Fractal). I think their plastic is probably too soft, so the holes get destroyed no matter which screw I use, but since the fans mount directly to the chassis it's acceptable, as they end up a tight fit. Wish they came pre-threaded from the factory. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Intel actually did this years ago; AMD is way late. The Broadwell i7 5775C had eDRAM on the package as L4, and the Apple-exclusive 4980HQ Crystalwell processors did the same thing. I remember @Reciever trying hard to get that running on an rPGA interposer for the Alienware 17 and other rPGA Haswell sockets, but the BIOS wouldn't boot on the Alienware 17. Anandtech even published a solid review of the i7 5775C in 2020. The bonus is that the Intel i7 also overclocks, and better with faster DRAM, whereas AMD's stacked solution does not. Look at the benches: it manages to keep up with 10th gen in gaming performance. Intel should have used that tech on all CPUs, but they did not, probably because it costs more money and needs more pins, especially when they wanted to screw all the Intel owners on LGA1151 from Z170 to Z390. Only the P870DM was able to run everything properly, all the way from the i5 6600K to the i9 9900K beast.

For Intel to do exactly what AMD's stack does now will take time, I think, because AMD's solution is TSMC's CoWoS 3D stacking technology, developed in partnership. Intel doesn't use this stacking methodology, their MCM is EMIB based, and it's not like AMD's chiplets; Intel's tech is far more complex and expensive to manufacture. That is probably one of the reasons the Sapphire Rapids Xeon server parts are delayed, and it is a generation 1 design from Intel, which probably needs more time to mature.

Yeah, the 7800X is 100% going to be an X3D release, there's no doubt. I think AMD wanted to avoid a mess like the 5800X; that chip was not loved as much as the 5600X and the 5900X because of its very high cost. So they avoided it and released the 7700X right away this time, unlike the 5700X, which only came recently. My guess is that instead of only 1 SKU they might do 2: a 7800X3D and a 7950X3D. Intel's Meteor Lake is fixed at 8P, so AMD might flex with gaming only. Look at how they compare the 7600X to the 12900K. That's a joke of a comparison, because the 12900K leads in MT and not just in gaming, but "Gamers" will see that and buy the low-end one.

As for AMD / Nvidia / TSMC / Intel.
Intel got exclusive line for them separated from all others because Intel volume is higher than all these, but for Nvidia and AMD I doubt Nvidia can command high and AMD lower, since AMD has actually propelled TSMC along with Apple since 2017 to make a ton of cash plus exclusive 3D Cowos on EPYC 3D Stack processors that is 100% AMD/TSMC collab project. I believe this round there's not much of 5nm Silicon shortage hassle like Zen 3, my rough guess. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Plenty of 5.0 lanes are available on Zen 4, for both the PCIe x16 slots and the PCIe SSD. The problem is that either the 5.0x4 to 4.0x8 switching is not happening for the motherboard's daisy-chained PCH, or the PCH is not able to use the 5.0 link speed for I/O because most controllers might not be ready for that PCIe version yet. On top of that, 5.0 to 4.0 switches are probably super expensive for AMD right now, since PCIe 5.0 servers do not exist yet: Genoa is year-end and Xeon Sapphire Rapids is delayed to 2023 Q2. There are no PCIe 5.0 devices either.

So ultimately it just boils down to 2 things:

Intel DMI PCH speed and lanes -> Comet Lake PCIe3.0x4, Rocket Lake PCIe3.0x8, Alder Lake PCIe4.0x8.
AMD PCH speed and lanes -> Zen 2 PCIe4.0x4, Zen 3 PCIe4.0x4, Zen 4 PCIe4.0x4 (despite the CPU having 5.0x4).

I updated my post with the TPU link on the subject too. Honestly, I think many people do not care about or understand this PCIe PCH stuff. Even for me it took a long time to understand the whole thing, especially once you use the second PCIe x16 slot, more NVMe drives and so on. It's all about bus saturation. No wonder HEDT is dead; nobody really cares anymore and everyone is simply fixated on gaming, which is why Intel is using their big little BS for that, with 8P fixed until 2025 just because of gaming. BGA big little is also super high profit.

I think they should have gone with more lanes rather than the 5.0 link speed. Probably their IOD cannot do a non-uniform PCIe link like Intel's DMI, which is able to do both 3.0 and 4.0 for RKL and both 5.0 and 4.0 for ADL. It's also better for them to give the CPU a higher PCIe link speed than the mobo, since the mobo is supporting AM5 up to 2025+, and if they introduce the faster PCH link now, what will they sell for Zen 5 apart from some minor changes?
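For anyone who wants the rough numbers behind those link configurations, here is a minimal sketch. It assumes the standard per-lane signalling rates (8, 16 and 32 GT/s for PCIe 3.0, 4.0 and 5.0) with 128b/130b encoding and ignores protocol overhead, so real throughput will be somewhat lower. The function name and the table are only illustrative.

```python
# Approximate one-direction bandwidth of a PCIe link.
# Assumption: 128b/130b line encoding only; packet/protocol overhead is ignored.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}   # transfer rate per lane, by PCIe generation
ENCODING = 128 / 130                     # 128b/130b efficiency for gen 3 and later


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Return the approximate bandwidth in GB/s for a gen/lane combination."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


# Chipset uplinks as listed in the post: (PCIe generation, lane count).
CHIPSET_LINKS = {
    "Comet Lake DMI": (3, 4),
    "Rocket Lake DMI": (3, 8),
    "Alder Lake DMI": (4, 8),
    "Zen 2 / Zen 3 / Zen 4 PCH": (4, 4),
    "Zen 4 CPU lanes (5.0x4)": (5, 4),
}

if __name__ == "__main__":
    for name, (gen, lanes) in CHIPSET_LINKS.items():
        print(f"{name:28s} PCIe {gen}.0 x{lanes}: {link_gb_per_s(gen, lanes):6.2f} GB/s")
```

Under these assumptions a 4.0x8 uplink and a 5.0x4 uplink come out the same, and both are double a 4.0x4 link, which is the gap being discussed.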

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

I think AMD is going that way only, bro papu. If you look at their deck, Zen 4 and Zen 4 V-Cache are in the same cycle window. I think AMD will refresh with 7000X3D maybe at the end of next year, and this round perhaps add tuning and improve it, because for me it's useless if it's a locked-down SKU. But you know AMD is not tuning friendly at all anyway, so many will gobble it up. Then they launch Zen 5 in 2024 and Zen 5 X3D in 2025. At that point Zen 6 might not get released on AM5, or maybe it will, since they say it's "2025+". If they do Zen 6 on AM5 too, then Intel will have had 5 CPUs (Alder, Raptor, Meteor, Lunar, Nova) across 2-3 socket variations in all these years, versus AMD with one single socket. AMD will probably do a mobo refresh sometime in 2024 (see below for why).

So basically AMD uses X3D to combat Intel's second release on a socket, a.k.a. the Tock refresh. That is one strategy we can observe or guess here from AMD.

To be really honest, I'm fine with 2-year releases for CPUs, just like the refresh cycles we have for AMD and Nvidia GPUs. A 1-year release like smartphones is really annoying: too much hassle with BIOS, boards, software quality and new prices, plus a lot of waste of perfectly functional components. Smartphone junk dies off due to poor performance, battery and OS nonsense, but on the desktop side we have Sandy Bridge CPUs still in use and keeping up with workloads, and old decommissioned XEON processors running homelabs and so on.

Intel should have let LGA1700 take Meteor Lake too, but they did not and stuck to their same old 2-year cycle. It's a really negative, anti-consumer move from Intel given AM4's market saturation and now AM5, and it will definitely result in a loss of market share for Intel, because tons of people will simply keep their old mobo and upgrade on the AMD side, just like with AM4.

The major aspect which AMD did not confirm is the PCH link speed for these X670 / B650 class boards. TechPowerUp's old Computex article on AM5 motherboards mentioned that the PCH link is 4.0x4 only, not the 5.0x4 that the Zen 4 CPU actually offers. I think AMD might refresh motherboards in 2024 with the Zen 5 launch and give a PCH link speed of 5.0x4 with PCIe switches on the motherboard.

The only reason I bring up the mobo PCH is that the new boards from Intel and AMD give us more PCIe SSD options, like 5x NVMe, so the moar SSDs we add, the more the bandwidth consumed by all the parallel workloads will saturate the bus or cut off other I/O. For example, the Z590 Extreme has 5 NVMe slots, but the moment we populate them all we lose 4 of the 6 SATA ports, plus the x4-length PCIe slot, which already runs at x2 speed. Intel's Alder Lake PCH link for Z690 is wider at PCIe 4.0x8, so we do not have to compromise on anything. This is a significant win for Intel if AMD is kinda castrating the PCH. We had PCIe 3.0 saturation on all CPUs until Comet Lake, and for AMD until Zen+ (2000 series). But you know the majority do not really care; all they want is "GAMING".
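To put a rough number on the saturation point, here is a small sketch of how oversubscribed a chipset uplink gets once several drives sit behind it. Everything in it is an assumption for illustration: four hypothetical Gen4 NVMe drives at about 7 GB/s peak each, all attached to the PCH, and a per-lane figure derived from 16 GT/s with 128b/130b encoding. Real boards split drives between CPU and chipset lanes, so treat it as a worst case.

```python
# Oversubscription of a PCIe 4.0 chipset uplink (illustrative worst case).
# Assumption: 16 GT/s per lane with 128b/130b encoding, no protocol overhead.
GEN4_LANE_GB_PER_S = 16.0 * (128 / 130) / 8


def oversubscription(device_peaks_gb_per_s, uplink_lanes):
    """Ratio of combined peak device demand to uplink bandwidth (>1 means saturated)."""
    uplink = GEN4_LANE_GB_PER_S * uplink_lanes
    return sum(device_peaks_gb_per_s) / uplink


# Hypothetical load: four Gen4 NVMe drives behind the chipset, ~7 GB/s peak each.
drives = [7.0] * 4

if __name__ == "__main__":
    for lanes in (4, 8):
        ratio = oversubscription(drives, lanes)
        print(f"PCIe 4.0 x{lanes} uplink: demand is {ratio:.2f}x the link bandwidth")
```

The ratio halves when the uplink goes from x4 to x8, which is why the wider Z690 link matters once all the slots are busy at the same time.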

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

The biggest thing out of all of it was AM5 support through 2025+. That's a massive win for AMD again. They will saturate the market again with that move; it is the only reason AM4 was super popular and so saturated over the past 2 CPU refreshes, since the Zen 2 era. EXPO looks interesting too: 63ns DDR5 latency, and no more fiddling with the crappy IMC of the pre-Zen 4 parts, which had higher latency on the DDR4 standard. This is a properly refined version of the Zen design from AMD, no doubt.

AVX512 is confirmed. No wonder they are getting huge ST performance too with that wide register support. Same for the Golden Cove P core, 30% of the silicon, which Intel decided to axe off for the 12900KS. Claps!

5.7GHz boost on the 7950X is a big move too, a huge clock speed bump; I wonder how they will hold it. 230W is the max, I think, for top-end performance, which I think is good. I think RPL will blow the roof off with an extended TDP mode (350W+) from the factory.

The main aspects are AMD's AGESA BIOS engineering dept. and the IO die on the new Zen 4 processors. I do not care about the iGPU, but I'm waiting to see how the new BIOS holds up, and also the reliability of the most important part of a PC: I/O. I really hope AMD improved their R&D and QC here on both the HW and SW sides.

Oh, and I wonder how much voltage AMD is pumping through this? Zen 3 had 1.4V on all processors, no wonder the IOD was crapping out, and on top of that there was no room to OC. Only X3D has 1.3V binned chips. If AMD pumps more than 1.4V and then locks out voltage control, I won't really be surprised, but if that happens it's going to be a DUD for enthusiasts and tinkerers.

Intel is already prepared with more E-core nonsense for higher MT performance, and they are loading the P core with more L2 cache and probably improvements to the IMC and ring bus, with added clocks again. AMD, however, is sticking with no small BGA-friendly cores at all, only big powerful beast cores. Their CTO explained what Zen 4c is: a lower-frequency, more compact Zen 4. I assume they will cut SMT from it to reduce die space and thus add more cores to compete with ARM Neoverse N2 and V1, as those also lack SMT.

Intel has now delayed SPR to 2023+, which is a bad sign for them tbh; XEON is losing ground to EPYC and ARMjunk. Anyways, Zen 5 is also on track with a brand new design.

True that. Unlike with Zen 3, where AMD blew the roof off with pricing, there's no such thing this round, thankfully.

EDIT - AMD's AVX512 is not like Intel's implementation, so I think AVX512 won't be on the level of Golden Cove / XEON SPR, and maybe not even the old SKL-X or RKL class, since it apparently uses 2 SIMD pipelines to do AVX512, unlike Intel's full-width units. I hope Intel goes with AVX512 on the new Raptor Cove and doesn't step back like with ADL. If RPL has AVX512, it will be the top CPU for RPCS3 again.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

It's been a while; good to see everyone is doing great. Anyways, I've got some important news: "Batch of Arctic Liquid Freezer II Coolers Have Design Flaw, Company Announces Free Service". I just checked and ofc I'm affected, since I got mine during that period. At least the damn thing was not running, since I took a break from building the machine fully. Imagine the PC getting shorted out due to the stupid AIO. This is why I always hated the idea of liquid cooling with an AIO.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Okay, this cements that HEDT is dead. AMD pulled the plug on Threadripper and axed the socket 2 times. Intel, on the other hand, doesn't have any X299 successor: no SPR-X, but rather W-series XEON with W chipsets. "Intel confirms W790 chipset for its HEDT Sapphire Rapids Xeon Workstation CPUs". A shame, really. W series means TR PRO-like pricing. It will be insanely expensive, with a very small market for these, meaning we won't even see how much we can get out of them, with fewer parts and fewer mobos as well. They are too far on the prosumer side, like Quadro.

End of an era, I would say; X299 is the last. Smartphone dominance truly ruined the PC space, from the UI to power requirements, userbase mindset and other aspects. For HEDT use cases we now have to choose between a used XEON or buying brand new and getting shafted to hell on pricing. Well, I hope AMD's Zen 4 and Zen 5 deliver more PCIe lanes and more MT performance in the next 5 years.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

AMD shamelessly axed Windows 7 support back in April 2021, despite having a chance to keep supporting it, because I think they did not use DCH at that time. Nvidia meanwhile kept the Win32 driver going until Sep 2021 for the whole Ampere stack. Even the RTX 3090 Ti, after launching late in 2022, has a Windows 7 Win32 driver, the last of the last Win32, and they will still deliver security patches. With AMD you're SOL lol, nothing at all, and you get to use buggy trash drivers on 7.

Also, AMD had worse DX11 performance than Nvidia in old titles. This was very important to me because I like old games far more than the new garbage, especially the old X360-era ones with the horrible PC ports lol. AMD made some upgrades to that driver and posted a performance boost very recently, just a couple of days back.

AMD has open source Linux drivers but Ngreedia doesn't. Nvidia's NVENC is way superior to AMD's solution too. AMD's CPUs and GPUs (many say fine wine, idk) definitely have some firmware QA problems. Not that Intel or Nvidia is flawless either, but comparatively the CPU side especially is the worst, with tons of damn firmware updates, AGESA and whatnot. I hope Zen 4 takes a step in the right direction after 6 years of Zen experience.

One last thing: many whine to hell about NVCP on all these blogs and sites. Yeah, NVCP is slow, looks like it's from the XP era and isn't fancy. Compared with AMD's software it looks outdated, but to me it just works, damn it. NVCP simply works!! No questions asked at all. I love it. The XP-style look is even better: no shiny BS nonsense, no bloat. Slowly they added a lot of telemetry junk, but NVCleanstall exists, thankfully. Sadly these same fools gulp down that horrendous BLOATWARE DOG FECES GFE software. Sooner or later Nvidia will axe NVCP and go with GFE, and then these people will chug it all down while crying.

Nvidia recently collaborated with that Pascal guy on Patreon for his RTGI ReShade shader and implemented it inside the damn GFE cancer. If we want those filters without ReShade, we must open our PC to Ngreedia to penetrate it with their cancer. Absolute clown world.