Everything posted by Ashtrix


*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

DCH is a newer Windows 10 driver standard. It integrates drivers deeper into the OS, and it also gives OEMs the option of shipping Store-based UWP apps (plus the bonus telemetry that comes along with the UWP trash). That is why so many modern software packages carry a ton of bloat and lean more and more on UWP apps (MSI, ASUS, AW, for example). DCH drivers previously did not ship with NVCP; we had to add it through the Store or inject it with NVCleanInstall. They bundle the app now, but NVCleanInstall is still mandatory because the driver carries a ton of stupid bloat. Below is Microsoft's own documentation, which covers the UWP part further:

> Declarative (D): Install the driver by using only declarative INF directives. Don't include co-installers or RegisterDll functions.
>
> Componentized (C): Edition-specific, OEM-specific, and optional customizations to the driver are separate from the base driver package. As a result, the base driver, which provides only core device functionality, can be targeted, flighted, and serviced independently from the customizations.
>
> Hardware Support App (H): Any user interface (UI) component associated with a Windows Driver must be packaged as a Hardware Support App (HSA) or preinstalled on the OEM device. An HSA is an optional device-specific app that's paired with a driver. The application can be a Universal Windows Platform (UWP) or Desktop Bridge app. You must distribute and update an HSA through the Microsoft Store.

And the Intel Graphics app is a UWP app, not Win32, so like other UWP applications it lacks proper filesystem access and is not easy to hook, mod, etc.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Those iGPUs on Intel processors use the DCH driver cancer (aka nasty bloat), and 10th gen is probably the last one not using it. 10th gen also has the last iGPU that can get a Windows 7 driver (through a Win-Raid trick). Tbh I wish Intel had never wasted that die space on the iGPU and used it instead for an on-package eDRAM cache like the i7 5775C. So all in all, I will happily disable it and rather buy a junk GT750 to use its DP out over PCIe when needed in an emergency. Personally, I couldn't even get the MSI Z590 Ace to output properly from its on-board HDMI (UHD iGPU) to the monitor's HDMI port with the CSM option enabled, and it gave me a headache. With CSM enabled, the BIOS didn't even work over HDMI from the PCIe PEG GPU either lol, only DP worked, but that's another story... It's always iffy with the UEFI BS. This UEFI cancer has been a PITA ever since I laid hands on my AW17, which was my first experience with it. Today there's yet another RTX 4080 / 4090 DisplayID-related firmware update, again tied to UEFI BIOS cancer with Nvidia cards. Hilarious how CSM / Legacy has ALWAYS been the best ever since the UEFI trash came out.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Dude has been bashing Igor since the start, and the funny part is how he uses Igor's slides and materials and then adds his own monologue. His theory is based on a sample size of one adapter scanned under an electron microscope, but since the user's 4090 is not available for analysis, the other half of the issue is missing: the $1600+ GPU that actually got fried. So we do not even know which major component failed, how the card's own 12VHPWR socket changed over time during the melting, and, most importantly, which GPU VRM components failed. With 50% of the analysis missing, he still proceeds to a conclusion that mostly blames the user (foreign debris due to insertion) and says Igor's NTK cable theory is not correct.

I appreciate his microscope shots, his point that Igor's piece is not a solution, how the surfaces had their plating changed due to insertion, and how their "Lab" says the metallic findings could come from the GPU socket and so on. But I do not feel there's a massive "TED Talk" here, as he called it himself; it's just another round of overly smart speculation.

This is my conclusion: it is a clear-cut PCI-SIG and Nvidia failure despite their own reports, not a user problem. It's like the iPhone 4 Antennagate, where Apple said you were holding the phone wrong and got sued. That is why PCI-SIG is creating a revised standard where the groove for the 4 sense pins is longer. And the 3090 Ti did not fail: it never used the 4 sense pins despite having the same connector, and magically users did not have insertion issues with that GPU (btw, the 3090 Ti never used those 4 pins because they are shorted on the PCB, and the sense pins do not carry any power anyway, so the power pins are the same as on the 4090). Finally, Nvidia should own the problem; still no word on the issue, shame.

I bet Nvidia will change the adapters going forward, 100% sure. They cannot change the socket, because they would have to axe their whole 3090-based cooler design, which has no space for 3-4x 8-pin connectors, and existing users will be left with no solution except a ticking time bomb. Also, look at why the 8-pin never failed. User insertion or not, the 8-pin hasn't failed in 20 years of PCs, whether on CPU power for HEDT X299 or 13th gen Raptor Lake at the same 600W+. GPUs use the same connector too, and to reiterate, AMD's R9 295X2 drew 500W through just 2x 8-pin, going outside the PCI-SIG specification, and saw no failures. AMD knew the 8-pin has a much higher power ceiling while the 12VHPWR does not. Yes, 900W OC and all is fine and dandy, but when it blows up it will get real.
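To put the R9 295X2 example in numbers, here is a rough Python sketch. It assumes (this is not stated in the post) that the PCIe x16 slot supplies its full 75W spec budget and that the rest of the ~500W board power splits evenly across the two 8-pin plugs; real cards never balance perfectly, so treat it as a back-of-the-envelope estimate only.

```python
# Back-of-the-envelope load per auxiliary power connector.
# Assumptions: the PCIe x16 slot delivers its full 75 W budget and the
# remaining board power is shared evenly by the auxiliary connectors.
SLOT_BUDGET_W = 75.0       # PCIe x16 graphics slot power budget
EIGHT_PIN_RATED_W = 150.0  # official 8-pin PCIe rating


def per_connector_load(board_power_w: float, n_connectors: int,
                       slot_w: float = SLOT_BUDGET_W) -> float:
    """Watts each auxiliary connector carries if the load splits evenly."""
    if n_connectors <= 0:
        raise ValueError("need at least one auxiliary connector")
    return (board_power_w - slot_w) / n_connectors


if __name__ == "__main__":
    load = per_connector_load(500.0, 2)  # R9 295X2: ~500 W over 2x 8-pin
    print(f"{load:.1f} W per 8-pin = {load / EIGHT_PIN_RATED_W:.0%} of the 150 W rating")
```

Under those assumptions each 8-pin carries about 212.5W, well past its 150W rating but still under the ~306W real-world ceiling quoted later in this thread.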

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

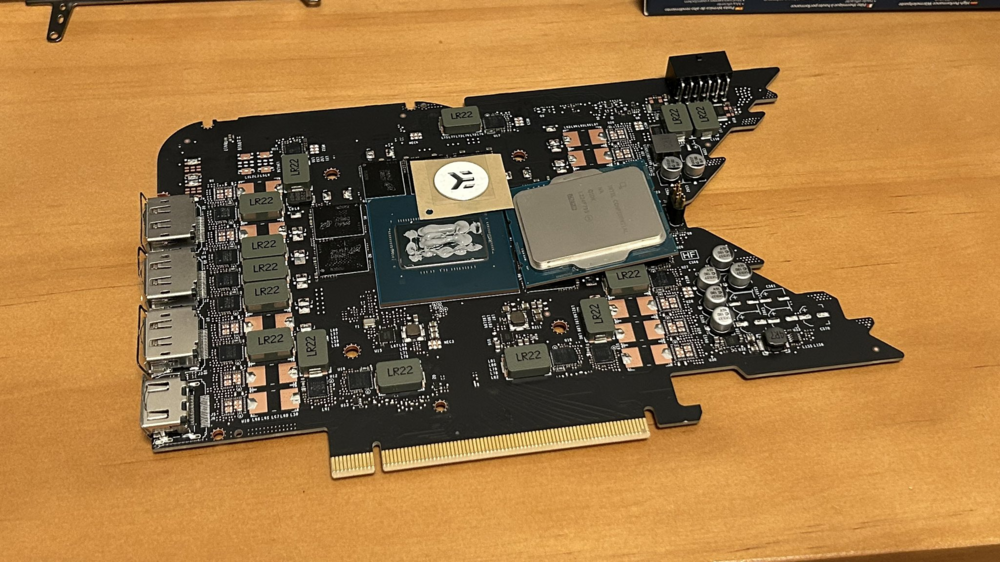

It is greed and stupidity, banking on the dumb-consumer Kool-Aid drinkers. RTX 4080 16GB PCB, lmao, Nvidia is ripping off and playing its customer base HARDCORE. What an anemic display of BS cost cutting on a $1200 card. Pathetic. The RTX 3090, now under $1000, has more VRM phases than this pile of trash, and it has 24GB vs this card's 16GB lol; even AMD starts at 20GB now. It will be interesting to see how many 12VHPWR failures occur on this, given it's a 320W card. Benchmark performance is improved vs the 3090 Ti, but in real-world graphics performance I do not expect anything that would warrant a purchase, judging by their official slide deck and its approximations. Also, maybe it's time for Nvidia to "optimize" their Ampere drivers and start nerfing performance now that Ada Lovelace is out?? The silver lining for Nvidia is a 20-day sales window before the Radeon 7900 RDNA3 series launches on Dec 13th. Well, more time for fireworks during the holiday season I guess haha 🥳 Nvidia is going to prepare an RTX 4080 Ti with 20GB memory to beat the 7900XT, or else they are going to get REKT hard, but what would the price be? From the looks of the initial RDNA3 numbers, Nvidia cornered themselves on both performance and pricing.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

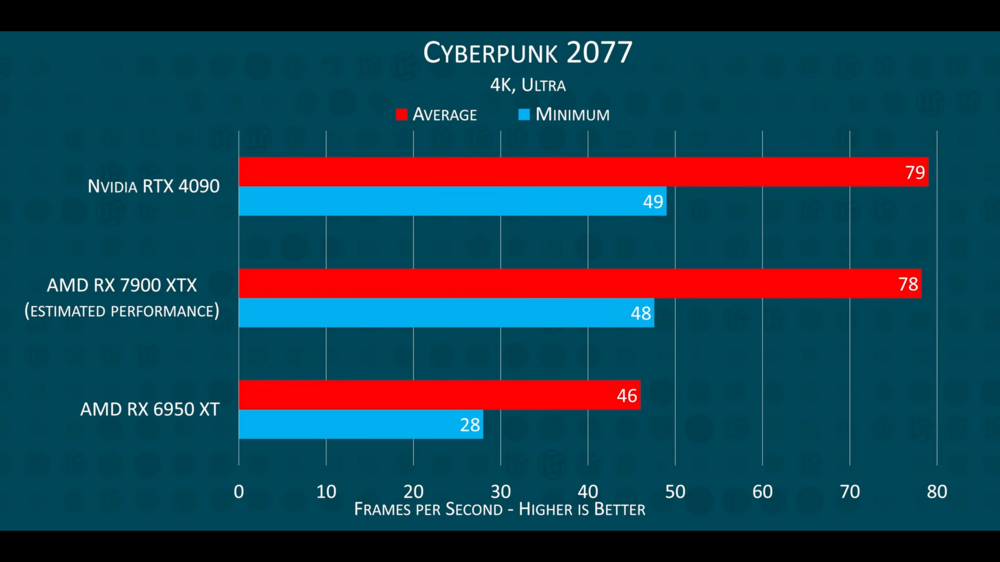

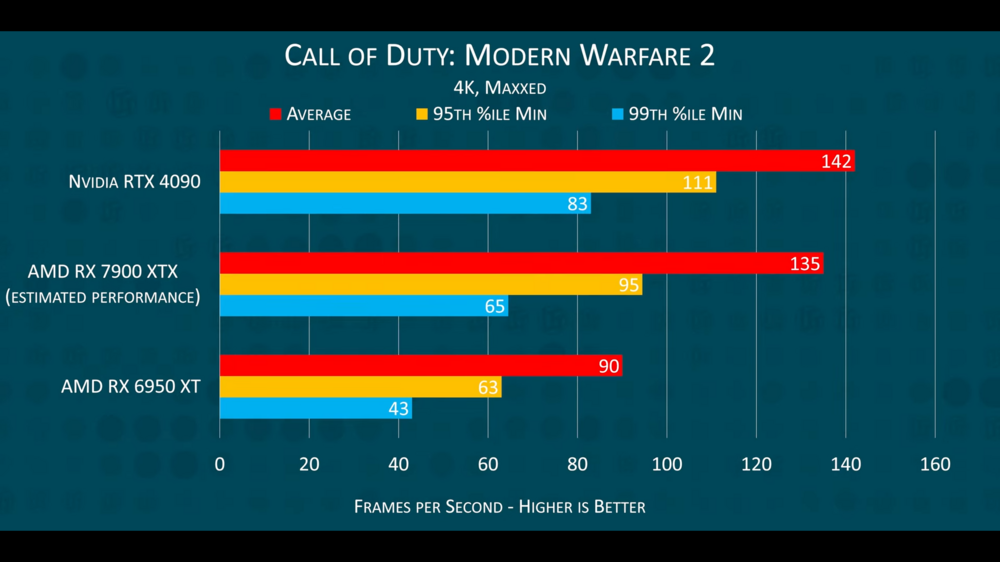

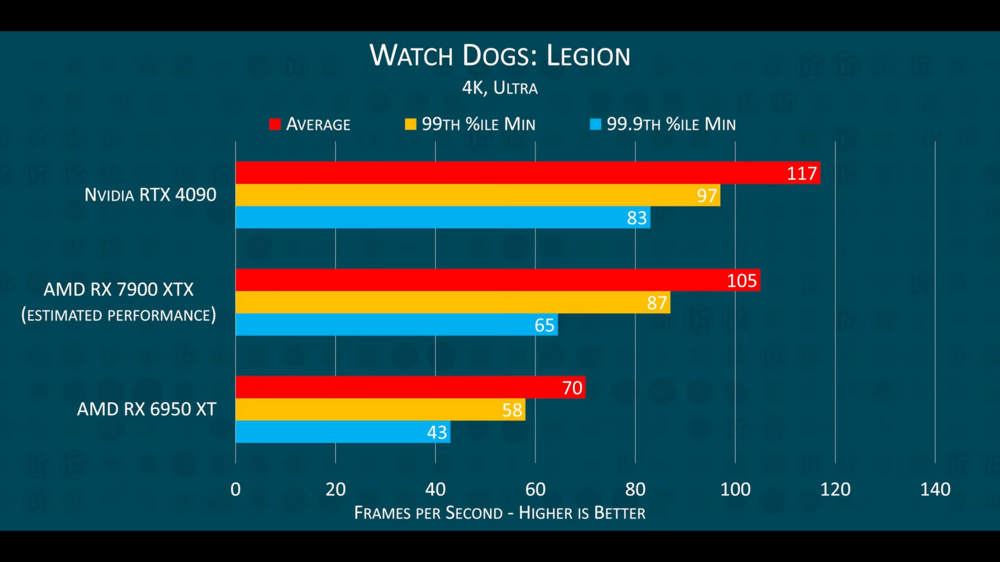

Well, I lost respect for this Steve. His AMD Radeon coverage is not well rounded, and it's cringe. He goes off on how AMD is misleading with fake 8K. Yes, it is FSR, obviously it's not real, it is fake indeed, and they got access to the slide deck because these techtubers go on trips sponsored by all the corporations, which is what TechPowerUp and AnandTech post too. Anyway, I went and checked his Nvidia coverage: not a single word on the DLSS green bars Nvidia showed. Here's his Nvidia coverage for the Ada RTX 4000 cards.

Then there's how AMD took a jab at Nvidia over the power adapter... and it's "not good" in his own words lmao. Well, AMD is using the free opportunity, and the 12VHPWR is a joke no matter what. He says we can also use a native Gen 5 power cable (yeah, just ignore the adapter in the box of a $1600 card and buy something else; and what about PSUs that aren't native ATX 3.0? Ah, let's forget it, right). Boy, that aged like milk in a day, when native 12VHPWR connectors started melting too.

DP 2.0 marketing is also "not good" to him and somehow misleading from AMD, since we do not see any frames for it... Hmm, a $1600 card (4090) with no DP 2.0; heck, even Intel Arc has it. AMD's X670E boards support it, and so do their Ryzen processors. Going by that logic, why have PCIe 5.0 x16 on the GPU slot of the latest mobos? We don't have any GPUs utilizing it. Since when is having more bad?

He should have used this slide instead of wasting time on the fake FSR 8K marketing fluff. Nobody cares about 8K unless it's pushed natively or used to bench an SLI rig. Anyway, here's the slide; LTT mentions it as well btw. This is the one slide that was actually useful, not the FSR benchmark garbage AMD showed. Same with Nvidia: they had only one good slide, the one showing the 4080 12GB losing to the 3090 Ti. Here's that Nvidia slide btw, the only one worth anything; notice the 4080 16GB performance vs the 3090 Ti there. A small note: this is coming from someone who owns an Nvidia GPU and has only ever owned Nvidia. That is not going to be the case in the future, though.

As for performance estimates, here's an LTT video on the charts (ignore that clickbaity thumbnail lol). Skip the video; here are the estimates. Note these are raster ONLY. He shows the RT ones, where AMD falls totally flat, as expected. Again, except for Metro I do not see any worthy RT title out there. Portal RTX and Quake RT are maybe a couple if you really want, since both are fully path traced, like the RT-only Metro edition. That 7900XTX will kick the hell out of Nvidia's flagship for $600 less (the 4090 costs 60% more). Between the RTX 3090 Ti and the 4080 16GB there won't be massive gains; per Nvidia's own slides they will be limited, and the only reason left is to spend more cash, unless you care about RT and DLSS 3. This card will KO the 4080 16GB and fight the 4090. AMD will release a 7950XT to fight back once the 4090 Ti is released.

Finally, that awful frame-interpolation-type thing. AMD is saying they are working to bring FSR 3 to cards other than RDNA3, and that idiot Frank Azor said it explicitly. Note that RDNA3 has AI accelerators and RDNA2 does not, so if FSR 3 uses them RDNA2 won't get it, yet they are hinting it will. There's also no confirmation whether it's frame interpolation or not... hoping it is not. Imagine that fake-FPS booster doesn't use any AI and also works on Ampere: a massive kick in the nuts for Nvidia for gating that tech to Ada Lovelace.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

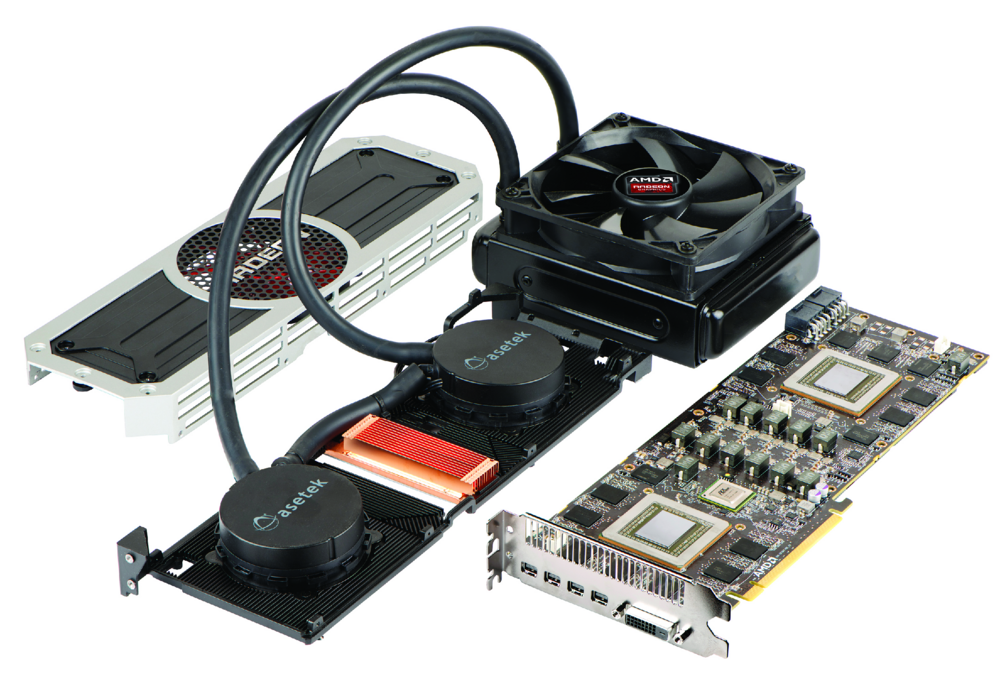

A class action lawsuit is brewing. Perfect. This is what Jensen and his gang deserve for ruining PC market pricing from Ampere through Ada and then forcing the trash 12VHPWR standard. I did not even know much about the electronics side until I watched AHOC (Actually Hardcore Overclocking), which revealed what a garbage POS this standard is. The maximum for the new 12VHPWR is under 684W, and retail cards already push it to 600W, which means the connector is already at about 87% of its limit. Meanwhile the 8-pin runs at about 50% of its limit: it is rated at 150W but in reality can handle 306W. So the safety margin on the 12VHPWR is just 14%, while the 8-pin's is 64% even considering the worst PSU wire gauges. That alone speaks volumes about this TRASH connector pushed by PCI-SIG, Nvidia, and all the companies that green-lit it into retail channels. Compare the AMD Radeon R9 295X2, with 2x 8-pin connectors at 500W. Imagine designing a new GPU in 2022 and losing out to a 2014 GPU that even broke compliance... TRASH. Junk Nvidia over-engineered BS, and to add insult, the pricing scam for 4 damn years. 3080 -> 3090 was a 100% price increase for 15% performance, and they got away with it because of mining crap. Now they use it to make the 4090 price look acceptable. Meanwhile the 3090 Ti, which is actually 10-15% faster than the 3090, is now at $1000. Add the ultimate trash on top: frame interpolation, fake frames not even rendered by the GPU, inserted for that muh numbers + latency!!

The RTX 3090 Ti, despite using the same 16-pin 12VHPWR power connector as the 4090, was saved because it doesn't use the sense pins: they are shorted and permanently disabled, so of the 16 pins only 12 are used. Second, the RTX 3090 Ti's power circuitry has load balancing and splits the input into three 150W-type connections, while the fire-hazard Ada 4090 does not. The RTX 3090 is not affected because it's only 350W, it had an angled connector, and it's only 2x 8-pin connections.
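The margin figures above are simple ratios, so here is a small Python sketch that recomputes them from the wattages quoted in the post (684W max / 600W rated for 12VHPWR, 306W max / 150W rated for the 8-pin). These are the post's figures, not measurements. The post's 64% figure for the 8-pin assumes a lower worst-case wire-gauge ceiling that isn't given here, so it is not reproduced; with the 306W number the 8-pin headroom comes out at roughly 104%.

```python
# Connector safety margin as simple ratios of rated vs. maximum power.
# The wattages below are the figures quoted in the post; they are not
# measured here.


def utilization(rated_w: float, max_w: float) -> float:
    """Fraction of the connector's maximum that the rated load uses."""
    return rated_w / max_w


def headroom(rated_w: float, max_w: float) -> float:
    """Extra capacity above the rated load, relative to the rated load."""
    return max_w / rated_w - 1.0


CONNECTORS = {
    "12VHPWR": (600.0, 684.0),     # (rated W, quoted maximum W)
    "8-pin PCIe": (150.0, 306.0),
}

if __name__ == "__main__":
    for name, (rated, maximum) in CONNECTORS.items():
        print(f"{name}: {utilization(rated, maximum):.1%} of max, "
              f"{headroom(rated, maximum):.1%} headroom")
```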

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

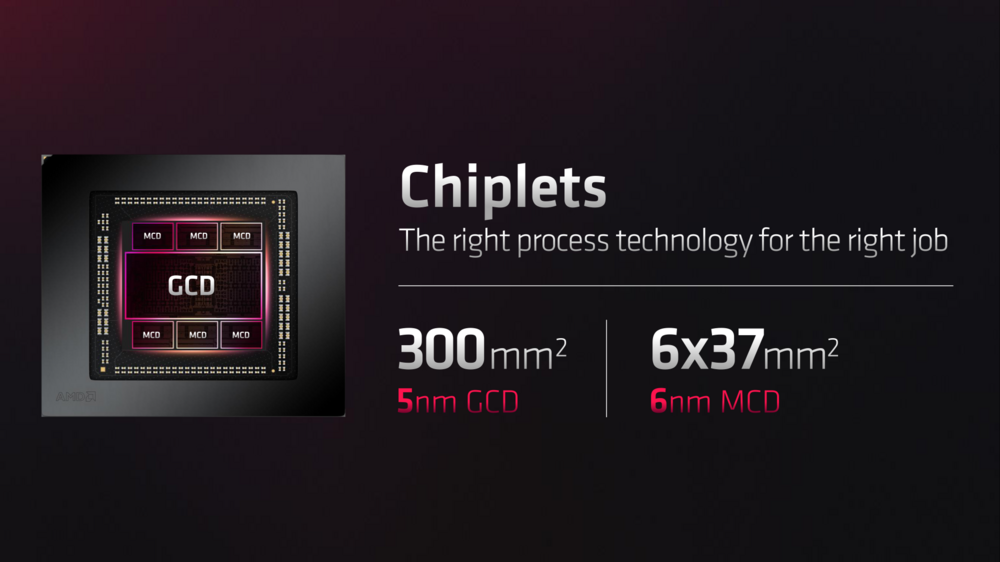

Looks like AMD RDNA3 packs a ton of punch in rasterization. It is very fast, up to 4090 level, maybe 10-15% slower, but for $600 less (the 4090 costs 60% more). It also draws 100W less, is 2.5 slots wide, and has no questionable trash power connector, just the good old 8-pin. It also packs AV1 decode/encode blocks and DP 2.1. RT performance is lower, as expected; they barely match a 3090. No PCIe 5.0 though. The die is much smaller lol... the 4090 is ~600mm2, and this is half that at ~300mm2. Imagine if AMD went 600mm2. Shame they always go conservative; if they had a 600W GPU like Nvidia, it would blow the roof off with performance for sure. Killer pricing and a kick in the nuts for Nvidia: solid value for many, as the top 7900XTX is $1000 and the 7900XT is $900. And RT is worthless to many, esp. since not much of the GPU market sits in the 3080+ bracket, so all the low-end cards will be destroyed by RT; also, in games it's pretty much useless, a massive performance hog for very little fidelity improvement. The only title worth RT is Metro Exodus Enhanced Edition (the RT-only version). All the others are a joke. They have FSR 3 upcoming, called "AMD Fluid Motion". I hope it is not the frame interpolation Nvidia pushed; if it is the same thing, it's a big shame for PC fidelity. They already ruined it with this upscaling BS, but frame interpolation is far worse.
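A quick Python sketch of the price arithmetic, using the rounded prices quoted in these posts ($1600 for the 4090, $1000 for the 7900XTX). The same $600 gap is 60% *more* when measured against the cheaper card, but only 37.5% *less* when measured against the pricier one, so the base matters.

```python
# Percent differences depend on which price you use as the base.
# Prices are the rounded launch figures quoted in these posts.
RTX_4090_USD = 1600.0
RX_7900_XTX_USD = 1000.0


def pct_more(higher: float, lower: float) -> float:
    """How much more the higher price is, relative to the lower price."""
    return (higher - lower) / lower


def pct_less(higher: float, lower: float) -> float:
    """How much less the lower price is, relative to the higher price."""
    return (higher - lower) / higher


if __name__ == "__main__":
    gap = RTX_4090_USD - RX_7900_XTX_USD
    print(f"gap: ${gap:.0f}")
    print(f"4090 costs {pct_more(RTX_4090_USD, RX_7900_XTX_USD):.1%} more")
    print(f"7900 XTX costs {pct_less(RTX_4090_USD, RX_7900_XTX_USD):.1%} less")
```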

Garbage OS with all sorts of nonsense BS. Shame this crap OS is already gaining share in the Steam survey (24%) and on StatCounter (11%); Netmarketshare is dead now, sadly. Normies can't tell good from bad, so no surprise. Also, AMD and Intel both recommend this garbage junk OS for benching (not surprising, since both companies sell billions of devices for the BGA trash and want money from this trash company). The worst part is for AMD, despite their recommendation: the new scheduler changes ruin their CCD system, while Intel benefits. Microtrash gated HDR and the storage API improvements to Windows 11. Add the extra complexity of VBS and other Win32 downgrades. It's basically muh "DirectX 12 Ultimate", which in practice means the new DirectStorage for games that use high-speed NVMe SSDs for texture loading. And it doesn't do jack for FPS; it just frees up the CPU and thus loads faster. Some think it will fix Unreal Engine's nasty stuttering problems, but I have no hope of a new game worth my time, forget worrying about the API trash. DX12 came out in 2015, and only in 2022 have we begun seeing DX12-only games, thanks to Microtrash forcing all developers onto the newest Windows WDDM and thus newer Windows 10 versions (spoiler: LTSC 1809 is outdated; I bet by 2024 no game will run on it, despite support lasting until 2029). Some people think the PlagueStation 5 magically loads Sony's new games faster because of that space-age SSD (Sony claimed something extraordinary lol), so that's another place these BS DX12U theories come from. Also, a reference since I ranted about storage (old HU video from 2020). SATA SSDs are already plenty fast for PC and they stay cool. NVMe drives tend to run hotter and offer no advantage for maybe 60-80% of mainstream PC DIY users. I like new tech, but when storage capacity isn't increasing, what's the point??

PCIe 4.0 NVMe 4TB drives are still super duper expensive... 5.0 SSDs will have the same uber-expensive pricing and again limited capacity with poor value. Well, PCIe 5.0 is coming, so Microtrash needs more fluff to sell to the idiots who will drink the Windows 11 Kool-Aid.


*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Was about to post it. This guy did a good job showing the absolute pile-of-trash quality that Nvidia shoved out, esp. the quality of the soldering job; the pictures show the total horror, as the title says. Cheaped-out garbage: the heavy-gauge wires go into a narrow thin sheet, crammed tight with low tolerance. RIP. Below is an image of the cut-open adapter showing the insides and the soldering for the cable. I bet the metal is thin and not up to the mark. Even though he says the cable design / form factor is fine, I do not see it as a good replacement. CableMod is explicitly making a 90-degree plug for this; if the connector weren't a dumpster standard like this, that would never have been required. Heck, when we plug the 8-pin CPU power lines into mobo sockets, we kink the cables hardcore, even the 4+4-pin ones, literally bending them every way possible, and we never have any failures on that connector. The 8-pin standard is solid, bulletproof, and does its job very well. And both ASUS and GB put metallic shields on those CPU connectors... None of these companies even tried to put something like that on to reinforce the socket and adapters... shame. This new 12VHPWR is a joke, and Nvidia made it even worse. No wonder AMD is totally skipping this BS for a standard, tried-and-tested form factor. "If it ain't broke, don't fix it."

What web browser are you using, and why?

Ashtrix replied to Mr. Fox's topic in General Software

I think Firefox will follow suit eventually. It's kinda expected from these companies, because Microtrash nowadays forces new AAA games to require Windows 10 1909+, and in the future they will force Windows 11... shame how they kill the best OS they ever made. On the other side, old news: Abbodi from MDL mentioned this back at deskmodder.de, the last time you posted about it. Not sure whether M$ will extend the ESU in the future or not. I'm worried that if they push Windows 11 hard, they might axe the Win7 ESU policy entirely; at the moment it still ends in Jan 2023. Since Google has already announced it, I think MS may kill the OS. I hope for a miracle... a small ray of hope of seeing Windows 7 ESU extended up to 2026.


*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

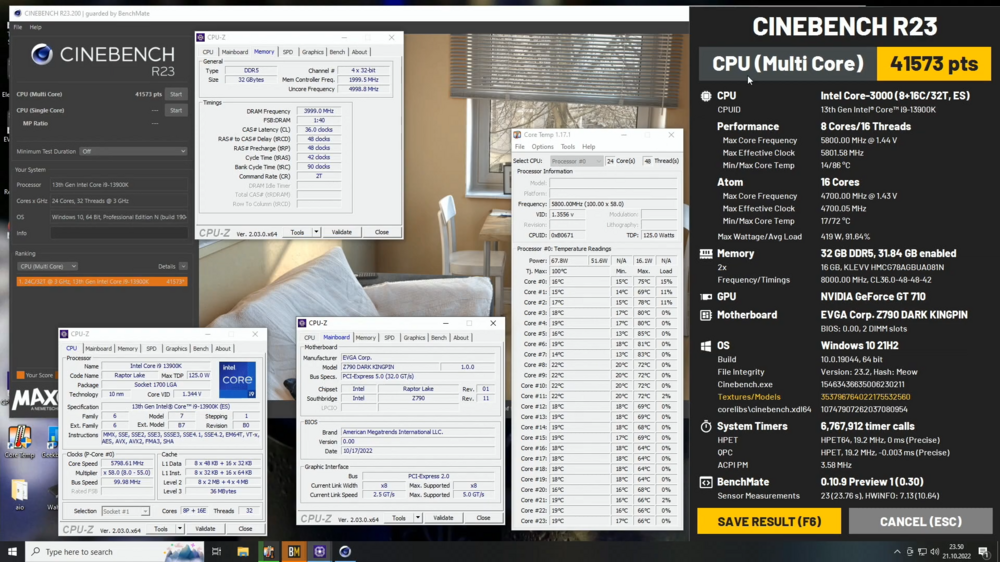

I think this massive SP variance is probably due to the E-core nonsense and the E-cores sharing a power plane with the P-cores. Also, the new 13th gen i7 13700K is basically a 12th gen i9 12900K now, since they added more E-cores, and this applies to the entire stack: the 13600K also got an E-core bump. Plus, anything lower than the 13600K is Alder Lake sold as 13th gen lol. 9th, 10th, and 11th gen did not have such a massive delta in SP ratings. For MSI, I do not know even a bit about how they do it, so it's a total black box. The only certain thing is that the SP rating is ASUS's own big-brain algorithm, which reads the factory VID values and estimates the chip's OC capability, shown in the side panel of the BIOS. However, do note that ASUS 'sometimes' adjusts them as BIOS updates come out. ASUS's solution is far superior to MSI's, esp. since ASUS shows the factory VID and gives the approximate Vcore required for each core clock / cache ratio (side note: cache ratio got nerfed with later 10th gen and with 11th gen because the ring was too hard to push on 14nm++ and caused issues. ADL was a fresh start and it did not improve. For RPL, Intel explicitly mentioned that the ring is very fast now, running at 5GHz; it's a much more tuned product than ADL ever was). Maybe MSI doesn't store these values in its BIOS code, which is probably why their ratings differ and vary... Also, ASUS offers V_Latch on the Apex and up (though the Hero also has SP), which lets them read exact Vcore at die-sense directly, without the added socket-sense LLC impact. Just ASUS engineering at play with the E-core nonsense and binning... It's all just speculation on my side.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

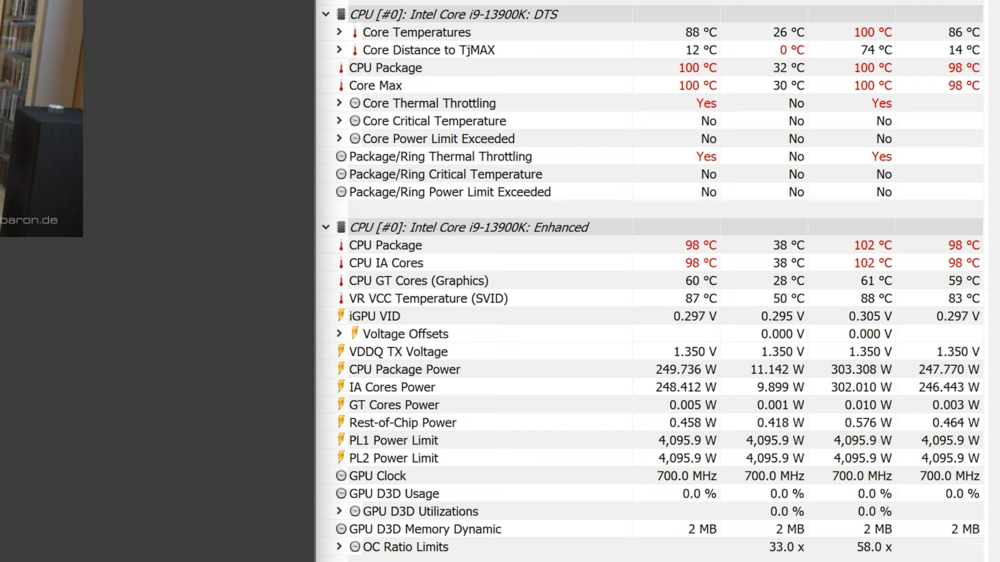

Luumi's OC run draws more than 300W. At stock (5.5GHz P-cores, 4.3GHz E-cores) it's 320W, and with his OC it goes to an insane 390W with the P-cores at 5.7GHz, the E-cores at 4.5GHz, and the cache/ring at 5GHz at 1.35v. Then he raises the E-cores to 4.6GHz and the P-cores to 5.8GHz at the same Vcore, and it goes to a blistering 415W. P95 goes to 468W with an AVX offset.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

I think Nvidia rushed things hard. While Ampere was in development, the new ATX 3.0 spec was probably being drafted, and they jumped in fast and created the 12-pin for the 3000 series first, which was 100% proprietary. They rushed it to market to cash in on the GPU shortage and mining, and later released the full card with the 12VHPWR connector: 12 pins active, 4 sense pins inactive. Remember the Ampere series blowing up hard and Nvidia having to update the drivers? Then came the MLCC caps fallout, and later, with Amazon's New World, GPUs started blowing up again, all of it related to high power draw, power spikes, etc. Yep, the 3090 Ti has the sense pins, making it 16-pin, up from the 3090's 12-pin (proprietary and not compatible with the new connector), but those 4 are not used: the PCB has them shorted. Yep, nice observation: all Ampere cards, including the 3090, had the connector angled, but the 3090 Ti doesn't, so it also has the same issue. That's why CableMod's 90-degree custom cable is the same for both the 3090 Ti and the 4080. The 4090's is separate because it needs more 8-pin inputs than the 3090 Ti / 4080 class.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

About fan splitters, I found this one too: https://www.amazon.com/gp/product/B077YHLDSP. I was about to buy something like it, but since the mobo headers can take 2x Noctua NF-A12s, I dropped the idea. Maybe if I need to run Noctua industrialPPC fans like @Papusan, it would be handy. Personally, I do not like Corsair or any other controllers that rely on software, esp. since RGB junk mandates such controllers. The mobo alone should handle this at a low level, without any OS or software dependency, so options are good to have.

Nvidia is a trash company, experimenting with every damn generation, be it SW / HW / uber-trash rip-off pricing. Initial leaks of the Radeon RDNA3 PCB showed the usual tried-and-tested, rock-solid 8-pin power connectors and not this one. Gotta see what AMD will unleash; I bet their GPUs are much more efficient than Nvidia's 600W GPUs with melting power connectors. Legit, this stuff is brand new, shiny, and appealing to many, but there's the new ATX 3.0 PSU standard (still not popular, and only a handful of units exist, those with compromises), the hamfisted new 12VHPWR GPU power connector standard, plus the new PCIe 5.0 SSDs and DDR5. Too much new stuff. I'd rather stick with the old (which is what I did, at least halfway) and move to the new once it becomes a standard, not be a guinea pig for these companies. With Intel LGA1700 you need an aftermarket CPU retention bracket to stop bending the damn mobo and CPU; with Nvidia you buy 90-degree aftermarket power connectors to stop a fire hazard that could burn your home / PC, and pray it doesn't break. PCIe 5.0 SSDs are still damn 1-2TB, 4TB max (exorbitant pricing incoming), and they will charge extreme prices for basically nothing. PCIe 4.0 SSDs were super expensive for a whole year at rip-off pricing.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

ASUS basically added it to all their premium BIOSes: the ROG Maximus / Crosshair series and up will now get that feature for both Intel and AMD CPUs. It is the same factory VID table the CPU uses to request voltage for each max frequency, and it looks like AMD also started exposing it in a way with the Zen 4 Ryzen 7000 launch, so ASUS can tune their SP rating algorithm for AMD silicon too. I think in this era almost all of the top-end i9 / R9 stack from AMD and Intel will be super-binned 90+ SP; just look at the sheer frequencies both Zen 4 and Raptor Lake push: 5.7 and 5.8GHz boost insanity and 5.4/5.5GHz all-core clocks with unlocked power from the factory. Back with 10th gen, 5.4GHz all-core stable was only for top golden silicon. Now almost all of the top end can hit that on all cores, sustained.

Better to use MSMG ToolKit and remove it; I won't ever install this pile of trash, Defender. I disabled it on Windows 8.1 Enterprise, but Windows 10 needs it removed from the OS entirely. On LTSC 1809 (17763.xx) I used MSMG to delete Defender totally. Windows 11 is not an OS I will ever consider, even if it gets an LTSC or whatever BS. It won't be on any of my machines. I will move to Linux; Windows 10 LTSC will be the end of the line for the Windows platform for me.


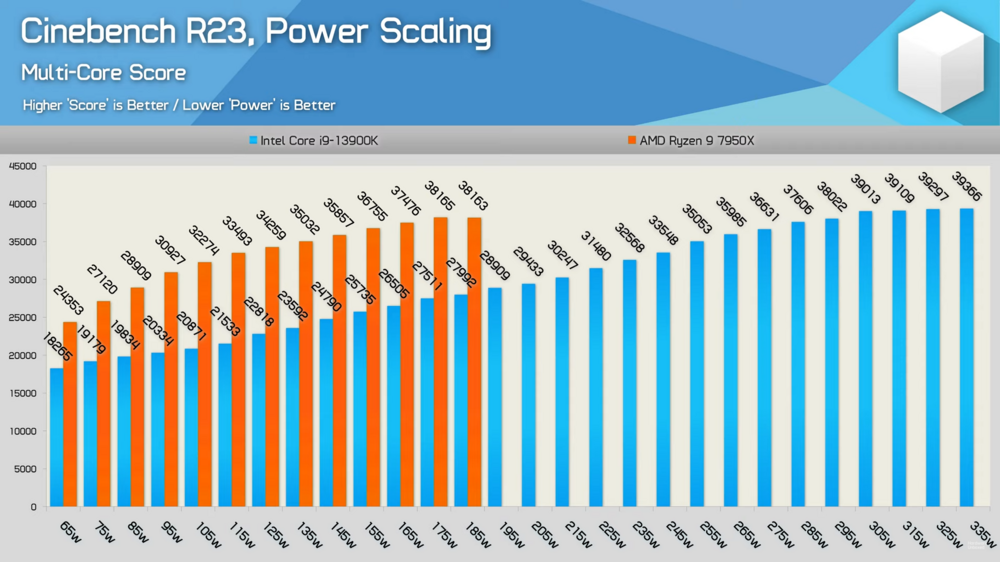

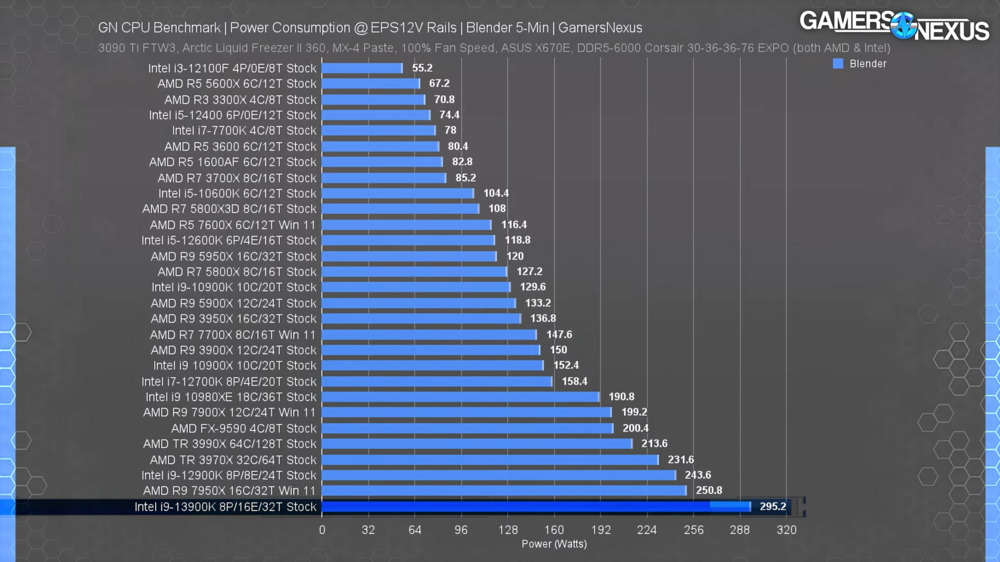

After reading through AnandTech and watching the GN and HUB videos: it's basically neck and neck between Zen 4 and RPL. They trade blows, kinda expected. What I did not expect was Intel catching up to AMD's MT performance with that pathetic E-core formula; the higher clocks provided 90% of the boost in this revision. Same thing in the SPEC scores: both duke it out. Both AMD and Intel pushed frequencies more than they brought brand-new designs. Ultimately both are fast, BUT AMD is faster at lower power and at its max temp, while Intel needs high power to compete with Ryzen 7000.

Design:

- More L2 cache.
- Gracemont E-cores, same as Alder Lake, no change: lower base clock, higher boost clocks, and more E-cores.
- Higher clocks for the P-cores: Raptor Cove is 600MHz faster than Golden Cove.
- Better IMC for DDR5.
- Z790 has lanes for more I/O, replacing 8 PCIe 3.0 lanes with PCIe 4.0 ones, BUT the DMI is the same as Z690 (PCIe 4.0 x8), no change.

| Chipset lanes | RPL Z790 | ADL Z690 |
|---|---|---|
| PCIe 4.0 | 20 | 12 |
| PCIe 3.0 | 8 | 16 |

- OC is still a little more alive on Intel vs AMD, as always.
- AVX-512 is dead on Intel vs the AVX-512 monster Ryzen. Intel laser-fused it off... shame, since 30% of the P-core silicon is dead and wasted in the new Intel processors.
- ST and MT: there's no leader; AMD and Intel are neck and neck.
- IPC gain from RPL is basically nothing; it's just sheer clock speed pushing all the bars in SPEC, gaming, prosumer, etc.

Highlights:

- The 12900K is now the i7-class 13700K, since the latter has the same P and E cores but a higher clock rate. Do note that RPL runs at 1.3v stock at 5.4GHz all-core, so you have less room.
- The 13900K is just a tad faster than the 12900K in gaming and many other things; it's barely an upgrade, just a way to get the best CPU for the socket, that is all. And ofc it competes with AMD, so more choice?
- Intel Thread Director is heavily recommended for use with Windows 11 trash. AMD, on the other hand, suffers badly on Windows 11 garbage.
- Intel's pricing is heavily competitive vs Zen 4, and the DDR4 option allows for cheaper builds. Although Zen 4 B650 exists, DDR5 is still pricey.
- Intel's IMC improved, so higher DDR5 speeds may be possible; AMD is limited to 6000MHz EXPO. At this point DDR5 itself is only a very marginal gain over DDR4.
- Power consumption is higher on Intel, as expected: 330W+. Intel has been over 330W with a 5.4GHz OC since 10th gen, but now that's the mobo default, vs AMD's 230W PPT hard limit.
- Z790 barely brings anything, not even an LGA1700 ILM bending-fix revision; it's just extra chipset PCIe lanes for more I/O on the same socket, nothing else.
- Gaming is nothing major. It's just faster, not a substantial gain. And heck, a 5800X3D beats both RPL and Zen 4.

Conclusion: Intel and AMD both need high-end AIO coolers. Maybe an NH-D15 can handle some Zen 4 parts, but air cooling is not an option for Intel; the heat density has been very high on Intel since 11th gen. AMD allows a bit of temperature tuning at 95C, but note that Zen 4 is not thermal throttling there: it is designed to boost straight up to 95C regardless, while the Intel chips downclock when they approach high temps.

Intel's LGA1700 socket is dead. RPL is the last; no more CPUs are coming to this socket, and with DDR5 being brand new, people are locked into a platform. The silver lining is Intel's better IMC vs AMD, and its DMI bandwidth is higher than AMD's chipset link, since AMD locked X670 to a PCIe 4.0 downlink, maybe to keep costs lower (PCIe 5.0 needs redrivers, more PCB layers, etc.).

My own decisions: I bought into the dead-end LGA1200 socket because of Windows 7 and DDR4, and because I hate the E-core philosophy plus the socket-bending nonsense. I avoided AM4 because the IOD was flaky, it has USB issues, the CPU cannot be tuned, and the IMC is weak. AMD's AM5 will last through Zen 5 -> 5D as well, into 2025+. Basically, the major aspect of a purchase from my standpoint: with Intel you get a hamfisted socket (bending) and are stuck with it; with AMD you get a longer-living socket, and Zen 4D will smash gaming to the stratosphere in 2023 with the 7800X3D.

My guess is AM5 will get Zen 4, Zen 4D (mild refresh), Zen 5 (major refresh), and Zen 5D (mild refresh). So buying AMD means buying into a long-lasting platform, just like AM4.

Look at the power chart and temps from Hardware Unboxed: the 13900K hits a thermal wall in heavy multi-core workloads. In under 30 seconds it hits 100C on an Arctic Liquid Freezer II 420mm. With an MSI 360mm AIO on an MSI board, this is the situation: it hits the thermal wall a lot, which limits performance scaling. And in the TPU review, it hits 100C at stock and thermal throttles. Also, a Gen 5 SSD will steal lanes from the main slot, although there's no Gen 5 GPU anyway, not even the 4090 lol. Guru3D; GN (ASUS board, stock): Steve only talks about the damn wattage. Why not mention the throttling and how fast it hits max temp?... Also, his gaming suite is really pathetic. And Windows 11 22H2 was used for all the review kits.

P.S. @Reciever, may I suggest changing the title? I think it would be better as an Intel 13th Gen thread, and likewise to rename AMD's Ryzen 7000 Review thread to a Zen 4 thread, to keep track of everything about these processors (talk and general stuff, not just reviews), and maybe move them to the Desktop section, idk..
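To illustrate the "more chipset lanes, same DMI" point from the 13th gen post (Z790: 20 PCIe 4.0 + 8 PCIe 3.0 lanes; Z690: 12 PCIe 4.0 + 16 PCIe 3.0; both with a DMI 4.0 x8 uplink), here is a rough Python sketch. It assumes per-lane bandwidth is just the transfer rate after 128b/130b encoding, per direction, ignoring protocol overhead, and it treats DMI 4.0 x8 like a PCIe 4.0 x8 link; those simplifications are mine, not from the post.

```python
# Rough aggregate chipset-lane bandwidth vs. the CPU-chipset DMI link.
# Assumptions: per-lane rate is the transfer rate after 128b/130b encoding
# (used by PCIe 3.0 and 4.0), per direction, ignoring packet/protocol
# overhead; DMI 4.0 x8 is treated like a PCIe 4.0 x8 link.
from typing import Dict

GT_PER_S = {3: 8.0, 4: 16.0}  # PCIe generation -> gigatransfers per second


def lane_gb_s(gen: int) -> float:
    """Approximate GB/s per lane, per direction, for a PCIe generation."""
    return GT_PER_S[gen] * (128 / 130) / 8


def aggregate_gb_s(lanes: Dict[int, int]) -> float:
    """Sum of lane bandwidth for a {generation: lane_count} mapping."""
    return sum(count * lane_gb_s(gen) for gen, count in lanes.items())


Z790 = {4: 20, 3: 8}   # chipset lanes as quoted in the post
Z690 = {4: 12, 3: 16}
DMI_GB_S = 8 * lane_gb_s(4)  # same x8 Gen4 uplink on both chipsets

if __name__ == "__main__":
    for name, lanes in (("Z790", Z790), ("Z690", Z690)):
        print(f"{name}: {sum(lanes.values())} lanes, "
              f"~{aggregate_gb_s(lanes):.1f} GB/s downstream, "
              f"~{DMI_GB_S:.1f} GB/s uplink")
```

Both chipsets expose 28 lanes; Z790 just shifts 8 of them from Gen3 to Gen4. Since the uplink is unchanged at roughly 15.8GB/s, everything hanging off the chipset still shares that same pipe to the CPU when used at once.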


Entire PC market is in decline, Ignore Apple since it's a special case of human idiocy. AMD pricing is higher but I believe it's not ultra high insane BS like Nvidia's garbage or the use and throw dumpster products. All the reviewers where crying about how the mobo prices are bad. But they are totally out of touch and fail to make a solid point. The aspect of PC DIY, only a few people will go and shell out money on Day 0 or Pre-order, Most of them wait for deals and buy the mid range parts. Second is during the AM4 era of AMD due to high socket lifetime everyone bought one and they simply kept on upgrading their gear, which is why Ryzen 5000 is still chart topper on Amazon and Newegg CPU lists. That says one thing which is AM4 is still a widely used Socket regardless of new CPUs launched from both Intel and AMD. AM4 is uber saturated. And with COVID everyone who wanted a PC went to get AMD only. So the B650 mobos launched a week back, the pricing conundrum doesn't hold up well at all. AMD B650 motherboards are now available, pricing starts at $159 Looking at Intel boards and AMD top end both are blisteringly expensive higher BOM due to PCIe5.0 and more redrivers and DDR5 and thicker PCBs more VRMs PC is getting insanely expensive, GPUs from Nvidia trash are even worse. American Economic situation is a disaster. 10% inflation,highest in past 30-40 years, Housing market in America is insane at 6-7% interest, then we have the whole war thing where America keeps sending billions and billions of cash and MIC corporations keep getting their nice fat checks while the American population is still trying to get a hold total funds sent to Ukraine are whopping $60Billions+ and the Euro nations castrated themselves with decommissioning Nuclear power in the name of BS environment. Economic Impact is coming. Stock Market is totally cratered overall it's a disaster. 
Because the USD is the global reserve currency, currencies around the world are dropping against the American dollar and losing value, since the Fed printed trillions of dollars into the economy to address COVID. (I got it btw: 2 days of weakness, that's it, and I lost smell and taste for 1 week due to phlegm in the sinuses. Now I'm all healthy. I did not take any jab, and my company dropped the mandate too after noticing the issues of the useless vaccines and side effects.) Then there are the after-effects of the economic correction as the Fed hikes interest rates by basis points, and world trade hits other nations, causing their currencies to lose value and goods to become expensive versus America, plus a lot more factors, but those are the ones off the top of my head. Nvidia's gaming sales fell, as TPU covered in August. AnandTech and TPU both covered AMD's October financials press release. Look at Datacenter and Client for AMD: corporations are giving them an insane amount of cash while the consumer market is suffering. Intel already posted its first quarterly loss in decades in Q2, too. All in all, PC DIY took a massive nosedive. The failing economy is the primary cause, and existing products from AMD, and even Intel's own, already satisfy a ton of PC users, barring those use-and-throw JUNK BGA CANCEROUS POS HW buyers who buy every damn year like smartphones. I expect this slump to continue into 2023 Q3-Q4 as well. This should be a lesson to the trash Nvidia not to scalp their own goddamned market. We will see.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Fantastic video on the new BS tech from Nvidia. It has a lot of issues, as expected. Yeah, it's new tech (tbf another regressive tech, like upscaling), but it has some glaring issues that are not easy to fix:
- Higher response times, so the perceived FPS experience is lower even if the number shows high lol. Nvidia Reflex, aka low latency mode, is already part of this tech, but if you watch the video you will see that DLSS3 doesn't beat regular rendering even with low latency mode on. Reflex is available on every GPU since the 900 series (Maxwell), so everyone can already use it, and with DLSS3 it barely changes anything for the better; it's worse, in fact. And AMD had Radeon Anti-Lag long before Nvidia announced Reflex. Ultimately, high-framerate first-person shooters like DOOM will have a worse experience with this thing, because FPS games always play better with the lowest response times and thus smoother gameplay.
- Image corruption due to fake data: the inserted frame is not a real rendered image at all but an interpolated one, and the game's UI elements are affected as well, since the UI is also part of the game engine's output. This will affect waypoints and a lot of other aspects of gameplay.
- Higher image instability: ghosting, shimmering and artifacts. Inserting a generated frame that is not a rendered image amplifies these, and most of them already exist with DLSS2/FSR-type upscaling, so it amplifies them even more.
- High refresh rate panel requirement. Imagine having an overpriced LCD with response time limitations (most LCD tech shows poor color reproduction and ghosting, esp. panels that are not G-Sync Ultimate, which are binned and have better panel quality) and then having this tech cause all sorts of bonus problems lol, vs OLED with instant response times.
- High FPS target output requirement (200 FPS+ minimum, because the perceived gameplay smoothness is actually lower), which makes it worst for low-end / mid-range cards, esp. at low resolutions. Imagine using DLSS at 1080P and then pairing it with Frame Generation; it's a total ultimate trash experience. DLSS2 / FSR are not worth it at 1080P at all (it's insanely worse), and not even at 1440P.
- Frame capping restrictions force you to leave higher output on the table, and for those who use VSync it will also cause issues.
- Limited to Ada and paywalled for now.
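The latency complaint comes down to simple frame-time arithmetic. Below is a deliberately simplified model, not Nvidia's actual pipeline: it assumes interpolation has to wait for the next rendered frame, so each real frame is held back about half a rendered-frame interval to pace the generated frame in between, plus a fixed generation cost. `pipeline_frames` and `gen_cost_ms` are hypothetical placeholders, not measured values.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    displayed_fps: float
    latency_ms: float


def frame_time_ms(fps: float) -> float:
    return 1000.0 / fps


def native(render_fps: float, pipeline_frames: float = 2.0) -> Estimate:
    """Hypothetical baseline: input-to-display latency ~ pipeline_frames frame times."""
    return Estimate(render_fps, pipeline_frames * frame_time_ms(render_fps))


def frame_generation(render_fps: float, pipeline_frames: float = 2.0,
                     gen_cost_ms: float = 1.0) -> Estimate:
    """Interpolation doubles displayed FPS, but a generated frame can't be shown
    until the *next* real frame exists, so each real frame is delayed by about
    half a rendered-frame interval (to pace the in-between frame) plus gen cost."""
    t = frame_time_ms(render_fps)
    hold_back_ms = t / 2
    return Estimate(2 * render_fps, pipeline_frames * t + hold_back_ms + gen_cost_ms)


if __name__ == "__main__":
    for fps in (60, 120):
        fg = frame_generation(fps)
        print(f"{fps} rendered -> {fg.displayed_fps:.0f} shown, {fg.latency_ms:.1f} ms "
              f"(native {fps}: {native(fps).latency_ms:.1f} ms, "
              f"native {2 * fps}: {native(2 * fps).latency_ms:.1f} ms)")
```

Under these assumptions, 60 rendered FPS shown as 120 still has more latency than plain 60 FPS, and far more than real 120 FPS; the penalty shrinks as the base frame rate rises, which is why it only feels acceptable from a high base FPS. Reflex shortens the render queue (a smaller `pipeline_frames`) for native rendering too, so it doesn't close the gap.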

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

They knew the reviews would blow up in their face. The 3080 Ti, 3090 and 3090 Ti would all hemorrhage that card hard, since they're all within a few % of each other; add this new 4080 12GB to the mix and that's a heavy backfire. I bet they saw the feedback from all the reviewers LMAO. Bonus: DLSS3 is total dumpster trash that demands 240Hz monitors, because the actual latency increase gives the end user a lower FPS feel, plus the image corruption issues, all in an alpha/beta state. It won't help them sell. And the massive elephant in the room: a 192-bit memory bus and reduced CUDA cores on an xx80 card severely backfired from a PR standpoint, since everyone was aware of it, plus the 30 series supply on top. Now it will probably be rebranded as a 4070 Ti and held back until the 3080 Ti / 3090 Ti stock depletes, since it is a fully enabled AD104 core, which means xx70 Ti class. It's amazing to see how awful this POS card was, that even the Green Goblin rolled back haha.
Yep, and the 4090's 4K performance is higher than Ampere's, so they can demand a lot of cash. Plus it's a massive die on TSMC 5nm-class (4N), which is expensive, so a fully enabled AD102 means even more expensive. Why would Nvidia even release a 4090 Ti this year? They won't; they will safely keep it back until the 30 series stock totally dries out and the economy improves. The 4090 Ti is supposedly getting DP 2.0 + ~2000 more CUDA cores, ofc a massive TDP bump again on top of the stock 4090's 450W, AND a ~$500 premium, landing at roughly $2000+ MSRP. Honestly, that card is worthless for 99% of the market.
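For context on the 192-bit complaint: peak memory bandwidth is the bus width in bytes times the per-pin data rate. The bus widths and GDDR6X data rates below are the launch specs as I understand them (the 4080 12GB figures come from its announcement, since it never shipped under that name). This ignores Ada's much larger L2 cache, which is meant to offset part of the narrower bus, so treat it as raw bus arithmetic only. `mem_bandwidth_gb_s` is a hypothetical helper name.

```python
def mem_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: (bus width in bytes) x (Gbps per pin)."""
    return bus_width_bits / 8 * data_rate_gbps


# (bus width in bits, GDDR6X data rate in Gbps per pin), launch specs.
CARDS = {
    "RTX 4080 12GB": (192, 21.0),
    "RTX 4080 16GB": (256, 22.4),
    "RTX 3080 Ti": (384, 19.0),
    "RTX 3090 Ti": (384, 21.0),
}

if __name__ == "__main__":
    for name, (bus, rate) in CARDS.items():
        print(f"{name}: {mem_bandwidth_gb_s(bus, rate):.1f} GB/s")
```

On raw bus bandwidth the 4080 12GB (504 GB/s) lands at exactly half of a 3090 Ti (1008 GB/s), which is the comparison that made the xx80 name look so bad.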

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

HEDT is dead. Intel said goodbye with X299, and AMD with X399/sTRX40, as their last farewell to the market. SPR Xeon is delayed to 2023 Q1, which means it won't hit retail any sooner than Q2-Q3, meaning even more waiting for Xeon-based CPUs for consumers. Intel is following the same line as Threadripper PRO with its W series, a.k.a. the workstation-class Xeon W. AMD's Threadripper died prematurely: AMD abandoned all the customers hanging on to TR CPUs on sTRX40 boards without a successor. It's all Threadripper PRO now, which costs an arm, a leg and a kidney on top. It has more DRAM, more lanes and more everything... Those things will not be cheap at all. On top of that, earlier rumors say Genoa EPYC TDP is high because AMD is going all in with a 96C/192T monster this round. Same for SPR Xeon: with 4x14C tiles Intel finally gets more cores, at 56C/112T, and instead of a conventional MCM on an organic substrate Intel stitches the tiles together with EMIB, which is expensive to manufacture; that's why Intel is failing to even get it into their bread-and-butter datacenter market. So ultimately the HEDT market is gone. From now on it's all W-class, Intel Xeon W and AMD Threadripper PRO, for the prosumer market.
Look at the PC hardware reviews and benchmarks: how many actually care about PCIe specifications? Of the mainstream coverage, TPU was the only one that covered the PCIe lanes on AM5, and AnandTech is another one that does it. Again, none of the RTX 4090 reviews even mention the DisplayPort specification or PCIe. This is a full-fat, real 4K card and nobody cared to mention it has PCIe 4.0 only: buy a brand new, expensive high-end PCIe 5.0 mobo and you get a $1600 GPU with only PCIe 4.0 and DP 1.4a. CPU and GPU reviews are all "Gaming", endlessly. So the PC crowd is also done with HEDT PCIe lanes, high core count compute etc. The market doesn't even exist anymore, and on top of that, unlike the Haswell-E parts, these HEDT chips barely scale in gaming and can even have a negative impact... Heck, only Tom's Hardware did a review of AMD TR PRO.
Nobody even batted an eye. Bonus package? Nvidia killed off SLI/NVLink with Ada. HEDT lost even more now: 2x RTX 3090 Ti FE in SLI will be much more than a single RTX 4090 for the SLI workloads that scale... ML/AI etc., esp. those that use 48GB of VRAM over NVLink. Well, it was a good time while it lasted? Shame how PC is bogged down by console trends, a.k.a. no dev cares about SLI or PC, since nowadays 100% of PC games are console ports with added tech and sometimes more optimization and tweaks. And most PC buyers are only looking at gaming too. Here's that old video which I'm reposting again: Crysis 3 from 2013 on 3090 SLI at 8K with fantastic scaling, no DLSS upscaling crap or DLSS fake frames here, what a beautiful sight... maybe the PC showcase title of the century? haha. The good ol' Crytek, which has been dead for a decade now; we won't be seeing this type of PC-first title from any company going forward. There's no passion left in the AAA space.
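On the DP 1.4a point, a back-of-the-envelope check: DP 1.4a runs 4 lanes at HBR3 (8.1 Gbps each) with 8b/10b encoding, leaving 25.92 Gbps for video. The sketch counts only active pixels in RGB and ignores blanking intervals, so it underestimates the real requirement; a mode that "fits" here may still need DSC in practice. The function names are hypothetical helpers.

```python
# DisplayPort 1.4a: 4 lanes x HBR3 (8.1 Gbps/lane), 8b/10b line encoding.
DP14_LANES = 4
HBR3_GBPS_PER_LANE = 8.1
DP14_PAYLOAD_GBPS = DP14_LANES * HBR3_GBPS_PER_LANE * 8 / 10  # ~25.92 Gbps


def active_video_gbps(width: int, height: int, refresh_hz: int, bits_per_channel: int) -> float:
    """Uncompressed RGB bandwidth for active pixels only (blanking ignored)."""
    bits_per_pixel = 3 * bits_per_channel
    return width * height * refresh_hz * bits_per_pixel / 1e9


def needs_dsc_on_dp14(width: int, height: int, refresh_hz: int, bits_per_channel: int) -> bool:
    """True if even the active-pixel lower bound exceeds DP 1.4a's payload rate."""
    return active_video_gbps(width, height, refresh_hz, bits_per_channel) > DP14_PAYLOAD_GBPS


if __name__ == "__main__":
    for hz, bpc in ((120, 8), (144, 10), (240, 10)):
        need = active_video_gbps(3840, 2160, hz, bpc)
        verdict = "needs DSC" if needs_dsc_on_dp14(3840, 2160, hz, bpc) else "fits (before blanking)"
        print(f"4K {hz} Hz {bpc}-bit: {need:.2f} Gbps vs {DP14_PAYLOAD_GBPS:.2f} -> {verdict}")
```

So a 4090 driving a 4K high-refresh 10-bit panel over DP 1.4a has to lean on DSC, which is why the missing DP 2.x stands out on a card at this price.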

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

TPU had pictures of the FE card. I haven't watched that GN video on Nvidia's PR engine about how great the Nvidia design is yet. But yeah, as we can see, the 4090 FE has a thicker design, with a bit fatter VC HSF area vs the 3090 Ti/3090 chassis, and the length is also a bit reduced vs the 3090/Ti design. This is probably how Nvidia was able to squeeze the GPU into the massive 3-slot brick design; otherwise it wouldn't fit the triple-slot form factor and would go to 4 slots, a.k.a. 3.x+. I thought the 3090 was a giant card that would sag the hell out of a mobo. I've plugged it in already, and yes, it sags on the right side, and over time, like 5-10 years, it will sag even more without some kind of holder. An anti-sag GPU holder is a MUST for all 3090+ class cards. RTX 3090 Ti FE: 2.2 kg. RTX 4090 FE: 2.18 kg. Yeah, with that loss of length they probably shaved about 20 g off. And the Nvidia FE design is the best for anti-sag, because its chassis is screwed onto the PCIe slot metal bracket along its entire length, on both sides, 3 screws per side for 6 in total, plus the bracket width is proper for the 3-slot form factor, vs say Gigabyte's 2-slot bracket on a 3.7-slot 4000 series design. EVGA / MSI / ASUS / GB all use a 2-slot bracket with fewer screws and uneven screw positions, unlike Nvidia's FE design for all their Ampere RTX 3000 cards. No wonder EVGA quit the scene, given how expensive this whole GPU cooling thing is going to become, on top of the 12VHPWR PCIe 5.0 cable adapter BS, which is rated for only 30 mating cycles. The cards are expensive to make, the cooling is expensive, the MSRP is expensive, the high TDP demands heavier cabling, and on top of that you're fighting against the company that makes the GPUs themselves. Nvidia really kicked into high gear with the FE design since Ampere. And with the massive 4090 AD102 core, the damn thing is a very high-tech, power-hungry device; no wonder the HSF is this size and there isn't much room left anymore.
I wonder how the RTX 5090 and up will hold up.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Only TPU has a respectable list of titles; many of them use a pathetic list, and even Guru3D's written articles have almost the same list of titles. The card is nowhere near where Nvidia's Green Goblin PR BS engine screams and pushes it. Yep, the card is fast, but the price markup is pure BS given the state of the American economy and the worldwide financial situation. I'm expecting the RTX 4090 Ti to be at least 20-30% faster than the 4090, with its price going from $1900+, which sets the RTX 5090 at $2500 for AIB cards. Looks like the card is built for 4K resolution only: it's worthless at 1080P and the gap shrinks at 1440P as well. Not a single YouTuber benched Metro Exodus Enhanced Edition, which has RTGI and is the only title worthy of the RT tag. SOTTR has RT shadows; why would I waste time on that garbage? Control is decent, BUT it's all RT reflections, which are a waste, like in BF V. DOOM Eternal also emphasizes RT reflections; they even have a Ballista skin in sheen silver for ray tracing. Pointless garbage. F1 lol, let's move on... Cyberjoke is a joke of a title on all fronts. Here's Enxgma's playlist; the guy butchered it when it came out, along with a ton of others, and he has a latest 1.6 patch comparison too. The point is not just bugs and glitches: the entire game is still hollow, and I'm not sure how much I would like to play in a depraved city, as if the current state of CA is not enough... well, news flash, gas hit sky-high in CA at $6.46/gallon. Add the anime PR BS marketing that shot it to the moon, and now expect far worse titles with extremely heavy PR marketing and the lowest quality in AAA. FC6 is personally a horrendous game; here's a review if anyone wants to go through it: regurgitated garbage with horrendous politics. Dump Watch Dogs Legion into that too (you have Albion, muh fascist corporation, and who are the enemies? The people of England lol; well, why not look at the state of the UK right now anyway). And it has the worst GFX. Guess what? Ubitrash's titles are all the same now.
AC Unity shreds all of these new titles to hell, and it came out eons ago; check that out below too (the RT mod is the ReShade RTGI one). Well, the franchise is also dead since the Origins pseudo-RPG nonsense. Unity was the last worthy title in the AC franchise, maybe Syndicate too if you stretch it a bit. Basically worthless AAA titles for a $1600 - $2000 GPU at 4K. Maybe for older titles at 4K? But those already peaked in performance, from what I can recall. Hey, at least I can get the DLSS3 numbers to drool over.

Btw, Noctua already made a guard product for the AM5 IHS, and it's at retail and available: "Noctua presents NA-TPG1 thermal paste guard for AMD AM5".

-

ULTIMATE BULLS**T LOOOOL. The Alienware fools are quoting the TjMax from Intel's ARK specification, 100C for Alder Lake, using it as a baseline and saying that, with some fancy garbage formula, they deliberately run the CPU up to that temperature and push the machine to the max; ofc they don't mention the absolute trash cooling. That guy also says "we make sure that we do not waste cooling". WTF... since when is more cooling bad? Honestly, their trashbox computers are the prime example of human stupidity and the gullible nature of normies who don't do basic research. Yeah, I get it, many don't have time, BUT we are talking about Alienware-branded products, which are expensive to begin with, so you have to do some damn research. Horrible trash. Now with AMD Ryzen 7000 they will say the CPU is operating at 95C but won't mention the clocks LOL. It will probably end up worse than a Wraith cooler within a few days, thanks to the proprietary garbage mobo and dumpster-fire logic they use.