Posts posted by Etern4l

-

-

Nuke-launching AI would be illegal under proposed US law

Will be interesting to see if and when this becomes law...

Will other nuclear powers follow suit?

-

3 hours ago, serpro69 said:

If you're concerned about privacy then Android certainly gives you more headroom to disable various "features" (not w/o root in most cases of course) as compared to iOS.

As for GrapheneOS in particular, it does look very interesting from what I read about it. Haven't tried it myself though, but it's on my list.

With this in mind, I'd definitely go with Pixel + GrapheneOS (or even if Graphene doesn't end up being to your liking, just go with any other Android OS that lets you do what you want with your device)

Yeah. The problem with Pixel is that it's a bit of a weird step in terms of more aggressive degoogling, and sadly GrapheneOS doesn't support anything else.

As for GrapheneOS, a lot of people seem interested, but it's harder to find any remotely trustworthy testimonials.

-

Maybe it's a limitation on the laptop side, but desktop Alder Lake was capable of running 128GB at around 4800MHz, although only after a good few BIOS updates and not with every kit. It was a bit of a mess initially.

Edit: On the other hand, DDR4 laptop RAM was always slower, so perhaps we can expect the same from DDR5. I guess it must just have to do with the size of the modules. A DIMM is roughly twice the size of a SODIMM (and is the discrepancy even larger vs the smaller still CAMM?); specifically, the chip surface is much smaller. Temps, for instance? DDR5 can run hotter. That would potentially make the purported benefits of CAMM moot if not counterproductive, especially for higher capacity modules which run slower anyway. We'll see, although I would personally steer clear of this for now. Not sure if miniaturising and compressing is a step in the right direction from the performance/enthusiast perspective, whatever Dell claims. They didn't actually showcase the performance benefit by offering any RAM over 5200/5600 speeds, did they? In contrast, people are pushing 8200 on the desktop side, and 128/192GB runs at 5200, using the comparatively humongous DIMM modules.

-

1 hour ago, Aaron44126 said:

Yes, it is true. Precision 7X70 and 7X80 systems use CAMM modules before the standardization is done, and as you say, there is a chance that the standard could evolve before it becomes more widely used so future CAMM modules would not work in these systems. Dell launched these systems with CAMM to demonstrate their commitment to the standard and that the modules work. You aren't forced to use CAMM in these systems; you can order these systems with a SODIMM interposer and use SODIMM modules instead. Several users here have done so in order to save some money or install their choice of modules. I understand the reservations but I can't really fault Dell's approach here. They're the only manufacturer to get ahead of the problem with SODIMM and put out something that works (other than soldered modules), and they got JEDEC to go along with it.

(There's a downside to choosing SODIMM in these systems, though; with only two modules, 64GB is the memory cap... maybe 96GB soon, though, as it does look like 48GB SODIMMs aren't that far off.)

To me it looks like they jumped the gun with a proprietary solution. I am not sure about the MSI Titan, which also supports 128GB, but the Lenovo ThinkPad T16 runs 128GB at 4800MHz on SODIMMs, whereas apparently the Precision 7770 is advertised to support 128GB at 3600MHz using "thinner and faster" CAMM... Not great - perhaps it's actually more about the thickness than speed?
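For a rough sense of what that speed gap means, here is a back-of-the-envelope sketch of theoretical peak bandwidth. It assumes a 128-bit total memory bus (the usual dual-channel laptop setup) and treats the quoted "MHz" figures as MT/s; the function name is just for illustration, and real-world throughput will be lower than these peak numbers.

```python
def peak_bandwidth_gbs(mt_per_s: int, bus_width_bits: int = 128) -> float:
    """Theoretical peak bandwidth in GB/s (decimal), for an assumed bus width."""
    bytes_per_transfer = bus_width_bits // 8  # 128-bit bus -> 16 bytes per transfer
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s
    return mt_per_s * bytes_per_transfer / 1000


for speed in (3600, 4800):
    print(f"DDR5-{speed}: {peak_bandwidth_gbs(speed):.1f} GB/s")
```

On those assumptions the 3600 configuration gives up a quarter of the peak bandwidth of the 4800 one.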

-

58 minutes ago, Aaron44126 said:

…From last year. I posted a link to this very article in the Precision 7770 prerelease thread here when it was newly posted.

Lol, I'm too tired. The rest of the post looks valid as of today: not a standard yet, CAMM modules are proprietary and not available on the open market.

-

5 hours ago, Aaron44126 said:

Old news?

Dated April 28. Would be reasonable to assume Gordon got wheeled out for a reason, perhaps they need more of the industry onboard or something? Yays from 20 or so out of 332 JEDEC members doesn't sound like much. Anyway, apparently nothing has been published yet, so it's not an official open standard (the sources below estimate it might happen in Q3 2023).

https://www.makeuseof.com/camm-vs-sodimm-ram/?newsletter_popup=1

The way I'm reading the above, Dell developed a new proprietary RAM module "in (some form of) collaboration with JEDEC" and pushed it out to the market prior to any standardisation being complete. The actual final standard is likely to differ from Dell's design. Whether the standardisation is actually finalised, the date of that, and whether the JEDEC implementation is compatible with Dell's design are technically open questions.

-

4 hours ago, Reciever said:

I think maybe today I will try putting GrapheneOS on my 7 Pro. I used to always put custom ROMs on my phones and have been gutted that the scene seems to have mostly fizzled out. Unless of course I just don't know where to find that stuff anymore

Cool, please do share how it goes if you do, trying to decide between a Pixel I guess, or a fallback to iPhone. I haven't found any negative reviews or opinions about the former. I think one could build it from source if the pre-built package is a concern.

-

Dell defends CAMM, its controversial new laptop memory

Just came across this gem.. where Dell can't solder RAM, they will use a proprietary module lol

Of course, good old Gordon to the rescue, as always.

-

1 hour ago, ryan said:

I think every angle is relevant to this thread, it's not just about cons. Some benefit can be gained from AI. Do you have any sources for AI artwork? I wouldn't mind seeing it in action!

The question is not whether a benefit of AI exists, the question is what is the balance of pros and cons, and who ultimately stands to benefit the most. Here is my quick take:

1. Billionaires, multimillionaires etc - big thumbs up, as in the short term they will be able to cut cost and extract more value from their enterprises. As AI cannot own assets, they will be safe until things get physical.

2. Senior professionals - net benefit in the very short term, will be able to use AI to automate away a lot of junior work, ultimately will just get squeezed out like everyone else as the capabilities advance

3. Junior professionals / students - I don't know what to say, they are in a bad spot

4. Blue collar workers - on the one hand may benefit from access to AI assistants etc., on the other hand will be increasingly subjected to ruthless Amazon-style management by AI

5. Photographers - done for in the next few years

6. Other artists - depends on the medium, anything graphical is at immediate risk. Will the art be better, probably not, but it will be much much cheaper

7. Drivers - at a fairly immediate risk

I would suggest you open another thread on AI art as it would be a distraction here. Surprisingly, it's the first and probably the hardest-hit area of human endeavour, all I can say is R.I.P. human art by and large, at least in the commercial setting.

As for the GDP estimates, I don't think anyone can estimate this correctly, so would just ignore the marketing figures to be safe.

1 hour ago, serpro69 said:

If you look at these reports above you will also notice that the majority of AI Ph.D. grads went on to work in the industry (in other words - they're in it for the dough), so yeah, definitely biased.

Of course, good link BTW. Moreover almost nobody invests in or goes into AI safety.

It's not just AI research, seems like broader academia is affected as well. There are some right nutjobs at the top level of the hierarchy. Anyone directly involved in one of the labs, but also guys like the computational biologist Manolis Kellis of MIT - just an insane cheerleader, says things like "cool, humans won't have to think anymore, we will have computers for that, we will be working on things like creating new dance moves".

Edit: @ryan Basically, the problem is that automation, even the "dumb" kind, has ultimately increased inequality.

From MIT: Study finds stronger links between automation and inequality

Same from the Fed: The Impact of Automation on Inequality

From BoE: Uneven growth: automation’s impact on income and wealth inequality

A journalist's summary: US experts warn AI likely to kill off jobs – and widen wealth inequality

Now the inequality will rapidly accelerate as everyone continues to be squeezed while the AI advances towards the Holy Grail of complete automation on the blue collar front, while also proceeding to raze the middle class too (which of course will impact the entire ecosystem). No more or severely limited opportunities for smart people from disadvantaged backgrounds. Everyone but the top 1% gets poorer.

-

15 minutes ago, ryan said:

yeah I was thinking from an investment standpoint investing in AI could generate billions off thousands if you put your eggs in the right basket, kinda like buying bitcoin when it was worth pennies. that unfortunately seems to be the only benefit of AI, I don't think creating an avalanche is good for us skiers

Not here to provide financial advice, but you need to be mindful of the risks, notably regulatory. For example, many large corporations have so far banned the use of generative AIs due to the legal uncertainty. Small caps/startups will probably be very volatile, some companies can go out of business overnight as a result of their product suddenly being made obsolete by a new model etc. (especially all this stuff around prompts).

-

14 minutes ago, ryan said:

Some sources are estimating 32 billion in AI generative income, or income due to AI. this could be a big deal. what do you guys think? will AI take over or are we just exaggerating its capabilities? I think if AI can do creative work and create masterpieces of artwork we are in for a ride down the mountain.

source

Sure, we have to unpack "income" though. Concretely, it's a huge area of growth for the tech sector (mostly FAANG+Nvidia), and an even greater opportunity to cut costs through deployment of AI for many other industries. I liked this though:

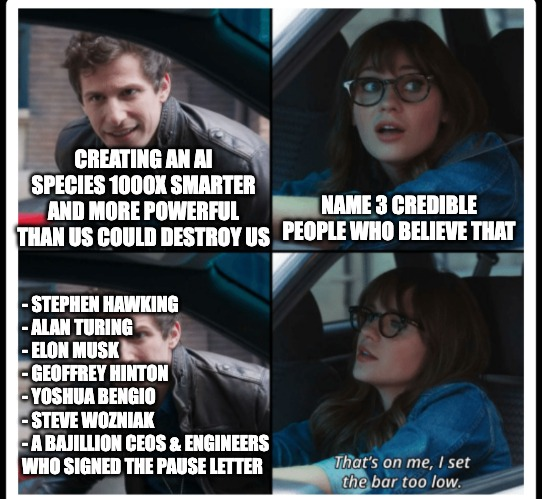

Only a third of researchers think AI could cause catastrophe. Only?

We have to remember that the data is biased, as it takes some serious balls to be an employed (rather than independent) AI researcher and come out with major AI safety concerns. It's rather like a butcher waking up and starting to voice concerns about animal welfare. Some tenured professors can afford this, and only because they are selling a need for what is arguably hopeless "alignment" research.

-

13 minutes ago, serpro69 said:

Stumbled upon this news article yesterday: EU proposes new copyright rules for generative AI. Sounds like a step towards forcing AI-development companies to be more "open" about the data they use to train their models (could lead to other regulations to make them more open in general as well). Time will tell how well the EU will be able to enforce this, but it definitely sounds like a step toward more "safe" AI.

Nice. This could kill generative AI off for quite a while, assuming the legislation proves to have teeth. Imagine the task of producing watertight evidence of rights to use tera(peta?)bytes of data harvested from the Internet lol. And it's only fair.

-

Nothing new. Dell has been charging huge premiums on their SSDs. Luckily they are not always soldered, so you can upgrade on your own. Lately they have become more "clever" and are forcing higher-spec SSDs with higher-spec CPUs and GPUs though. A desktop is one answer to that.

-

Does anyone have the 96GB 6400 G.Skill sticks yet? They are pretty insane, and priced accordingly at +50% over Corsair Vengeance 5600 lol (although I'm sure some of that is just a launch tax).

They do 5600 at 1.1V.... I'm curious what the JEDEC timings look like at that frequency.

The QVL only states compatibility with Z790, although it's not clear why they wouldn't be compatible with Z690.

-

9 hours ago, Reciever said:

That is correct.

@Etern4l also had a picture removed for containing explicit language. Of which, if you recreate it without the use of such language it will be permitted.

Thanks!

Didn't notice, you could have let me know right then or inserted a note in lieu of the image ("image of a naive human startled by the AI capabilities at time t + delta") as that image was a continuation of the slide before it, and trying to complete the point. I'm not sure it's worth retrofitting given the overall level of discussion and engagement with the topic here TBH (with one or two exceptions of course). Maybe it's time to consolidate the best material on first page and post the link on reddit and twitter lol

@Reciever Since we are moderating quite a bit (this looks to be by far the most heavily moderated thread I have seen on the site by moderator actions/posts), which I generally view as a value-adding activity, ideally we would also moderate the thread for informational value and clean up trolling.

For example, if a hypothetical troll with a track record of disruptive behaviour and zero relevant background in the topic, possibly attempting to contribute in a sedated state (if, for example, he advertised being in possession of a stash of legal in his jurisdiction drugs elsewhere on the site), invades with silly messages and personal attacks with no informational content, for example: "conspiracy theory, so funny!", "Tinfoil hat, haha", "Hello, it's me again, what I posted earlier is likely correct for no reason whatsoever, period.", can we remove that kind of inane and inflammatory noise please (otherwise we are no better than reddit and some bad areas of twitter)? We are trying to discuss a serious subject here, so it would be great if we could set a somewhat higher bar on the standard of communication (I don't mind bad grammar, dyslexia etc. as long as some reasonable arguments are being made or attempted). Many thanks.

To keep a sliver of connection to the main topic, I consulted GPT 3.5 on the distraction at hand lol

TL;DR

Trolls are users who engage in intentionally provocative, disruptive, attention-seeking, and often anonymous behavior with a lack of empathy for other users. When determining the appropriate action to take against a troll, moderators should take into account a pattern of repeated "offending" and have clear guidelines and policies in place for dealing with trolls. This may include issuing warnings or bans, depending on the severity and frequency of the troll's behavior. The goal should be to maintain a positive and safe online environment for all users.

How to deal with an internet troll?

Dealing with an internet troll on a forum can be a frustrating experience, but there are several strategies you can use to manage the situation:

- Don't engage: The first rule of dealing with trolls is to avoid engaging with them. Trolls thrive on attention, so the less you respond to their comments, the less likely they are to continue their behavior.

- Report the troll: Most forums have a reporting system that allows users to flag inappropriate behavior. Use this system to report the troll to the forum moderators. Provide specific examples of the troll's behavior, including links to any offensive posts.

- Block the troll: If the forum has a blocking feature, use it to prevent the troll from contacting you. This will also prevent you from seeing any of the troll's comments.

- Respond with humor: If you do decide to respond to the troll, use humor to defuse the situation. Trolls are often looking for a reaction, and responding with a joke can take the wind out of their sails.

- Stay calm: Trolls often try to provoke an emotional response, so it's important to stay calm and composed when dealing with them. Don't let the troll get under your skin.

Remember that trolls thrive on attention, so the best way to deal with them is to starve them of it. By not engaging with their behavior, you can minimize their impact on the forum and create a more positive online environment.

Should trolls be just banned from the site?

In most cases, yes, trolls should be banned from the site. Trolls typically engage in behavior that is disruptive and can harm the community, such as posting inflammatory or offensive comments, derailing discussions, and harassing other users. Banning trolls sends a clear message that this type of behavior is not acceptable on the site and helps to maintain a positive and respectful online environment.

However, it's important for forum moderators to have clear guidelines and policies in place to determine what constitutes trolling and to ensure that bans are applied fairly and consistently. It's also important to give trolls the opportunity to reform their behavior and learn from their mistakes. In some cases, a warning or temporary suspension may be more appropriate than an immediate ban.

Overall, banning trolls is a necessary step in maintaining a safe and productive online community, but it should be done in a thoughtful and measured way.

What are the main characteristics of a troll?

The main characteristics of a troll include:

- Intentionally provocative behavior: Trolls seek to provoke and upset other users by making inflammatory or offensive comments. (check)

- Disruptive behavior: Trolls often disrupt discussions and derail conversations by changing the subject or engaging in off-topic tangents. (check)

- Attention-seeking behavior: Trolls crave attention and will often continue to post inflammatory comments even when they receive negative reactions from other users. (check)

- Anonymity: Trolls often use pseudonyms or fake accounts to remain anonymous, which allows them to post provocative comments without fear of consequences. (N/A)

- Lack of empathy: Trolls often have little empathy for other users and may enjoy causing others to feel upset or angry. (check)

- Persistence: Trolls may continue to post inflammatory comments even after being warned or banned from a forum or website. (check)

It's important to note that not all users who exhibit these characteristics are necessarily trolls. Some users may engage in disruptive behavior or be provocative without the intention of causing harm or upset. It's up to forum moderators to determine whether a user is truly a troll and take appropriate action to maintain a positive online environment.

When determining the appropriate action to take against a troll which ticks all the boxes above, should a pattern of repeated "offending" be taken into account? What would be your recommendation?

Yes, a pattern of repeated "offending" should definitely be taken into account when determining the appropriate action to take against a troll. If a user consistently exhibits the characteristics of a troll and engages in disruptive or offensive behavior on multiple occasions, it's more likely that their behavior is intentional and aimed at causing harm or disruption to the community.

In terms of recommendations, forum moderators should have clear guidelines and policies in place for dealing with trolls, and these should take into account factors such as the severity and frequency of the troll's behavior. For example, a first-time offender may receive a warning or temporary suspension, while a repeat offender may be banned permanently.

It's also important for forum moderators to document instances of trolling and keep a record of any warnings or bans that have been issued. This helps to ensure that bans are applied fairly and consistently, and that users are held accountable for their behavior.

Ultimately, the goal should be to create a positive and safe online environment for all users, and taking appropriate action against trolls is an important step in achieving this.

I mean that's solid and common-sense advice, arguably 75%+ of native English-speaking humans would be unable to produce that level of response within several minutes, let alone several seconds. "My" last contribution on the trolling problem. Hope we can resolve the situation for the good of the site.

-

-

GrapheneOS supports sandboxed Google Play, but yeah, in general it does seem a bit risky to run banking stuff in this manner. In theory it's open source and audited, but...

-

Yes, clearly - it was a 16/32 bit machine. 1987 or so.

-

Why is this exciting exactly? What will be the benefits, and downsides? Not according to the exec, but in practice. Time will tell.

-

6 hours ago, kojack said:

Well, that notification was a waste of time. Alright, I guess there was a reason why you were on my ignore list more than once at NBR. See you there buddy, enjoy your stockpile of weed, hope it brings you more "clarity" and comfort.

While the death of stock photography is a slightly tangential topic, here is my parting gift to you my friend, food for thought (won't help with the munchies unfortunately):

Edit: a good follow-up video on the future of broader photography and creative arts. Bang on IMHO.

BTW A lot of the commentary on the Internet made by people with no CS background falls into the - one would have thought by now familiar - pitfall of assuming what we are seeing now is the apex of AI capabilities or close. That's obviously a gross mistake, as time will continue to tell us (if we continue to do nothing to control this explosive growth in capability, as is likely to be the case for now at least).

Edit: sadly people don't even understand the difference between a sedative and a hallucinogen.

-

Thanks, much cleaner.

I mean yes, "end of our civilisation" is just a nice way of saying "wipeout, concentration camps, or slavery if we are very lucky". We are currently on track. It's probably not easy to see this without some background in AI and computing. Basic evolutionary biology helps. In fact, AI researchers are arguing about this as well, many hoping (hope springs eternal, lol) there is some way to "align" the AI (most likely because they don't want the gravy train of AI research to get shut down), which is at the moment actually pretty hopeless given the lack of theory that would justify any positive outlook, and the current lack of any sort of attempt at best practice is just as abysmal. Here is one more summary:

Pausing AI Developments Isn't Enough. We Need to Shut it All Down

The Internet being an occasionally fun place, there are some memes around this out there:

-

7 minutes ago, Papusan said:

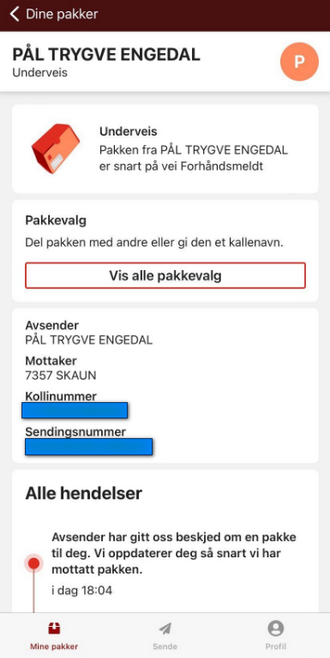

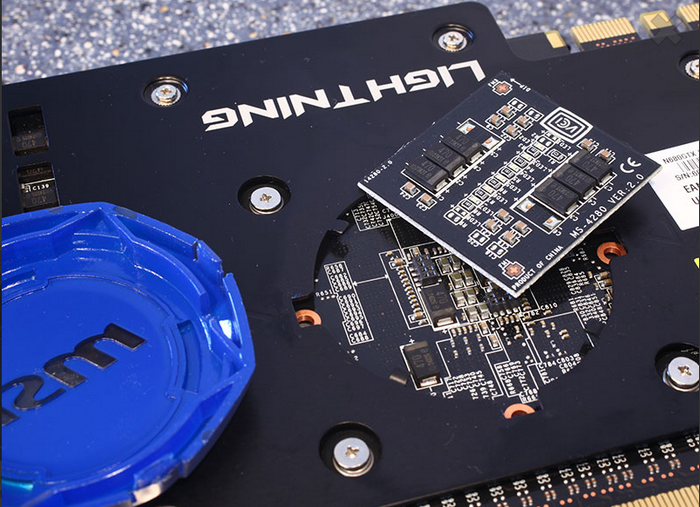

Then I'm finally an owner of a Lightning card from MSI. The card is now shipped. I just hope it works, LOOL

And here I sit waiting for an MSI card to arrive. Not even the brand I prefer, HaHa

Here's the real deal @electrosoft The crocs will show up once I have the card in my hands 🙂

The GPU Reactor add-on card is maybe a gimmick, but if this feature (it has blue lights) is less flashy than the new and modern bling bling RGB puke show, I can live with that nicely 🙂 And from what I have read, the GPU Reactor reduces the voltage drop by 10mV, so it can help stability with maxed clocks..

Bro, how many classic vintage graphics cards do you have in your collection???

-

Once again, the concern is that AI technology (AGI/ASI in particular) could upend or end our civilisation ("existential threat"), in the next few steps of its accelerated evolution. Hope that's clear enough.

As for the detailed rationale, I can only encourage you to re-read all the posts, and re-watch the videos. If that doesn't clarify it, I can no longer assist you.

BTW Can you adjust the font size down? I know it's a bit of extra hassle, but somehow I always do it when copying stuff from the web.

-

11 minutes ago, ryan said:

well I feel embarrassed. I thought it was a take over the world, end humanity thread

The confusion here could be that you are not aware of the fact that ChatGPT is basically just a human-friendly frontend for the underlying technology, which can be used in many other ways.

Clever misinformation is indeed one of the most immediate concerns, and the effects of that can be very profound indeed, as we know.

-

38 minutes ago, ryan said:

this might not be well known nor seem logical or make any sense or have any merit but the reptilians are taking over. here's a picture of Biden's black eyes. which is a clear sign he's a reptile.

but on a more serious note AI has advanced so fast in the last year Etern4l's claims are warranted. I love you @kojack but I also love @Etern4l, you're very smart adults, and I think it's great to have a different opinion. but just try to see him without the tin foil hat and from another angle.

@Etern4l it's like comparing apples to oranges. like comparing Picasso to Sir Isaac Newton. completely different type of intelligence. you guys are polar opposites. I see his point, think Australia. yes exactly how do we know AI will have a negative bias. it won't or it might, but not it will if that makes any sense, think in terms of open ended programming, I used to program Java and well everything has to be accounted for, it's not just as simple as A=b<c-2 lol like it will make sense and take a lot of different variables into account.

also to add to this topic, did you fellows know OpenAI responds to up to 4KB of text and the Python AI does up to 14KB. can you imagine how many lines of text would be needed for AI to destroy the world. 14KB is a lot of info. but to take over the world or destroy us we would need unspeakable amounts of data.

Aug 10, 2021: It has a memory of 14KB for Python code, compared to GPT-3 which has only 4KB, so it can take into account over 3x as much contextual information ...

How much code does artificial intelligence take?

How Does AI Require Coding? While the applications of AI are still expanding, people have yet to discover its total abilities. AI requires coding language most commonly used in Python, C++ and Java. Python is the most frequently used of these programs, and the most popular libraries for AI are Tensorflow and PyTorch. Mar 30, 2022

That 4KB is just the context memory, we don't know how much knowledge GPT-4 has been trained on but terabytes would be my conservative guess, and BTW in GPT-4 this is already up to 100-200KB if I remember correctly. Again, nobody is saying that ChatGPT can literally take over the world, it wasn't even trained to do so. ChatGPT will affect the economy, and the underlying technology is powerful enough to drive potentially dangerous applications. On top of that, the companies involved are already working on systems which far surpass GPT-4.

Another problem is transparency. "OpenAI" has quickly become ClosedAI, there has been no paper explaining how ChatGPT works exactly. We just don't know much about it. Here is another researcher to elaborate:

-

System Shock Remake

in News & Announcements

Posted

That was an amazing game from Origin/Looking Glass, one of the best studios at the time. The bar is very high, I hope "Nightdive Studios" are up to the challenge. Production value looks low, they went for a cartoony "retro style" resembling the original 256 colour VGA graphics, not ideal IMHO.

Edit: looks like this is more of a remaster, one of a few, and actually a large step back from a similar 2017 version: