Everything posted by Ashtrix


*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Alex shows how the wiggle-test BS that all the YT content creators, from GN to everyone else, used to "make sure it's seated right" is disproved by the MSI power connector, and he shows the blatant design flaw of this garbage connector..


Seasonic's Prime TX series uses all 12V pins for the PCIe outputs and labels them CPU/PCIe on the PSU side, so you can pull all the watts you want as long as you don't exceed the maximum capacity. Of course the cables differ for the CPU EPS line vs the GPU lines. Not all PSUs can do this, but as you said, a good PSU should suffice. Clearly the existing 8-pins could handle it day and night, 24/7, without any issue. But Nvidia wanted to save on redesigning their stupid heatsink and the denser PCB design they have used since Ampere's V-shaped PCBs (a result of the heatsink/shroud design). All in all, they just banked on the smaller connector, called it a day and forced PCI-SIG/Intel to push it. Note especially how the Ampere lineup uses a half-16-pin design with only 12 pins; ironically, that one had a latch while the 16-pin does not. Plus none of the 3090s got burnt, and no 3090 Ti either, so that's a moot point. Also, the heatsink is not some mega tank: if you scrape the Founders Edition heatsink fin edges with a plastic pry tool, or even just touch them with your nails, you will lose the black paint. That's how awful their $1500 heatsink fins are. Meanwhile, the Alienware 17 heatsink fins from a decade ago never lose their black coating like that... Well, it happened to me accidentally, leaving a small imperfection on a 3090 FE. Nvidia also got away with poor cooling on the backside VRAM of the 3090 FE, claiming Micron GDDR6X is fine at 118C. That's scorching hot, and no matter what, with heat cycling and the opposite side of the VRAM being actively cooled, that heat will bleed everywhere and cause a mess in the long run. Garbage company end to end, especially with the new VRAM fiasco and the BS fake frames. Cablemod support is really good, and their cables are good quality too. Not that they are way superior, they are just good; I noticed the stock Seasonic cables have gold-plated pins on the PSU end while Cablemod's do not.
And they did a lot of design work, even though some QC issues exist, like PSU pinouts not matching on some custom orders and the 12VHPWR pin alignment I showed. 8-pin connectors also do not always align, even on stock PSU cables, but they do not have this BS issue simply because the contact surface area on the 8-pins is larger than on the 12/16-pin BS. There's simply more metal mass, so any mishap has far less effect. The 12VHPWR needs a v2 overhaul, not just extended sense pins, and Nvidia needs to compensate every single owner who bought a GPU with this garbage connector. I know neither is going to happen, but I hope for a class action lawsuit like the GTX 970 VRAM one.


HyperX Alloy Origins is a good choice: its chassis is entirely aluminum and it has a removable USB-C cable. The downside is the UWP app for managing keyboard features. BUT the keyboard itself has 3 onboard profiles (which is very rare; many keyboards, including Corsair's, do not have that), so you do not need the software once you are done customizing. Another downside, depending on the user: it does not have media keys. https://hyperx.com/products/hyperx-alloy-origins-mechanical-gaming-keyboard?variant=42330451148957


Intel won't go anywhere. And if Intel dies, that means DIY is dead, because x86 will be dead. Chipzilla status is not achieved overnight. It took them years of technology advancement, and then fumbling as well under Brian Krzanich. Intel had the world's leading-edge nodes, first to 22nm, first to 14nm, and yet they faltered. They now have TSMC contracts for silicon fabrication. But they are not going to hold that status for long; ARM is closing in fast. Ampere announced brand-new ARM server processors, and AMD already has Bergamo ready, while Sapphire Rapids is DOA vs EPYC Genoa. Their DC business posted a loss last quarter. That is why Intel is speeding up Emerald Rapids (more cores vs Sapphire Rapids) and Sierra Forest (E-core Xeon to fight Bergamo and ARM). https://www.tomshardware.com/news/amd-will-hold-20-of-server-cpu-market-in-2023-analysts-say L4 is a given; I do not think they can make a chip with just 6 P-cores and beat the 7800X3D and the upcoming RPL Refresh. This is not a rumor or speculation: Adamantine cache (Intel's L4 branding) patches were done in the Linux kernel too. Intel's new MCM is chiplet/tile based: they are putting a compute tile + Xe GPU tile + SoC tile, which will have P + E + LP-E cores and L4 access across the board, like the i7 5775C. L4 is slower than AMD's stacked X3D L3, but given Intel's new non-monolithic design, it may depend on how they execute.
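Why an extra cache level can help games even when it is slower than AMD's stacked L3 can be shown with the textbook average-memory-access-time (AMAT) formula. Below is a minimal Python sketch. Every latency and hit rate in the example is a hypothetical placeholder, not a measured figure for any Intel or AMD chip.

```python
def amat(levels, memory_latency_ns):
    """Average memory access time (AMAT) for a multi-level cache hierarchy.

    levels: list of (hit_latency_ns, local_hit_rate) tuples ordered from L1
    outward. local_hit_rate is the fraction of accesses *reaching* that level
    that hit there. Every access that reaches a level pays its latency.
    """
    total = 0.0
    reach = 1.0  # fraction of all accesses that get as far as this level
    for hit_latency_ns, local_hit_rate in levels:
        total += reach * hit_latency_ns
        reach *= 1.0 - local_hit_rate
    # Whatever misses every cache level goes out to DRAM.
    return total + reach * memory_latency_ns


if __name__ == "__main__":
    # Hypothetical numbers for illustration only.
    dram_ns = 80.0
    three_levels = [(1.0, 0.9), (4.0, 0.5), (12.0, 0.5)]
    with_l4 = three_levels + [(30.0, 0.6)]  # slower than L3, but beats DRAM
    print(f"L1-L3 only: {amat(three_levels, dram_ns):.2f} ns")
    print(f"with L4:    {amat(with_l4, dram_ns):.2f} ns")
```

With these made-up numbers, the L4 brings the average down from about 4.0 ns to about 3.55 ns. A 30 ns L4 hit replaces an 80 ns DRAM trip for most of the accesses that miss L3, so how much it helps depends on the L4 hit rate a real workload achieves.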


They are launching the new processors first on Intel 4, aka Intel 7nm, which supersedes Intel 7, aka 10nm. Also note TSMC N7 is inferior to Intel 7, but the issue is that Intel is 5 years late. Intel 4 is now denser than TSMC N5 as well. Intel launched Tiger Lake 11th gen on 10nm (SuperFin) for BGA trash, and relegated Rocket Lake to suffer on 14nm even though it was designed with 10nm in mind, cutting 2C/4T and downgrading the IMC. They knew LGA1700 would sell a lot thanks to the PCIe 4.0 chipset and new I/O, and with a disaster like RKL the graphs would look good vs 10th/11th gen. And BGA would get sales regardless, because Intel 11th gen BGA was on 10nm and sold a ton, since AMD was not that competitive in BGA land at the time. Plus ADL was on 10nm ESF, which is 10nm+ now, aka Intel 7. So Intel is repeating the same thing: they are trying out their Intel 4 node on BGA trash and giving it the most important thing, L4 cache this time, on a multi-tile design. With the new baby LP-E cores added to the mix, they can mint new BGA chassis and use them for 1-2 years again, so OEMs will be on board too. Plus smaller silicon die tiles. I think TSMC is involved too, for their Xe GPU tile. As for the desktop LGA1800 socket, they will launch those as a stopgap. The MTL-S Intel 4 desktop processors will beat RPL/RPL-R and Zen 4 X3D in games, so they will advertise "GAMING" a lot to get sales volume and won't bother with SMT/MT workloads. Remember, most computer builds are now just pathetic gaming boxes. And once Zen 5 launches with 16C32T, Intel may launch Arrow Lake-S with 8P and 16E to fight it. But I do not get one thing here: Intel launching 2 CPU refreshes in a single year? Maybe they know AMD Zen 6 will not use the AM5 socket, so they can't use LGA19xx or whatever for Lunar Lake / Nova Lake in the future. But if AMD also uses AM5 for Zen 6, then it's toast lol. But this is all LGA. BGA will be selling boatloads of their new MTL processors. The Intel management team is absolute BS.
They had everything: the i7 5775C, a Broadwell L4 eDRAM CPU that beats the 7700K and approaches 10th gen i5 performance in games. Imagine if they had kept using it from 5th gen through 10th and 12th+; the refinements would be insane. But they did not care, as it costs money, and Intel management and investors kept eating that data center market money. Once Brian left after Spectre/Meltdown and the 10nm disasters, the investors jumped ship to Apple, management is rotting it away, and there's a ton of talent bleed. They even let Jim Keller leave in the middle of his project's design. Now AMD and ARM are finally destroying their DC/HPC market share, and x86 share is also ceding to AMD. Intel responded by axing Optane/3D XPoint (the world's best storage technology, and it will never be replaced), the SSD NAND business, the 5G modem business and a lot more, and now they are adding the eDRAM back to their processors. Their previous quarter was the worst in the entire history of Intel Corp (HPC is losing money... only Client made them cash).


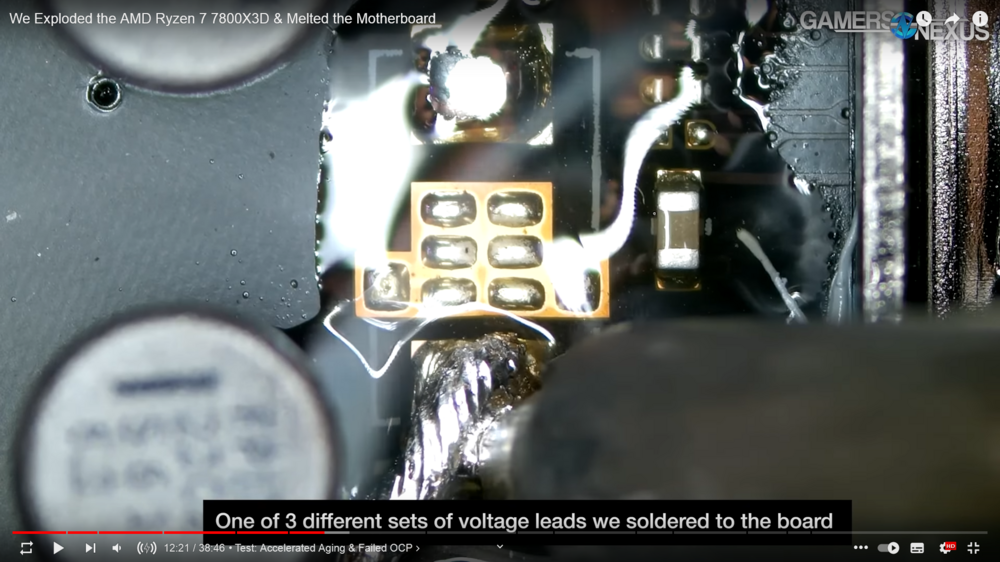

Thanks for this post, bro Papu. I finally got the Cablemod kit the week before last. I was debating a lot of things regarding NVMe SSDs, which I will try to cover in a later post. In short, Samsung, WD and all NVMe drives are a joke, because the M.2 standard is not used in enterprise (they use drives that are not M.2 2280), so the quality is garbage (controllers, firmware, NAND flash, BIOS issues, SLC caching). Anyway, I noticed the bottom female connector on the 90-degree adapter I got from Cablemod was not flush, so I emailed them and they kindly shipped me an RMA replacement without asking anything. Now that I have the new one, I started inspecting it. Here are the photos: if you look at the connectors, the female pins on the newer 90-degree adapter are not perfectly straight. I also have the Cablemod 12VHPWR direct PSU cable; it's still unopened, so I took a look, and those pins are nicely straight. I will send Cablemod another message about this and tell them to improve their manufacturing process. Below right is the new adapter; look at the first row... Second, I found this video which shows the difference from the Nvidia stock adapter. Notice how the Nvidia adapter is perfectly straight while the be quiet! one is not. Look at Igor's comparison of NTK (tulip design, which the Intel ATX 3.0 revision also makes mandatory) vs Astron (dimple, outdated). Also, for some reason, Cablemod's 12VHPWR cables run the sense pins as 2 unsheathed naked wires. Not sure why that is the case. Maybe it's my OCD, but it does not look streamlined like the Nvidia adapter, and I bet all the custom cables have this flaw. Why is this important? Because, as per the zoomed images Igor's Lab provided, the new NTK tulip design grips the male pins of the GPU connector, and imagine what may happen over time with such variances. I suppose the Northridge videos show what can happen, since most of that lot are Cablemod parts.
8-pin connectors are way thicker and more robust; they never had this issue and won't, even if the pins are not perfect, due to simple common sense: the 8-pins have more metal contact mass than the 16-pin, whose pins are really feeble, and the current through each pin is also higher on the 16-pin than on a single 8-pin connector. Period. I already mentioned how AMD's R9 295X2 handled 500W using dual 8-pins, but I'll mention it again, and we all know the Intel SKL-X HEDT i9 10980XE draws over 600W from 8-pin CPU cables without issue when OCed to 5.1GHz all-core. Why does this BS company Nvidia have to go with this garbage design?? Respect to Northridge Fix for saying this is not some "user error", as GN put it, but rather a serious design failure. GN still does not think this is a flawed product: look at their fancy new site, which lists some failures and conveniently says Nvidia's issue is "fixed as per GN standards", but the expert repair guy is saying NO. His video catalog on YouTube clearly shows the dude knows his stuff. Buildzoid also mentioned how the design is garbage vs the traditional 8-pin. Really a mess.
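The per-pin current point can be put in numbers. Below is a minimal Python sketch under stated assumptions: 12 V pin counts of 3 (PCIe 8-pin), 4 (EPS 8-pin) and 6 (12VHPWR). The load is assumed to split evenly across pins, which real connectors do not guarantee, and that uneven sharing is part of the problem. The 295X2 case ignores the power the card draws from the PCIe slot, and the 10980XE case assumes two EPS 8-pin connectors, since the post does not say how many were used.

```python
# 12 V current-carrying pins per connector type.
PINS_12V = {"PCIe 8-pin": 3, "EPS 8-pin": 4, "12VHPWR": 6}


def amps_per_pin(watts, connector, count=1, volts=12.0):
    """Average current per 12 V pin, assuming the load splits evenly."""
    return watts / (volts * PINS_12V[connector] * count)


if __name__ == "__main__":
    cases = [
        ("12VHPWR at its 600 W rating", 600, "12VHPWR", 1),
        ("single PCIe 8-pin at its 150 W rating", 150, "PCIe 8-pin", 1),
        ("R9 295X2, 500 W over 2x PCIe 8-pin", 500, "PCIe 8-pin", 2),
        ("10980XE OC, 600 W over 2x EPS 8-pin (assumed)", 600, "EPS 8-pin", 2),
    ]
    for label, watts, connector, count in cases:
        print(f"{label}: {amps_per_pin(watts, connector, count):.2f} A/pin")
```

Under these assumptions, a 12VHPWR at 600 W averages about 8.3 A per pin. A single PCIe 8-pin at its 150 W rating averages about 4.2 A. Even the two extreme 8-pin examples land around 6.9 A and 6.25 A.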


Huge thanks to brother @Tenoroon for his kind gesture of lending me his GPU. The 860M 2GB card came well packed, and I have been running it since last Sunday with the same loads; one day I left it on overnight with no issue. And today I played 6 hours of Dota 2 with friends, at 70W max power consumption on the GPU. So far not a single power-off shutdown. It shut down only once on the iGPU, 2 weeks back; perhaps the VRMs got overloaded and heated up after loading both the GPU and CPU in Dota 2. It never happened again under the same load. Now, with the same GPU workload as before, there is no issue, while the 980M would simply shut the computer down at random while playing music, watching a movie, etc. So far so good. I hope this means the MXM slot and motherboard are fine.


I was about to post this... saw it yesterday. Glad to see it. All these techtubers are monetizing the AMD-ASUS situation to the max and fooling people en masse, especially GN, throwing around a bunch of electron microscope zoom pics. Look at the comments: everyone is simply salivating, and no one is really trying to find the truth or even thinking about the inconclusive outcome. Basically it's known to all of us here: socket sense vs die sense. ASUS Apex boards on Intel, from the Z590 series, allow die-sense readings, but only via a hardware switch on the motherboard that enables the feature, vlatch (bro @Papusan should know it..). The ROG engineer in the video above knows how the AMD boards are made: unlike Intel, there's no physical switch, at least on the C8H, so they just built it into the top-end AM5 boards, and any HW sensor polling results in an accurate reading (perhaps related to how Intel vs AMD motherboard design works), in our case HWiNFO. Now look at Igor's Lab and the HWBusters YT channel: both use the same tool to monitor the motherboard readout points and show it in the same UI, claiming the 0.05V is extra over AMD's fixed 1.3V cap. Such reputed testers failed to capture another data point. Both of them have Gigabyte boards, use the same tool and miss the same thing. GB posted a video too (check below) highlighting how the HW voltage readouts are not accurate because of the die-sense integration (they are dumb as a rock though; why not just speak like ASUS, lol. It's just muted footage, and a normal person won't understand even 1% of it, let alone grasp all that). It's really insane how they all rely on a single data point. GN used the readout point near the PCIe slot, as we see in their video snapshot below... Yeah, it's all cool lol, but why completely ignore HWiNFO? These lads don't deserve to be called techtubers. Just another YT reviewer crowd capitalizing on sensationalism.
AnandTech captured data on an ASRock X670E board and used HWiNFO, which is why their data is accurate, but they also missed the die-sense point. However, their testing is interesting, as they test total power draw, current and temperatures across all AGESA revisions. Here's more from the real deal in HW, Buildzoid (he already stated how 12VHPWR did not have good headroom vs the 8-pin): https://nitter.snopyta.org/Buildzoid1/status/1658131479275216896#m He clearly mentions how the testing is not accurate. Second, if you go all the way down the replies to that tweet, some fellow Nords of bro Papu checked it themselves by following the ASUS engineer's method and found the same result. They used HWiNFO plus the points the ASUS engineer mentioned to read the actual die-sense VSoC. The conclusion: AMD's new AGESA really locks it down, even with increased LLC. AMD has an MCM design, and I was not aware of how VSoC relates to die sense; if it were Intel, die sense would have struck me in a second, since there we only check VCore and there's no MCM design with another voltage point to read.
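The socket-sense vs die-sense gap is essentially Ohm's law. Under load, the voltage at a readout point on the board sits above the voltage at the die by the current times the resistance of the path in between. Below is a minimal Python sketch. The 1.35 V reading, 20 A and 2.5 mOhm are made-up numbers chosen only so the gap matches the ~0.05V discussed above, not measurements from any AM5 board. The model also ignores LLC and how a given board routes its sense lines.

```python
def die_voltage(v_readout, current_a, path_resistance_ohm):
    """Voltage remaining at the die after the resistive (IR) drop between
    the board's readout point and the die: V_die = V_readout - I * R."""
    return v_readout - current_a * path_resistance_ohm


def implied_resistance(v_readout, v_die, current_a):
    """Path resistance that would explain the gap between two readings."""
    return (v_readout - v_die) / current_a


if __name__ == "__main__":
    # Hypothetical: 1.35 V at a socket-side readout, 20 A of SoC current,
    # 2.5 milliohms between the readout point and the die.
    print(f"die voltage: {die_voltage(1.35, 20.0, 0.0025):.3f} V")
    print(f"implied R:   {implied_resistance(1.35, 1.30, 20.0) * 1000:.2f} mOhm")
```

The point of the sketch is that two tools reading two different points can both be "right". A socket-side 1.35 V and a die-sense 1.30 V are consistent with each other once the IR drop is accounted for.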


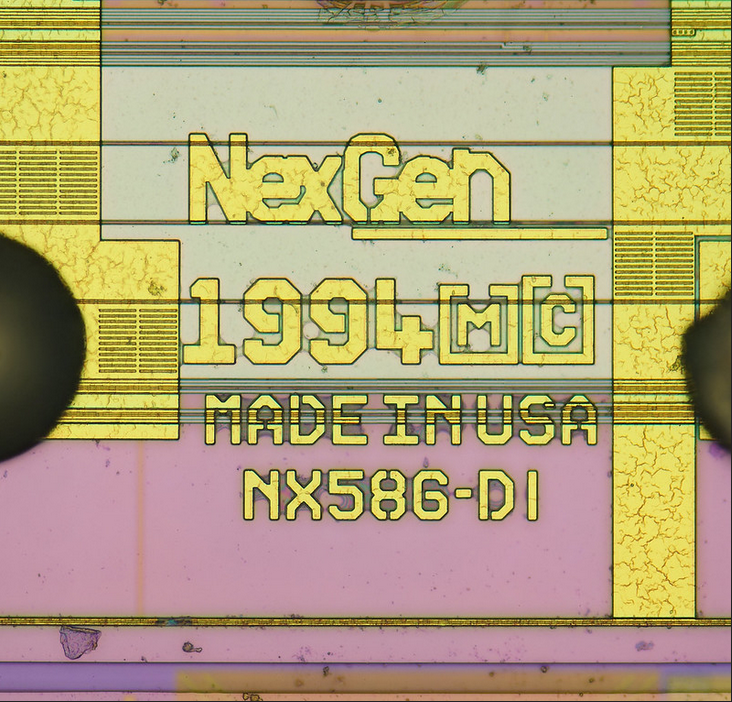

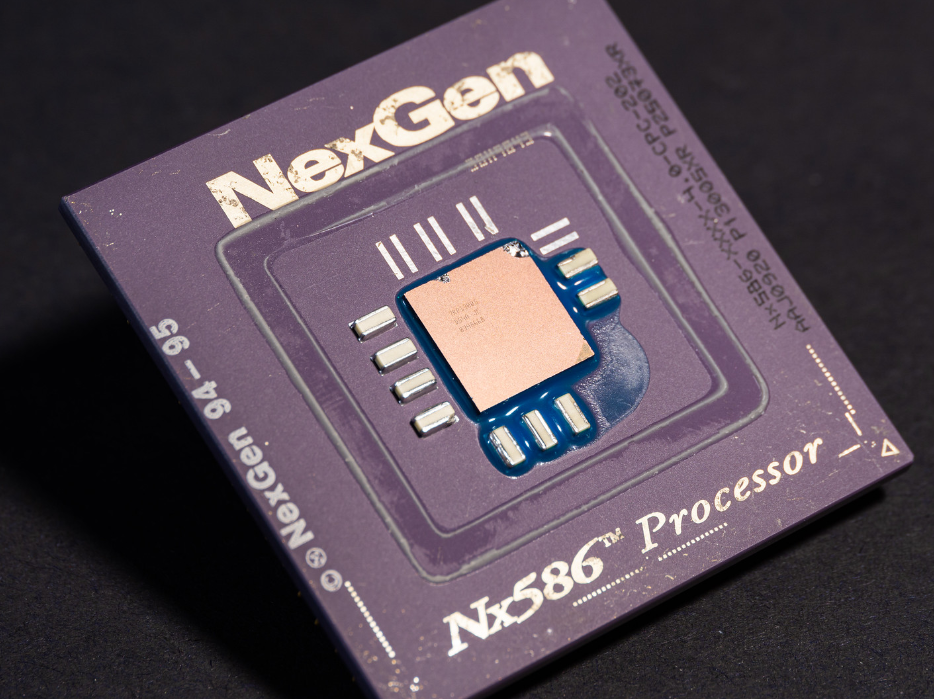

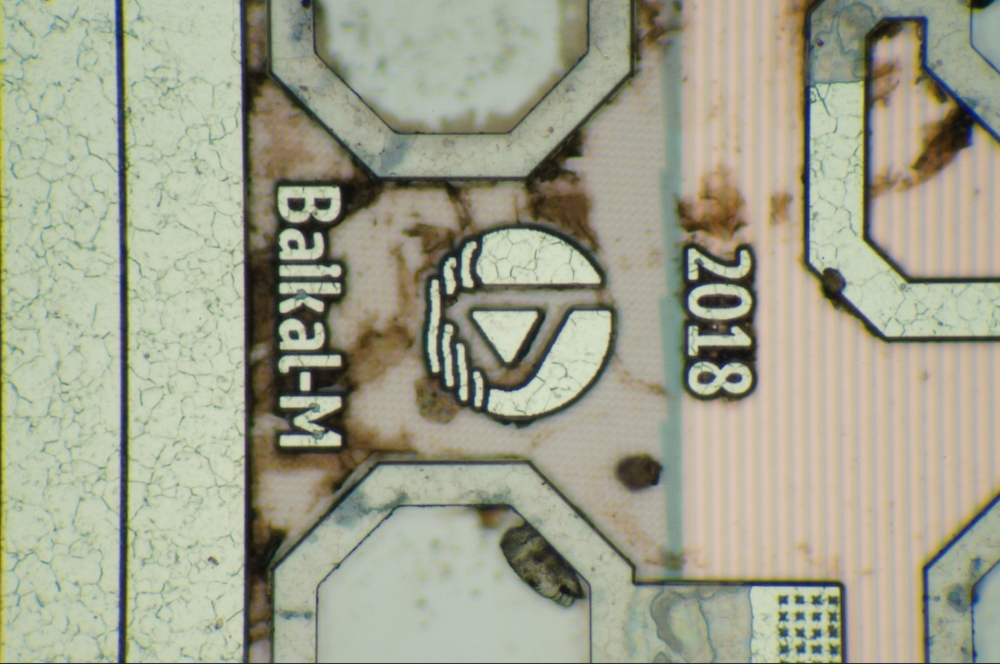

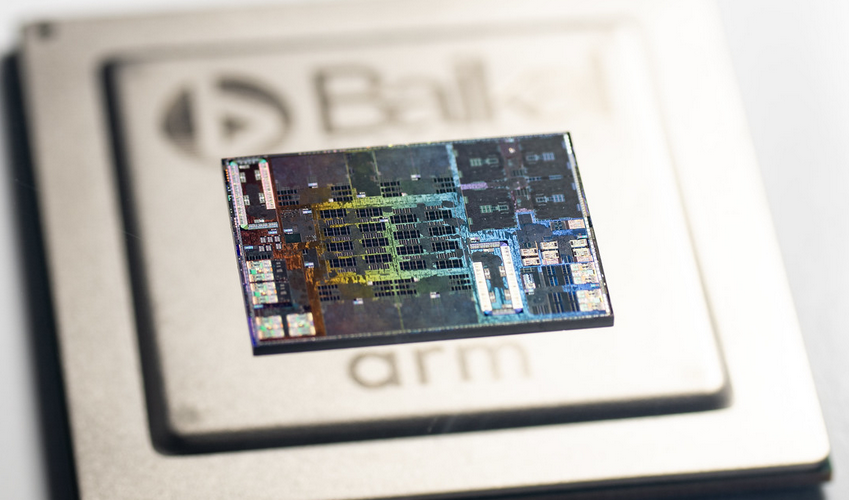

I don't want to criticize him too much, but here's my take. The entire video basically consists of acoustic scans, X-ray imaging, cross-section microscopy and then an even deeper electron microscope pass. A ton of technical terminology is thrown at the viewer, and what we are looking at is nanometer-scale transistor packaging from the world's most advanced lithography, TSMC N5, under a microscope; it would look the same for older processors or semiconductor wafers of any node. Then they go to their FA lab contact, who throws even more technical terminology at us, like a list of 20 or more possible causes of failure. Ultimately there's no root-cause conclusion, which is what was hinted at and discussed in their previous piece. This just makes Steve look super smart, although his script basically comes from the lab team; credit where due, it does look interesting under the microscope, but that's all. I expected this outcome, but I wanted to wait and see. It's simple: the problem is purely an electrical engineering one, and we cannot really see anything in it, as 99.99% of people do not know this high-tech stuff. People do not even know how TXAA (an Nvidia technology) blurs their games to garbage, because texture resolution gets degraded by the AA blending, which is the default in all AAA games and cannot be disabled. Then they upscale that lower-quality image with DLSS and apply a sharpening filter, instead of using DLAA, which works at native resolution, unlike DLSS!! Let alone VLSI engineering. Fact is, anything we look at under an electron microscope, be it our skin, a piece of PCB or a CPU, always looks different and interesting, simply because of naked-eye vs microscopic scale of perception. It just shows how over-current and over-voltage disrupt the electrons and change the chemical composition of the PCB and the CPU; it's entirely expected to see copper and other elements flowing in and out of each other...
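On the DLSS vs DLAA point above, the difference comes down to how many pixels the game actually renders before reconstruction. Below is a minimal Python sketch. The per-axis scale factors are the commonly cited DLSS 2 defaults, treated here as an assumption, since individual games and newer DLSS versions can use different ones.

```python
# Commonly cited default per-axis render scales (assumption; games can
# override them). DLAA renders at native resolution, i.e. scale 1.0.
RENDER_SCALE = {
    "DLAA": 1.0,
    "DLSS Quality": 2 / 3,
    "DLSS Balanced": 0.58,
    "DLSS Performance": 0.5,
    "DLSS Ultra Performance": 1 / 3,
}


def internal_resolution(out_w, out_h, mode):
    """Resolution the game renders before upscaling to out_w x out_h."""
    scale = RENDER_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def native_pixel_share(mode):
    """Fraction of output pixels that are actually rendered each frame."""
    return RENDER_SCALE[mode] ** 2


if __name__ == "__main__":
    for mode in RENDER_SCALE:
        w, h = internal_resolution(3840, 2160, mode)
        print(f"{mode:>22}: {w}x{h} ({native_pixel_share(mode):.0%} of 4K pixels)")
```

At 4K output, DLSS Quality renders 2560x1440, about 44% of the pixels, and reconstructs the rest. DLAA renders all of them.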
For example, look at these beautiful die shots: the NexGen Nx586, a 440nm RISC processor (it tried to rival Intel's x86 Pentium at the time; tried but could not..), and the Baikal M1000 28nm (a Russian ARM-based processor made on TSMC 28nm). However... ASUS blaming? I won't say no to that. ASUS is a jackass company. Look at their forum; they just killed it. Their BIOS QC department is insanely bad, literally shipping garbage that does not work (to recall: on Z590 boards the beta BIOS with the RTX 40 PCIe 4.0 fix works and the stable one does not, and the stable one has anti-rollback, a nasty new practice by ASUS). Then there were the boards I had to return... that was hell. Go to the AMD forum: the entire ASUS section is overrun with issues. Same for Intel, especially their Z690 series, which is a massive POS. Shame, because their BIOS UI is top-notch, but the BIOS itself is no longer good, and add the other QC problems and their software on top.


I think the same: I'm assuming it has gone bad since the black screening and is causing some kind of trip-off. I have now run the machine for 48 hours without any shutoff. I loaded the CPU to the max by playing Dota 2 on the iGPU (Intel HD 4600) with the usual 3.3GHz clock and the undervolt that has been stable for years. Surprising that the Source 2 engine is still so optimized after all these years that it runs on an outdated Intel HD chip from 2013. So 70-75C max on the CPU package under that kind of load, and no shutoff. It has some hiccups while initially loading the game before a match, but that's down to the lack of a GPU and the CPU, especially the MQ chip's VRM limitations, not the power-cut issue. And of course I ran the machine all night again for a P2P download. This confirms, at the very least, that the CPU VRMs are not at fault, nor the CPU power delivery as a whole from the DC jack in. As for the 980M GPU... if you recall, I created that 980M black screen thread back at NBR, which had over 100 pages of discussion on how poorly these were made; unfortunately I never got the MOSFETs added to the GPU. As for a shunt mod, nope. The card had the Prema vBIOS, but it ran hot at higher voltage, so I use the Svl7+Johnksss version. So maybe the black screening got worse and fried something on the VRMs that is causing this kind of issue; fingers crossed it's not the MXM slot power delivery. As for the sparks, I remember that when I plugged in a new battery the port sparked, but the machine and battery worked for a long time afterward. I hope this AW 17 R1 is as resilient as the other Rangers that still live.


Update: some good news on the AW17 R1 Ranger. I removed the MXM card altogether and ran the machine for 24hrs+, and last night I did not shut the PC down and kept it powered on. No shutdown, power cut or throttling. It kept going, downloading P2P all night. It still hasn't shut down and I've kept it running; now I'm going to watch it for the next 24 hours. If that holds going forward, then I think it's the MXM GPU failing when requesting power through the MXM PCIe slot? Is that possible? Because it fails irrespective of power draw: starting Heaven does not make it shut down immediately, nor does playing Dota 2, but it can happen anytime at idle, while downloading something or watching a movie, and it even happens when the GPU is not used at all, just connected to the MXM slot in SG mode with only the iGPU in use. So I suspect some electronic failure on the MXM VRMs over time is causing it. Any ideas..? I think brother @Tenoroon's generous offer would help me run one final test: use his 860M to check whether it's the MXM slot or the 980M MXM GPU. And if it's the MXM GPU, I'll be very happy, since no motherboard replacement is required and I can just try to get either a Dell Quadro P5000 or an HP Quadro P5200 MXM as a replacement. On another note, the Cablemod order is finally complete and I got the FedEx email; it is in China right now.


Thanks a lot for the suggestions. Yeah, that MSI option seems really compelling, like the Precision 7720, but all these laptops (X170SM/KM, MSI, Precisions and older Clevo DTRs) are hard to find in good, well-maintained condition. I'm also trying to see if I can get this AW17 R1 repaired, as I like the machine and do not want to simply dump it. The P775 is kinda too old, so I did not research it; the P870 is a good choice, but then again I thought the X170 series is newer, with more recent LGA desktop processors, and works better as a new purchase. Especially given the age of the P775 and P870 platforms (and my old machine too), they would have to have been pampered, and those are kind of unicorns. I saw the Framework, but I thought it's too new for a gen 1 launch; as you add modules it gets more expensive, and the hardware performance is not much for the price, especially when you look at the price-to-performance of a desktop. The only reason I was into socketed Clevos and others is that I feel their motherboards are built better than the welded versions. The initial idea of a BGA Lenovo Legion 7i, I dropped. $2000 of BGAware, and for that cash I could just pick up a brand-new X670E AMD AM5 mATX box that would smoke even the next gen, since the AM5 socket is Zen 5 ready; forget the BGA junk. The BGA one might live as long as the warranty lasts, but it's going to get expensive later on, and replacement parts will be insanely expensive. I see AW17 parts (including the motherboard) being very cheap, while the BGA AW parts cost 5x more lol. For that money a P5000 MXM could be bought, and even having a P4000 as an option makes it outlast a lot of machines that came after it in welded format. Premamod is a mega bonus perk and an incentive, but BGA is a massive letdown. You mean there are laptops with Premamod + BGA? But the more I think, the more old NBR BGA-hate threads I recall haha.

Greetings folks, I was thinking about this machine as a secondary PC for myself, it being the last LGA socketed option vs BGA land (yeah, MXM is dead and the GPUs are not even close to desktop parts, but at least it's not a thin-and-light jokebook, I guess). If you don't mind, can anyone clearly say whether the shutdown problems mentioned apply to the entire X170KM line, or whether only a few machines exhibit that behavior, or just 11th gen on this machine? Sadly Prema is not there for this machine, I guess, and a Russian unlocked BIOS option exists (edit: sorry, I did not check properly; ViktorV is the contributor of the unlocked BIOS). Does it provide any important features? Also, using a 10900K in the same machine, can it get back to 3200MHz DDR4 vs the 2933MHz max for the 11900K? Anything else important? I mean, is this machine really worth getting, or is it plagued by EC/firmware/BIOS bugs that ruin the ownership experience? Is the heatsink fitment good or not? Sorry if I'm being straightforward here; I hope you guys understand, as money and time are valuable. Please feel free to mention any other important aspects I missed. Thanks in advance.
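For scale on the 3200 vs 2933MHz question: theoretical peak memory bandwidth is just transfer rate x bus width x channels. Below is a minimal Python sketch, assuming a standard dual-channel setup with 64-bit channels. It gives theoretical peaks only; real-world gains are smaller, and timings and latency matter too.

```python
def ddr_peak_bandwidth_gbs(mt_per_s, channels=2, bus_width_bits=64):
    """Theoretical peak DRAM bandwidth in GB/s (decimal):
    transfers per second x bytes per transfer x channels."""
    bytes_per_transfer = bus_width_bits // 8
    return mt_per_s * 1e6 * bytes_per_transfer * channels / 1e9


if __name__ == "__main__":
    fast = ddr_peak_bandwidth_gbs(3200)
    slow = ddr_peak_bandwidth_gbs(2933)
    print(f"DDR4-3200 dual channel: {fast:.1f} GB/s")
    print(f"DDR4-2933 dual channel: {slow:.1f} GB/s")
    print(f"difference: {fast / slow - 1:.1%}")
```

That works out to 51.2 vs about 46.9 GB/s, roughly a 9% difference in theoretical peak.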



I guess the time has come, sadly. Yesterday it shut down on the iGPU in SG mode, and I had disabled the Nvidia GPU in Device Manager too. Maybe one last test is to remove the MXM card and see.... @Vasudev, I did not update the machine to any new microcode; the BIOS is also the old A14+. Unfortunate that your Echo is failing too. Yeah, I agree there's not much in BGA land now; however, I checked most of the BGA laptops and some videos yesterday. Perhaps there's some VRM component failure or a PCH-related issue; I don't know if a CPU failure can cause such things too.., can't be sure at all, it's hard to find out. Now I'm just thinking about 3 options for a new machine: a Clevo X170KM with a lot of baggage attached.., a half-BGA Precision 7720 refurbished for cheap, or a BGA Lenovo Legion 7i 2022. Unfortunate.


That is a good idea; it never struck me. Thank you!! I have the 120Hz panel, which I was saving for a Pascal 1070 MSI or P5200, and the eDP cable too. But for now only the 60Hz LVDS panel is installed. So I did what you suggested: pressed the MUX key combo Fn+F5, it asked me to reboot to switch, and then the Dell ePSA diagnostics worked (before, when it was running in PEG-only mode, ePSA wouldn't boot, perhaps due to the custom vBIOS on the 980M). As @Mr. Fox asked about ePSA: yeah, it passed. The only error was "battery not installed", so it said "One or more errors found" and displayed the failure; the last pass was in 2016. But when I checked the results tab, everything passed except the battery, so ePSA needs every single test to pass before it reports the machine as a 100% pass. And this is not a thorough test but the normal one; I think the deep test runs more thorough memory tests. After booting into Windows 8.1 Enterprise 64-bit, when I right-click, NVCP does not exist, as expected. But when I tried to install the Intel HD 4600 graphics drivers, the installation failed to start. I did not remember how to do this, so I went into the BIOS and switched Display Graphics from SG to IGFX... but in Windows it did not change anything. I tried older drivers from Dell and they installed, BUT it black-screened and the OS display got corrupted. Luckily we have an unlocked BIOS, so I flipped the video adapter back to PEG mode and restored the OS, which removed the bad Intel HD drivers. Later I ran DDU to remove the Nvidia drivers, and now the Intel HD driver installed successfully. The 980M no longer shows up as such; it now shows as a Microsoft Display Adapter. That's expected, I believe. Basically, I had to run DDU first. Anyway, no more 980M for now. I will run the machine exclusively on the iGPU and see how it does. Thanks.


Thank you very much for the test option; will let you know. The problem is not just any GPU load; it will shut down at any point. Watching a movie/video (I use madVR for video processing in MPC) is a minimal load, and as I mentioned, even with the GPU capped to 30% power and -810MHz on the core it may shut down, and once it does, it won't power up instantly; not sure if that's due to the GPU being unable to get power and not turning on, etc. Browsing the internet, just sitting idle, it can happen anytime; it's a 100% random occurrence, at least from what I'm seeing. Thanks, brother Fox. Yeah, unfortunately not a single machine out there is interesting or worth spending money on; everything is just BGA cancer made with one thing in mind: planned obsolescence. And while they're at it, they rip you off hardcore. There's no reason to price a BGA turdbook with gimped HW beyond used LGA Clevo X170 pricing. Not even the brand-new BGA 4090 and BGA 13th gen books are worth it, as they will overheat and cook themselves to death with tripod heatsink garbage. As for the Dell Precision 7720, that's a really good idea. MXM with P5000/P5200, a metal build, a removable battery, a 2.5" bay and especially the lower cost make it compelling.. I'm halfway through your teardown video on your channel; the heatsink also has 4 screws on both the CPU and GPU. I will definitely let you know about it. Thanks a lot for the heads up. As for the AW17, the battery died, and the Dell spare I bought also died, so I have been running it exclusively off the power supply for 3+ years. As for ePSA, that doesn't seem to work: when I try it, it shows the custom vBIOS screen that says Svl7 + Johnksss and goes into beeping mode. I hate to say it, but it seems like something on the motherboard; there's no pattern at all. Plus, nothing is logged under System in Windows Event Viewer either.
Very good, bro. Yeah, this machine is built to last. I bought the G.Skill memory when I had the chance; it had the lowest DDR3L timings too, and I should have bought one more set back then. I was actually planning to get an i7 4930MX, as prices for that part have dropped nowadays, and I was eyeing that P5200 MXM pulled from an HP ZBook. Chichen's heatsink did not work for me though. That said, yeah, the issue is very weird and hard to diagnose. The first thing was a thermal paste job, then using ThrottleStop + NVInspector to downclock both; that did not work. Then the PSU, because I thought it was a faulty power delivery issue; I use a Tripp Lite power strip and changed that too, no dice. Then I thought the DC power jack might be giving up, but even that failed. Maybe a motherboard VRM somewhere has an issue and causes it under a combined load or something, but it's too hard to find out. Also, Khenglish has been MIA here on NBT since the NBR migration, unfortunately; his talent was impeccable, and he would have spotted something we might miss. T456 is another hardware expert, and sadly even he is MIA.
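Event Viewer logs nothing under System, so one low-tech way to pin down *when* the hard power-off happens is a heartbeat file. A small script appends a timestamp every few seconds. After the next crash, the last surviving line tells you roughly when power was lost, and you can line that up with what the machine was doing (idle, torrenting, video). Below is a minimal Python sketch. The file name, the 5 s interval and the helper names are hypothetical choices, not a Dell or Windows tool.

```python
import os
import time
from datetime import datetime

HEARTBEAT_FILE = "heartbeat.log"  # hypothetical path; keep it on a local drive
INTERVAL_S = 5  # arbitrary; smaller = finer timing but more disk writes


def write_heartbeat(path, when=None):
    """Append one timestamp line and force it to disk."""
    if when is None:
        when = datetime.now()
    with open(path, "a", encoding="utf-8") as f:
        f.write(when.isoformat(timespec="seconds") + "\n")
        f.flush()
        os.fsync(f.fileno())  # so the line survives a sudden power cut


def last_heartbeat(path):
    """Return the most recent readable timestamp, or None if there is none."""
    try:
        with open(path, encoding="utf-8") as f:
            lines = [line.strip() for line in f if line.strip()]
    except FileNotFoundError:
        return None
    for line in reversed(lines):
        try:
            return datetime.fromisoformat(line)
        except ValueError:
            continue  # a line torn by the power cut; try the one before it
    return None


def run(path=HEARTBEAT_FILE, interval_s=INTERVAL_S, max_beats=None):
    """Report the previous session's last beat, then keep writing new ones."""
    previous = last_heartbeat(path)
    if previous is not None:
        print(f"last heartbeat before this start: {previous}")
    beats = 0
    while max_beats is None or beats < max_beats:
        write_heartbeat(path)
        beats += 1
        time.sleep(interval_s)
```

Start `run()` from a script launched at logon (for example via Task Scheduler). The `os.fsync` call is the important design choice. Without it, the last few timestamps may still be sitting in the OS write cache when the power drops, and the log would point to the wrong time.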


Sad news. While I wait for the Cablemod order to arrive and think about getting a Seagate 4TB FireCuda 530, my Alienware 17 R1 Ranger seems to be going down. It never had any problems aside from the 980M, which has the MOSFET issue and grey/black screens. I don't play anything on this machine anymore, as I have the new one prepped. Anyway, the system just hard shuts off. Only Dota 2 with friends, which is a low load on the GPU and a higher load on the CPU. Initially I thought it was happening due to some GPU issue, so I re-pasted everything with the Noctua thermal paste I had, and ran heavy Unigine Heaven and Fire Strike; it shut off after some time, so I was sure it was the GPU. Then, using Nvidia Inspector (Maxwell, so clocks and power can be controlled), I capped the power to just 70%. Still failing. Then I lowered the core clock by another 500MHz. I ordered a new 240W PSU and tried the Dell 330W as well. The Delta one is discontinued, so I ordered the new Dell GaN 330W with a smaller footprint but a thinner cable (apparently the quality on these is not good, as the cable may break sooner), assuming it would work, but it was shipping late, so the brand-new 240W arrived first. And no dice, the machine flat out shut off. So it's not a PSU issue (I have the Dell 330W I purchased 7 years back; that thing is bulletproof and works solid). The sudden shutdowns continued while playing, so I reduced the Dota 2 resolution even further, to 720p. It still happened, so I used ThrottleStop to reduce the clocks to a pitiful 2.5GHz on all 4 cores, down from 3.5GHz. Remember, this has been happening for 15-20 days, so end of March into April. Later it stabilized, and now the GPU is power capped to just 30% lol with a -810MHz clock offset, so I thought the CPU VRMs or something else was at play. Eventually it happened: the shutdown occurred on a basic torrent workload, with no GPU load at all. Sometimes it happens while I'm watching a video or something; it's random now.
Then I ordered a DC power jack from Parts-People; I remember them from the NBR days. It came on Sunday and I was glad to see no shut-offs, but today, right after work, it happened again. So, to note:

- No overheating of any component (GPU, CPU).
- No heating around the DC jack area with both the new and old parts.
- PCH also stays normal, no overheating (it only heats up to 79C when the 980M goes to 78C; at 80C the GPU hard-locks due to the 980M issues, which does not happen at all).
- PSU is perfectly fine.
- TRON lighting is also fine, no issues.
- Sound card is fine.
- All SATA ports work, ODD-to-HDD also works.
- RJ45 Ethernet port works fine, I get super high speed data without issues.
- USB ports work (the one on the daughterboard is 2.0 only instead of 3.0, not a big deal).
- Display works, no artifacts, nothing.
- RAM is fine, I guess? G.Skill 2133MHz premium memory.
- Both fans work perfectly fine.
- KB works without issues, touchpad too.
- Visually and physically the laptop is very good, as I do not use the KB at all.
- Lid is like brand new, no scratches, the heavy Mg alloy.
- Exoskeleton is also as-is, no Dremel work, nothing.
- CPU die and GPU die do not have any cracks, scratches or chipping.
- Heatsinks do not have any issues either.

I tried running AIDA64 stress tests; it does not crash flat out or throttle massively either. One more thing: once it shuts off and I press the power button, it does not start. I have to wait at least 10-30 mins, otherwise it shuts off again before getting to Windows. Any ideas guys? @Papusan bro, anything from your side, as you still own this beauty? I don't even know where I can send this to be diagnosed. It's been 9 years with this machine; I got it in 2014. Maybe the power management ICs or something on the mobo are dying. Unfortunate, as I like this machine and have some old memories attached to it. I was planning to get the P5200 too, as it's a 1:1 fit with the Revision B board, which has the BIOS chip, and I even archived the BIOS needed for that 1080-class GPU.
Very sad news. Now I need a portable machine, a laptop, as I cannot rely on a desktop when I go mobile to other places or out of the country etc. I checked the laptop scene and, honest to god, what the actual F is going on with the whole industry? Every single pile of BGA junk is overpriced to the moon, and the HW they are giving is pathetic use-and-throw BS. Plus none of them have metal, and the ones that do are super-thin garbage like AW or Razer, which cost $3000-4000. And 99.9% of them use tripod garbage; VC (vapor chamber) cooling is rare, only Razer does it. I challenge anyone: not a single BGA junktop can last 5 years, let alone 9 years of heavy usage with torrents, gaming and whatnot. And sadly, not a single socketed machine exists. I should have bought a mint-condition Dell Precision M6800 on eBay when I had the chance... now I only see bro @electrosoft selling one machine on the marketplace (nvm, RIP, that is also sold; even more sad now... all hope lost). All the new BGA gamer jockeys cost $1500-2000, up to $4000. For mobile Alder Lake you have the 12700H, which is BGA and has only 6P cores, uber-castrated nonsense, while the desktop LGA1700 i7-12700K has 8P cores. I won't even waste time on the Nvidia BS GPUs inside them (a 3060 6GB mobile turd, while my 980M has 8GB, wtf). Laptops went to the sewer. Clevo abandoned everything, and the Area-51m is a joke, as the DGFF was going up in flames and their GaN charger cable won't even last like my Delta 330W PSU. Oh, while I'm at it, Razer is selling the same laptop spec as the one above, the Lenovo Legion 5 Gen 5/7 (apparently the best BGA junk of them all due to the metal body and lid, not the base, and somewhat OK pricing?), and the cost is literally $1000 extra for the same HW, approx. $2200. That MacBook rip-off design with a VC heatsink costs $1000, I guess. Then you have ASUS garbage, and all the others belong in the dumpster? Maybe even a landfill is too good, as they will ruin the land. Oh, and those MacBooks?
Remember "muh ARM superiority"? Once Alder Lake launched, that was destroyed, and Zen mobile made it even worse. Plus Apple charges a ton for all-welded garbage, and of course you get those M-series joker processors, which will be outdated the instant a new one arrives, unlike x86 processors. Sorry for the mega rant and such a long post; I'm just disappointed in the industry and humanity due to this use-and-throw garbage culture everywhere. Even mice have Li-ion batteries now... I use a G305, which has an AA slot, and I run Eneloop AAA rechargeables in it with an AA adapter... I digress. The saddest part is the way things are going for the ever-reliable Alienware 17, and the only ones who can understand are the NBR folks, now the NBT folks.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

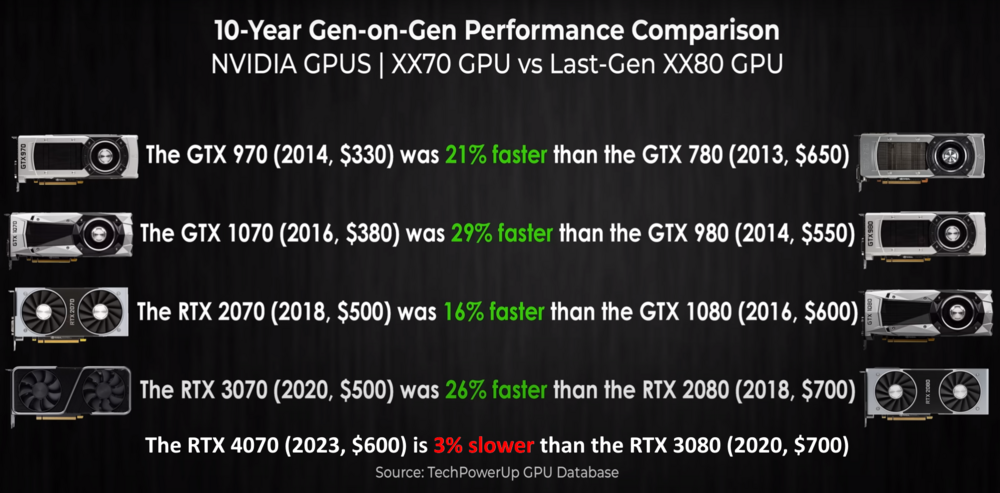

Regarding Nvidia shenanigans, I have already explained multiple times how garbage their business practices are. I showed so many graphs on how the RX 6800 is a better GPU than the RTX 3070, and it held up with time. The VRAM chokepoint kills the whole RT BS in one shot: you enable RT FX, it eats up your VRAM. Period. And the whole RT argument goes out of the window. Remember, these are mostly cross-gen titles, so anyone expecting their GPU to last the full next gen plus the gen refresh (PS5 Pro / Series X Pro) is royally screwed. Even the small commentary in GN Steve's RTX 4070 video paints the clear picture: the Radeon 6950 XT throws this garbage out of the window. Remember, a 6950 XT has 3090-3090 Ti class performance, which this 4070 cannot even dream about with its garbage memory bus, and it's priced just $100 less than the 3080 FE MSRP, AFTER 2 YEARS. In the EU there's no price reduction for newer tech; it's literally the SAME. How about adding the inflation factor? Then the margin becomes even thinner. Nvidia's rip-off of poor brand-loyal consumers continues, all for MUH fake frames, DLSS upscaling and shiny puddles. The flagship Radeon RDNA2 RX 6950 XT is now available for just $609.99. The PCB design is cheap: look at the PCB posted above and compare it to any Ampere 3080 or RDNA2 6900 and up... Nvidia is looting. Meanwhile the Radeon has the power delivery (no need for garbage dongles or expensive 90-degree CableMod adapters) plus More Power Tool OC out of the box vs locked-down garbage. Best of all is the VRAM; the 12GB limit is not going to hold up well. Also, flashback: the Nvidia GTX 1080 Ti launched at $699 6 years ago with 11GB VRAM. Then the abhorrent Turing came with the poorest value, where the 1080 Ti was competing with the 2080 lol. Then they had to fix the silicon mess and offer a better silicon grade with the 2080 SUPER rip-off. Still, the 2080 Ti had 11GB VRAM. FF to 2020: the entire Ampere lineup got decimated by lack of VRAM. RTX 3080 gimped at 10GB lol, less than the 1080 Ti. RTX 3080 Ti: 12GB.
The RTX 3060 got 12GB lmao, more than the 3080. Still, for the RT-RT people, enabling RT will add a choke point in incoming titles. I do not want to get into 4K vs 1440p etc. because it depends on which graphics settings you want; if you max out everything, even this card may choke at 1440p. Meanwhile, back to the present: Ada vs RDNA3. The RTX 4080 is again capped at 16GB VRAM. That is enough right now for 4K RT in RE4R, but in the future it won't be. The competition? The 7900 XTX with 24GB VRAM. Then you have the 4070 Ti, which gets lower performance at 4K than a 3090 Ti, again due to the VRAM cap. The competition? The 7900 XT with 20GB VRAM. And here the 4080 costs $1200+ while the Radeons are $1000 and $900. People will still buy this garbage RTX 4070 and get destroyed once the next-gen unoptimized AAA titles arrive. Finally, one little shocker if anyone does not know: AMD makes gaming revenue comparable to Nvidia's despite lower sales volume. Why? Nvidia's GPU dies are bigger while AMD uses smaller ones (look at RDNA2 and RDNA3), and TSMC wafers cost a lot, so Nvidia will destroy your wallet by offering planned-obsolescence tech. Plus AMD also sells a lot of console APUs. Also, there's no 7700 XT yet. Once the 4060 joke (yea, it will have 8GB VRAM lmao) launches... then AMD will launch their mid-range as RDNA2 slowly fades out and destroy both the 4070 and 4060, but people will buy Nvidia junk again lol, only to get ripped off, and then... the meltdown caused by the Hardware Unboxed video will repeat again.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

If you watch the Buildzoid video, it's not just the mobo; the LGA1700 socket is the main culprit. He mentions that DDR5-8000 is a mess on his side with multiple CPUs and multiple boards. The issue is socket pressure and LGA pin contact. So before getting rid of it, maybe try re-installing the contact frame and the processor, then see how it works. Intel did drop the ball on this socket. The only reason they got more sales is the pathetic Rocket Lake and years of stagnation on PCIe 3.0 vs AMD's 4.0 since 2019, plus the graphs they made off the back of the Rocket Lake disaster. Then add the 5800X3D, which on an old platform beats Intel's 12900K easily and competes with modern i7 and R7 processors with ease. Of course this is a gaming workload, but today most people buy a PC for gaming only. I do not know how many even care about 4K BD muxing or archiving games etc. like myself, or have a bunch of SATA HDDs connected to their main rig. All I see is Lian Li cases and others with 1 HDD at best and 2 NVMe drives for gaming, and nothing else but full RGB and "MUH GAMING"; this is where AMD's X3D gains market share. Sad but true, nobody cares about per-core performance that much. At best they look at Cinebench, and many do not even care about RPCS3 / Dolphin / Switch emulation performance either. Even Intel and AMD are doing this with a gaming focus, especially in their slide decks. Look at Intel: 8P cores MAX, you ain't getting any more than that. AMD's CCD design is 8 cores per CCD in an MCM layout, but at least their other 8 cores are proper x86 cores, not Intel's SKL-class E-cores. Intel's new LGA1800 platform is also fixed at 8P cores. AMD Zen 5 is also topping out at 8 cores per CCD; I doubt they are going to change that.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

Hope all are having a great weekend!! I have finally ordered the CableMod 12VHPWR adapter Variant B that Igor reviewed. It is an essential part for those like me who are looking for "longevity" and "stability" in a PC. It is well made: a solid 2 oz copper PCB with extra copper on top, passively cooled by a CNC aluminium top frame (apparently Igor worked with CableMod on improving the design). Yes, the 3090 Ti does not need it, because it does not utilize the sense pins; they are grounded. But I want the "tulip" design of the micro connectors, which avoids internal wear and tear of the socket. This tulip / spring design is part of the revised Intel ATX 3.0 standard update for 12VHPWR, along with the revised sense-pin length on the male plug. Remember when GN Steve was shaming Igor and published that it was user error, the kind of nonsense Apple spews? Anyway, what Igor said is 100% accurate: the spring latching is better than the dimple mechanism trash. Plus that awful Nvidia adapter abomination is unbearable to look at and work around, and then there's the annoying 35mm bending clearance with the new 12VHPWR, be it custom cables or not, so the adapter is a must imho. Along with that I got the Micro-Fit 16-pin to 3x 8-pin cable with the aluminium frame cover and a full custom ModFlex kit. It cost a TON, almost as much as my CPU (as I needed more SATA power too), really BS. But I hope it is worth the cash, as I was already frustrated with the stock PSU cables, which are stiff and ugly as hell.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

The 4th socket advantage too... which is big. That is not a small one. 12th to 13th gen: Intel added L2 cache, increased clocks AND power, and added E-cores to match Ryzen. And that's the end of the line. In gaming, 12th to 13th is really not much of a big deal, since you are still using only 8P cores on both. RPL Refresh will probably just be cache and DLVR with clock-rate optimization at best. I doubt they are going to put in more effort, as they are bleeding cash flat out and lost more than 2 billion USD on AXG alone, which led to Raja's exit to cut costs. Zen 4 -> X3D is only a gaming advantage, and not that big, BUT at just a fraction of the power and easy to cool even with basic coolers. Then you have Zen 5 on the same socket and same board, on a (rumored) TSMC 3nm node jump, which will be a brand-new design, as Zen 4 is just a higher-clocked, optimized Zen 3 variant. In contrast, Intel's 14th-gen Arrow / Meteor Lake requires an entirely new mobo ($700 at least if you want a high-quality one with 2 DIMMs). And then you have Zen 5 X3D. A Zen 5 X3D will demolish any 13th-gen processor without a doubt. One more thing: AMD Zen 4 processors have high base clocks, so Intel needs to ramp up much more than Zen 4 does. The 7950X base is 4.5GHz and the 13900K P-core base is 3GHz, which is 1500MHz higher on ALL 16 cores of the 7950X vs the 8P cores of the 13900K. This adds up with the high power draw and heat density of the Intel 7 based processors, vs the TSMC N5 temperature-based scaling of Ryzen Zen 4 with much better efficiency.

Oh boy, what a DISASTER. Samsung used to mean worry-free, top-quality memory and NAND. Lately they are really falling short. They also nuked the Note line and the SD card slot. Their financials recently have not been good either; Samsung LSI / foundry is also a massive loss. Samsung 8nm, aka 10nm++, was a bust; only Nvidia used it, because Jensen knew about the mining boom and, with his GDDR6X and cheap wafer cost, he would mint money. Anyway, about the NAND division: as bro @Papusan said, their MLC was TOP NOTCH, with class-leading endurance. The 860 PRO SATA 4TB was rated for 4800TBW, and today Samsung does not have a single drive with endurance like that. Of course they don't even make them anymore, but they have the 8TB 870 QVO (QLC) trash. The 1TB 970 PRO was too old for Samsung, so they killed their last MLC-based NVMe, and now they give the "PRO" branding to TLC with 1200TBW on the 980 PRO 2TB. Pathetic really, because Micron's denser 176L NAND has top-notch endurance: the FireCuda 4TB has 5000+TBW, that's MLC-class endurance in a TLC package. Why can't Samsung do it? Because their marketing and PR are huge, and anyone will buy based on trust. Western culture is all about trust (which is slowly fading), and Samsung is abusing it for profit and greed. Look at the 990 PRO: it also has firmware drive-life reporting issues. A brand-new drive... The 980 PRO I have was manufactured in 10/2021, which means it is affected (a quick firmware search shows when the firmware was released, more below). I have not used it yet because I'm waiting for some SATA cables for my machine (Chinese Lunar New Year delay). Imagine buying this junk for a massive price and then it dies randomly after a few months. And the 0E errors (Media and Data Integrity Errors) cannot be fixed at all; the whole drive is dead. And removing the SSD from the mobo is horrific, as you have to remove the GPU, and sometimes the CPU cooler if it's in a DIMM.2 slot with an air cooler (in my case). Lucky I did not install anything else yet, or I would have to remove all the components.
Anyway, now I'm buying a tool-free NVMe adapter like this to do the firmware upgrade on another machine, instead of writing a single bit of data to that SSD, so that none of these errors show up and the broken firmware cannot prematurely damage the SSD. Hope that works. Firmware 4B2QGXA7 is from 11/2021 (quick Google search), so any drive with a 2021 manufacturing date will probably have the earlier SSD-breaking firmware. The newer fixed firmware is from 2022. Puget Systems also notes that the primary OS drive cannot be upgraded to the new firmware, which is another potential disaster when using Samsung SSDs. Imagine your primary OS NVMe is in the mobo slot, unlike DIMM.2 + AIO (easy swap); you get shafted big time. Horrendous QC.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

@Papusan, are you ready for the latest tech? It must be the best and greatest ever!! "Samsung 990 Pro SSDs Report Rapid Health Degradation" and "Samsung 990 PRO Flagship SSD Has an Endurance Problem, Users Notice Rapid Drive-Health Drops". Samsung really ruined their memory division. They axed MLC and gave us TLC, and Z-NAND for enterprise. Endurance took a nosedive: the 860 Pro 4TB has 4800TBW, the 980 Pro 2TB has 1200TBW, an utter disgrace. They wanted to milk it, as PCIe 4.0 SSDs were priced through the roof. I bought 2TB x2 for $500 a year back (yea, I was stupid to buy them early when I couldn't even build the PC), and on 2022 Black Friday they dropped the price of the same bundle by $200, a.k.a. a $300 bundle. Money down the drain. Anyway, the milking must continue, so they made the new 990 Pro 2TB ($290, so 2x of them means more cash than the 980 Pro 2TB bundle during Black Friday) with some extra useless BS benchmarks (remember Optane? The NAND killer, it destroys them in sustained performance with no DRAM drama). And absolutely no endurance upgrade over the 980 Pro 2TB. There's a 4TB option with 2400TBW, but that is not released yet. Still, I'd rather buy the Micron-NAND Seagate FireCuda 4TB with much better speed and 2x the endurance. But since newer = better, the 990 Pro's drive health is dropping by 30% in a few weeks... imagine spending that much money on a JUNK product with buggy trash firmware, on a damn SSD, and from Samsung too. Imagine you bought brand-new "Samsung" memory and they deny your warranty RMA. It could be a firmware reporting bug, but if it's a real NAND write-failure bug, then RIP. Still, this level of incompetence for a brand-new product.
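To put the endurance numbers quoted above on the same footing, here is a minimal Python sketch that normalizes rated TBW by capacity and converts it to drive writes per day (DWPD). Assumptions: a 5-year warranty window for every drive, and the FireCuda is entered at the "5000+" lower bound from this thread rather than an exact spec figure.

```python
# Rated endurance (TBW) and capacity (TB) as quoted in the posts above.
# Assumption: every drive is judged over a 5-year warranty window.
# The FireCuda 4TB is entered at the "5000+" lower bound, not an exact spec.
WARRANTY_YEARS = 5

drives = {
    "860 PRO 4TB (MLC, SATA)": (4800, 4),
    "980 PRO 2TB (TLC)": (1200, 2),
    "990 PRO 4TB (TLC)": (2400, 4),
    "FireCuda 4TB (TLC, 5000+ quoted)": (5000, 4),
}


def endurance_stats(tbw, capacity_tb, years=WARRANTY_YEARS):
    """Return (TBW per TB of capacity, full drive writes per day over the warranty)."""
    per_tb = tbw / capacity_tb
    dwpd = tbw / (capacity_tb * 365 * years)
    return per_tb, dwpd


for name, (tbw, cap) in drives.items():
    per_tb, dwpd = endurance_stats(tbw, cap)
    print(f"{name}: {per_tb:.0f} TBW per TB, {dwpd:.2f} DWPD")
```

Normalized this way, the 860 PRO is rated for twice the writes per TB of the 980 PRO and 990 PRO (1200 vs 600 TBW per TB), which is the gap the rant is about.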

*Official Benchmark Thread* - Post it here or it didn't happen :D

Ashtrix replied to Mr. Fox's topic in Desktop Hardware

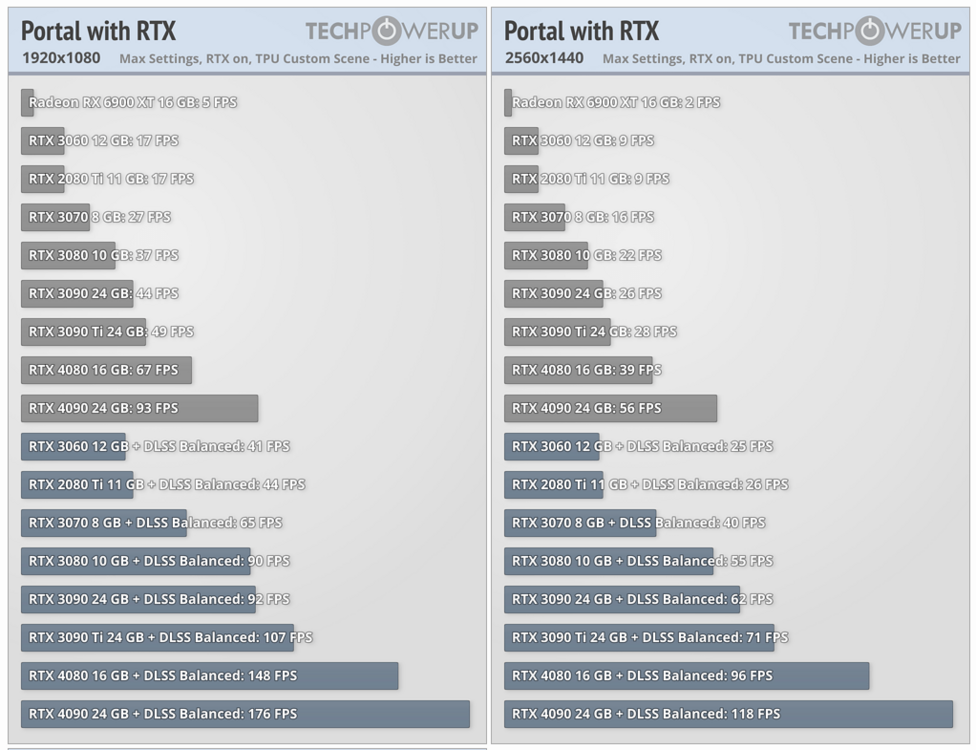

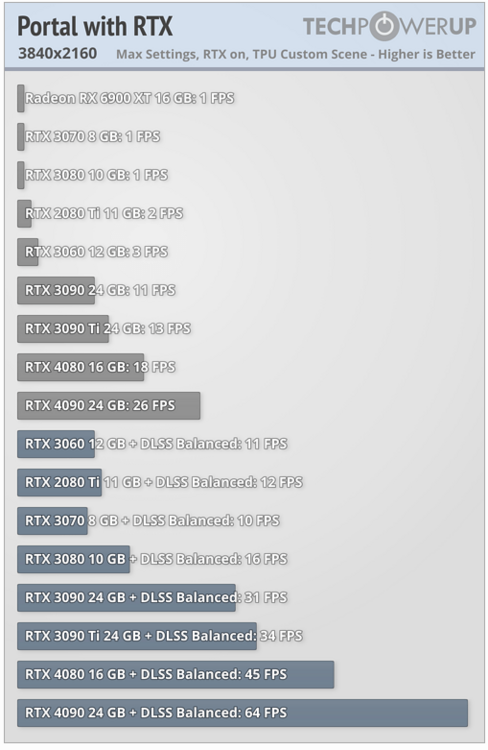

To me, RT is a waste of silicon. 30-40% of the Nvidia die is wasted on that hybrid raster / ray-tracing hardware, esp. for DLSS. I like the technology of immersive global RT path tracing, but I do not like the upscaler add-on that Nvidia pushes so you can use RT, because of the performance impact, paired with poor optimization by modern developers. It is not mature enough to be mandatory or to be regarded as the highest value. I have mentioned RT-equipped games many times. Of all the RT implementations (RT ambient occlusion, RT shadows, RT global illumination, RT reflections), RTGI has the highest impact (there is even a ReShade mod for it that improves visual quality in games, more below), and RTAO is second in visual impact. We have to look at RT across all these features, not just RTX ON = good, OFF = bad, undesirable or inferior. The artistic impact of baked lighting is much, much higher than Nvidia says about RT. And of all the AAA titles released since the debut of RTX Turing, the only one that makes a real visual impact is Metro Exodus Enhanced Edition; you can watch a few trailers and comparisons. It does have an impact because all the light sources in the game are ray traced, which is why the Enhanced Edition won't even run on pre-RT GPUs. Now the question arises: is it even worth it? IMHO, nope. EE is a fine addition, released free to all owners, even on GOG, but in some cases it makes the game look unnatural, with light where it should not be, making it brighter. All in all, not a mandatory experience. You can watch it below. Metro EE has RTGI and RTAO, and RT reflections as well. In another video you can clearly see how much brighter it is, and this is a flagship title; yea, it does look pretty in some cases and outdoors though.
The only other big-budget games that use RTGI are Cyberpunk (more on it below) and Witcher 3 Next Gen, and both run awfully. TW3 Next Gen simply threw all the optimization into the dumpster: GPUs that got triple-digit FPS went down to double digits, below 60. And the RT impact is negligible, a waste of time and GPU power; I mentioned it when the patch was coming. As for Metro again, watch at the 38-second mark and see how bright the room is in EE vs the original. There are a lot more examples like that; it kills Metro's atmospheric immersion, going from darker to brighter. Now moving to RT reflections, which 99% of modern games have: it's like watching puddles doing better Screen Space Reflections (SSR). You won't even notice it in the game unless you stop and look at those reflections, except maybe in Spider-Man Remastered, where all the windows are glass and use RT reflections, but that is a single application. Is the effect worth the FPS drop? Nope. Esp. if you do not have a top-end RT GPU, RTX will kill your frame rate, and at 4K you are forced to run upscalers; just go to TPU and look at any RT benchmarks for cards below the 3080 class. So is it all worth running a lower render resolution? Imagine paying $1000 and then running an upscaled image on the high-res display you bought, with the bonus image-stability baggage of running upscalers. And the major impact is TAA; it's far more important than RT. I mentioned it here if you are interested. Enabling TAA = blurfest, because TAA blends each frame with samples accumulated from previous frames, which softens texture detail, unlike MSAA, which is dead because of changes in rendering techniques (deferred rendering) and because it hits GPUs far harder than TAA. DLSS is built on temporal accumulation like TAA, and people say it is better because TAA = blur while DLSS = TAA + sharpening, so it will look better, BUT the shimmering, artifacting, noise, glitching, ghosting and low-resolution upscaling are not better. You are paying $1000+ and running an upscaled image; that is a loss.
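The blur and ghosting complaint about TAA comes from temporal accumulation: each output pixel is a blend of the new frame and the accumulated history. Below is a minimal 1-D Python sketch of that blend. Assumptions: `render_frame` is a hypothetical stand-in renderer, and there is no jitter, motion-vector reprojection or history clamping. Real TAA uses all three specifically to limit the smearing this toy exaggerates.

```python
# Minimal 1-D sketch of temporal accumulation, the core idea behind TAA.
# Assumptions: no sub-pixel jitter, no motion-vector reprojection and no
# history clamping; real TAA adds these to reduce the smearing shown here.


def render_frame(width, object_pos):
    """Hypothetical renderer: one bright pixel (1.0) on a black row."""
    return [1.0 if x == object_pos else 0.0 for x in range(width)]


def accumulate(history, current, alpha=0.1):
    """Exponential blend per pixel: alpha * current + (1 - alpha) * history."""
    return [alpha * c + (1 - alpha) * h for c, h in zip(current, history)]


width = 8
history = render_frame(width, 0)
for pos in range(1, 5):  # the bright object moves one pixel per frame
    history = accumulate(history, render_frame(width, pos))

print([round(v, 2) for v in history])  # → [0.66, 0.07, 0.08, 0.09, 0.1, 0.0, 0.0, 0.0]
```

After four frames the object's real position (index 4) holds only 0.1 of its brightness, while a smeared trail stays behind it. Production TAA fights this with reprojection and clamping, and the remaining softness is what the post calls the blurfest.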
The reason I mention DLSS is that if you enable RT at 4K the perf hit will be massive, and at 1440p too, depending on the game and GPU used. So DLSS is de facto for most consumers, especially where the majority of the market lives, the vast 1080p/1440p userbase, and that is where DLSS is worse, because upscalers scale worse from low resolutions. You can check the TPU God of War FSR vs DLSS analysis, the TPU Ghostwire: Tokyo FSR + DLSS + native analysis (look at the lit billboard adverts) or the TPU Spider-Man Remastered DLSS vs FSR analysis (LOD), and see how much clearer the native 4K picture is. PC got a regressive technology like console checkerboard rendering. Personally, I will never ever run DLSS. If I want RT, I will run it at 1080p, as I have a 1080p panel and a potent GPU, and get better FPS. I'd rather run DSR, which uses the GPU power to render at a higher resolution and scale it down to fit the lower-res monitor, and if I get a 1440p OLED, it's still nope to upscalers. That is a superior approach to the DLSS + RT regression. The question may arise: what if you want to play modern games? Well, I dislike modern games for a multitude of reasons like wokeness, poor optimization, Sony PlayStation cutscene simulators etc.; that's a totally different ball game... Control is a game with RT and TAA, but you can disable TAA with a hex edit on the exe (it's on PCGW) and get better fidelity without TAA. RE2, RE3 and DMC5 do not use TAA. Capcom updated RE2, RE3 and RE7 with DX12 and RT, which nuked the ambient occlusion and made them worse in every aspect; there's a beta option in Steam to roll back that update, as these games look solid without the RT / DX12 API update. If I buy a 4K BD, do I want a 2K upscale? Nope, nobody does, which is why some BDs like Pirates of the Caribbean got major negative reviews on 4K disc due to piss-poor upscaling and loss of detail vs the 1080p BD.
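Here is the DSR idea above (render high, scale down to the panel) as a minimal Python sketch. Assumptions: an exact 2x-per-axis render scale and a plain 2x2 box filter. Nvidia's DSR uses its own adjustable filter (the "DSR - Smoothness" setting), so this shows the concept only, not DSR's actual implementation.

```python
# Minimal sketch of supersampling then downscaling, the idea behind DSR.
# Assumptions: an exact 2x-per-axis render scale and a plain 2x2 box filter;
# Nvidia's DSR uses its own adjustable smoothing filter instead.


def downsample_2x(image):
    """Average each 2x2 block of a high-res image into one display pixel."""
    h, w = len(image), len(image[0])
    if h % 2 or w % 2:
        raise ValueError("render resolution must be exactly 2x the display")
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4
            for x in range(0, w, 2)
        ]
        for y in range(0, h, 2)
    ]


# A 4x4 "render" of a diagonal edge (1.0 = lit, 0.0 = dark) ...
hi_res = [
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
]

# ... becomes a 2x2 display image whose edge pixels carry partial coverage.
print(downsample_2x(hi_res))  # → [[0.75, 0.0], [1.0, 0.75]]
```

The edge pixels get fractional values from samples that were actually rendered, which is why downsampling anti-aliases without guessing detail the way an upscaler must.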
A bit of a tangent, but I want to emphasize that running some fancy RT and tacking on an upscaler with a sharpening pass butchers the fidelity. Does a game exist that uses all that fancy tech and beats Red Dead Redemption 2 (no RT at all) in visual fidelity? Or any game with developer-default RT that beats GTA V with the NaturalVision Evolved mod (which has RT via ReShade) in visual fidelity? Both of these are older games, and RDR2 without RT decimates all titles. And Crysis 3 (2013) without any TAA beats any modern game with RT, thanks to its fantastic art direction and Crytek's old vision of being way ahead in GPU rasterization; those games were made solely for PC. Another example is Assassin's Creed Unity, which without any RT beats a ton of games in visual fidelity. It runs at 4K60 only on the latest consoles, and PC gets 120FPS at 4K. Plus, one can add Pascal Gilcher's RTGI ReShade preset to many games (it works on AMD cards too), or those who want a simple solution can install the bloatware GeForce Experience and use the same RTGI filter on all old games without the modern RT performance drop. PS: I disregard Cyberpunk totally, as I feel it is not a game at all, only fake-marketed and hyped to the moon, creating a sheep-like consumerist reaction from the masses, plus the Edgerunners anime caused its spike. Even though it has all those RT FX and more, the game sucks big time in optimization, runs awfully on modern hardware and forces you to run those fake frame generators. The company CDPR is dead; all their staff left. They are killing the RED Engine for Unreal Engine 5, and they lost their top brass. The Witcher 3 RT edition is a junkfest nightmare with horrendous optimization, as I mentioned earlier.
I did not include Portal RTX, because that garbage runs like trash even on 3090 Ti and 4090 cards. Awful: you need the DLSS 3 frame generator to run at 60FPS at 4K on a 4090. Plus, that's a decade-plus-old title, sponsored by Nvidia to run awfully on AMD Radeon just because of a useless bunch of proprietary RTX junk.