All Activity

- Past hour

-

Eluktronics Hydroc G2 / Uniwill IDY 2025

win32asmguy replied to win32asmguy's topic in Uniwill (TongFang)

Out of curiosity, what are you running for tREFI at 6400MT to get 84ns in Aida64? I currently have my 2x16GB A-die kit at 6400 38-40-40-80, tREFI 24k, NGU & D2D 30x, Ring 38x, which results in around 88ns in Aida64. I also have a 2x24GB M-die kit which can do 7200 at similar timings, but I have not tested its long-term stability in combined loads, especially given that I do not use the watercooling add-on, so memory temps have been observed up to 87C. It can do a bit better in Aida64, around 85ns. I did get the final Premamod BIOS recently, so its tuning capabilities are better than the stock BIOS, along with updated microcode and other throttling fixes.
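When comparing tREFI across memory speeds, it can help to convert the cycle count into time. The following is only a rough sketch: it assumes the "24k" quoted above is a count of DRAM clock cycles and that the DRAM clock runs at half the MT/s transfer rate. Neither assumption is confirmed in the post, and the function name is made up for illustration.

```python
def trefi_to_microseconds(trefi_cycles: int, transfer_rate_mts: float) -> float:
    """Convert a tREFI cycle count to microseconds.

    Assumption: tREFI is counted in DRAM clock cycles, and the DRAM clock
    is half the transfer rate (DDR moves two transfers per clock).
    """
    clock_mhz = transfer_rate_mts / 2.0   # e.g. 6400 MT/s -> 3200 MHz
    return trefi_cycles / clock_mhz       # cycles / MHz = microseconds


# Values quoted in the post: tREFI 24k at 6400 MT/s.
print(trefi_to_microseconds(24_000, 6400))  # 7.5
```

Under the same assumptions, an identical cycle count at 7200 MT/s would correspond to a shorter refresh interval, so settings on the two kits are easier to compare once expressed in time.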

Eluktronics Hydroc G2 / Uniwill IDY 2025

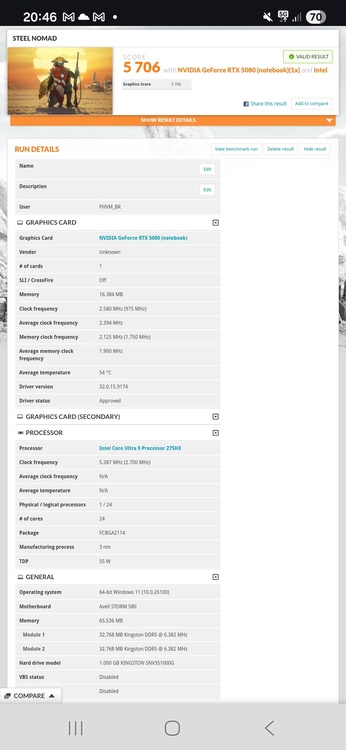

PHVM_BR replied to win32asmguy's topic in Uniwill (TongFang)

I don't have my laptop with me right now. I can post tomorrow or the day after, but there's no secret. Undervolt the P-core, undervolt the E-core, undervolt and/or overclock the cache, ICCMax = Unlimited on both the iGPU and the CPU.

- Yesterday

-

Mie joined the community

-

Nice score. I know I've asked a lot, but I'm a little lost with XTU. Can you share screenshots of the XTU advanced tuning tab showing what you changed? I have a BS2 Pro cooling pad and my CPU throttles at 95°C before finishing the bench.

-

Eluktronics Hydroc G2 / Uniwill IDY 2025

PHVM_BR replied to win32asmguy's topic in Uniwill (TongFang)

You must disable virtualization in the BIOS. Core isolation is disabled in Windows. The XTU version compatible with Core Ultra (Arrow Lake) is version 10xxx. The version you are trying to use, 7xxx, is compatible with older generation CPUs.

Core isolation is in Windows, not the BIOS: it's the Memory integrity setting.

-

Eluktronics Hydroc G2 / Uniwill IDY 2025

PHVM_BR replied to win32asmguy's topic in Uniwill (TongFang)

I use adaptive voltage. XTU is heavier software than Throttlestop, so I recommend applying all settings and then closing the program. Your settings will remain until the system is turned off or restarted. If Throttlestop works better for you, use it! I've always used and preferred Throttlestop, but I switched to XTU with this system because of the extra adjustment possibilities. I don't have a liquid cooling system and I use an IETS GT600. I've tested Steel Nomad a little and haven't yet fine-tuned the system for this benchmark: https://www.3dmark.com/sn/12124446

Eluktronics Hydroc G2 / Uniwill IDY 2025

MiSJAH replied to win32asmguy's topic in Uniwill (TongFang)

Yup, got CPU ADV Perf Menu active.

Eluktronics Hydroc G2 / Uniwill IDY 2025

MiSJAH replied to win32asmguy's topic in Uniwill (TongFang)

I have Intel VMX Virtualization disabled, can't find Core Isolation in the BIOS. Trying to install XTU 7.14.2.69

You need to select the CPU advanced performance mode in the Control Center.

-

Eluktronics Hydroc G2 / Uniwill IDY 2025

PHVM_BR replied to win32asmguy's topic in Uniwill (TongFang)

Have you disabled Windows Core Isolation and virtualization features in the BIOS? Which version of XTU are you trying to use?

Eluktronics Hydroc G2 / Uniwill IDY 2025

MiSJAH replied to win32asmguy's topic in Uniwill (TongFang)

I'm trying to install XTU but it's saying unsupported platform on install. I have secure boot disabled and undervolting enabled in the BIOS. Have I missed something? Thanks.

Thanks for all these clarifications. Another question, please: in XTU, for the P-cores, do you use adaptive or static voltage mode? I got around 41k in Cinebench 23 using a mix of the BIOS high-performance mode and some undervolting with TS. But now that I've reverted to XTU only, the max I can get is 40k. Also, do you use watercooling or a cooling pad? Can you test the Steel Nomad bench? I'm getting around 5400-5500.

-

Eluktronics Hydroc G2 / Uniwill IDY 2025

PHVM_BR replied to win32asmguy's topic in Uniwill (TongFang)

Yes, I use Afterburner. In the advanced BIOS tab, which is unlocked by checking the option in the manual settings of the Control Center, you adjust the memory and unlock undervolt protection. If you've never adjusted memory before, I recommend doing some research first. Generally, if the adjustment doesn't POST, the system itself reverts the changes and restarts Windows after a few minutes of trying to train the memory. If the system doesn't restart automatically, simply restore the factory settings: With the charger disconnected, press and hold Ctrl + F11 and click the power button for a few seconds. When the keyboard starts flashing, you can release Ctrl and F11. Wait a few minutes and the system will restart with the default settings.

Eluktronics Hydroc G2 / Uniwill IDY 2025

MiSJAH replied to win32asmguy's topic in Uniwill (TongFang)

Not first time. Left it on while I went out for food and returned to this. About to attempt a Win 11 reinstall.

What is this? First-time boot? It should not take all that time. Did you connect to the internet for Windows updates?

-

Eluktronics Hydroc G2 / Uniwill IDY 2025

MiSJAH replied to win32asmguy's topic in Uniwill (TongFang)

Got my Neo 16 (E25) today. It's stuck in a: It's been like that for 35 minutes. Any suggestions?

I think the Samsung isn't too glossy, way better than the 2021 LG gram IPS displays, which were like a mirror. The LG OLED on the new gram has quite an effective matte coating. But yes, depending on your eyes, the refresh rate is or isn't problematic. I've actually never worked for a longer time with an OLED laptop display, so I should try that out before I ever upgrade. I remember on CRT I was fine as long as the refresh rate was 120Hz. 50/60Hz really strained my eyes. But I worked quite long with a 21" CRT and only upgraded once 24" IPS got decently priced. TN was really horrible but my first notebook was TNT...

This Samsung is too heavy too. I linked it here because many people still believe OLEDs use more power, but it's crazy how little they now need at high brightness: it's like 1/3 of IPS at that brightness. At very low brightness IPS still wins, but that's not really relevant as we are talking about maybe a 0.5-1W difference max in favour of the IPS. Maybe 1-2 generations more and OLED will win at any brightness.

I think LG uses 480Hz right now. Some review mentioned it but I cannot remember for sure. That's what last year's model used in any case. It's a bit better vs 240Hz.

- 39 replies

-

Hi, my Alienware fans don't work on their own, so I had to set up HWINFO64. I just want to make sure the GTX 1070 gets cooled properly: the GPU never gets hot right after turning on the laptop, right? (When setting up HWINFO64, I think it only starts the fans and cools the GPU once Windows boots, not when pressing the power button of the laptop.) Thx

Tagged with: alienware 18, gtx1070mxm (and 1 more)

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

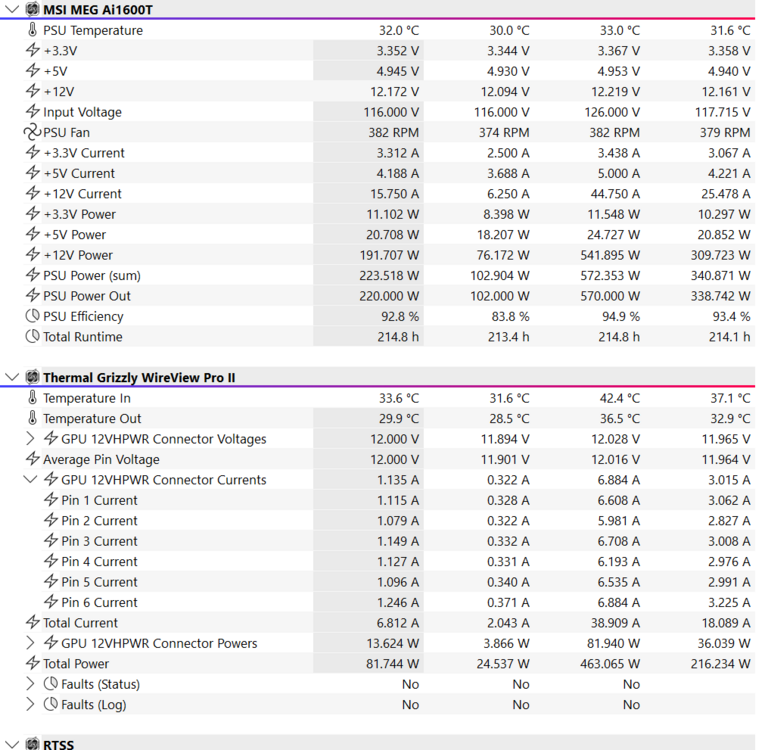

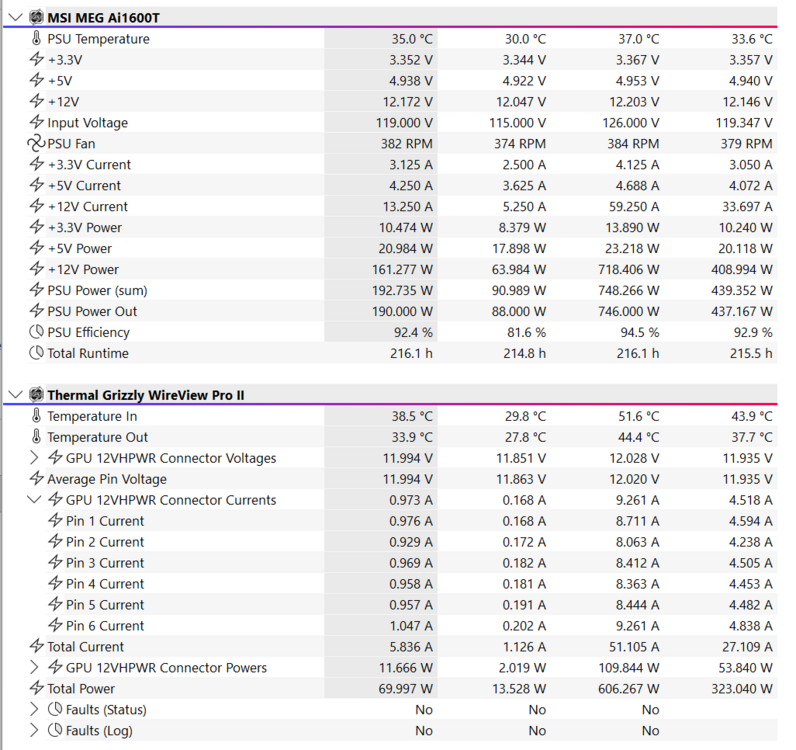

Loving the updated HWInfo for WVP2 along with the MSI MEG 1600T data..... It still boggles my mind that MSI stepped me up to the AI1600T as a replacement. I wonder if they took a gander at my "registered product" history and went "whoa!" including the Vanguard 5090 🤣 But with all things taken into consideration, and the effective death of EVGA, MSI is my favorite and has been for quite a while.

Again, new cable and Pin 2 runs a smidge lower and Pin 6 a smidge higher. This is across three cables (Corsair 600w native, MSI Tentacle, MSI single run AI1600T) and two PSUs (Corsair SL1000 SFX, MEG AI1600T). It only causes a bit of separation closer to 600w. I'm going to go ahead and wire the auto shut off interrupt cable and heat sensors because....well....why not?

90 min of FO76: vs 90 min of WoW Midnight (Harandar Region):

--------------------------------------------------------------------

Man, your chip is brimming on golden status running 2200/6400 like my 9800X3D. I tried everything I could to get it stable at 2200/6600 but that was a no go. I was asking for a unicorn though..... I never thought to run tRCDWR so low.... I'll have to give it a whirl.

Ahh, I see, good then. I don't want to flash the XMG BIOS and end up with mismatched Fn keys. I will try XTU for CPU OC. For the GPU you use MSI Afterburner, I guess? For the RAM I have Kingston Fury Impact 2x16 5600MHz single rank. I've never messed with RAM timings, so do I just have to change those timings and the voltage in the BIOS? If it doesn't POST, I need to clear CMOS, don't I?

-


*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

So, I think I had mentioned that I had an old G.SKILL 8000 CL40 XMP 48GB kit that I could never get to run stable on any Ryzen platform I have tried to use it on. It ran fine at 8400 C38 on Z790 and I just saved it in case I might need it. Well, I got it stable on the Strix. Not at 8000, but 6400 with 1:1 (Gear 1) mode and it runs pretty nice. I might be able to tighten things up a bit more, but with RAM prices as stupid as they are right now, we have to make do with what we have: not only can we not afford to waste money on better RAM, it is difficult even to find any to buy. The only thing you can buy right now is overpriced, crappy bin quality.

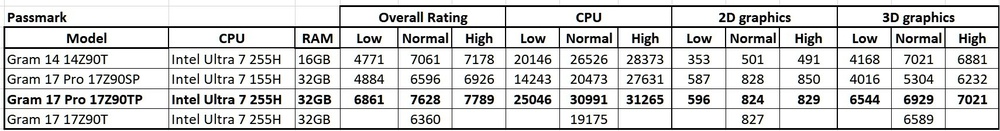

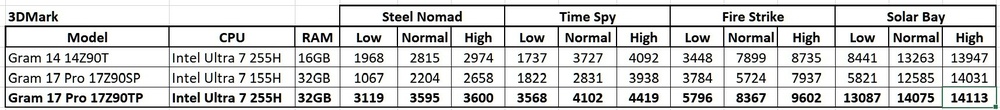

The same pattern of the 2025 gram Pro on low fan being faster than the 2024 gram Pro on normal fan is also seen on the Passmark benchmark test.

Finally, I ran some 3D Mark tests although I don’t normally have a need for 3D graphics. The results follow the trend of the 2025 gram Pro on the low fan setting being faster than the 2024 gram Pro using the normal fan setting.

I carried out some battery testing using my normal method of playing an MP4 movie. At 60% display brightness the average power drain over 8 hours was 5.3W. Lowering the brightness to 35% reduced the power drain to 4.4W. Illuminating that big display is what uses the power. I have little doubt that the computer is capable of spending a working day of normal office usage without needing a power socket. It can’t match, however, the 14” gram, where the power drain for the same test is less than 3W, but higher CPU usage will have a bigger proportional effect on the latter machine.

While talking of power, LG ships the UK models of its notebooks with a power brick which has a separate mains cable, which adds a lot to the travel weight. The computer will, however, work off almost any USB-C PSU which has a 20V output. It will complain if the PSU rating is less than 65W but this has no adverse effect on performance. If needed, some power will be taken from the battery. The photo below compares the LG 65W PSU with an Anker 65W PSU plus 3m USB-C cable.

In conclusion, it appears that my objective of having a notebook similar to my 17” 2024 gram Pro which can match its performance but with less fan noise is satisfied by the 2025 version. Perhaps the Panther Lake 2026 gram Pro will be even better but it might also be more expensive.
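The battery figures above lend themselves to a quick back-of-envelope check. This is a minimal sketch using the two measured drains from the review. The battery capacity is a placeholder chosen purely for illustration (the review does not state it), and the helper names are made up.

```python
def energy_used_wh(avg_power_w: float, hours: float) -> float:
    """Energy drawn from the battery at a constant average power."""
    return avg_power_w * hours


def runtime_hours(capacity_wh: float, avg_power_w: float) -> float:
    """Estimated runtime if the whole capacity is usable at that drain."""
    return capacity_wh / avg_power_w


# Measured in the review: 5.3 W at 60% brightness, 4.4 W at 35%, over 8 hours.
for brightness_pct, power_w in ((60, 5.3), (35, 4.4)):
    used = energy_used_wh(power_w, 8)
    print(f"{brightness_pct}% brightness: {used:.1f} Wh used in 8 h")

# Relative saving from lowering the brightness.
print(f"saving: {(5.3 - 4.4) / 5.3:.0%}")

# HYPOTHETICAL capacity, not taken from the review; substitute the real value.
HYPOTHETICAL_CAPACITY_WH = 80.0
print(f"{runtime_hours(HYPOTHETICAL_CAPACITY_WH, 5.3):.1f} h at 5.3 W")
```

Video playback is a light load, so an estimate like this is an upper bound for heavier office work rather than a prediction.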

- 3 replies

Tagged with: lg gram pro 17, review (and 1 more)

-

Dell Precision M6700 RTX Ampère and Ada Lovelace issues

SuperMG replied to SuperMG's topic in Custom Builds

I tested with the Cicichen heatsink; its performance is poor, way poorer than the M6800 with bad heatpipes... A waste of 100 USD, to be honest. Three full-copper heatpipes that can't handle 150W... Heaven benchmark temperatures are good, but... on the M6800 I get 245FPS and on the M6700 I get 170FPS... Furmark seems similar, mostly limited by thermal throttling. Userbenchmark doesn't power throttle: I get 86% on the M6800 and 66% on the M6700. Both laptops can do 150W max on the MXM slot.

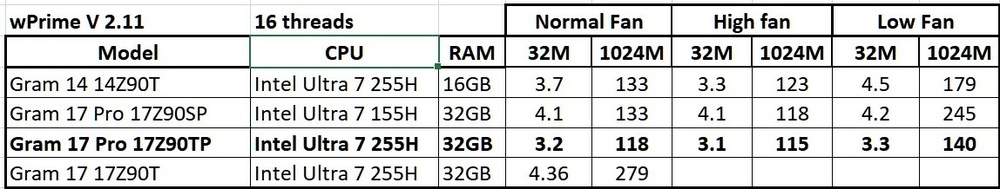

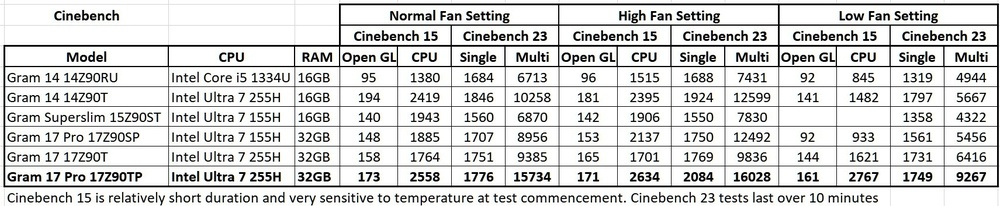

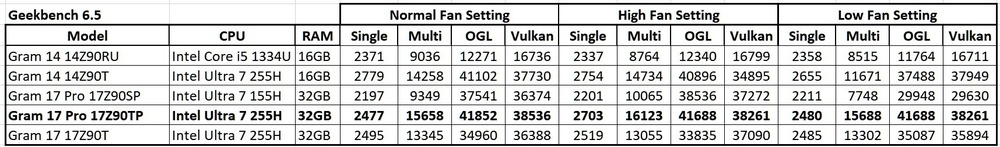

For performance testing I started with the old and simple wPrime for which I have results going back many years and which measures the basic CPU performance. The 32M tests, which only last a few seconds on modern hardware, don’t vary much. Two outliers on the 1024M test are the 17Z90T with its poor design of the cooling vents and the 2024 gram Pro on the low fan (and low power) setting. The 2025 gram Pro on low fan isn’t far behind the 14” gram or the 2024 gram Pro running at the normal fan setting. LG seem to have selected a good power value for this low fan setting while there’s marginal performance gain in return for the much greater noise of the high fan setting.

The next benchmark to be examined is Cinebench. The older Cinebench 15 tests are completed relatively quickly on newer computers but are representative of short duration tasks. Cinebench 23 keeps running for at least 10 minutes and is a better indication of thermal performance limits during longer duration tasks. There’s not much difference in the Cinebench 15 results for the Arrow Lake 14” gram and 17” gram Pro while both are well ahead of the non-Pro gram 17. There’s also not much difference in the single core performance of any of the notebooks in my summary table. In fact, the 14” gram leads the field for this test. I suspect that this is due to the lower power limit keeping the CPU running faster for more time before reaching a thermal limit which triggers a slowdown. In Cinebench 23 the same pattern is noticeable for the single core test but the 17Z90TP is well ahead of the others for the multi-core test. On the low fan setting it’s faster than the 2024 gram Pro at the normal fan setting.

The next set of tests is Geekbench 6 where the single core test results are all close together with the 14” Arrow Lake gram being slightly faster than the 17” gram Pro for the same cooling option. The 17” Arrow Lake gram Pro pulls ahead on the multi core tests and also on the graphics tests, perhaps helped by more and faster RAM. This notebook, on the low fan setting, is faster than its predecessor at the normal fan setting. More speed with less noise!

- 3 replies

Tagged with: lg gram pro 17, review (and 1 more)

-

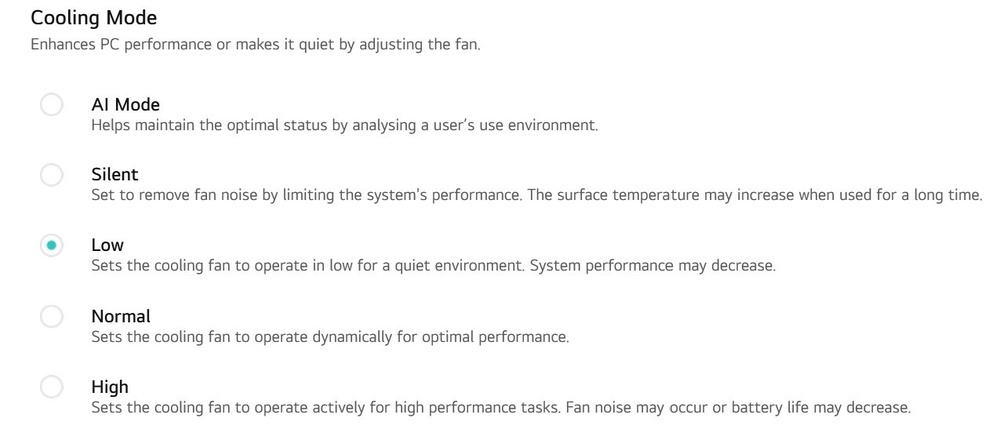

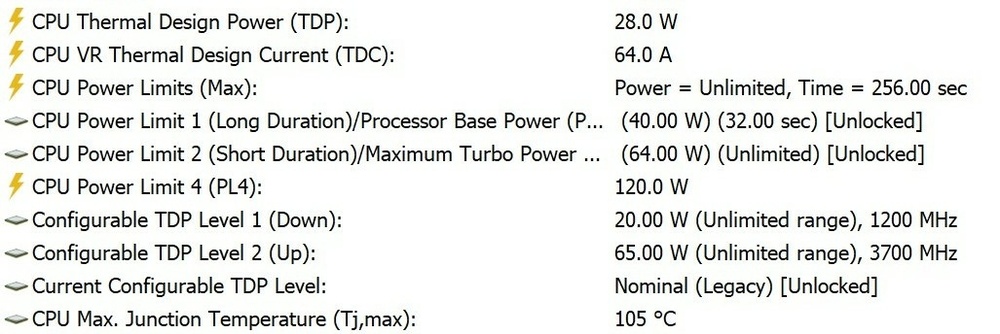

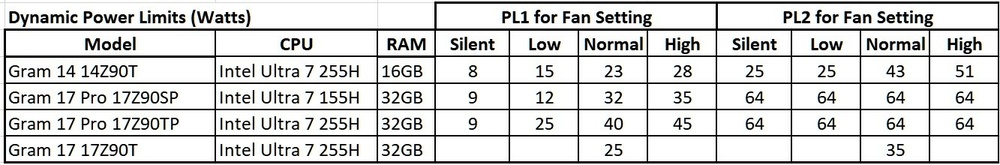

It's time to do some testing, which is made more complicated because the user-selected fan speed controls the CPU performance in longer tests. LG’s design logic is to specify different power limits in the BIOS according to the cooling mode option selected by the user using either the LG “My gram” app or the Fn+F7 key combination. The system has two power limit values. PL1 is the maximum long duration power which can be supplied to the CPU and PL2 is the short duration power limit. HWiNFO reports this information for the Intel 255H CPU:

Those are the static (i.e. base value) power limits which LG designers considered appropriate for the notebook’s thermal performance. There are also dynamic power limits which change according to the cooling mode and can be seen using HWiNFO’s sensor data. I have summarised these and compared them with the corresponding values for some other LG notebooks. I have ignored the AI cooling mode because one initial test showed it to be slower than the low fan setting. Perhaps it measures the ambient noise.

It can be seen that LG have given the 2025 gram Pro higher PL1 values than both its predecessor and the other 2025 models with the same CPU. The difference on the low fan setting is substantial. More power should enable higher performance until the CPU reaches the maximum allowable temperature of 105°C.
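To illustrate how a long duration limit (PL1) and a short duration limit (PL2) can interact, here is a deliberately simplified toy model. It assumes the firmware tracks a running average of package power with a time constant and allows bursts up to PL2 only while that average is below PL1. That is a common description of the mechanism, not something confirmed for this notebook. All numbers, the function name, and the time constant are hypothetical and are not the values HWiNFO reported.

```python
import math


def simulate_power_limits(demand_w, pl1_w, pl2_w, tau_s, dt_s=1.0):
    """Return the power granted at each time step under a toy PL1/PL2 model.

    The running average of granted power is an exponentially weighted moving
    average with time constant tau_s. While that average is below PL1 the CPU
    may draw up to PL2; otherwise it is held to PL1.
    """
    alpha = 1.0 - math.exp(-dt_s / tau_s)  # EWMA weight for one time step
    average_w = 0.0                        # assume the CPU starts out idle
    granted = []
    for wanted_w in demand_w:
        cap_w = pl2_w if average_w < pl1_w else pl1_w
        power_w = min(wanted_w, cap_w)
        average_w += alpha * (power_w - average_w)
        granted.append(power_w)
    return granted


# Hypothetical limits for illustration only (NOT the gram Pro's real values).
trace = simulate_power_limits([100.0] * 120, pl1_w=25.0, pl2_w=60.0, tau_s=28.0)
burst_steps = sum(1 for p in trace if p > 25.0)
print(f"burst at PL2 for {burst_steps} s, then held at {trace[-1]:.0f} W")
```

In this sketch a sustained heavy load gets a short burst at PL2 and then settles at PL1, which is consistent with longer benchmarks being more sensitive to the PL1 value a cooling mode selects than short ones.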

- 3 replies

Tagged with: lg gram pro 17, review (and 1 more)