Everything posted by Ionising_Radiation

-

I have a 180 W adaptor. I need to buy a 240 W one... Bummer. The repaste was performed by the Dell technician who replaced my RTX A4000 with the RTX 3080. I have just purchased an 80×80 mm sheet of Honeywell PTM7950 thermal pad, which should arrive in a couple of weeks. I thought Thermal Grizzly Kryonaut (or Conductonaut, or Carbonaut) or any of the various carbon pads were all the rage a couple of years ago back on NBR...

-

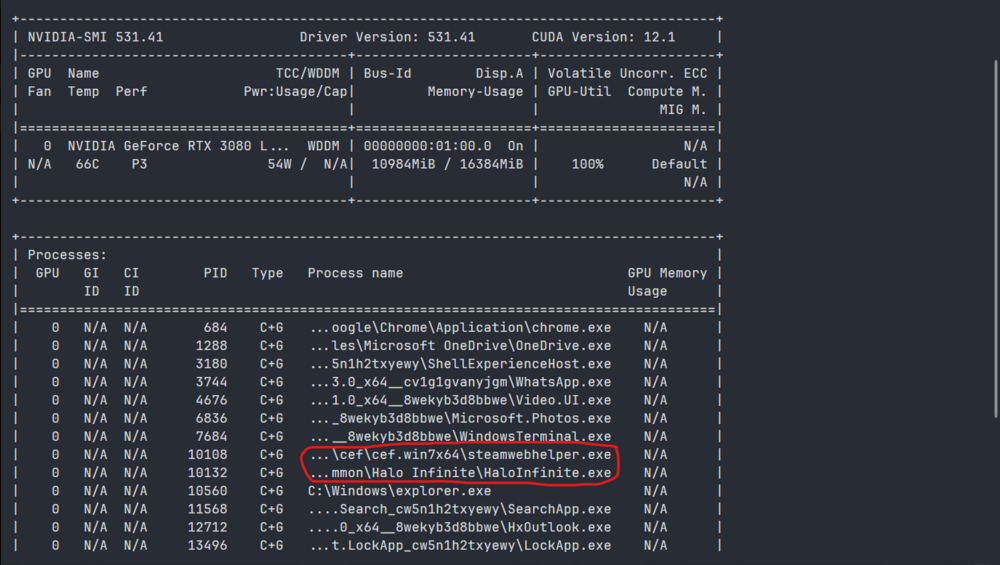

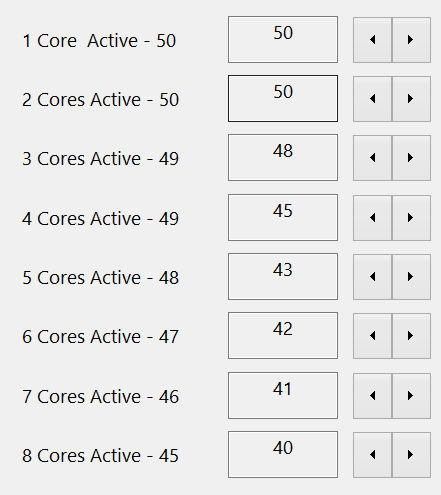

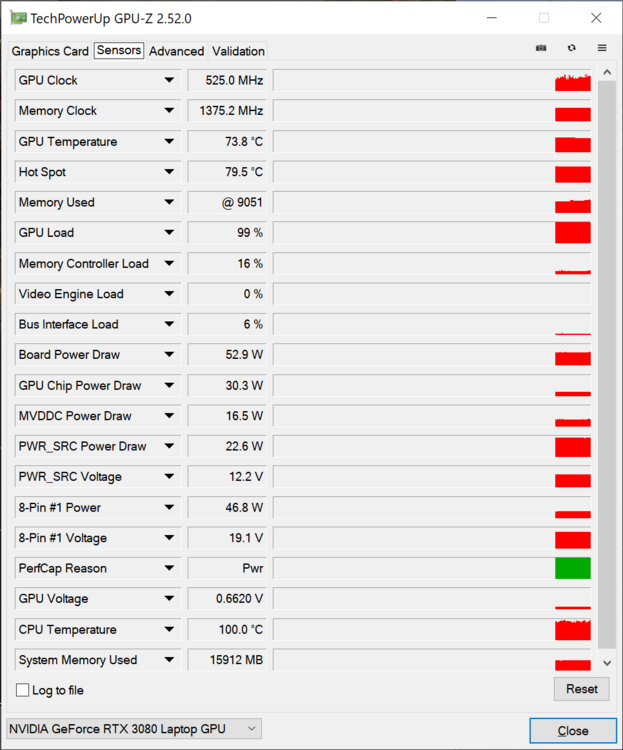

It has been doing this intermittently over the past several months, at least. It seems Halo Infinite is one game where this happens particularly often (it's not even that GPU-intensive). I presume this is the Dell Power Manager thermal mode? It was set to High Performance. I'll change it to 'Optimised'. Is there somewhere I can monitor this? @Etern4l, I have downclocked my cores as you suggested:

-

It's switched off for me. There appears to be another setting, 'Adaptive C-states for Discrete Graphics' which was on. This forum thread suggests that the same problem as mine (sudden clock speed drops and hence framerate drops) is caused by it. I have now turned it off. I'll see if the problem resurfaces after this.

-

At first glance, the new ZBook Furies look very favourable compared to the equivalent Dell Precision 7680 and 7780. The biggest issue for me is price; when I last checked, HP's laptops were easily 50% more expensive than Dell's, and it is hard to justify that sort of premium. I still want to see where it goes, though, because I much prefer HP's all-metal design, straightforward keyboard layout, real trackpad buttons, high-quality speakers... And perhaps most importantly, the fact that HP offers a 4K-class, 16:10, high-refresh-rate, deep-colour, wide-gamut display (whereas even the 7680 is still stuck with a 1920×1200 60 Hz panel) is very enticing indeed.

-

Dell is pushing CAMM, a proprietary RAM module format

Ionising_Radiation replied to Etern4l's topic in Components & Upgrades

But... it's not a proprietary solution. @Aaron44126 literally just said Dell offered it to JEDEC as a standard connector/form factor for notebook DRAM, which has been accepted and even expanded by JEDEC.

-

`nvidia-smi` will answer both. Arch Linux has `nvidia-prime`, which provides `prime-run`. It looks like Ubuntu has a similar package, which provides similar functionality with `prime-select` or the NVIDIA X Server Settings window (`nvidia-settings`). `prime-run` is essentially a one-liner shell script that sets environment variables for the command it launches (and any child processes):

    #!/bin/bash
    __NV_PRIME_RENDER_OFFLOAD=1 __VK_LAYER_NV_optimus=NVIDIA_only __GLX_VENDOR_LIBRARY_NAME=nvidia "$@"

Then you run any program off the terminal with this, e.g. `prime-run vkcube`. I have never played games on Linux (and I fundamentally dislike gaming with a translation layer, even if it works more-or-less seamlessly), so I haven't tested this in great detail. Again, the programs and utilities in `nvidia-prime` ought to help.

Overall, on Linux, GPU switching is provided in the OS by PRIME and PRIME Render Offload (pun: Optimus PRIME), and power management by the NVIDIA driver's Runtime D3 power management implementation (RTD3). With a modern NVIDIA GPU (Turing and later), there is generally very little configuration needed to fully power down the dGPU. I can achieve > 12 hours of battery life with PRIME, which is better than Windows.
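If you'd rather not install the package, here is a rough sketch of the same idea as shell functions, plus a quick way to see whether the dGPU has actually powered down. The function names `prime_run_sketch` and `dgpu_runtime_status` are made up for illustration, and the PCI address `0000:01:00.0` is only an assumption (check yours with `lspci`); I haven't verified this on anything other than the general sysfs layout.

```shell
# Sketch only: both helper names below are hypothetical, not part of nvidia-prime.

# Run a command with the PRIME render-offload variables set for that
# command (and its children) only; the calling shell is left untouched.
prime_run_sketch() {
    if [ "$#" -eq 0 ]; then
        echo "usage: prime_run_sketch <command> [args...]" >&2
        return 2
    fi
    __NV_PRIME_RENDER_OFFLOAD=1 \
    __VK_LAYER_NV_optimus=NVIDIA_only \
    __GLX_VENDOR_LIBRARY_NAME=nvidia \
    "$@"
}

# Print the kernel's runtime power state for a PCI device, read from sysfs.
# $1: sysfs device directory. The default address is an ASSUMPTION.
dgpu_runtime_status() {
    local dev="${1:-/sys/bus/pci/devices/0000:01:00.0}"
    if [ -r "$dev/power/runtime_status" ]; then
        cat "$dev/power/runtime_status"
    else
        echo "unknown"
    fi
}
```

You would then run something like `prime_run_sketch vkcube` and, once it has been closed for a few seconds, call `dgpu_runtime_status`. If runtime power management is doing its job, I would expect the state to go from `active` back to `suspended`.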

-

Check out sbctl. Or, if you want to do it all yourself, the Arch wiki has a detailed guide on self-signed boot images. If you do this, make sure you also read the section on dual-booting with Windows. If you don't include the Microsoft 3rd Party UEFI CA certificate, you will soft-brick the machine when you switch to NVIDIA-only graphics in the firmware, because the GPU option ROM (OpROM) can't load and you won't have any display output.
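For reference, the sbctl route looks roughly like the sketch below. The wrapper names (`run`, `secure_boot_sketch`) and the `DRY_RUN` switch are my own invention so that you can read the command sequence before letting it touch your firmware; the image path you pass in is whatever your setup uses. As far as I know, the `-m` flag on `enroll-keys` is what includes Microsoft's certificates alongside your own keys, but do check `sbctl --help` on your version.

```shell
# Sketch only: run(), secure_boot_sketch() and DRY_RUN are hypothetical helpers.
# With DRY_RUN=1 (the default here) commands are printed instead of executed.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# $1: path to the EFI binary or unified kernel image to sign.
secure_boot_sketch() {
    if [ "$#" -ne 1 ]; then
        echo "usage: secure_boot_sketch <efi-image>" >&2
        return 2
    fi
    run sbctl status            # the firmware should be in Setup Mode first
    run sbctl create-keys       # generate your own signing keys
    run sbctl enroll-keys -m    # enrol them together with Microsoft's certificates
    run sbctl sign -s "$1"      # sign the image and remember it for re-signing
    run sbctl verify            # list which files are signed
}
```

Calling `secure_boot_sketch` with your image path prints the five commands in order; run them for real as root with `DRY_RUN=0` only once you are sure the firmware is in Setup Mode.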

-

You'll need a fork of libfprint, libfprint-tod, to get started. Presumably the Ubuntu package is libfprint2-tod1. As for your desire for a more Windows-esque workflow... You should really consider trying to get a KDE Plasma-based distro to work; you might end up fighting with it a lot less than with GNOME.

-

@Aaron44126, thanks for the quote. I don't use Ubuntu + GNOME, but rather Arch + KDE Plasma, so I'm not sure how much translates to that, but the key ideas should be the same. The biggest sources of headache with Linux are NVIDIA graphics cards; I get a feeling your woes with KDE Plasma have to do with Wayland + NVIDIA, which isn't a great combination (yet). Here are some relevant tips...

- Blacklist the nouveau open-source module during install, if you're using NVIDIA-only: `nouveau.blacklist=1`
- Use the latest possible proprietary driver from apt, not NVIDIA's official sources.
- Ampere and later require no additional configuration for PRIME nor Optimus power management, unlike Pascal or Turing. Linux works well with both Optimus and discrete-only.
- Ensure the `i915 nvidia nvidia_modeset nvidia_uvm nvidia_drm` modules are in your initramfs. This allows the Intel and NVIDIA drivers to be loaded as early as possible.
- You may need to add `ibt=off` in your kernel parameters, again, because of NVIDIA shenanigans. Apparently the newer 500.XX drivers don't have this issue; I haven't tested this yet.

Other tips:

- Disable Secure Boot. I don't know if the Ubuntu bootloader is signed, but this really makes life easier.
- Consider installing Linux on its own disk to make life easy (Windows updates may mess with GRUB).

My own setup involves the following specialities:

- BTRFS subvolumes instead of ext4 and partitions, to allow for dynamic resizing
- LUKS-encrypted drive, with keys enrolled in the TPM to automatically decrypt on boot
- systemd-boot instead of GRUB
- Copied-over Windows bootloader from Windows' EFI partition to the Linux EFI partition, which systemd-boot automatically recognises and lists in the boot list
- Unified kernel images, which are also automatically recognised by systemd-boot. These are self-signed with sbctl for Secure Boot, but I have since disabled it altogether because I was facing issues with hibernate on Windows.
- I use the linux-zen and linux-lts kernels, so it's easiest for me to use DKMS with nvidia-dkms to automatically generate the modules every time I receive a kernel update. These require the linux-zen-headers and linux-lts-headers packages, of course.
- iwd wireless backend, but NetworkManager front-end so KDE Plasma has a nice settings menu

Others are mostly personal preference (zsh over bash, custom icons), but by and large I use Plasma almost stock.
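A quick sanity check for the module tip above, as a sketch: the function below (the name `missing_early_modules` is hypothetical) prints whichever of the five modules are not currently loaded, reading `/proc/modules` by default. Note that this only tells you what is loaded after boot, not what is actually inside the initramfs image. On Arch the list normally goes in the `MODULES=(...)` array of `/etc/mkinitcpio.conf`; I assume Ubuntu's initramfs-tools has an equivalent, but I haven't checked.

```shell
# Sketch only: missing_early_modules is a hypothetical helper name.
# $1: a file in /proc/modules format (defaults to /proc/modules itself).
missing_early_modules() {
    local modules_file="${1:-/proc/modules}"
    local mod
    for mod in i915 nvidia nvidia_modeset nvidia_uvm nvidia_drm; do
        # Each line of /proc/modules starts with the module name.
        if ! awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file"; then
            echo "$mod"
        fi
    done
}
```

Run `missing_early_modules` with no arguments after a reboot; empty output means all five modules are loaded.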

-

Sort of the opposite of the 7530 and 7540; there, the silver and black frame around the laptop was the 'cage' that everything was attached to, so if you wanted to change the palmrest, it was only about a third of the way into disassembly. In the 7550 and 7560, the palmrest itself is the frame to which everything is attached. Not sure how it is in the 7670 and 7680. My laptop has already been thoroughly stripped down and reassembled twice by technicians, both times for a motherboard replacement. I have run out of patience to tinker, so even my RTX 3080 was installed by the technician the second time the motherboard was replaced.

-

I experience this too. On top of that, the external output occasionally just stops working, all my active windows collapse back onto the internal display, and I have to power-cycle my monitor to get things going again. As far as I've seen, this doesn't happen on Linux (whether X11 or Wayland), but Linux comes with its own set of issues which keep me from using it day-to-day.