-

Posts

461 -

Joined

-

Last visited

-

Days Won

4

Posts posted by Clamibot

-

-

12 minutes ago, electrosoft said:

RTX Pro 6000 is absolute pure baller class. If price was no object, I'd scoop one up ASAP and pray it doesn't sound like Der8uaers.... 🤣 It is the 5090FE TI Super edition.....

As for the Astral 5090, I had fun for two months playing around with it. Trying several vBIOS, OC'ing it, benching and collecting data in WoW, Fallout and Deus Ex along with 3Dmark stuff. Testing V/F curve offsets vs traditional, idle testing and all the fun stuff that was offered along with the 5080FE from launch moving through all the drivers and such.

I'm good right now with the 9800X3D + 9070xt. $3600 is steep and personally I loved the 5090 overall but the bang:buck just wasn't there especially in WoW. I think I said it when I got it the problem was the 4090 was already being underutilized in PvP/PvE content and the 5090 just extended that even more.

I really gathered a lot of data between the 9070xt and 5090 with WoW and I don't know if it is driver overhead in combination with the WoW engine but when the 5090 is firing on all cylinders, it is firing monstrously. Put the 5090 in an area with zero players and letting the CPU feed it properly and it was almost doubling the fps of the 9070xt (also at 5x the price) with both cards hitting 99% utilization. The problem comes back to player data/physics and when raids/orbs/assaults come into play, both cards tank hard but I found in let's say the new Radiant Flame assault and the final boss fight that the 9070xt actually gets better fps than the 5090 on the bottom end when the CPU is just getting hammered overall. I ran it over and over switching between the 9070xt and 5090 using two independent SSDs with their own W11 installs and the 9070xt just handled the CPU tanking better than the 5090 which results in better game play overall when fps really start to dip.

I think I said it during my first round of ownership with the 9070xt when AMD fixed their drivers (understatement) and while the 9070xt was getting overall lower fps, the gameplay was just "smoother" than with the 5090 or 5080FE in WoW. Having run numerous Tier 11 delves solo, the gameplay, smoothness and responsiveness of the 9070xt is >=5090 even when it is getting ~160-170fps vs the 5090 clocking in at 238fps capped to monitor 240. This is with all the cards running 4k Ultra RT max.

I'm tempted to pick up another (that would be my 4th or 5th) 7900xtx so I can see if it is fixed properly too with the newest drivers and runs just as smooth but its Achilles Heel was RT on just brutalized it and it was always recommended to turn it off. The 9070xt doesn't suffer from that problem in WoW.

It seems odd to say it, but I'm finding the 9800X3D + 9070xt providing the best overall WoW experience atm especially during crunch time vs the 5090 when the CPU is gasping for breath and GPU utilization plummets.

On the other hand, the 5090 absolutely wrecked the 9070xt in Fallout 76 and when dealing with outdoor bosses or dailies with plenty of other players, you can definitely feel that difference between the 5090 and the 9070xt. 9070xt is completely playable, but man that 5090 brought the high heat and then some.

In the end, it was the $3600 just like in the end it was the $2665 for the KPE 3090ti that I could not reconcile....

The only cards on my radar now are potentially a 5090FE via VPA/BB because while it too is priced stupidly it isn't AS stupid but I'm in zero rush and that's because I want to dabble in some SFF build outs with it and that's the last area to explore with the 5090 for myself. When I get one, if it is a coil whine mess, off to eBay it goes.

Yep, AMD's performance these days screams. This is the reason I've switched over to only using AMD GPUs. Nvidia's drivers have a CPU side bottleneck that holds your framerate back. My how the tables have turned. This kinda stuff seems cyclical. We had Intel/Nvidia be the go to combo in the past, now it's full AMD. They'll continue leapfrogging each other.

The great thing is, we get increasingly more powerful toys! I don't have any brand loyalty, but I sure am a fanboy of whoever has the best stuff at the time.

-

-

Bartlett Lake confirmed!

Looks like we won't have to go to Xeon CPUs to escape the E-core madness after all!

-

-

2 hours ago, Mr. Fox said:

Everything would be so much better if NVIDIA would just admit 8-pin legacy PCIe power connections are better and recommend their AIB partners go back to it. But, they'll never do that. It is hard to respect people or companies that cannot admit that sometimes change is not good and demonstrate unwillingness to correct their own mistakes. Pretending to be right when it is obvious to everyone they are wrong makes them unworthy of respect.

This is the phenomenon of the "Intelligent Idiot". It's a very strange phenomenon where some otherwise intelligent people double down on their position even when they've been proven wrong because they cannot accept that they're wrong. Some highly intelligent individuals are also highly out of touch with reality.

-

-

1 hour ago, electrosoft said:

285k vs 9950X3D both tuned (ish)

Overall 9950X3D has higher fps, but the 285k has higher 1% lows sometimes substantially.

Now THAT coil whine is identical to my 3090 FE. Just throw in a massive amount of variable pitch changes like the Aorus.....

But with that being said, outside of the price, this is what the 5090 should have been from launch with just 32GB for the stupid prices being charged for the 5090.

I LOVE the look and design of the Pro 6000 more than the 5090 FE plus you get the full fat GB202. Icing on the cake is the 96GB to run larger LLMs (well for me, I don't know about you). For AI work, it makes more sense for me to pick up a M4 macbook than a 5090. It makes more sense to pick up an M3 Ultra than the RTX Pro 6000.

"The 5090 is the waste. The garbage from the production of these (6000)" - brutal.

His end rant is spot on. You are getting the die rejects for the 5090 and yields for the full fat are much smaller. I'm sure Nvidia is stock piling the dies that can't even make it to mid range 5090 status and we'll get a 5080ti to dump those off.

The fact the 6000 Pro can look and run like a 5090 is all you need to know in regards to Nvidia just pushing and upselling everything to absurd levels.

Everything about the RTX 6000 Pro is the 5090 FE on steroids from the looks to the internals. 🤑

I still want a 6000 Pro though.....just not at $8k+

Interesting video. I wonder what causes the drastic differences in 1% lows to flip flop depending on the game. I'm guessing it has something to do with inter-CCD latencies and the fact that only one of the two CCDs has the 3D V-Cache, which in my opinion is a bad idea. Homogeneous designs yield the highest performance.

So looks like for Intel to reclaim the performance crown, they need to do 2 things:

1. Add their own version of 3D Vcache underneath their CPU cores (I believe they have something similar to that, which they call Adamantine cache).

2. Get rid of the stupid E-cores and make all cores P-cores. I don't get the point of the E-cores. All they do is reduce performance for the most part, so I leave them turned off since leaving the E-cores turned off significantly increases my performance in pretty much everything, with games getting the biggest boost. I only had one case when using my 14900KF where they increased performance, and performance didn't increase by that much. Tripling your core count to get only a 17% increase in performance is an insanely bad ROI in relation to the amount of extra cores (yes, I know the core types don't have the same processing power, but even assuming the E-cores are half the processing power of the P-cores, it's still a very bad performance increase in relation to the number of extra cores). Intel's server class Xeon CPUs have all P-cores and they perform great due to not being shackled by E-cores. No weird scheduling shenanigans.
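A rough sketch of that ROI arithmetic, for anyone who wants to plug in their own numbers. It assumes the 14900KF's 8 P-cores plus 16 E-cores, takes the "E-core is half a P-core" weighting from the paragraph above as a given, and uses the 17% gain mentioned there; the function names are made up for illustration.

```python
# Rough ROI arithmetic for E-cores.
# Assumptions: 8 P-cores + 16 E-cores (14900KF), and the 0.5 weight is the
# "half the processing power" guess from the post, not a measured figure.

def p_core_equivalents(p_cores: int, e_cores: int, e_weight: float) -> float:
    """Total throughput expressed in P-core units."""
    return p_cores + e_cores * e_weight


def ideal_gain_percent(p_cores: int, e_cores: int, e_weight: float) -> float:
    """Gain from enabling the E-cores if scaling were perfect."""
    return (p_core_equivalents(p_cores, e_cores, e_weight) / p_cores - 1.0) * 100.0


ideal = ideal_gain_percent(8, 16, 0.5)  # 100.0
observed = 17.0                         # gain reported in the post
efficiency = observed / ideal           # fraction of the ideal gain realized

print(f"ideal +{ideal:.0f}%, observed +{observed:.0f}%, "
      f"scaling efficiency {efficiency:.0%}")
```

Under those assumptions the E-cores would ideally double throughput, so a 17% gain is about a sixth of what perfect scaling would give.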

Since Intel doesn't seem to want to ditch their E-core idea, we may have to move to their server class CPUs to escape the E-core insanity.

-

-

On 5/14/2025 at 1:58 AM, Developer79 said:

Yes, it is possible, as stated, but it doesn't make much difference since Nvidia has abandoned SLI technology!

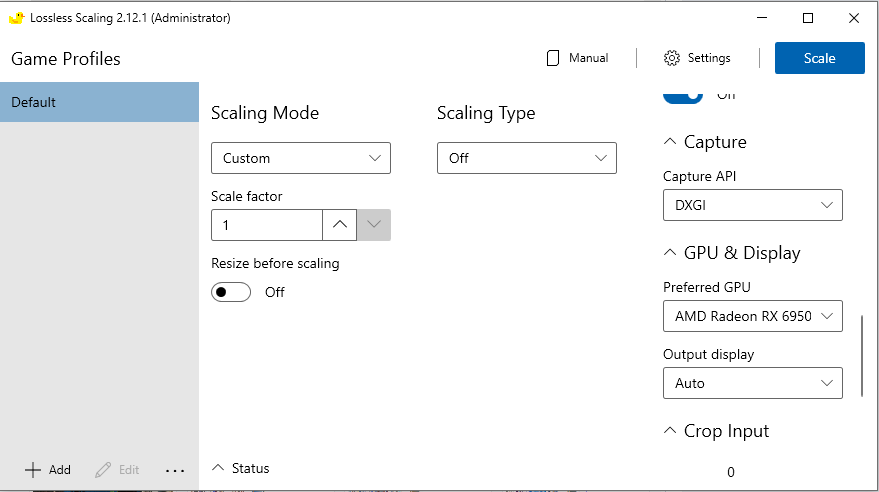

It makes a big difference now if you have Lossless Scaling. You can use one GPU for rendering the game and then a second GPU for frame gen. It's awesome, and is the best $7 I ever spent on any software. We're bringing back the days of SLI and Crossfire with this nifty little program!

-

-

3 hours ago, electrosoft said:

All that is guaranteed (assuming compatibility) is what is stamped on the boxes regardless whether it is memory, CPUs or GPUs.

For memory, you have to toss in MB compatibility and IMC along with other things on top of it.

But yeah, when I grabbed some Patriot 8200 kits before that couldn't even do 7800 TM5 30 minute let alone 90 or Karhu on my SP109 + Asrock Z790i but turned right around ordered TG 8200 that worked no problem up to 8600 and could post 8800.

Same problem binning B-die sticks too especially when it gets to voltages and timings. Same kits but others could go well beyond the others.

Crazy.

But good set of data to chew on!

Glad out of all of that a set emerged that could do 8000!

Yup, I can attest to this. My DDR4 kit I have in my older desktop (with the super bin 10900K from bro Fox) can do 4400 MHz CL 15 with default XMP subtimings as long as I have my Noctua IPPC fans blowing on the sticks at max RPM (3000 RPM). If I use lesser fans, the sticks aren't stable even with the normal 4000 MHz XMP profile as the RAM can't be kept cool enough to not error out. No wonder the previous owner waterblocked these sticks. The 4000 MHz CL 14 kit cost double what this kit did at the time I bought this kit, so I went with this kit instead and am still satisfied with having the second best. It looks like it can definitely still be pushed further with better cooling.

I typically do 4200 MHz as a daily driver speed as that is the best compromise between performance and stability (barring better cooling). A 5% overclock over XMP isn't bad given it was a brute force approach and I'm not experienced with RAM overclocking.
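For comparing the speed/timing combos mentioned above, here is a small sketch of the usual first-word latency formula. It assumes the "MHz" figures are DDR transfer rates (MT/s), which is how kits are normally labeled, and the helper names are just for illustration.

```python
# First-word latency and overclock percentage for DDR memory.
# Assumption: the quoted "MHz" numbers are transfer rates in MT/s, so the
# actual memory clock is half of that (hence the factor of 2000).

def cas_latency_ns(transfer_rate_mts: float, cas_cycles: int) -> float:
    """CAS latency in nanoseconds: cycles divided by the real clock in GHz."""
    return cas_cycles * 2000.0 / transfer_rate_mts


def overclock_percent(base: float, tuned: float) -> float:
    """Percentage increase of the tuned speed over the base speed."""
    return (tuned / base - 1.0) * 100.0


print(round(cas_latency_ns(4400, 15), 2))       # 6.82 ns (this kit, pushed)
print(round(cas_latency_ns(4000, 14), 2))       # 7.0 ns (the pricier CL 14 kit)
print(round(overclock_percent(4000, 4200), 1))  # 5.0 % over XMP
```

By this measure alone, 4400 CL 15 edges out 4000 CL 14, though real-world results also depend on subtimings and stability.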

-

-

I'd buy one of these if I hadn't already bought a 14900KF from you last November. That thing is awesome.

Bro Fox is an awesome seller. You can buy with confidence from him as you will get exactly what you paid for. He will also make sure you get what you paid for. As a result, I scavenge used hardware from him like a vulture when something is available.

-

9 hours ago, Maro97 said:

Now that's a real laptop! We need Clevo to make something like that.

-

-

6 hours ago, 1610ftw said:

Not sure what the argument is here. Clearly an desktop GPU board would only fit in the P870 but for using it as is the X170 is a better laptop because the added capability of the P870 to use a second GPU is not of much use any more and does not outweigh the keyboard, sound and lesser CPU cooling. In any case what is holding the P870 back the most is by now the CPU unless maybe you manage to put a 5080 in there for 4K gaming where frame rates will be affected the least.

I'd have to argue against your assertion that the P870's second GPU slot is not of much use nowadays, as dual GPU configs are having quite the revival right now due to Lossless Scaling being able to utilize 2 GPUs to render any game. The caveat is that this pseudo SLI/Crossfire revival is only amongst technical users, not the general public.

Additionally, there are productivity workflows that benefit from multi GPU configs such as lightmap baking, scientific computing, and video rendering just to list a few. Admittedly, these tasks are better performed on multi GPU desktops, but a multi GPU laptop is a boon for people like me who want or need all our compute power everywhere we go.

For the general public though, your statement does in fact hold true. I'm making an argument on a great degree of technicality here, and it really only applies to a niche set of users. I don't think the general public has really ever been interested in multi GPU configs due to the cost. However, the P870 is definitely a laptop oriented towards more technical users, so I think the multi GPU capability is still very much useful. I believe a dual RTX 3080 MXM laptop would have a little more GPU compute or similar GPU compute to the current top dog RTX 5090 laptop.

Having said that, I like the X170 a lot as well. It has the best speakers I've ever heard in a laptop and has VERY good CPU side cooling capabilities.

I'd really like multi GPU laptops to make a return to the market. They're awesome. Apparently it may even be possible to get a 40 series core working on a 30 series MXM board according to Khenglish, so it'll be interesting to see where that goes. Dual RTX 4090 P870? That would be a sight to see.

-

-

7 hours ago, Mr. Fox said:

I don't need it. I just today received a brand new 3080 from Israel that has more of them for under $350. These are like what they use in servers so they're a bit loud and hot, but I can fix that. An aftermarket backplate and waterblock will do the trick. This is indeed brand new and it shipped in generic whitebox type of packaging as though it was never intended for retail consumer distribution. Works like a normal 3080 though. Just stripped down with a crappy blower cooler. They've got more old stock on hand.

https://www.ebay.com/itm/187160768672

I love blower fan cards!

Although they're louder than axial fans, the noise doesn't bother me as it's not a high pitched whine. Blower fan cards also tend to be the cheaper models too (yay!), and they exhaust heat directly out of my case instead of dumping the heat inside. That's the reason I prefer them.

Also, blower fan cards are only 2 slots which makes it easier for me to build out multi GPU configurations.

-

-

1 hour ago, tps3443 said:

That’s excellent! I almost bought a copy from another guy who received a free copy as well. But then I discovered it’s free for Game Pass users, This new Doom game well, It’s not the typical Doom which are usually “Amazingly optimized on Vulkan” game, prior Doom mentality is = (run it maxed out on any GPU ya got) lol. 4K native pushes the 5090 ridiculously hard and actually uses it! Makes no sense though. Of course, DLSS+Frame Gen make it run really really easy. But, I don’t understand why they did this. It should not be this hard to run.. My 5090FE smacks it down hard, especially when I’m not recording gameplay, but not everyone has a 5090 lol. This game would whoop something like a RTX 5080 during 4K Native 🫠

Unfortunately, Doom: The Dark Ages is artificially demanding. It forces ray tracing, and you can't turn it off.

If you ask me, it doesn't look any better than Doom Eternal while costing significantly more performance to render. This is a significant regression in their game design as it doesn't make sense to use ray tracing for static environments. Baked shadows would've been much better.

The thing is, as a game developer myself, I actually have insight into this kind of stuff unlike regular players, so I know what's really needed and what isn't. I understand the team behind the game wanted to focus resources on making more levels, and that's great, but one of the selling points of Doom games was how easy they were to run despite how good they looked. Optimization went down the toilet with this release. Having said that, I still want to play the game as I'm a big fan of Doom, but now I need more powerful hardware again to reach my framerate target. It doesn't seem necessary for the game to cost so much performance to render though given what they achieved with the previous 2 installments. Hopefully we get a mod that allows us to turn all the raytracing crap off.

Seriously, it doesn't take that much effort to bake shadows on levels. It's an automated process, and you just have to let it run its course. Games run much faster with baked shadows. Rasterization will always yield superior performance, which is one of the most important things to consider with realtime interactive software like video games. If you can use baked shadows, you do that. If you have a static environment, use baked shadows. Raytraced shadows only make sense for dynamic environments where you can destroy stuff in the game world.

In regards to video game graphics in general, we're at a point of seriously diminishing returns. We need improvements in gameplay a lot more than pushing raytracing in every game.

-

-

10 hours ago, Snowleopard said:

Hi, has anyone see the other thread about the new mxm graphics cards in mxm a and mxm b formats? will any of them work with the x170sm-g?

I saw the posts in that thread.

I don't think these cards will work in the X170SM-G, but I'd like to be proven wrong. Looks like we'll need custom heatsinks to start with as the X170 models use the custom bigger Clevo form factor MXM modules rather than the classic MXM type A or type B modules.

-

How does the Cryofuze compare to Phobya Nanogrease Extreme? Are they about the same? Phobya Nanogrease Extreme is the only paste I've been able to use in laptops without it pumping out.

-

-

6 hours ago, SuperMG3 said:

Hello.

Indeed my GPU does P0. With or without regedits.

When the GTX 485M has a driver, P5200 can do max 70w average and without a 485M driver, P5200 does 95W average...

I don't understand. 485M is also at state P0 at the same time as the P5200 while the 485M is doing nothing other than displaying...

I went back to read your previous posts and had a thought. The Quadro P5200 is the slave card and the GTX 485m is the master card, correct?

If that's the case, no wonder your benchmarks aren't as high as expected. You're running your benchmarks purely on the Quadro P5200, correct?

There is a performance and time cost associated with transferring information across the PCIe bus between 2 GPUs. If the master card is tasked with an operation, then sends data to the slave card to perform another operation on that data, and the data is then read back to the master card, that will result in a significant performance decrease due to latency penalties. However, in your case, the slave card does the work directly, right? There will still be a performance decrease in this case since there's still the readback time cost, but at least it only goes one way this time (slave card to master card). Since the slave card doesn't output directly to the screen, the work performed by it has to be routed through the master card and passed to the screen. There is a performance cost associated with this as both GPUs have to use their encoder and decoder units to process this transfer of data.

The confusing bit to me though is the massive performance deficit you're getting versus what you would be getting if the Quadro P5200 was connected directly to your laptop's screen. I would think there would be somewhere between a 10-20% performance decrease from having to pass the output through another graphics card (depending on how good the encoder/decoder units on both cards are). However, you're getting around a 40% performance deficit, so something is definitely wrong. Perhaps the encoder/decoder units on the GTX 485m can't keep up with the throughput of the Quadro P5200 and that stalls the rendering pipeline? The Quadro P5200 is much more powerful than the GTX 485m, so I have a feeling the GTX 485m is a bottleneck. Have you tried with any other cards as your primary display output card?

Typically with dual GPU configs, you want both cards to have the same amount of processing power or close to the same, as one card will bottleneck the other if the disparity between the cards' capabilities becomes significant. What is the most powerful card that will work in the master slot of your laptop? Were you able to identify any particular reason the Quadro P5200 would not work in the master slot?
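As a back-of-the-envelope check on the pass-through cost, here is a sketch that estimates how much raw bandwidth it takes to copy finished frames from one card to the other. Everything numeric in it is an assumption for illustration (1080p, 4 bytes per pixel, 120 fps, and a placeholder 8 GB/s link); it only models an uncompressed frame copy, not any encode/decode or driver overhead.

```python
# Estimate the bandwidth needed to copy rendered frames between two GPUs.
# All inputs below are hypothetical; swap in your own resolution, frame rate,
# and the usable bandwidth of whatever link the slave slot actually has.

def frame_copy_gb_per_s(width: int, height: int, fps: float,
                        bytes_per_pixel: int = 4) -> float:
    """Uncompressed frame traffic in GB/s (1 GB = 1e9 bytes)."""
    return width * height * bytes_per_pixel * fps / 1e9


def link_share_percent(traffic_gb_per_s: float, link_gb_per_s: float) -> float:
    """How much of the link's bandwidth the frame copies would occupy."""
    return traffic_gb_per_s / link_gb_per_s * 100.0


traffic = frame_copy_gb_per_s(1920, 1080, 120)  # about 1 GB/s
share = link_share_percent(traffic, 8.0)        # 8.0 GB/s is a placeholder

print(f"{traffic:.2f} GB/s of frame traffic, {share:.1f}% of the assumed link")
```

If the share comes out small for a given setup, raw copy bandwidth alone probably doesn't account for a deficit as large as 40%, which would point back at something else in the chain.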

-

-

@SuperMG3 Hey man, sorry for the late reply. I know you've been trying to get input from me for a bit as I've been tagged multiple times across your posts in multiple threads. I kept forgetting to respond.

So to solve your performance issues with the Quadro P5200, my first suggestion would be to check your power supply. When I upgraded my Alienware 17 from a GTX 860m to a GTX 1060, I ended up having to get a 240 watt power supply, else the card would never kick into its highest performance mode (its P0 state). It would stay stuck at its P2 state (medium performance) no matter what I tried when I had my stock 180 watt power supply connected to my laptop. If you already have a 330 watt power supply, that should be enough, but using dual 330 watt power supplies or one of those Eurocom 780 watt power supplies wouldn't hurt.

We can help diagnose your issue by checking the performance mode the card is going into by using Nvidia Inspector (not Nvidia Profile Inspector!). You should see a section in the program that says P-State, along with the performance state readout of the GPU. From what I remember diagnosing my GTX 1060, there were 3 possible states: P8 (low power state), P2 (medium performance state), and P0 (maximum performance state). Perhaps your Quadro P5200 is getting stuck on the P2 state for some reason like my GTX 1060 was, thereby limiting the maximum power draw. You can try overclocking the P2 state from Nvidia inspector to see if that nets you any gains.

You should be able to force the P0 state using this guide: https://www.xbitlabs.com/force-gpu-p0-state/

If you cannot force the P0 state and the GPU insists on staying in its P2 state, either the card is power starved or something else is wrong. If you've determined the power supply isn't the issue, then we'll have to do some further investigation.
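If you'd rather log the P-state from a script than watch Nvidia Inspector, a minimal sketch using `nvidia-smi` (which ships with the Nvidia driver) could look like the following. This is a swapped-in alternative to the Nvidia Inspector approach above, not the same tool, and older or modded cards may report `[N/A]` for some fields. The helper names are made up for illustration.

```python
# Poll the performance state and power draw of every Nvidia GPU via nvidia-smi.
# Assumption: nvidia-smi is on PATH; legacy cards may not report every field.
import subprocess

QUERY = ["nvidia-smi",
         "--query-gpu=name,pstate,power.draw",
         "--format=csv,noheader"]


def parse_pstates(csv_text: str) -> list:
    """Turn 'name, pstate, power' CSV lines into a list of dicts."""
    rows = []
    for line in csv_text.strip().splitlines():
        # Split from the right so a comma inside the GPU name is harmless.
        name, pstate, power = [f.strip() for f in line.rsplit(",", 2)]
        rows.append({"name": name, "pstate": pstate, "power": power})
    return rows


def read_pstates() -> list:
    """Run nvidia-smi once and return the parsed rows."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_pstates(result.stdout)


if __name__ == "__main__":
    try:
        for gpu in read_pstates():
            print(f"{gpu['name']}: {gpu['pstate']} at {gpu['power']}")
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        print(f"Could not query nvidia-smi: {err}")
```

Run it while a benchmark is looping: if the P5200 sits at P2 under load instead of P0, that matches the stuck-state behavior described above.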

-

-

This looks a lot like that Rev-9 laptop that came out a while back: https://www.notebookcheck.net/Massive-T1000-mobile-PC-supports-AMD-Ryzen-9-9950X3D-RTX-5090-and-other-desktop-CPUs-and-GPUs.977868.0.html

Soo maybe my Slabtop dreams will be realized sometime soon since these niche true desktop replacements keep popping up?

-

-

So interesting development today. I bought my mom a new laptop over the weekend since her old one was dying (had a good run though as it's 10 years old), and decided to do some tuning and benchmarking with it while setting it up for her. The Ryzen 9 HX 370 inside it is a powerhouse of a CPU when you max out the power limits. It benches higher than my 14900K, both in single core and multicore (specifically in Cinebench R15, which is the only version of that program I use for benching)! I also got rid of the windows 11 installation on it in favor of my trusty windowsxlite edition of windows 10 I really like for absolute maximum performance.

In short, after doing some tuning to maximize performance, the system is extremely snappy, and my mom is loving it. She snaps her fingers, the laptop is done doing what she wanted it to. I also tuned the speakers to give a sound quality boost. All in all, the Asus Vivobook S 16 is actually a pretty good laptop for general users (but bleh BGA🤣). The integrated graphics in this thing are pretty powerful too, I mean you can actually game on this thing! Even though the laptop isn't for me, since I bought it, I might as well have some benching and overclocking adventures while I'm setting the thing up. 🤣

However, this isn't even the most interesting part. This laptop has a really nice, glossy OLED screen, so I decided to do a side by side comparison to the screen installed in my Clevo X170SM-G. The verdict? Holy crap, my X170's screen is almost just as good as an OLED. I did not realize just how close to OLED level quality it was, which I was not expecting. So basically, rip off the stupid matte antiglare layer, increase color saturation a bit (from 50% to 70%), and now your IPS display looks like an OLED screen (yes, I modded my X170's screen by removing the matte anti glare layer, so it's a glossy IPS screen now).

The OLED screen on the Vivobook was kind of underwhelming when I tested it out some more. I mean, it's a super sharp 3.2K screen, but it suffers from black smearing? What? I thought OLEDs were supposed to have near instantaneous response times! It doesn't look like that's the case though as this OLED display gave me PTSD of me using my Dell S3422DWG VA panel, which has very heavy black smearing that I absolutely hate. The black smearing on the Vivobook's display isn't as bad, but it's still there, and my X170's IPS display has no black smearing whatsoever. If anything, my X170's IPS display feels much more responsive than the OLED display in the Vivobook. Granted, my X170's display is a 300 Hz display vs the 120 Hz display in the Vivobook, but OLED is supposed to have sub millisecond response times. It doesn't look that way to me at all, as sub millisecond response times should mean no black smearing.

So I guess OLEDs aren't the juggernaut the hype is making them out to be. Couple that with the expiration date on OLEDs, and I no longer want one. I'll just go with glossy IPS thank you very much. IPS seems superior in every metric except image quality, which it can almost match OLEDs if the IPS display is also glossy and you tune your color saturation, so good enough for me. Just goes to say, don't fall for the hype on any technology. Always do your own comparisons and testing, because sometimes the reviewers are just flat out wrong, just like how people keep saying there is no performance difference between windows 11 and windows 10. Uhh... yeah there is. I did my own benchmarking and get 20% higher framerates on windows 10, so I call BS. I'm now calling BS on the hype on OLEDs too. I'm glad I did not buy one, and now I no longer plan to buy an OLED display for my desktop. I'll just have to find an IPS display that has the matte anti glare layer glued on top of the polarizer layer instead of infused into the polarizer so I don't destroy the screen when removing the matte layer. Either that, or I'll have to find another way to glossify my Asus XG309CM monitor.

-

-

3 hours ago, ssj92 said:

This is cool, I'll give it a shot later, but I did find asus Z390 and Z490 SLI certs online, so I am going to try those.

Don't think I can bench 2x rtx titan in 3dmark even using that app

Yeah, the guide I wrote is more for maxing out gaming performance than benchmarking. It'll be really useful when I eventually get a 480 Hz monitor. I really like how these new ultra high refresh rate monitors look so lifelike in terms of motion clarity.

One of my good buddies has joked on multiple occasions that Icarus keeps flying higher (referring to me) whenever I get a new even higher refresh rate monitor.

-

-

On 3/21/2025 at 1:38 AM, ssj92 said:

Could someone with an SLI capable motherboard please help me out.

I just found out my W790 SAGE motherboard doesn't have an SLI certificate so I cannot enable SLI on my RTX Titans.

I tried extracting SLI cert from my old AW Area-51 R2 desktop motherboard, but it doesn't seem to work. So I wanted to see if a newer cert is needed (mines from 2014 X99).

if you have Z690 or any modern motherboard that supports SLI, can you dump your DSDT?

To do so, make a clover bootloader usb stick using: cvad-mac.narod.ru - BootDiskUtility.exe

Make the following folder once the usb is done: USB Drive>CLOVER>ACPI>origin

Then boot from it, at the boot menu when it asks you to select OS to boot from, press F4 and it will dump your DSDT file to:

USB>CLOVER>ACPI>origin>DSDT.aml

I want to see if a newer cert makes any diffference. I have 2 X99 certs that I tried injecting and it didn't work.

OR if someone is good at DSDT editing, I can attach mine here along with the guide I am trying to follow.

I messaged ASUS support but highly doubt they will send me a bios update with the SLI certificate.

I don't have any SLI capable motherboards, but I can offer you an alternative if you can't get SLI working. You can use Lossless Scaling instead to achieve pseudo SLI with much better scaling in the worst case. I posted some instructions on how to set this up a while back on this thread. You can even use a heterogeneous GPU setup for this and it works great!

On 12/2/2024 at 10:13 PM, Clamibot said:

Dual GPU is back in style my dudes!

I did some testing with heterogeneous multi GPU in games with an RX 6950 XT and an RTX 2080 TI, and the results are really good. Using Lossless Scaling, I used the 6950 XT as my render GPU and the 2080 TI as my framegen GPU. This is the resulting usage graph for both GPUs at ultrawide 1080p (2560x1080) at a mix of high and medium settings tuned to my liking for an optimized but still very good looking list of settings.

I'm currently at the Mission of San Juan location and my usage on the 6950 XT is 70%, while it is 41% on the 2080 TI. My raw framerate is 110 fps, interpolated to 220 fps.

One thing that I really like about this approach is the lack of microstuttering. I did not notice any microstuttering using this multi GPU setup to render a game in this manner. Unlike SLI or Crossfire, this rendering pipeline is not to split the frame and have each GPU work on a portion of it. Instead, we render the game on one GPU, then do frame interpolation, upscaling, or a combination of both using the second GPU. This bypasses any timing issues from having multiple GPUs work on the same frame, which eliminates microstuttering. We also get perfect scaling relative to the amount of processing power needed for the interpolation or upscaling as there is no previous frame dependency, only the current frame is needed for either process (plus motion vectors for interpolation, which are already provided). No more wasting processing power!

Basically this is SLI/Crossfire without any of the downsides, the only caveat being that you need your raw framerate to be sufficiently high (preferably 100 fps or higher) to get optimal results. Otherwise, your input latency is going to suck and will ruin the experience. I recommend this kind of setup only on ultra high refresh rate monitors where you'll still get good input latency at half the max refresh rate (mine being a 200 Hz monitor, so I have my raw framerate capped at 110 fps).
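To see why the raw framerate matters so much here, a quick sketch of the numbers: the game only produces (and reacts to input on) real frames, so responsiveness follows the raw frame time, not the interpolated one. The 2x multiplier, 110 fps cap, and 200 Hz monitor are the figures from this post; the helper names are just illustrative.

```python
# Frame pacing math for a 2x frame generation setup.
# Figures used below (110 fps raw, 2x multiplier, 200 Hz panel) come from the
# post; the functions themselves are a generic sketch.

def output_fps(base_fps: float, multiplier: int = 2) -> float:
    """Displayed frame rate after frame generation."""
    return base_fps * multiplier


def frame_time_ms(fps: float) -> float:
    """Time between frames in milliseconds."""
    return 1000.0 / fps


def base_cap_for(refresh_hz: float, multiplier: int = 2) -> float:
    """Raw frame rate that exactly fills the monitor's refresh rate."""
    return refresh_hz / multiplier


print(output_fps(110))                # 220
print(round(frame_time_ms(110), 2))   # 9.09 ms between real frames
print(round(frame_time_ms(220), 2))   # 4.55 ms between displayed frames
print(base_cap_for(200))              # 100.0
```

At a 60 fps raw framerate the same math gives 16.67 ms between real frames, which is the kind of gap where the added latency starts to be noticeable.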

To get this working, install any 2 GPUs of your choice in your system. Make sure you have Windows 10 22H1 or higher installed or this process may not work. Microsoft decided to allow MsHybrid mode to work on desktops since 22H1, but you'll need to perform some registry edits to make it work:

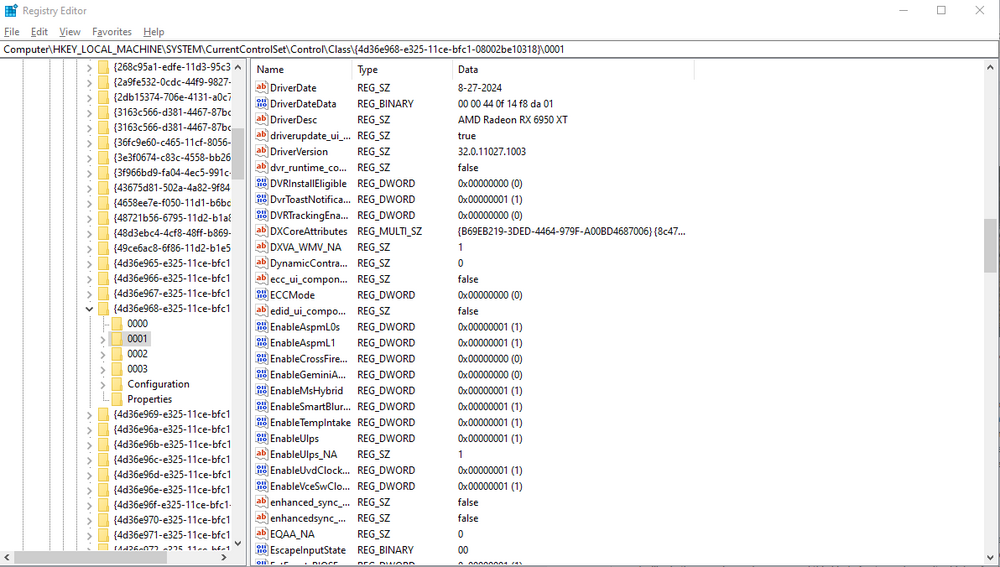

- Open up Regedit to Computer\HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class\{4d36e968-e325-11ce-bfc1-08002be10318}.

-

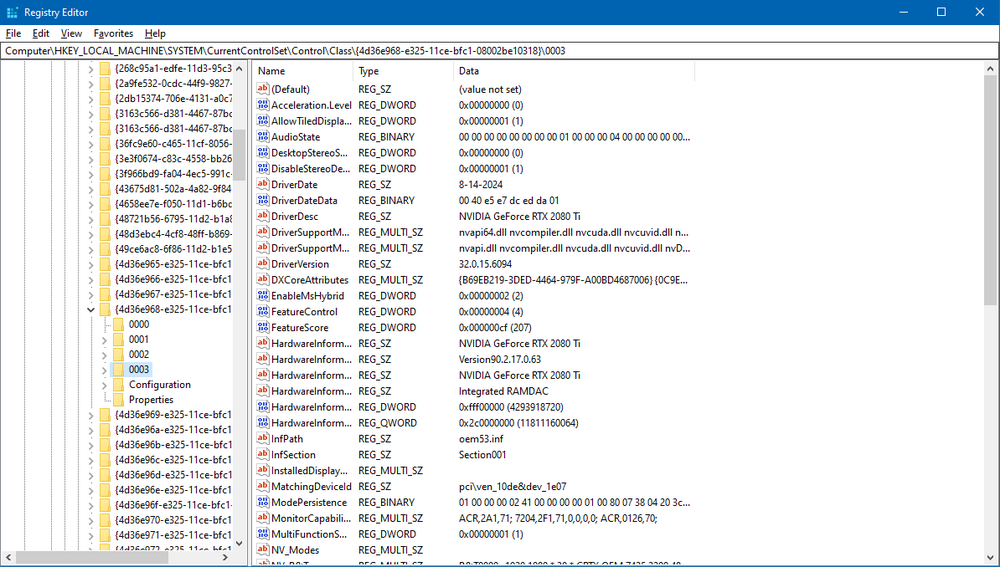

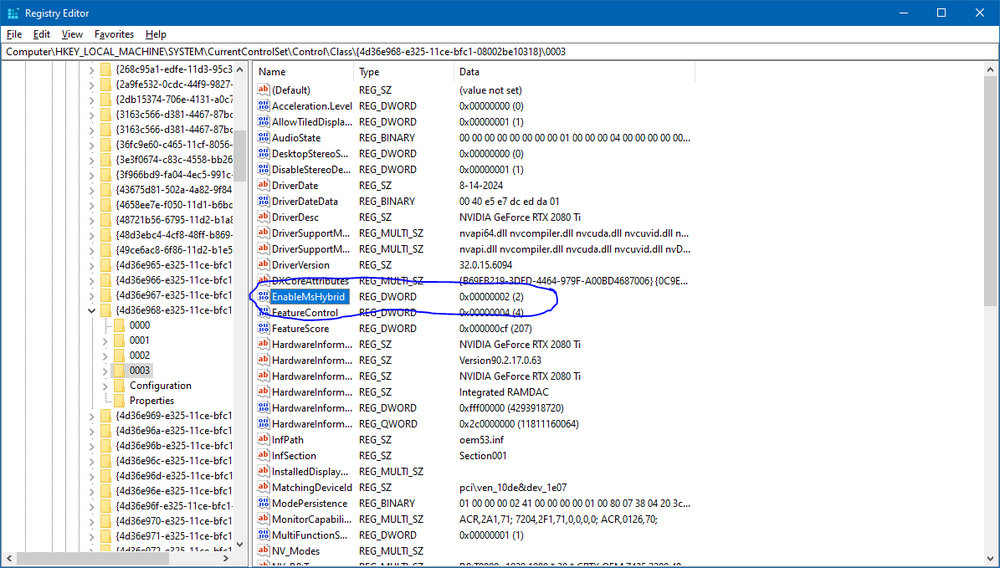

Identify the four digit subfolders that contain your desired GPUs (e.g. by the key DriverDesc inside since this contains the model name, making it really easy to identify). In my case, these happened to be 0001 fo the 6950 XT and 0003 for the 2080 TI (not sure why as I only have 2 GPUs, not 4)

-

Create a new DWORD key inside both four digit folders. Name this key: EnableMsHybrid.

Set the value of the key 1 to assign it as the high performance GPU, or set it to a value of 2 to assign it as the power saving GPU.

Set the value of the key 1 to assign it as the high performance GPU, or set it to a value of 2 to assign it as the power saving GPU.

-

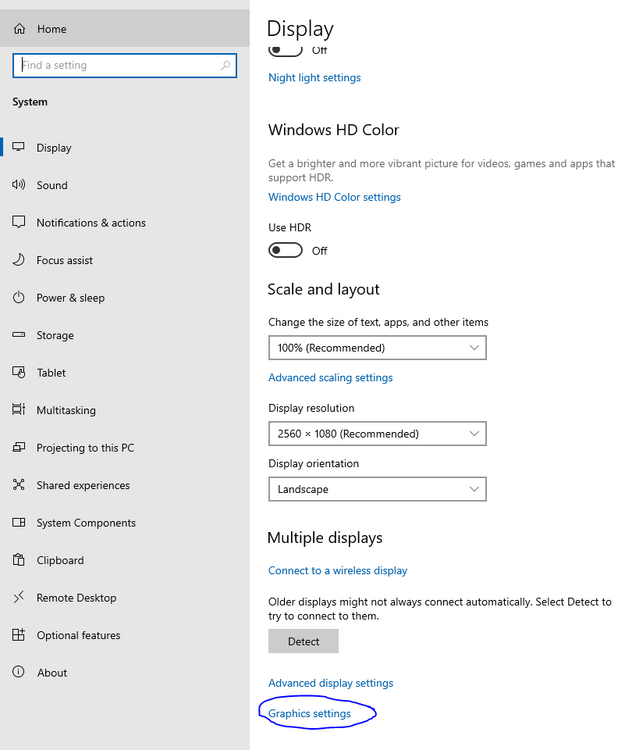

Once you finish step 3, open up Graphics Settings in the Windows Settings app

- Once you navigate to this panel, you can manually configure the performance GPU (your rendering GPU) and the power saving GPU (your frame interpolation and upsclaing GPU) per program. I think that the performance GPU is always used by default, so configuration is not required, but helps with forcing the system to behave how you want. It's more of a reassurance than anything else.

- Make sure your monitor is plugged into the power saving GPU and launch Lossless Scaling.

- Make sure your preferred frame gen GPU is set to the desired GPU.

- Profit! You now have super duper awesome performance from your dual GPU rig with no microstuttering! How does that feel?
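If you'd rather not click through Regedit by hand, the registry part of the steps above can be sketched as a small script that generates a .reg file for you to review and import. This is a minimal sketch, not a tested tool: `build_reg_file` is a made-up helper name, the subkeys 0001 and 0003 are just the ones from my system, and which GPU gets 1 or 2 in the example is an assumption you should adapt to your own setup.

```python
# Display adapter class key from the steps above.
GPU_CLASS_KEY = (
    r"HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Class"
    r"\{4d36e968-e325-11ce-bfc1-08002be10318}"
)


def build_reg_file(roles: dict[str, int]) -> str:
    """Build .reg text that sets EnableMsHybrid under each four-digit GPU subkey.

    roles maps a subkey such as "0001" to 1 (high performance GPU)
    or 2 (power saving GPU).
    """
    lines = ["Windows Registry Editor Version 5.00", ""]
    for subkey, value in roles.items():
        if not (len(subkey) == 4 and subkey.isdigit()):
            raise ValueError(f"expected a four-digit subkey, got {subkey!r}")
        if value not in (1, 2):
            raise ValueError("EnableMsHybrid must be 1 or 2")
        lines.append(f"[{GPU_CLASS_KEY}\\{subkey}]")
        lines.append(f'"EnableMsHybrid"=dword:{value:08x}')
        lines.append("")
    return "\n".join(lines)


if __name__ == "__main__":
    # Assumed role assignment for illustration: 0001 renders, 0003 does frame gen.
    print(build_reg_file({"0001": 1, "0003": 2}))
```

Save the printed text as a .reg file, double-check that the subkeys match your own DriverDesc entries, and back up the registry before importing it.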

This approach to dual GPU rendering in games works regardless of whether you have a homogeneous (like required by SLI or Crossfire) or heterogeneous multi GPU setup. Do note that this approach only scales to 2 GPUs under normal circumstances, maybe 3 if you have an SLI/Crossfire setup being used to render your game. SLI/Crossfire will not help with Lossless Scaling as far as I'm aware, but if it does, say hello to quad GPU rendering again! The downside, however, is that you get microstuttering again. I prefer the heterogeneous dual GPU approach as it allows me to reuse my old hardware to increase performance and has no microstuttering.

-


Looks like the 9070 XT is a really good card. It's super enticing since its MSRP is only $20 more expensive than what I paid for my 6950 XT about 2 years ago, and it's a big upgrade over the 6950 XT.

I know this is FOMO, but I think I'd like to get 2 of these before tariffs hit and relive the days of Crossfire, but with near perfect scaling through Lossless Scaling. I'd be set for a very long time with 2 of those cards giving me a worst case scaling of +80% in any game.

I have come to like AMD graphics cards very much after getting used to their idiosyncrasies and learning how to work around those, and I plan on only buying AMD graphics cards in the future. They are a better value than Nvidia and offer superior performance vs their Nvidia equivalents at all performance tiers, at least in the games I play. They work pretty well in applications I use for work too.

Usually I do upgrades mid generation and do so once every 3 GPU generations at least, but I may make an exception this time if prices on everything are going to skyrocket. Makes me wonder if the tariffs are just a ploy to jump start the economy by inducing FOMO in everyone to buy stuff up now, therefore drastically increasing consumer spending. I hope this is the case but am prepared for the worst. I'm no stranger to holding onto hardware for a long time as that is already my habit, but it's rare for me to upgrade so quickly, and it kinda feels like a waste of money if I don't need it. However, I also don't want to pay more later, so it may be better to just eat the cost now.

-


On 2/15/2025 at 1:35 AM, MushroomPanda said:

Besides that, I also have some questions.

Since there aren’t many users of this laptop around here, I haven't been able to find answers.

- Is the highest supported CPU for the X170SMG the 10900K? Would it be worth upgrading from the 10700K?

- Is the highest supported GPU the 3080? I was told that most second-hand MXM 3080s available now are leftovers from mining and are of poor quality. Has anyone tried upgrading to a ZRT 40-series MXM GPU? ZRT MXM GPUs

- If I want to overclock the 2080 Super, can air cooling maintain safe temperatures? Spending $100 to upgrade to liquid cooling seems a bit expensive.

The most powerful CPU supported by this laptop is an i9 10900K. Depending on your workload, it may be worth upgrading to as the 10900K is a 10 core CPU vs the 8 cores in a 10700K. That's a 25% increase in core count, so a 25% increase in theoretical multicore performance.

The RTX 3080 is the most powerful GPU that can be installed in this laptop. Unfortunately, Clevo abandoned this model really quickly and never made any further upgrades available. That's really sad as I got this laptop specifically for its upgradeability. Given the cost for a GPU upgrade (since a new heatsink is also required), it may not even be worth upgrading this laptop (not that I need one right now, but still).

You can overclock the 2080 Super fine in this laptop. I can do a 10% overclock, which is the max I can do without the GPU drivers crashing, and the heatsink is able to adequately cool the GPU. As for the CPU side, it is possible to run a 10900K full bore at 5.3 GHz all core depending on workload. I can run that speed while playing Jedi Fallen Order, which becomes a very CPU intensive game when you have insanely high framerate requirements like me. I have about a 200 watt power budget for the CPU side before it starts thermal throttling.

A few things to note about my CPU side cooling in this laptop: I got the laptop from zTecpc, so it was already modded from the factory. I also use a Rockitcool full copper IHS, which is flatter and has 15% extra surface area over the stock IHS, allowing for improved heat transfer. I also use liquid metal on the CPU, both between the CPU die and IHS, and also between the IHS and heatsink, so basically a liquid metal sandwich.

Also, this laptop does have a USB-C port with DP support. It's right next to the USB-A port on the right side of the laptop. You should see a strange looking D above it.

-

On 2/7/2025 at 11:56 PM, Tenoroon said:

Hey fellas, this is a less ideal post that will go down in my catalog, but I think it ought to be discussed. Some touchier topics will be discussed, so if you would rather not peer into my personal life, I completely get it.

I will edit this with a spoiler here at some point, but I cannot figure out how to add one on mobile. My apologies.

Last night, on February 6th, 2025, I was leaving a laboratory at my university. There's a sketchy road I must always cross by foot to get to my apartment, and while I thought I could make it, the impatience of man taught me a valuable lesson at a terrible time; Man does NOT win against automobile. The speed limit was 35mph on that road, and the driver did not see me. Luckily, he had an old, intelligently engineered car (a late 90s - early 2000s Chevrolet Cavalier I believe), so I ended up rolling over and on top of the car. I was blessed with some wonderful bystanders, and with the remorse of the driver.

I was carried away on an ambulance and to a hospital that is essentially on the campus of my university. I remember it all clearly, and I've been given every drug known to man it seems. The medical staff here and every gear in this machine has done phenomenal work, and I appreciate every person who has helped me along the way. Luckily, my head and spine are fine, and the only thing that needs repairs is my right leg, where my tibia shattered into multiple pieces. I'm only 18, and I will have to live with a titanium rod in my right leg for the rest of my life...

I'm not inherently a funny individual as I tend to be serious and practical, but during this whole situation, I have tried my absolute hardest to keep my family and friends from stressing all too much. There were times last night and today where I managed to knock all of the air out of someone's stomach, successfully bringing light to such a dark situation.

It's a really strange time, and my outlook hasn't changed much. I must keep rolling with the punches and focus on the good instead of the bad. I type this from a hospital bed, expecting a somewhat speedy, but life changing recovery. I don't write this out for any of you to pity me. As a matter of fact, the best thing you all can do is read this as a lesson, and NOT worry about me. I know I'll be fine, so you all spend your energy doing what you guys do best, I hate to be an obstacle in everyone's seemingly jam-packed lives.

However, I want this to be a message to you all to keep pushing to be the best person you can. This might sound a bit grim and edgy, but that is not my intent. I also realize it may sound like I am struggling with life, but I want you all to know that, even in my worst times, I have never had a desire to give up. I'm still learning and growing, but right now, I am a man who fears death in its entirety, and want nothing to do with it.

As bad as things seem to get, there is always a future, and while it may be impossible to see the light at the end of the tunnel, light will always welcome you. It's up to you to have faith in the light, even though you may never see it.

Take care of yourselves fellas and keep working to do your magic! Without you all, my life would be so different that I couldn't even fathom explaining it. You guys have enlightened me to some of my greatest passions, and I cannot thank you all enough!

I'm usually one to stay anonymous, but I think now is an appropriate time to quit. I might still be naive, who knows, but you guys are either good people, or really good at convincing me that you're not ill-intentioned. I guess I'll never know!

I'm sorry to hear you got hurt but am glad you're ok. I wish you a speedy recovery!

Like me, you are still very young so you will most likely make a quick full recovery along with some physical therapy. Scratch that, you WILL fully recover. Mind over matter. A positive attitude makes a world of difference. Although you don't know any of us here on the forums in real life, we're still here for you and care about you. Good luck in life brother!

-


*Official Benchmark Thread* - Post it here or it didn't happen :D

in Desktop Hardware

Posted

Funnily enough, if you buy Lossless Scaling for $7, you can get MFG on any GPU. You can even use the program to bring back the days of SLI/Crossfire with its dual GPU mode!

The software suites locked behind either Nvidia's or AMD's products do not add any value to their products. They're technologies that should and can work on any GPU, proven by this nifty little program.

Supposedly Lossless Scaling frame gen also runs better on an AMD card than an Nvidia one. I don't know if that's completely true, but that's what I've heard. It makes sense given AMD cards typically have very good theoretical compute capabilities.