Posts: 461

Days Won: 4


Posts posted by Clamibot

-


Just now, Mr. Fox said:

Totally agree. You could even do up to 1.600V. The biggest problem is keeping the memory cool enough to not error out. Once the memory temps go above about 45°C you start to lose stability.

Good thing I have some Noctua 3000 RPM iPPC fans.🤪

-


2 minutes ago, tps3443 said:

About 1.550V to IMC is okay daily.

Thanks. I'm going to see what heights I can achieve on this 4 DIMM motherboard then. Overclocking the memory has greatly increased my framerates in newer games (40% increase in performance in games like Control and Shadow of the Tomb Raider) vs what I get on my X170SM-G despite having the same CPU in both systems. Anyone who says memory doesn't matter for gaming performance is grossly misinformed as it makes a very big difference.

I saw people saying that 1.35v was the maximum safe voltage for the IMC on a 10900K 24/7, but these were the same people saying 1.55v on your memory would fry it despite there being XMP kits rated for that voltage. I wanted a second opinion from someone on these forums as opinions here are very accurate. Thanks again!


-

I finally got around to doing some memory overclocking with my kit. I'm on an MSI Unity Z590 motherboard with a 10900K and am running all 4 of my memory modules at 4100 MHz CL 15. What is the maximum safe voltage for the IMC on a 10900K? I had to raise the IMC voltage up to 1.47v to get this memory overclock stabilized.
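For anyone comparing kits, a rough rule of thumb is first-word (CAS) latency in nanoseconds: CL cycles divided by the real memory clock, which for DDR is half the quoted data rate. Here's a minimal Python sketch, treating the quoted 4100 MHz as the effective data rate (MT/s). The DDR4-3200 CL16 kit is only a hypothetical comparison point, not something from my setup.

```python
def cas_latency_ns(cl: int, data_rate_mts: float) -> float:
    """First-word CAS latency in nanoseconds.

    DDR transfers twice per clock, so the real clock is data_rate / 2 MHz,
    and one clock cycle lasts 1000 / (data_rate / 2) = 2000 / data_rate ns.
    """
    return cl * 2000.0 / data_rate_mts


print(f"4100 CL15: {cas_latency_ns(15, 4100):.2f} ns")
print(f"3200 CL16: {cas_latency_ns(16, 3200):.2f} ns")  # hypothetical comparison kit
```

That works out to about 7.32 ns for 4100 CL15 vs 10.00 ns for 3200 CL16. It only covers CAS latency though; subtimings and the IMC still matter a lot for real-world results.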

-

The Clevo X170SM-G and X170KM-G also have 4 M.2 slots, with a potential 5th if you replace the Wi-Fi module with an M.2 2230 SSD.

-

5 hours ago, electrosoft said:

It's about time for the fall hardware liquidation. I opened back up my ebay store and I plan to start listing items this week, but I'll be listing some items on the forums too. I think I'm going to sell off my 4090 or 7900xtx along with my NH55 along with the 12400 desktop, 7600x desktop and oodles of little parts here and there along with a couple of 12900k chips, some sodimm kits and maybe some other parts as I dig through my shelves and closet.

If anyone is interested on our little cozy thread first, let me know

Me me me! I like poaching used hardware off you guys as I know it's been well taken care of despite being benched on. I also know I'll get the item I paid for. I lurk here like a shark, waiting to chomp on any opportunities that arise. I won't guarantee I'll buy anything, but I definitely want to take a look at what's on offer.

-


@saturnotaku If this card hasn't been sold yet, I'm interested in purchasing it. How used is the card?

-

14 hours ago, tps3443 said:

Take a look at these Sapphire Rapids platforms. These are overclockable, unlocked, and even allow up to DDR5 6800+ on the RAM. They have all of the modern features Z790 does, only these CPUs do not use E-cores; they have all real big cores lol. “Think very modern and unlocked 7980XE” lol. It’s so cool! Or modern Xeon 3175X lol.

Intel has the Xeon 2565X which is a TRUE 18/36 unlocked Xeon chip that supports DDR5 6800+ in quad channel, so the bandwidth is really impressive. I had no idea this platform even existed. They have much more powerful CPUs available that drop right into the same socket. Really an awesome platform. The IPC is very very good! These could easily be used as gaming CPUs in a normal PC or workstation PC that has full-on overclocking ability.

Another note: LGA 4677 water blocks are available from several manufacturers. So this platform does not have many limitations.

I feel like I have been living under a rock. $1,500 for a CPU like that is expensive, but not too crazy. I have seen the motherboards as low as the $700 range. Considering one could drop in a Xeon 3595X, which is 60/120 cores/threads and is also unlocked, it’s not too terrible a value. Stuff like this intrigues me.

I’m amazed Intel went the direction we all scratched our heads about years ago. Intel makes a whole slew of unlocked Xeon CPUs released in 2023 and 2024 for this socket, LGA 4677.

@Mr. Fox Check out this video! https://youtu.be/jSae9muJviI?si=JI-YCpdR7dJTS4ib

Yeah I don't understand why Intel went the hybrid route on consumer CPUs when they didn't do the same thing on their server class CPUs. It seems very strange to me.

-


1 hour ago, electrosoft said:

Now THAT'S a proper laptop! That's exactly what I want! Looks like it has a 17-inch screen. It'd be nice if it had an 18-inch screen instead.

Optane P5800X as the boot drive with proper desktop hardware inside the chassis? This thing must be screaming fast.

Now where can I buy this case? This will be my last laptop as it's forever upgradeable. I'm sitting here thinking about what must be the eye-watering price for this thing, but that's just the upfront cost. In the long run, this route is much cheaper than buying a new laptop every 4-5 years.

Come to think of it, the kind of laptops I like weren't meant for long battery life, and I rarely ever use my laptops on battery power anyway. If I need to be away from a power outlet for a while, I usually just use my Legion Go instead since it's the more convenient device for that, but 95% of the time I have access to a power outlet plus a desk or table.

Dude, I want this thing so badly. Hopefully there's a way to buy it.

-


13 hours ago, ryan said:

Thanks for sharing that, I might give it a go..never hurts to have another game.

@Clamibot I bought the LS app and can't for the life of me get playable latency. It's like a 1-2 second delay in movements. I'm downloading Horizon 5 right now to check out the FG mod vs LS to see if they are doing the same thing. Thing is, even if the latency was acceptable (vsync), the image quality doesn't appear to be good: a lot of artifacting, in which case I could buy a program to lower the resolution to 480p and have a smoother experience and great visuals. What are people using this mod for, and is it possible my laptop has something configured wrong?

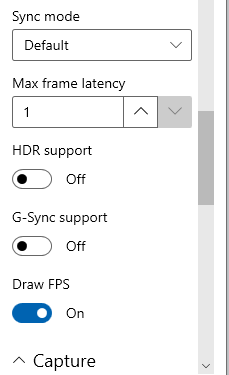

For best results regarding latency, your raw framerate should be 60 fps or higher, and you should be using the DXGI capture API. Also, try using the vsync options built into Lossless Scaling rather than your in game vsync options. Some people have reported running into issues when using the in game vsync settings while using Lossless Scaling.

As for the artifacting, are you using any upscaling from Lossless Scaling or just frame generation by itself?

-

Yeah I find the tool works best when your raw framerate is 60 fps or higher. Any lower than that and the input lag is just too much. Even then, my preference is for the raw framerate to be at least 120 fps. I'm so glad this tool exists as I'll really need it for 240 fps gaming.

I've been compiling a list of software tweaks, Windows setting changes, and overclocking settings over the years to maximize performance in games. Lossless Scaling is yet another tool to add to that arsenal.

-


I'm still a bencher/gamer hybrid. My desire to game hasn't waned at all, it's just that I have less time now than before because I have a lot more responsibilities.

The upside? OH MY GOODNESS I CAN BUY MY OWN HARDWARE NOW! YES YES YES YES!

I still spend time tweaking and tuning because it's fun, but ultimately my purpose for it is to get max performance in games, à la the Framechasers approach. Icarus continues to fly higher in me as I keep desiring higher and higher framerates. I'll be completely satisfied once the motion clarity looks like real life to me.

That point probably won't be until we have a 1000 Hz monitor or something. Maybe I'll be satisfied if the refresh rate is high enough to where it's "good enough" and I won't care much for additional improvements. I'll be moving to a 240 Hz monitor soon as I really like how smooth motion looks on those monitors. I love Micro Center, but sometimes it entices me to spend more money than I need to.🤣

It's all good though as you gotta enjoy life. I'm very blessed to have everything I need, and it helps that I only have 2 hobbies that require me to spend money (computers and video games).

As for the point on game performance, yeah I find it ridiculous that today's releases require so much processing power to perform well. Want the secret from an actual game dev (me!)? The games are just unoptimized. I specifically engineer games to run well on low end systems as doing so expands your potential audience and will make you more money. I don't understand why the big name studios don't seem to understand this. In fact, the studio I work for will be releasing a new game this Thursday, and it runs at 120 fps on a freaking Quest 2! The game has quite a bit of content too. Not everything has to be bleeding edge. Bleeding edge can sometimes also mean unrefined and suboptimal.

But hey, this is just my perspective as a high framerate gamer. What do you guys think?

-


11 hours ago, ryan said:

53 fps, basically double. I settled on 2x as the input lag is less noticeable. Really nice bump in smoothness. Just tested 4 games and all are promising. Going to test Horizon 5 and see how I fare with the input lag; if I can game without interference, then it's a big thumbs up to this app.

@Clamibot can you clarify something? When I run Forza Horizon 5 I'm getting 80 fps without FG at native settings, no DLSS, at 4K. When I use LS it says my framerate is 40 and being doubled to 80 fps, with an image that looks like 480p detail-wise while moving. Just wondering if you could show us what settings you're using, and why it reports half the framerate and then claims it's being doubled when in fact the doubled framerate is identical to the native framerate.

Here's my example:

Clearly getting 80 fps:

One change (LS):

Says 40/80?

It reports half the framerate since that is your raw framerate, and then your actual framerate will be double that if you selected x2 frame generation since the interpolation will generate the rest of the frames.

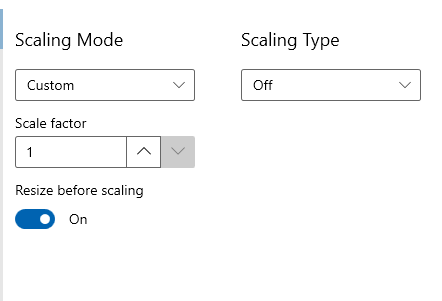

If you just want to use the frame gen and not the upscaling feature, set the scaling type to off, the scaling mode to custom, and the scale factor to 1. That should stop the game from looking like it's rendering at 480p.

Lossless Scaling has its own fps counter that will show you your actual framerate vs RTSS which shows the raw framerate.
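To make the counter arithmetic concrete, here's a rough Python sketch. This is just my own illustration of the math, not anything taken from Lossless Scaling itself, and the function names are made up: with x2 frame gen, each rendered frame gets one generated frame after it, so the displayed framerate is the raw framerate times the multiplier.

```python
def displayed_fps(raw_fps: float, multiplier: int) -> float:
    """Framerate shown on screen when each rendered frame is followed by
    (multiplier - 1) generated frames."""
    return raw_fps * multiplier


def raw_fps_for_target(target_fps: float, multiplier: int) -> float:
    """Raw (rendered) framerate the game has to sustain to reach target_fps
    with the given frame gen multiplier."""
    return target_fps / multiplier


# The 40/80 readout: 40 fps rendered with x2 frame gen.
print(displayed_fps(40, 2))
# Raw framerate needed for 240 fps output at x2.
print(raw_fps_for_target(240, 2))
```

So the 40 in the counter is what the game is actually rendering, and the 80 is what ends up on screen.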

-


1 minute ago, ryan said:

LINK?

https://store.steampowered.com/app/993090/Lossless_Scaling/

If it ever feels like it's not working, try a different capture API. DXGI is the most performant, but WGC and GDI may be the only ones that work on certain games.

-


I tried out frame gen through Lossless Scaling, and it produces some really good results. This is a really nifty little program!

While yes these are interpolated frames rather than raw frames, it still leads to a significantly better experience vs running your game at half or a third of your screen's refresh rate without frame gen. I find I get the best results when running games at half my monitor's refresh rate (raw framerate) and then using frame gen to interpolate to double that, matching my monitor's refresh rate.

I can definitely notice the latency penalty due to my raw framerate only being half the displayed framerate, but as I said, it's still a significantly better experience vs no interpolation at all. However, if you use a controller rather than a mouse, this almost completely hides the latency penalty, as a controller is a rate-over-time input device rather than an instant input device like a mouse. I had very good results on my Lenovo Legion Go when using x2 frame gen. I would not recommend doing x3, as the input latency penalty is very noticeable when your raw framerate is only a third of your refresh rate.
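Here's a quick way to put rough numbers on the x2 vs x3 difference, as a Python sketch. It assumes your input only shows up once per rendered (raw) frame, so the raw frame time is a floor on responsiveness; real end-to-end latency also includes the frame gen processing and display delay, which this ignores. The 144 Hz refresh rate is just an example.

```python
def raw_frame_time_ms(refresh_hz: float, multiplier: int) -> float:
    """Time between rendered frames when frame gen fills the rest of the
    refresh rate, i.e. raw fps = refresh / multiplier."""
    raw_fps = refresh_hz / multiplier
    return 1000.0 / raw_fps


for multiplier in (1, 2, 3):
    ms = raw_frame_time_ms(144, multiplier)
    print(f"x{multiplier}: {144 / multiplier:.0f} fps raw, {ms:.1f} ms per rendered frame")
```

At 144 Hz that's about 13.9 ms between real frames at x2 vs about 20.8 ms at x3, which lines up with x3 feeling a lot laggier.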

All in all, Lossless Scaling is awesome for handheld devices like the Lenovo Legion Go. If any of you guys own something like the Legion Go, Steam Deck, or ROG Ally, I'd highly recommend getting Lossless Scaling, as that will allow you to enjoy high framerate gaming on the go with AAA titles!

Of course nothing beats raw frames, but frame gen is really good if you're using a controller and can't hit a 144 fps raw framerate.

-


1 hour ago, Papusan said:

And here are the fun facts. Nvidia hates cheapo gamers. Now you need to buy more expensive OC cards to get the same performance as the released vanilla 4070. Once again gamers have to pay extra due to the AI boom. Last time it was crypto, now AI. What next?

And if you want throw your hard earned money down the drain.... Buy this pretty overpriced 5.7L wreck. What a disgusting -- product.

MXM Type A RTX 4060 in that desktop in the second video 🤪.

That should provide a nice upgrade to anyone still running a laptop with an MXM GPU.

-


So it appears if you just lock all your cores to the same frequency, 13th and 14th gen CPUs from Intel should not experience the degradation issue we've all been hearing about. Makes sense since you won't be supplying higher voltages to any cores than necessary.

I've never understood single core boost vs all core speeds. With every CPU I've ever owned, I always lock all the cores to the same speed (usually the single core boost speed so all cores operate at that frequency if I'm gaming or working, or at around 2.5-3.5 GHz on battery power). I don't know why anyone wouldn't want to run their CPU this way, as you can't truly get max performance unless all cores are running at their maximum frequency. Not only that, having a big gap in clock speeds seems like it would cause problems, as you'd end up frying certain cores with a much higher voltage than necessary for the speed they're running at. Do newer chips have the ability to supply a per-core voltage independent of all the others? I'm pretty sure they all still get supplied the same voltage, which is going to be dependent on the highest frequency core.

All the CPUs I've had can run their single core boost speed across all cores at once. Is that uncommon, and thus the reason single core boost exists? To increase performance in lightly threaded workloads on chips that can't handle running that speed on all cores at once? A little disclosure, I don't usually run benchmarks but rather game on that all core frequency across all cores, or run in game benchmarks. Maybe that affects my perceived results?

-


2 hours ago, tps3443 said:

Windows 11 24H2 performs really well. I'm too obsessed with performance as is. What CPU are you using anyways?

A 10900K, both on my desktop and laptop.

-

I'm still on Windows 10 LTSC V1809. It's held up incredibly well and there are minimal compatibility issues with new software at the moment. As far as I can see, just Unreal Engine 5 titles and Starfield so far.

I have so many games I can play through that the incompatibility with a few newer titles doesn't matter, and I'll only be updating if I get a performance uplift or if performance remains the same. I've benchmarked Windows 11 vs Windows 10 LTSC 1809. I get about a 20% uplift in framerate with LTSC V1809 vs Windows 11 across most of my games.

I don't know how people are getting better performance in Windows 11 vs Windows 10. My benchmarking says otherwise. To be fair, they're probably comparing against standard Windows 10 rather than LTSC, but even then I wouldn't expect Windows 11 to perform better.

-


As the title says, Humble Bundle currently has a deal for a big collection of Resident Evil games. You can get 11 games for $30, which is a heck of a deal, especially if you're a big fan of the series. The deal lasts 19 more days as of the date of this post. Enjoy!

https://www.humblebundle.com/games/resident-evil-decades-horror-village-gold?hmb_source=&hmb_medium=product_tile&hmb_campaign=mosaic_section_1_layout_index_1_layout_type_threes_tile_index_2_c_residentevildecadeshorrorvillagegold_bundle&_gl=1*16xde0z*_up*MQ..&gclid=CjwKCAjwnv-vBhBdEiwABCYQA-8JV3PZhb3nyQF17gicwE05JWhEUMfSWKd1f0yzHuz0KX0DX-EHoRoCkekQAvD_BwE

-

15 hours ago, cylix said:

Windows 11 is crap as usual😁

I wonder if this also applies to Windows 10 or even the LTSC editions of Windows 10. If so, this is yet another software tweak to further increase performance. I'll take it! Screw security, just gimme more performance!

Added to my knowledge base. I keep track of software optimizations and tweaks I hear about that increase performance. I've compiled quite the list; each item on its own only does a little, but hey, a ton of small performance increases combined yields one big performance increase.


-

Who likes my new laptop?

-


3 hours ago, ryan said:

interesting, looks like the integrated solution might be the best buy for once. 0.4in thick 4070...they are really pushing thin and light these days.

I'd love to see that Strix Halo APU in a handheld! That would be super awesome! Hopefully we can unlock the power limit and push it further than 120 watts for MAXIMUM OVERDRIVE! MWAHAHAHA!

I like all my stuff big, including handheld gaming devices. I don't understand why people want such tiny screens in their devices, and I say this as someone who has hawk vision. Small screens aren't very immersive.

I'm taking a balanced approach to new devices that are coming out and not immediately writing all of them off. I like desktops, laptops and handhelds. They each serve their purpose, and I make sure to have every tool possible to be prepared for all situations since there isn't a single system that can do it all. I do need to be able to have fun with them though, as I hate anything that's locked down. I was able to overclock my Lenovo Legion Go and games perform great on it!

You know what would be awesome? A handheld with 2 M.2 slots. Equip one with an Optane P1600X as your boot drive and the other with your storage drive. Bam! Super fast hibernation and wake from hibernation that'll feel like the suspend function on a PS5.

-


6 hours ago, tps3443 said:

5.6 GHz with a 10900K? That has to be an amazing chip.

Indeed, it's the super 10900K I acquired from @Mr. Fox. It's a beast of a chip. This is the same CPU I ran at 5.4 GHz all core in my X170SM-G when first taking it for a spin and am now running in my desktop. My super fast, low latency memory in my desktop was able to give a significant boost to my framerates in games despite using the same CPU, hence why that chip got moved to my desktop, to take full advantage of its capabilities.

I remember some dude on either overclock.net or overclockers.com that had a mythical grade 10900K that could do 5.7 GHz all core on air cooling. It sold for about 3 grand. An absolute top tier chip.

-


1 minute ago, Mr. Fox said:

I have never used a TEC before, but they seem simple enough that fixing it should not be too difficult if you can confirm what went wrong. If I had to guess it would be the TEC plate itself probably gave up the ghost. Unless there is something proprietary about it, you might be able to replace it with a generic module.

I've been toying with the idea of upgrading the peltier in this cooler with a more powerful one just to see how hard I could push my 10900K. I've been wanting to top your 5.6 GHz all core achievement on it. I suppose this situation gives me an excuse to do that experiment.

In any case, I think I'll go ahead and get the Li Galahad II Trinity Performance 360 as @electrosoft recommended so I can at least have a high performing cooler to fall back on should my experiments not be fruitful. I trust your guys' recommendations a lot more than any other group. I just needed confirmation that someone here thought the cooler was excellent, so thank you guys for that!

-


Official Clevo X170KM-G Thread (in Sager & Clevo)

Nice RAM overclock! What RAM voltage and what IMC voltage did you need to achieve that?