Posts posted by Clamibot

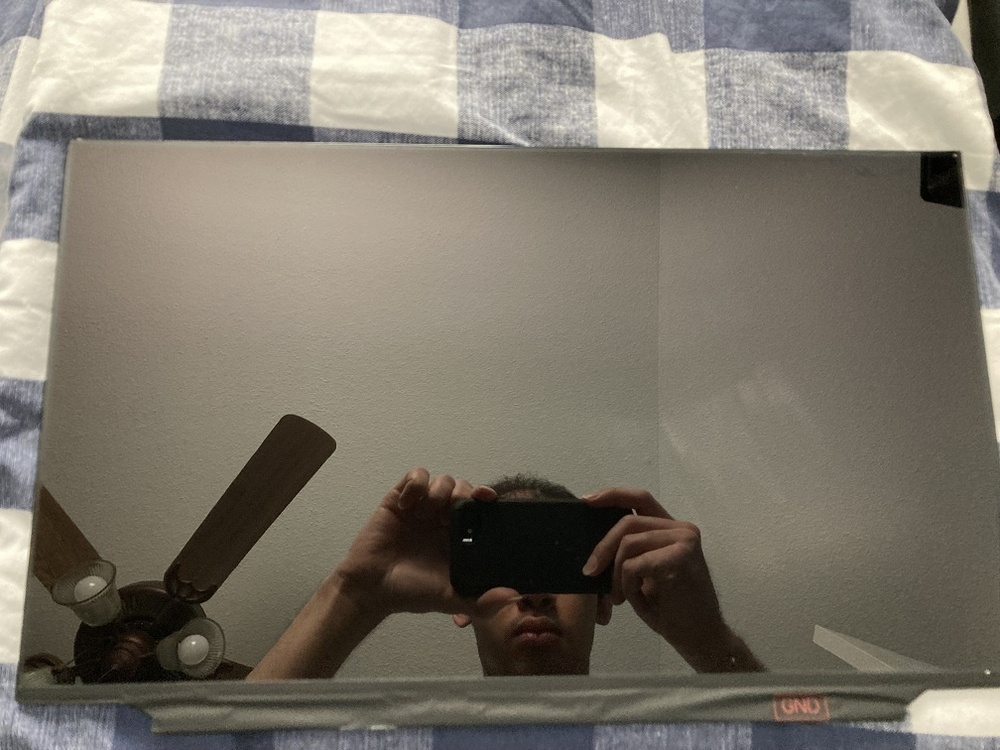

300 Hz glossy perfection:

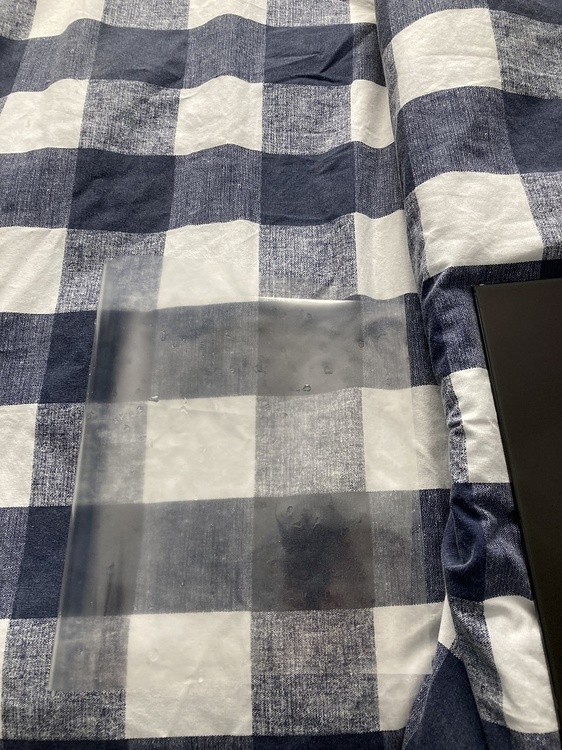

Bleh matte layer I peeled off:

This stupid piece of plastic ruins every screen I lay my eyes on that has it. Glossy forever baby!

I got a 300 Hz screen for my X170. Since there are no glossy screen options, I had to take matters into my own hands and glossify it since I absolutely detest matte screens. This came out well, just like when I did it on my current 144 Hz screen. It's good to know this mod still works on laptop panels, as I know on some of the newer desktop ultrawide panels the matte anti glare layer is stupidly infused into the polarizer layer instead of being a separate layer.

-

Yasss! It's alive!

I'm glad to hear this project is still in progress and has not been forgotten to the sands of time. I've been looking for and following similar projects when I find them in hopes I can gather enough knowledge to build my own version of such projects. My only critique would be to allow for an 18 inch screen, but I'm also a go big or go home kind of guy, plus I like big screens. Weight is not a concern for me.

I'd really like a return of 19 inch class laptops, but I'd like a rise of mini itx laptops even more.

-

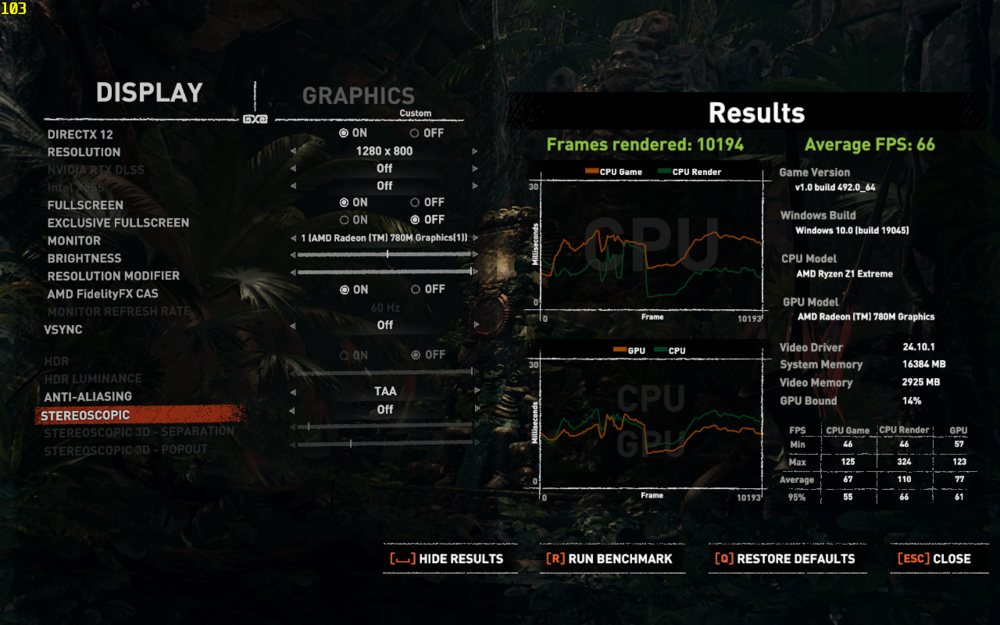

Shadow Of The Tomb Raider Benchmark on my Legion Go (also using a new WindowsXLite 22H2 install):

I set the power limit of the APU to 54w and overclocked the iGPU. All 8 CPU cores were active. I also set the temperature limit of the CPU and the skin temperature sensor to 105°C to stop the APU from throttling because it thinks my hands will get burned. The results are a definite improvement over stock settings, but still not up to par with my standards for raw framerate. However, the input lag is not very noticeable when using a controller and setting my raw framerate to 72 fps, then interpolating to 144 fps, thus giving me my high refresh rate experience on this device.

Despite raising the power limit to 54w, the system was maxing out at 40w sustained. I'm not sure if this is because the APU was using 40w, with the remaining 14w of the power budget being used for everything else. I thought the power limit I set using the Smokeless tool was for the APU only. I'm definitely pushing the power circuitry pretty hard here as the APU is rated for 28w only.
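For a rough sense of how far past the rated power these numbers are, here is a minimal Python sketch using only the figures from this post (28 W rated, 40 W sustained, 54 W limit). The function name is made up for illustration, and it says nothing about what the Smokeless tool's limit actually covers.

```python
def percent_over_rated(rated_w: float, actual_w: float) -> float:
    """Return how far actual_w sits above rated_w, as a percentage."""
    return (actual_w - rated_w) / rated_w * 100.0


RATED_W = 28.0      # APU rating mentioned in the post
SUSTAINED_W = 40.0  # what the system actually held
LIMIT_W = 54.0      # power limit that was configured

sustained_over = percent_over_rated(RATED_W, SUSTAINED_W)
limit_over = percent_over_rated(RATED_W, LIMIT_W)
unused_budget_w = LIMIT_W - SUSTAINED_W

print(f"Sustained draw is {sustained_over:.1f}% over the rating")
print(f"Configured limit is {limit_over:.1f}% over the rating")
print(f"Budget left unused: {unused_budget_w:.0f} W")
```

So even at the 40 W it settles on, the APU is running a bit over 40% above its rating.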

I was able to perform a static overclock on the iGPU to 2400 MHz, and performance did improve. Interestingly, performance dropped if I tried pushing further, as it seems there is either a power limit, a voltage limit, or both. UXTU allows me to overclock the iGPU up to 4000 MHz, but I didn't try going that far. I was however able to overclock my Legion Go's iGPU to 3200 MHz without the display drivers crashing, so it looks like this iGPU has a lot of overclocking headroom left but is held back by power/voltage limits.

I can't wait to see a handheld with a Strix Halo APU and a 240 Hz screen.

-

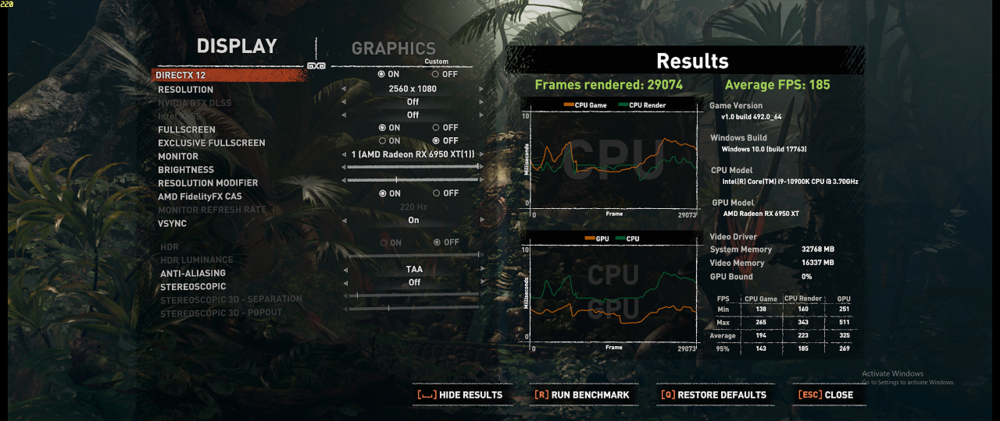

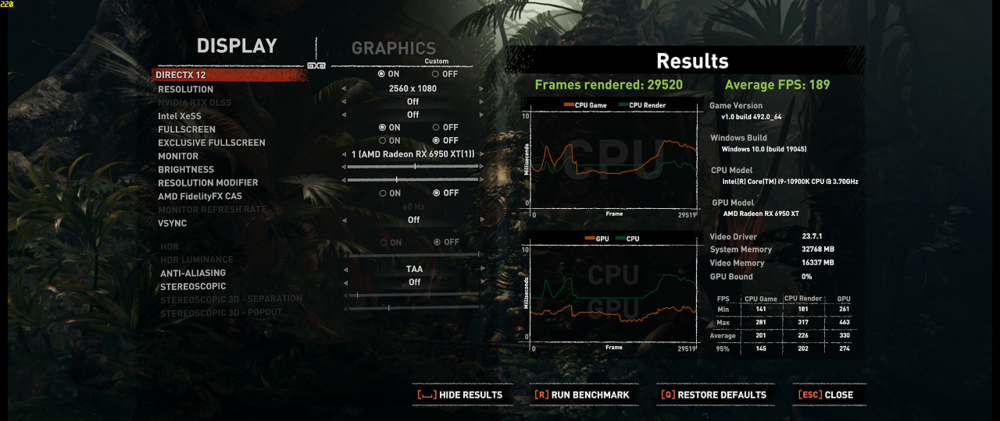

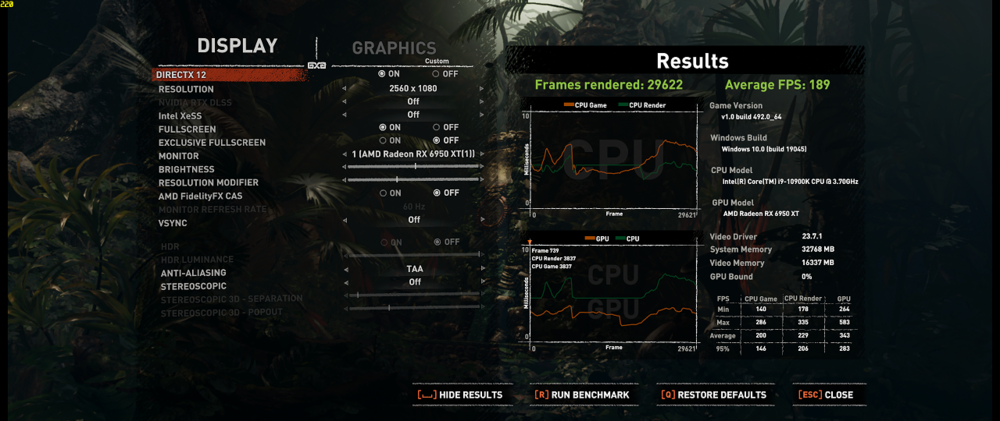

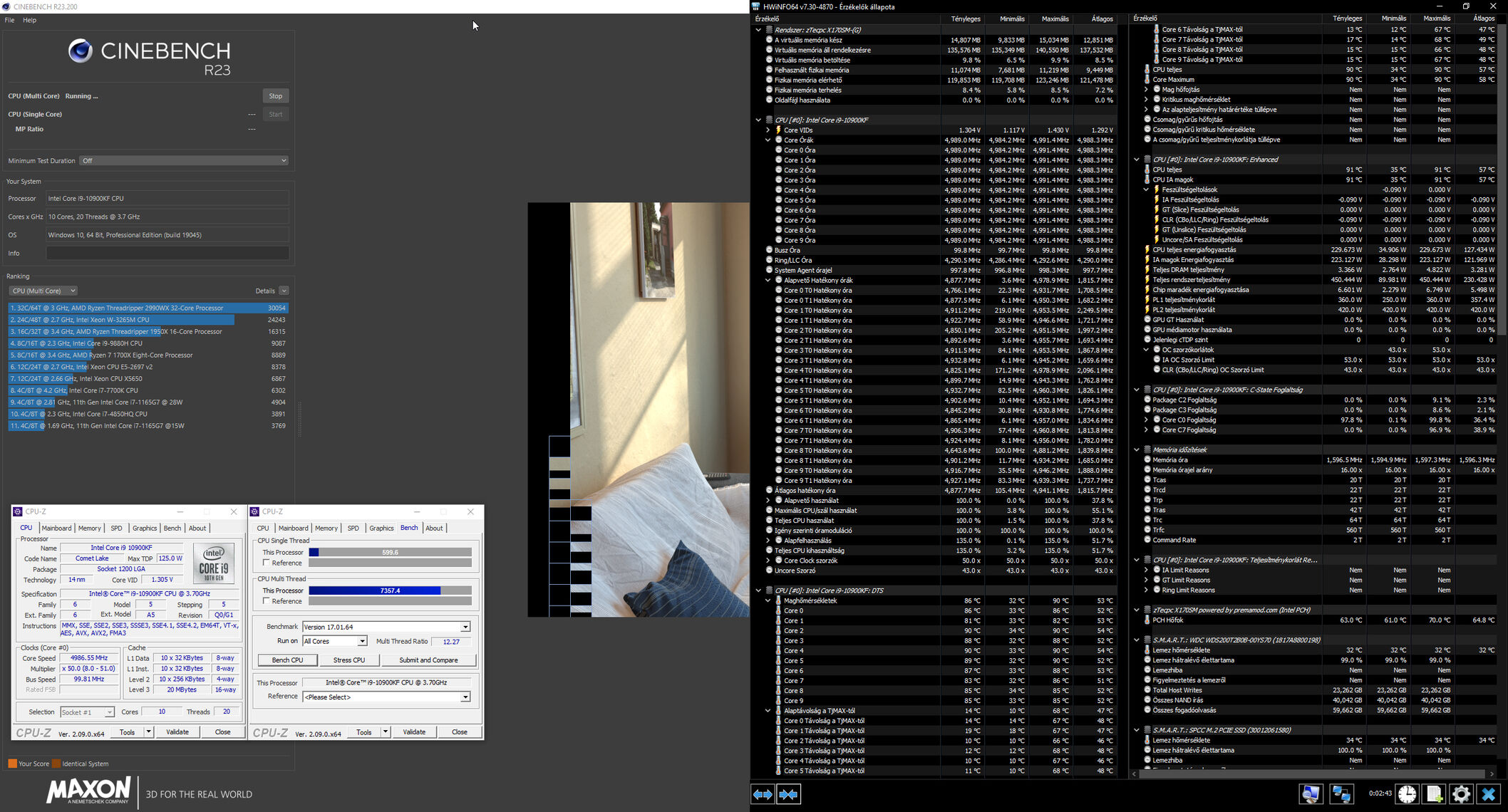

Well guys, I benchmarked my 2019 LTSC install vs my new WindowsXLite 22H2 install in Shadow Of The Tomb Raider again (this time using the built in benchmark), and I can confirm the 22H2 install does indeed perform better, even with all my programs installed (which seemed to have no effect on performance at all). Looks like WindowsXLite 22H2 is the way to upgrade from LTSC 2019! I'm currently installing it on my Legion Go and will be installing it on my X170 next.

I had my 10900K running at 3.7 GHz to induce a CPU side bottleneck. My GPU is a Radeon RX 6950 XT.

2019 LTSC:

WindowsXLite22H2 (minimalist gamer only installation):

WindowsXLite22H2 (all my programs installed + some extra services running):

WindowsXLite22H2 wins by about 2%. I did not expect this at all. I was expecting a performance downgrade, but I am very happy I got a slight performance upgrade instead. You don't see that very often with installing newer versions of windows.

I like that Shadow Of The Tomb Raider is useful both for CPU and GPU benchmarks. This makes it an easy all in one benchmark that saves me time as it will give me a general idea of performance differences between different machines and windows installs.

-

3 hours ago, tps3443 said:

Thats really good to hear. Once it goes inside of an ATX case it’s definitely gonna be using only the Subzero 360.

How do you think 4x16GB will overclock on a 4 dimmer? Like 4266? Maybe 4400?

So far I've gotten my 4000 CL15 32 GB (4x8GB) kit to 4200 with 1.5 IMC and 1.54v on the memory itself. I haven't tried pushing further, but I do think there's headroom left if I push the memory voltage higher. Samsung B Die is awesome!

This is of course dependent on the motherboard. I have an MSI Unify Z590 motherboard, and I know these were made for overclocking. If you get a similar quality board, you should obtain similar results.
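To put the 4000 to 4200 bump in numbers, here is a small Python sketch using the standard first-word latency formula for DDR memory (CAS latency times the clock period, where the clock runs at half the transfer rate). It assumes CL15 is kept at 4200, and the function names are just for illustration.

```python
def first_word_latency_ns(transfer_rate_mts: float, cas_latency: int) -> float:
    """CAS latency in nanoseconds for DDR memory.

    DDR moves data twice per clock, so the clock period in ns
    is 2000 / (transfer rate in MT/s).
    """
    return cas_latency * 2000.0 / transfer_rate_mts


def percent_gain(old: float, new: float) -> float:
    """Relative increase from old to new, as a percentage."""
    return (new - old) / old * 100.0


stock_ns = first_word_latency_ns(4000, 15)
oc_ns = first_word_latency_ns(4200, 15)
speed_gain = percent_gain(4000, 4200)

print(f"4000 CL15: {stock_ns:.2f} ns")
print(f"4200 CL15: {oc_ns:.2f} ns")
print(f"Transfer rate gain: {speed_gain:.1f}%")
```

Holding the same CL while raising the speed improves both bandwidth and latency, which is why not having to loosen timings matters.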

-

1 hour ago, tps3443 said:

I've had a lot of fun with the ML360 Sub-Zero. That's the cooler I've been running the entire time I've had my current desktop. It's incredibly good for gaming and allows me to do 5.6 GHz on my 10900K in games, which really helps with games that have an artificially induced single core performance bottleneck.

I also know liquid metal wasn't recommended with this cooler, but I did it anyway and that made the temperature results even better of course.

-

11 hours ago, MaxxD said:

I also just placed my order for a complete (KM-G) motherboard. eBay handles the entire process. I paid all the expenses. At home, I just have to wait for the package to arrive, they take care of everything. That's perfectly fine.

Is there a full service book available somewhere for the X170SM/KM-G model?

All the parts are there to use the KM-G version and the picture will be complete.

I hope I made the right decision!☺️

Clevo X170SM-G user manual: https://www.manualslib.com/manual/1890112/Clevo-X170sm-G.html#manual

2 hours ago, MaxxD said:

Now I was wondering if I replace the SM-G card with a KM-G card, will G-Sync be available on the internal monitor?🤔

The +15W GPU Boost is reliable, it already has HDMI 2.1, so 4K 120Hz is also available on the TV, only G-Sync is questionable on the 144Hz G-Sync SM-G model display.🤭

I think G-Sync won't work once you upgrade the GPU, but I personally wouldn't worry about it. G-Sync is a gimmick anyways since we'd always want our games running at the monitor's max refresh rate for the best experience.

I lost G-Sync after replacing my laptop's monitor, and I found it really didn't matter. I haven't missed G-Sync at all.

-

1 hour ago, tps3443 said:

I’m running 22H2 as well. My 3175X absolutely rips on this OS. I tried 24H2/22H3/22H2. Only thing I have not tried yet is Windows 10 on this system. It’s an older system with lower IPC. So I need every advantage I can get. I think 22H3 barely performs slower than 22H2. And it always reflects in my CPU physics scores, and GPU usage.

(1) Fastest bios

(2) Fastest micro code

(3) Fastest OS

(4) Highest possible overclocks.

(5) Good game optimization is always welcome 😃

Oh you're using Windows 11 builds? My WindowsXLite install is based on a Windows 10 Pro 22H2 build.

To add to that list you have:

(6) Disable unnecessary security mitigations like Spectre, Meltdown, Core Isolation/Memory Integrity (Virtualization Based Security), and Control Flow Guard

(7) Install DXVK Async into games that see an uplift from it

(8) Use Lossless Scaling (you get best results if your raw framerate is already 100 fps or higher with mouse and keyboard, or if on a controller, if your raw framerate is already 72 fps or higher). This software is absolutely amazing!

(9) Perform settings tuning in games. I find that some settings barely have a difference between low and ultra, especially in newer games.

(10) Disable anticheat and/or DRM if possible

-

After some preliminary testing with Shadow Of The Tomb Raider in Windows 10 LTSC 2019 and WindowsXLite 22H2, it looks like the CPU side performance is actually slightly higher on the 22H2 installation. I'm using the maximally tuned OOB install without Windows Defender. I can probably do a bit more tuning with it too. My LTSC install is also tuned with stupid security mitigations disabled, but it's probably not tuned as much as some of you guys have managed to do with your installs.

GPU side performance seems to be higher too, but I don't know by what amount as I was only testing CPU side performance. I need to get some concrete numbers, but this is looking really good so far. To be fair, the 22H2 installation is currently set up as a minimalist installation purely for game testing, so I need to install all my programs onto that Windows install and compare again as that could affect the results. It probably won't, but I need to cover all my bases.

If WindowsXLite 22H2 truly outperforms Windows 10 LTSC 2019, I will be happy. This will be the first time I've seen a version upgrade in Windows actually deliver a performance upgrade rather than a downgrade (other than upgrading from regular consumer editions to Windows 10 LTSC since I've seen this firsthand, and that jump in CPU side performance was significant). I'll then need to upgrade all my systems🤪

-

As the title says, Humble Bundle currently has a deal for a big collection of Sid Meier games. This bundle contains the past 5 Civilization games + DLCs for Civilization 6, and some other Sid Meier games I'm less familiar with. You only have to pay $18 to obtain the entire collection, so come get it while it's hot! The deal lasts for another week as of the date of this post. Enjoy!

-

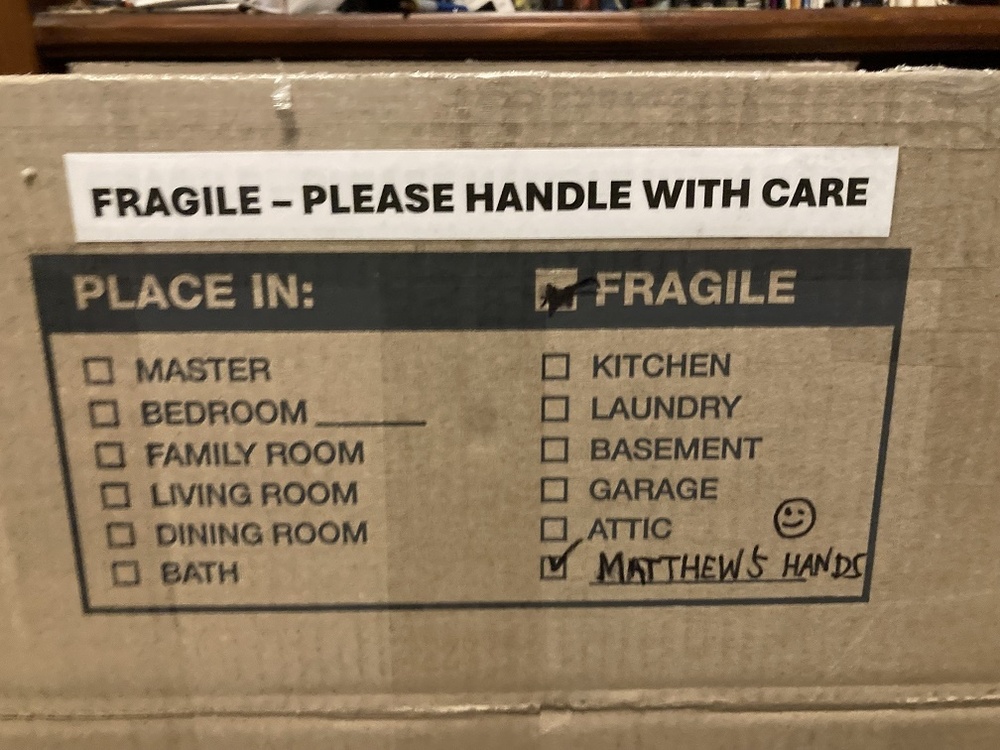

Christmas came early for me this year!

Lol, yep, you get more done and you get your money's worth when buying from bro @Mr. Fox.

A super duper mega ultra deluxe hardware bundle being placed right into my hands.

You betcha awesome is here!

Lots of goodies!

I haven't gotten anything set up due to work being very busy this week, but I'm definitely looking forward to assembling and tuning 2 systems! One will be for me and the other will be for a buddy building his first desktop after graduating college and years of using laptops and being dissatisfied with the diminishing options for upgrade paths.

Full hardware bundle contents:

- Super bin i9 14900KF

- i9 14900K

- Asus Maximus Z790 Apex motherboard

- Asrock Z690 PG Velocita motherboard

- Super bin G.SKILL 32 GB 8000 MHz DDR5 memory kit (pre tuned to 8400 MHz CL 36 just for me!)

- Crucial 16 GB 6000 MHz DDR5 memory kit

- Gigabyte Aorus Xtreme Waterforce RTX 2080 TI (bro Fox even included an air cooler for it!)

- Iceman direct die waterblock

- Iceman direct touch RAM waterblock

- Bykski RAM heatsinks for the super bin G.SKILL memory kit

-

8 hours ago, jaybee83 said:

i know EXACTLY the position ure in, i got my build right before our little one came along, as well haha 😄 get in all the building and tuning u can before ure a dad!

nice, so hows the experience with the P5800X so far? how does it compare with a regular SSD as an OS drive? does it actually provide any real life difference?

As an owner of an Optane 905P, I feel qualified to answer this question. Depending on what you're doing and your setup, you may or may not notice a difference.

If you use Optane with something like an i7 7700K, you probably won't notice a difference vs a standard m.2 SSD. If you are however using Optane with a recent platform (like 14th gen or current gen), you will definitely notice a significant difference. I currently have an Optane 905P installed in my system with a 10900K in it, and the difference is significant.

The first thing you'll notice is that the system feels more responsive. I can't assign this particular metric to a number, but it feels snappier vs using a standard SSD. For everyday usage, programs load significantly faster (provided your CPU isn't a bottleneck on load speeds). You can also open a ton of programs at once and the Optane drive will just blast through your file read requests. Optane also excels at small file copy speeds due to the much faster random write speeds vs a standard SSD. Optane also doesn't slow down as it fills up vs a standard SSD, so you can load these babies to the brim and not see a decrease in drive performance.

The biggest difference I've noticed is in file search speed. When doing development for my job, I sometimes have to look for particular files. With Optane, I can search the root directory of a Unity project using windows explorer file search (mind you, our projects are pretty big for VR games, at least 30 GB or larger for the repository), and my 905P will have already returned a bunch of results after I snap my fingers (it's still searching, but at least it found a few files right off the bat). File searching is so much faster on an Optane drive. If I were to perform this same task on a standard SSD, it would take a bit before the file search returned any results, even the initial few.

For my development workloads, code compiles faster, asset imports complete significantly faster, and builds complete a bit faster. For gaming, games load faster, especially open world ones. Any game that does heavy asset streaming also has loading microstutters gone. Both development workloads and game load speeds will continue to scale with ever faster CPUs on Optane whereas they've already kinda hit a wall with standard SSDs.

Oh yeah, also hibernate and wake from hibernate are far faster on Optane vs a standard SSD. So is file zipping/unzipping.

So overall, Optane is a must as a boot drive if you want the snappiest experience, or if you're a developer like me, or just want the best game load times, or if you do tons of file operations (especially with small files), or if you want some combination of the 4. Optane benefits newer platforms far more than older ones as newer CPUs can really take advantage of the throughput of Optane random read and write speeds.

-

The 9800X3D seems like a really good candidate for Intel's Cryo Cooling watercoolers. Fortunately it's now possible to run those waterblocks and AIOs using modified Intel Cryo Cooling software that doesn't have the stupid artificial CPU restriction check from here: https://github.com/juvgrfunex/cryo-cooler-controller

-

Update on my shenanigans:

1.52v on the RAM was not enough to stabilize my memory overclock. It appeared to be stable but crashed. I tried 1.53v and it still crashed, but took far longer. 1.54v has not crashed after an entire work day doing heavy multiplayer testing on our newest VR basketball title. I'll consider this stable as I was also able to load Mass Effect Andromeda without my system crashing, which usually means my CPU or memory overclock is stable as loading into that game is a very CPU and memory intensive process.

4200 MHz CL15 DDR4 on a 4 DIMM board is pretty impressive. I don't know if I want to take the IMC voltage any higher than 1.5v long term, but I know these current voltages on the memory and IMC are for sure safe long term.

Heh screw it, I'll allow up to 1.55v on the IMC and 1.6v on the memory. Nothing should go wrong, right?🤪

I'm begrudgingly going to be moving to a Windows 10 22H2 install due to software incompatibilities starting to creep up on me. The WindowsXLite downloads brother @Mr. Fox linked me to seem like they'll perform as well as my 2019 LTSC install, so I'll be satisfied if that's the case. I'm happy windows 10 support will be ending soon ish because I don't want any more dang updates! They're incredibly annoying, and my computers always get these updates and force install them when I'm using the machine, usually in the middle of me working or playing a game. I know that's not supposed to happen, it's supposed to update when I'm away from my machines, but it updates during active use for me, so I'll be really happy when the updates stop for good. The updates don't ever contain anything I care about anyway.

Having tested multiple versions of windows myself in games, I can confirm that all this marketing surrounding windows 11 is complete BS. I've tested on multiple laptops, a desktop, and my Legion Go. My framerates are around 20% higher in windows 10 LTSC vs windows 11 across all those devices. It does depend on the game, but that's the performance increase I found on average, with most of my newer games showing a slightly greater than 20% increase in framerates.

I'm hoping WindowsXLite Optimum 10 classic gives me that LTSC grade performance. You all know I will be doing my game benchmarks to compare. This is gonna be a fun weekend.

-

Woo hoo! I tried my hand at memory overclocking for the first time today and was able to successfully get a 5% overclock on the memory speed on my 4 dimm motherboard from 4000 MHz to 4200 MHz. IMC voltage is at 1.5v and the DRAM voltage is at 1.52v. This seems stable.

I did at first try my hand at tightening timings but ultimately gave up for now as I couldn't get it stable after messing with them for a few hours, so I instead opted for the brute force approach, which I was successful with. I probably should've gone with the brute force approach first with me being new to memory overclocking. I'll try my hand at tightening timings again another time.

After having used a system with an AMD dGPU for a while and getting used to its idiosyncrasies, I much prefer AMD graphics cards now. Turns out, my black screen driver crashes that I've spent months trying to figure out weren't because of AMD's drivers sucking. That was merely a symptom of the root cause, which was memory instability. My XMP profile was unstable at stock IMC voltages. Raising the voltage by 10 mV made all the stupid crashes go away.

So PSA to those with AMD GPUs, if you experience random black screen crashes, consider raising your IMC voltage just a tad. This made all my headaches go away.

-

15 minutes ago, Mr. Fox said:

Well, stay tuned. There may be some hell to pay.

We are on page 666 after all.

-

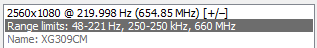

So I got the Asus XG309CM, and I'm absolutely loving the 220 Hz refresh rate. It's so smooth! Significant improvement over 144 Hz in smoothness and it's starting to get close to looking like real life in terms of motion smoothness. My original reason for getting one is so I can have a flat ultrawide screen since I hate curved screens, and having used a curved ultrawide screen for a while, this flat ultrawide is far better. It's much more comfortable to look at since there's no image distortion (since there's no curve, yay!).

Unfortunately because I have now adjusted to the 220 Hz refresh rate and really like its smoothness, my performance requirements have gone up yet again. However, this means I may have a use for dual GPU rigs again, which will be fun to play around with. Lossless Scaling works with a multi GPU setup, so you can render your game with one GPU and then run Lossless Scaling's frame gen on a different GPU. It's essentially like SLI/Crossfire, but better since you get superlinear scaling in most cases (since the frame gen generally takes significantly less processing power than actually rendering a frame), and it works in pretty much any game. The only caveat is input lag, but you probably won't be bothered by it much if your raw framerate is already sufficiently high (in excess of 120 fps), and you will notice the increased smoothness from the higher interpolated framerate much more at this level.
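As a back-of-the-envelope illustration of the raw vs interpolated framerate idea, here is a short Python sketch that works out the smallest whole-number frame generation multiplier needed to reach a given refresh rate, plus the frame times involved. The helper names are hypothetical, and this does not model how Lossless Scaling itself picks or limits its modes.

```python
import math


def framegen_multiplier(raw_fps: float, refresh_hz: float) -> int:
    """Smallest integer multiplier so raw_fps * multiplier >= refresh_hz."""
    return max(1, math.ceil(refresh_hz / raw_fps))


def frame_time_ms(fps: float) -> float:
    """Time budget for a single frame in milliseconds."""
    return 1000.0 / fps


# Controller case from my Legion Go: 72 fps raw on a 144 Hz screen.
handheld_mult = framegen_multiplier(72, 144)

# Desktop case: 120 fps raw on the 220 Hz XG309CM.
desktop_mult = framegen_multiplier(120, 220)

print(f"72 fps -> 144 Hz needs x{handheld_mult}")
print(f"120 fps -> 220 Hz needs x{desktop_mult}")
print(f"Frame time at 120 fps: {frame_time_ms(120):.2f} ms")
print(f"Frame time at 220 fps: {frame_time_ms(220):.2f} ms")
```

The rendering GPU only has to hit the raw frame time, while the second GPU covers the generated frames in between.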

Since there's no singular GPU powerful enough to render every game in existence at hundreds of frames a second @ ultrawide 1080p, this is my ticket to lifelike motion in all the games currently in my library, and games I'll be playing in the future. This kind of setup will be especially useful when I inevitably move onto even higher refresh rate monitors (I saw a 480 Hz one, like dang!). Motion clarity at 220 fps is pretty dang good. It's super smooth, but still not as smooth as real life. I don't know what my perception limits are, but I know I'm still not there.

Ahh, the sweet dream of planning yet another new build. I guess we're never done here are we? I am currently satisfied with this 220 Hz monitor, but you guys know me and my extremely high requirements. You all KNOW I will eventually get an even higher refresh rate monitor because I want video games to have the exact motion clarity real life does. I demand it because motion smoothness increases immersion for me much more than better colors or higher resolution. Lifelike motion smoothness or close to such is incredibly immersive to me.

-

3 hours ago, MaxxD said:

My processor has a core voltage of 1.3v+ under 5GHz (R23 Test process Run), which is very high. I looked at Prema BIOS and there is a core voltage setting, you can only enter a number there, but + or - cannot be set. So how do I know what I'm saying now? Do I add or lose?🤔

Obsidian 5GHz (all core) -0.090V UV

R23 Test process run...

The Obsidian program also remained on the SSD transferred from the PCS machine, and I can use it after manual startup after system startup. There is a license for the PCS machine and if automatic start is switched on, there is no longer a license for it and the program cannot be used. I would like to solve BIOS Overclocking, it is better and does not require many programs under the Windows system...that would be nice!☺️

Personally I always do my overclocking through Intel XTU so I don't brick my machine if I apply bad settings, plus you can adjust your settings dynamically.

This will depend on your chip's silicon quality, but I can apply a 20mv undervolt at 5.3 GHz with the 10900K currently installed in my laptop (5.4 GHz for the better binned chip in my desktop) and it remains stable for me. Up to 1.5v is a safe 24/7 voltage on this CPU within this laptop. Up to 1.6v is safe if you have really good cooling (like custom water cooling, which we can do on this laptop), so I wouldn't worry about the voltage being 1.3v on your CPU as that's not a super high voltage for this specific generation of CPUs.

To answer your question on the sign of the offset (whether it is positive or negative), I'll have to jump in the BIOS and take a look to see where that can be identified. I'm pretty sure there's an option to set the offset sign somewhere. I also have not done that memory overclocking I was going to do over the weekend just yet. Unfortunately enabling the realtime memory tuning option in the BIOS causes the system to not boot, so I can't do that on the fly within Intel XTU.

-

3 hours ago, Mr. Fox said:

You should try this. I have found it to perform a lot better than GhostOS. Far less bloat on this one. I obviously prefer Windoze 10 (22H2) over Winduhz 11. I would recommend avoiding versions past 22H2 for either OS.

I'm definitely gonna try that out as I'm starting to run into software compatibility issues (both for games and work) with my V1809 LTSC install, so I now have a genuine need for a newer version of windows. Thanks for posting this!

I also got an Asus XG309CM monitor and absolutely love the 220 Hz refresh rate. I don't like that the max refresh rate isn't perfectly divisible by 24 or 30 though so I've been looking into some monitor overclocking (which I've done before), but am running into a bit of a snag this time. Apparently, there's a refresh rate limiter on this monitor according to CRU. Does anyone know how to bypass such a thing? I've never seen something like this before on a monitor. I just want to overclock it to 240 Hz, which I think will be doable on this panel.
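To show what I mean about divisibility, here is a tiny Python check of whether a refresh rate is a whole multiple of common content framerates. The function name is just for illustration.

```python
def divides_evenly(refresh_hz: int, content_fps: int) -> bool:
    """True if every content frame is shown for a whole number of refreshes."""
    return refresh_hz % content_fps == 0


CONTENT_RATES = (24, 30)

for hz in (220, 240):
    results = {fps: divides_evenly(hz, fps) for fps in CONTENT_RATES}
    print(f"{hz} Hz: {results}")
```

220 Hz leaves a remainder for both 24 and 30 fps content, while 240 Hz divides cleanly by both, which is why I want the extra 20 Hz.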

-

Stained copper is actually ideal, as copper that has had liquid metal leach into it is more thermally conductive than regular copper, although I think this has more to do with the gallium that bonds with the copper filling up the pores on the contact plate, thus making the contact surface flatter.

With the heatsink screw mod performed by zTecpc + my delid and full copper IHS from Rockitcool + liquid metal between the CPU and IHS + liquid metal between the IHS and heatsink + a 20 mv undervolt, I can go up to about a 220 watt load on the CPU side indefinitely without thermal throttling. This is enough of a power and thermal budget to sustain a 5.3-5.4 GHz all core speed in games on either of my 10900Ks indefinitely. And no I don't live in the arctic, I live in a temperate climate.

Yeah the only way to get the most out of the CPU in this laptop is through mods. You won't get that stock, but you can get a massive improvement from stock if you put in the effort to do said mods.

-

I have the PremaMod BIOS on my system but do CPU overclocking through Intel XTU. I do plan on doing some RAM overclocking this weekend as I think I'm starting to get the hang of it from doing it on my desktop.

We'll see how it pans out.

-

10 hours ago, Eban said:

I go the opposite way. Borrow a game I "might" like from my friend with an eyepatch....If Im still enjoying playing after a couple of days I buy it.

Haha yeah that's a good approach in the absence of demos. This really highlights the importance of demos, and I'm not really sure why those mostly went away.

After my boss decided we should start offering demos for our games, we got a significant bump in sales. It didn't even require that much effort to make the demos.

-

My personal policy regarding this matter is to buy the games I want so it pays the developers who wrote the game, then I download a cracked version that I can play forever (if necessary) and add that to my vault of offline backups.

No moral issue here since I already paid for the game. I'm simply downloading a backup copy that will work forever. As a game dev myself, I have absolutely no problem with people doing that with my games. Fortunately not all games have DRM, so this process isn't necessary for all games.

I don't use DRM on my games and never will. It doesn't even prevent piracy so why bother? People who don't want to buy things will never pay for that item. No reason to make things harder for everyone else. DRM is more of a headache than it's worth as it negatively affects honest customers.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

in Desktop Hardware

Posted

Yeah I don't get it either. Matte screens are unreadable under intense light sources because the diffusion of light across the screen from the matte anti glare just makes the screen blurry. At least on a glossy screen, even with glare, it's still sharp, so you can read whatever parts you can still see.