1610ftw

Member

Posts: 1,260

Days Won: 2

Everything posted by 1610ftw

-

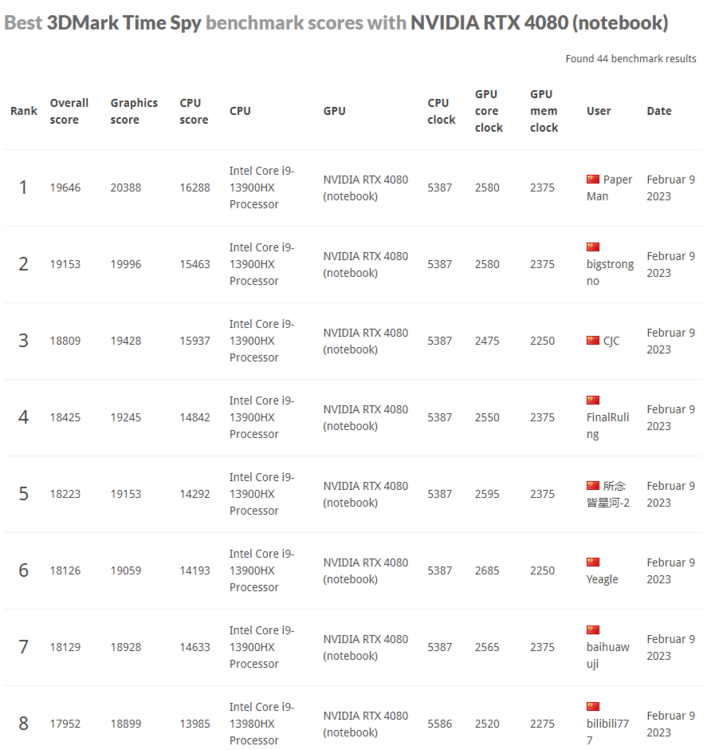

I like what you have achieved with your 7770, but you took a wrong turn somewhere. Why would we not compare Time Spy scores between generations when this is what we have always done? It has a nicely separate GPU score, which exists so that the CPU has little influence; that is easy to see if, for example, you disable turbo altogether. In any case, those 4080 scores look like they come from desktop cards that are somehow attached to laptops. Here is the 4080 mobile leaderboard as of now; note the "(notebook)" label that designates the mobile GPU: Looks like at QHD that 4080 is working extremely well.

-

Not all laptops are that expensive this generation. The Asus ROG Strix 18 with the 4080, a 13980HX and an 18" display is only $2,499: https://www.bestbuy.com/site/asus-rog-strix-18-intel-core-i9-13980hx-16gb-ddr5-memory-nvidia-geforce-rtx-4080-v12g-graphics-1tb-ssd-eclipse-gray/6531333.p?skuId=6531333&intl=nosplash It looks like a pretty good deal for most people, provided two memory slots and two storage slots are enough.

-

Top-of-the-line laptops indeed have not gone up much in price. But in the days of the GTX 1080 and RTX 2080, they were also quite close in performance to desktop solutions. Now they are still priced similarly, but their GPU performance just isn't competitive anymore.

-

Supposedly this is the GPU score, not the combined one. Up until the last generation, improvements in Time Spy were a pretty good predictor of improvements in QHD gaming, at least.

-

I have no clue; I should have just written "male" or "men". The point is that the average man should have no trouble carrying and handling a laptop that is a bit heavier and bigger than what we get these days, at least if he is mainly looking for a DTR. I get that there are exceptions and people who cannot lift that much, but there are already plenty of lighter and thinner laptops for them.

-

They cannot charge that much if enough people refuse to buy. Do yourself a favor and check what resolution you really want to game in. If it is QHD, you might be happy with an older laptop with a 3070 Ti or better, or a new one with a 4070. Personally, I would probably not game on a laptop in 4K. The performance is not good enough for what you have to pay, and I don't think my eyes appreciate the added resolution on a laptop screen anyway. So if you still want to game in 4K on a laptop, you are entering a world of (financial and performance) pain. You have been warned 😄

-

You only have to look at the Clevo X170 for a traditional laptop design that was rated for 325W of cooling; the P870 went well beyond 400W. There really is no reason not to dissipate more heat other than the manufacturers and Nvidia not wanting to go there. When MSI can do 250W with traditional heat pipes in a super slim chassis (GT77), they could certainly make that design a bit bigger and thicker with an 18" screen and go to 300W+ with the added thickness and real estate. Weight would probably be somewhere between 8.25 and 9.5 lbs. I bet that enough men who just carry their laptop from one place to another would not mind, especially if they also got a socketed Intel CPU and stacked NVMe SSDs like HP does. On average, you would only need about 5 mm more height for all of that.

-

Not all games work that well with reduced power, but it is possible that at 250W TGP the average performance would be around 90% across a wider variety of games. Personally, I am not affected, as I would be happy with a 4060 or 4070 for what I do. I also happen to think that, apart from their too-small memory, the desktop cards aren't that bad either. It is just that the naming and the low power limits on laptops, together with the skyrocketing prices, rub me the wrong way; it is not the proper way to do things.

-

This is indeed what Nvidia is trying to pull off: paying more for more performance is probably supposed to be the new normal. I think Nvidia noticed how big a leap they were making and tried to capitalize on it. It did not completely work out, as they are now being called out for it. A LOT! And that was just in the desktop space, where the new cards really are very powerful. In the laptop space, their decision to use smaller chips than the desktop cards with the same name, coupled with anemic power limits, is not being received favorably out there. Time to call a spade a spade. What does not help is that they are extremely stingy with memory in the mobile lineup. Instead of 8, 12 and 16GB, they should have gone for 12, 16 and 20 to 24GB for the 4070, 4080 and 4090.

-

They should have just called it the 4080 mobile. Then they could possibly release a 200W+ solution later, based on the desktop 4090 chip, and call it the 4090 mobile. Honesty, transparency and problem solved. As things stand, let's hope that as many reviewers as possible work together to really rub it in.

-

A bit further up in this thread, someone quoted a 23K+ Time Spy score for the 4090 in the MSI GT77. That is a very big upgrade in non-DLSS performance, but who knows whether Nvidia has pulled some behind-closed-doors shenanigans to drive up those numbers, too. It is probably best to test drive one of them and test the stuff one actually uses.

-

Looks like the LED display and the 4090 together have now made the GT77 the most overpriced laptop on the planet. If I were getting a laptop this generation, I would just limit myself to looking for something with at best a 4070 and a 13900HX or better CPU. But even that is not possible if you want 18" and good memory and storage options. There are no such offers so far, as all the 18" laptops seem to have only two full-size 2280 slots and two memory slots. They cannot even get that right in their top-of-the-line BGA books.

-

It gives an idea of what could be accomplished if notebooks really had a proper 4090 chip and 24GB of memory. Performance would probably be about on par with a desktop 4080, give or take a few percentage points depending on TGP.

-

I am not talking about a single company wanting something. I am talking about the 3 biggest manufacturers demanding something from Nvidia or else. Obviously, a single manufacturer like Tongfang/Uniwill or Clevo has no chance, but if Dell, HP and Lenovo asked... Believe me, Nvidia would listen. But the current state of affairs is probably rather convenient for everybody. Nvidia has fewer service cases, and the laptop manufacturers do not have to build really powerful laptops anymore.

-

Looks like some embargo was lifted today:

-

The CPU is doing reasonably well, the GPU not so much. Looking at Time Spy, the 4090 mobile does about 21K on notebookcheck.com. Meanwhile, 100th place for the desktop 4090 on 3Dmark.com is 41K, and 100th place for the desktop 4080 is 30.5K. It is quite ridiculous to lag that far behind at the prices these laptops fetch. As for this being on Nvidia, I am pretty sure that if laptop manufacturers all wanted 200, 225 or 250W, they would get it, too. But they are probably happy that the 4090 only goes up to 175W, so they do not have to invest in better cooling and their laptops can stay thin and light.
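For a rough sense of the gap, here is the arithmetic on the scores quoted above as a quick Python sketch. The constants are just the rounded figures from the post. The desktop numbers are 100th-place leaderboard results, likely from tuned systems, so the percentages are only ballpark.

```python
# Time Spy GPU scores as quoted in the post (rounded, approximate).
MOBILE_4090 = 21_000          # notebookcheck.com figure for the 4090 mobile
DESKTOP_4090_100TH = 41_000   # 100th place, desktop 4090, 3DMark leaderboard
DESKTOP_4080_100TH = 30_500   # 100th place, desktop 4080, 3DMark leaderboard


def relative(score: float, reference: float) -> float:
    """Return score as a percentage of reference."""
    return 100.0 * score / reference


if __name__ == "__main__":
    print(f"4090 mobile vs desktop 4090: {relative(MOBILE_4090, DESKTOP_4090_100TH):.1f}%")
    print(f"4090 mobile vs desktop 4080: {relative(MOBILE_4090, DESKTOP_4080_100TH):.1f}%")
```

With these numbers, the 4090 mobile lands at roughly half of the desktop 4090 figure and a bit over two thirds of the desktop 4080 figure.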

-

It is quite obvious that we are not getting to see any Time Spy benchmarks. I am pretty sure Nvidia does not allow them at this point, which in turn probably means they are less than flattering.

-

We live in sad times, when excellence is at best relative and at worst just mediocrity pretending to be excellence. Clevo is doing it with even less shame than the others with the X370, and coming from them I find it the most disappointing. I am pretty certain they will suffer for it, as top-of-the-line Clevos were all socketed for several generations.

-

It is a combination of thin bezels and much less internal height. As for maximum screen size, consider the old top-of-the-line 17.3" 16:9 chassis such as the Asus 701/3, Clevo P870 and MSI GT72/73/75. They could hold 19.2" 16:10 screens if they had the 5.5 to 7 mm bezels found in current laptops. In such a chassis, it is possible that even Asus, Acer and Alienware would find space for 4 memory sticks and 4 2280 NVMe slots outside of workstations. And something really big like the MSI GT83 or the last Alienware 18 has a chassis that could even hold a 20.5" 16:10 screen. Now that is a nice screen size, and something I would also sign up for.
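The screen-size argument comes down to panel geometry: a panel's width and height follow from its diagonal and aspect ratio. The Python sketch below compares the visible area of a 17.3" 16:9 panel with a 19.2" 16:10 one. It does not model any real chassis or bezel dimensions, which are not given here. It only shows how much extra panel width and height thinner bezels would have to make room for.

```python
import math


def panel_size(diagonal_in: float, aspect_w: int, aspect_h: int) -> tuple[float, float]:
    """Return (width, height) in inches of a panel's visible area,
    given its diagonal and aspect ratio (e.g. 16:9)."""
    hyp = math.hypot(aspect_w, aspect_h)  # diagonal of the aspect-ratio triangle
    return diagonal_in * aspect_w / hyp, diagonal_in * aspect_h / hyp


if __name__ == "__main__":
    old_w, old_h = panel_size(17.3, 16, 9)
    new_w, new_h = panel_size(19.2, 16, 10)
    print(f'17.3" 16:9  -> {old_w:.2f} x {old_h:.2f} in')
    print(f'19.2" 16:10 -> {new_w:.2f} x {new_h:.2f} in')
    # Extra panel size the old chassis would have to absorb via thinner bezels.
    print(f"extra width {new_w - old_w:.2f} in, extra height {new_h - old_h:.2f} in")
```

The 19.2" 16:10 panel comes out about 1.2" wider and about 1.7" taller than the 17.3" 16:9 one. Whether the old chassis can absorb that depends on their actual bezel widths, which this sketch does not try to estimate.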

-

The revolutionary part is that today, more than ever, mediocrity can be sold as superiority. This has taken on an Orwellian quality lately, with zero progress or even regression being sold to the customer as some stupendous improvement. I guess all major laptop manufacturers except Clevo have the advantage of having stopped making really powerful designs years ago. So now they can celebrate mediocrity as something to be applauded, since they had those in-between years that were even worse. MSI is as guilty as any company: it is beyond silly to sell the exact same power envelope as last year as revolutionary. They did not even bother with a small increase to, let's say, 260 or 275W. I can only assume that manufacturers have formed some secret cartel of mediocrity whereby no manufacturer advertises more than 250W combined for CPU and GPU. That way they can all continue to offer their thin and light "behemoths", as the gullible call them. The result is that we now have 18" laptops with a chassis that has less volume than former 15.6" workstations. The only exception seems to be the Alienware m18, which appears to be bigger but has its own strange shortcomings despite its weight and dimensions.

-

Tuxedo version of the X170SM-G: https://www.tuxedocomputers.com/en/Linux-Hardware/Linux-Notebooks/17-3-inch/TUXEDO-Book-XUX7-Gen11.tuxedo#

Tuxedo version of the X170KM-G: https://www.tuxedocomputers.com/en/Linux-Hardware/Linux-Notebooks/17-3-inch/TUXEDO-Book-XUX7-Gen13.tuxedo

There do indeed seem to be a number of instructions on their site on how to install Linux on them.

-

If you are curious whether it is in any way temperature-related, you could keep the bottom open and blow some really cold air on it in a cold(er) room. If that doesn't help, I would suspect other limiting factors. In any case, you did very well with your P775. What is the max power draw of the GPU during Time Spy?

-

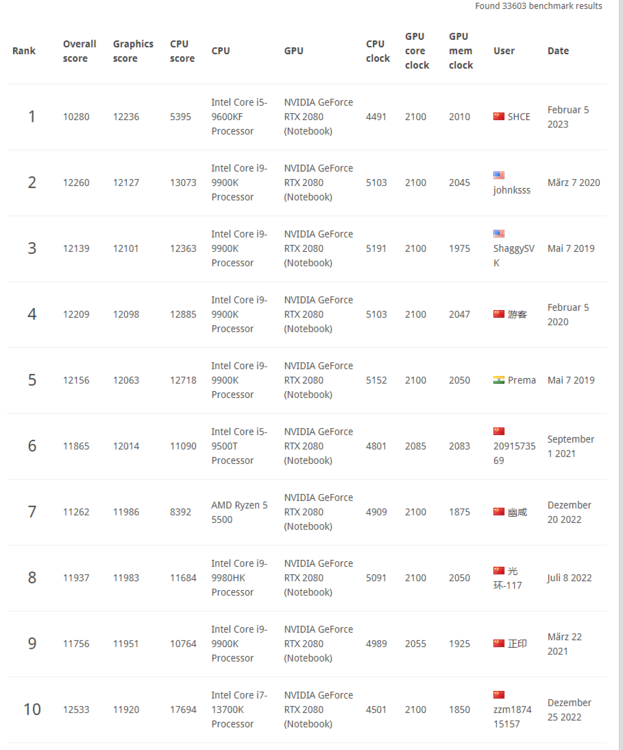

Very close to the 3Dmark top 10; currently you would sit in 17th place. So an excellent job overall, with even the top 5 only 2.5 to 3.5% away. The first place is somebody with a desktop motherboard, so that one does not even count; @johnksss has the real first-placed laptop score:

-

No problem here with 10th generation, 4 memory slots and overclocking enabled (only to allow undervolting and higher all-core clocks). So it may have to do with a combination of overclocking and ccc. Of course, with 10th gen one gains stability but loses an SSD slot. Love those brilliant Clevo engineers...

-

If a laptop can call itself a DTR, then it should have space for an Ethernet jack, as it will not be so thin that the jack is an issue. Personally, I have zero interest in any network connector that does not allow for at least 2.5G, and I hate those flaps. I guess one could argue that they offer some protection from dust, but that is being too kind imo, as I never had an issue with dust in normal Ethernet ports.