Everything posted by Mr. Fox

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

What is weird, if I interpreted @tps3443's post correctly, is that he simply connected one end of the CAT cable to his PC and the other end to his wife's PC, with no PoE involved unless he forgot to mention that. How that would happen is a mystery to me. Makes no sense at all. I don't know where or how that much current would be generated with a direct network cable connection between two computers.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

LOL, no... typo. Thanks for identifying it. I will fix it. That's backwards. E would be "extreme" and P for "puny" for that to be accurate. This is basically the same SP rating that Brother @Papusan's 12900K had. I think there was a point or two different on the E-cores, but I do not remember exactly.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Wow, that is nuts. NO IDEA. So happy you didn't lose your PC or golden 13900K in the process. Thank the Lord for that. I ordered an open box Strix Z690-E so I can move to DDR5 on Banshee and get rid of the white (the replacement is all black - my preference). This might be the last glam shot, LOL. I am probably going to offer the nicely binned, delidded and lapped 12900K SP92 (104P-70E), Strix Z690-A, DDR4-4000 kit and Velocity2 (or silver Optimus - buyer's choice) waterblock in the marketplace in the next week or two. If anyone wants info or is interested before I list it in the marketplace thread, let me know. I also have a spare XSPC pump/res and a couple of 240mm radiators I can include.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

50% of the time. What varies is whether they are the plaintiff or defendant. For every winner there is a loser. In some venues the bad guys usually win, while in other venues the opposite is true. In either case, the outcome is a direct reflection of the heart and mindset of the people that live there. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

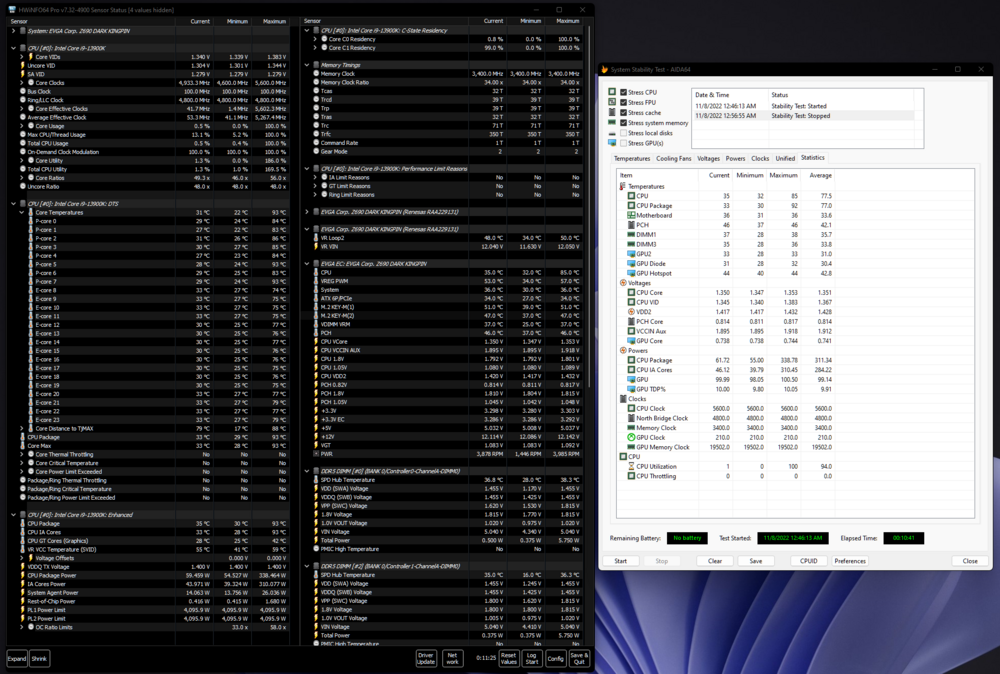

OK, here is an update. I remember Igor's Lab testing a "sausage" with a more viscous paste versus spreading a creamier paste like MX-4 or KPX, and he found better results using a really thick thermal compound down the middle of a GPU core in a straight line. So, just for shiggles, I thought why not.

Instead of using KPX or MX-4 (both of which I had already tested after finding that the before and after temps were the same) or Phobya Nanogrease Extreme, I grabbed a free tube of the TF7 from the CPU contact frame kit. I put a straight line down the middle, and I also followed what Igor suggested with the GPU and did not go in a criss-cross pattern. I snugged down all four nuts until they made contact with the block while pressing down in the middle. Then I tightened two on one side first, then two on the other side.

I also noticed that I had forgotten to disable V-Core Guardband in the BIOS. So, I did that, too. While my V-Core setting remains the same, disabling Intel Guardband prevents the VID from increasing and keeps VID and V-Core at or near the same value. I don't know that it matters, and I think it actually doesn't matter because VID is not the real voltage going to the CPU. But, I like seeing the VID and V-Core values resembling one another rather than the CPU "thinking" it needs more voltage than it really does and fibbing about it with a bogus VID value.

So, here we go... same stress test... ready... wait for it... ...see you tomorrow, fellas.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Yes, it's good that they made an aftermarket clone (dimensionally) of the stock IHS so that other parts are compatible. I do not expect it will perform any better, but it will give us something good to use with liquid metal instead of ruining the markings on the stock IHS. Please be careful. Watch closely to make sure the IHS is not rotating during the delid. I'd hate to see you knock all of the SMDs off of the CPU in the process. If I hadn't stopped to check mine in the middle of the delid process it would have happened to me. I don't know why it rotates like that. I've never experienced that in the past during a delid.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

If that is true it has been completely redesigned from what it was. I purchased one for 12th Gen and it is as wide as the CPU with cutouts for the "wings" and SMDs near the edge of the PCB. The one I purchased was way too big for the opening in the contact frame. So, it was either totally redesigned to match stock dimensions or the people saying that are full of baloney.

Edit: it has been totally redesigned. That is good news. I got one of the first 12th Gen delid tools and copper IHS, so they probably figured out people wanted to use the contact frame instead of the stock ILM. I am ordering one so I can use liquid metal on top and preserve my stock IHS. https://rockitcool.myshopify.com/collections/12th-gen-intel-processor/products/copper-ihs-kit-intel-12th-gen

I already have liquid metal under the IHS. That is all I ever use. I am not going to ruin the identification markings on the IHS by putting it on top. (See comment above.)

Yup, several times. Mount is perfect and temps are exactly the same every time. I even tried using the EKWB Velocity2 block and the temps were within 1°C of the Optimus Foundation block.

Edit #2 - I also have one of these on order. Cuplex Kryos NEXT 1700. Should be interesting.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Yes, 100%. I believe he will have my 2080 Ti FTW3 beast GPU back in working order in a jiffy. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

In this video Tony reballs a GPU core. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I have used liquid metal on raw copper for years without issue. That is the only option available on laptops. You're correct that once it gets saturated and is no longer absorbing the liquid metal there's no meaningful difference between the two in terms of performance. However, a high quality nickel-plated surface is preferable for ease of maintenance. You don't get the hard clumps of liquid metal that have to be sanded off like you do on copper. On the plus side, liquid metal is more likely to stay exactly where you place it on raw copper, versus the slippery nickel surface. Given a choice between the two, I would choose the nickel.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I am not following you, but maybe I missed something along the way. What are you referring to here, brother John?

Has anybody tried this yet? https://store.steampowered.com/app/1472250/ROG_CITADEL_XV/ (it's free)

This freebie ends tomorrow. https://store.steampowered.com/sale/vermintide2giveaway So, grab it before it's gone. I didn't want Vermintide enough to bother buying it, but it is fun to play now that it is free. Now I can see why my son enjoys playing it so much.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Yeah, but it changed, and unless your CPU changed that should have remained constant. With the ASUS SP ratings, I haven't seen accuracy in the voltage predictions, and they also change. At best, it is a ballpark calculation. I have found the voltage required for stability was always higher or lower than the prediction. You know you have a good sample based on the results you are getting, so the ASUS SP rating or MSI Force number doesn't really matter in the grand scheme of things. These numbers are useful for identifying defective trash CPU samples that need to be RMA'd though. They do not identify whether or not your IMC is good or garbage. Having a screenshot showing the ASUS SP rating is also good for resale value because some people place tremendous stock in that number.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

That can happen with ASUS SP ratings sometimes as well. It's only a tool. While it is useful for some things, it is not a 100% reliable indicator of results. I wouldn't worry about that number changing too much. At the end of the day, results are what matter. Not a rating assigned based on a programmed algorithm... GIGO. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Does not work with the contact frame and I don't want to use the stock ILM. Copper is also inferior to nickel surfaces when using liquid metal. Yes, it's clean. I no longer use the Signature blocks because of the solid metal unibody housing. I prefer the clear plexi top on the Foundation so I can visually monitor the cleanliness of the cold plate. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I always use liquid metal under the IHS where the solder used to be. The only exception was with the Ryzen 5950X nightmare, which ran hotter after a delid because the chiplets were of inconsistent height, there was no longer any solder plugging all the gaps, and liquid metal doesn't work for that. Using thermal paste made the temps the same as stock solder (before delid). Using liquid metal on top will absolutely yield better results than thermal paste, but I don't want to destroy the markings on the stock IHS. Maybe I will buy a broken 13th Gen CPU on eBay (something very low end like i5) and use the IHS. I could lap it and use liquid metal and keep the stock IHS looking new. When I had to lap one of the 12900K IHS due to the damage caused by the delid process, that made a huge difference. But, the IHS was already destroyed so I had nothing to lose.

FYI - stop the nonsense YouTube ads with this awesome Chrome extension... SponsorBlock. It is my Windows substitute for Vanced MicroG on Android. Never wait for a video to play or be interrupted during the video again by advertisements.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Had I known it, then of course I would not have wasted my time on a worthless exercise that yielded no benefit. Based on the temps he is seeing, I would also suggest that @tps3443 not waste any time on it or risk damaging his CPU. I do not think he would see any benefit, just as I did not.

The 13900K does not have the super tiny SMD components on the PCB at opposite corners of the die like 12900K/KS does. There is nothing under the IHS except for the die. Like 12th Gen, it is very difficult to get the stock rubber gasket off of the PCB. More than on any prior generation of CPUs, the black rubber sealant sticks extremely well and removing it is very time consuming and tedious, especially near the resistors and capacitors mounted near the IHS. I used plastic razor blades and even using them it was hard to get the PCB cleaned off.

Here is the damage to the left wing on the IHS. You can see that it was deformed by pressing against it. I had to sand it down to take the burr off of the edge. I also sanded the underside of the IHS to remove the black rubber seal and to make sure it was perfectly flat after the damage to the left wing on the IHS.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

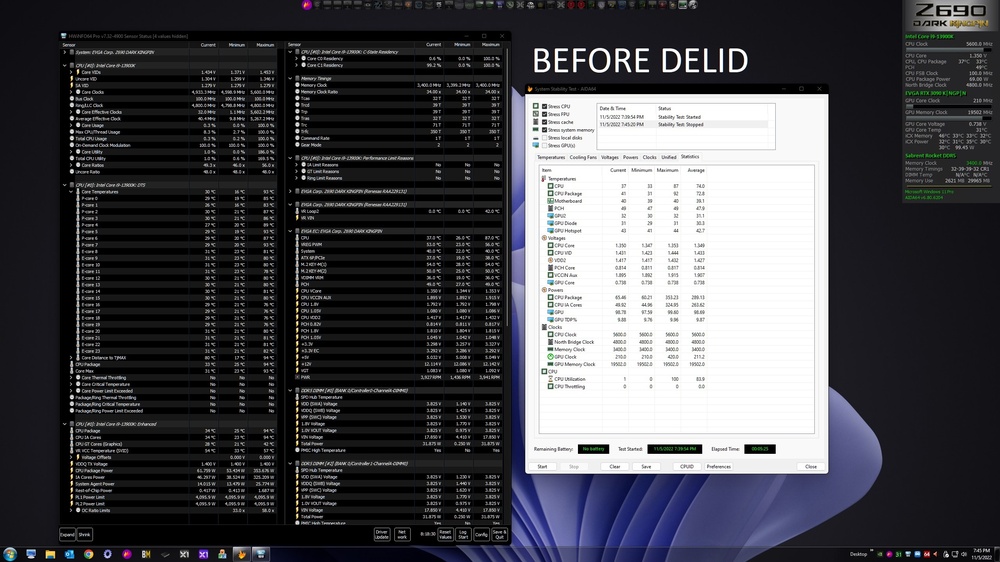

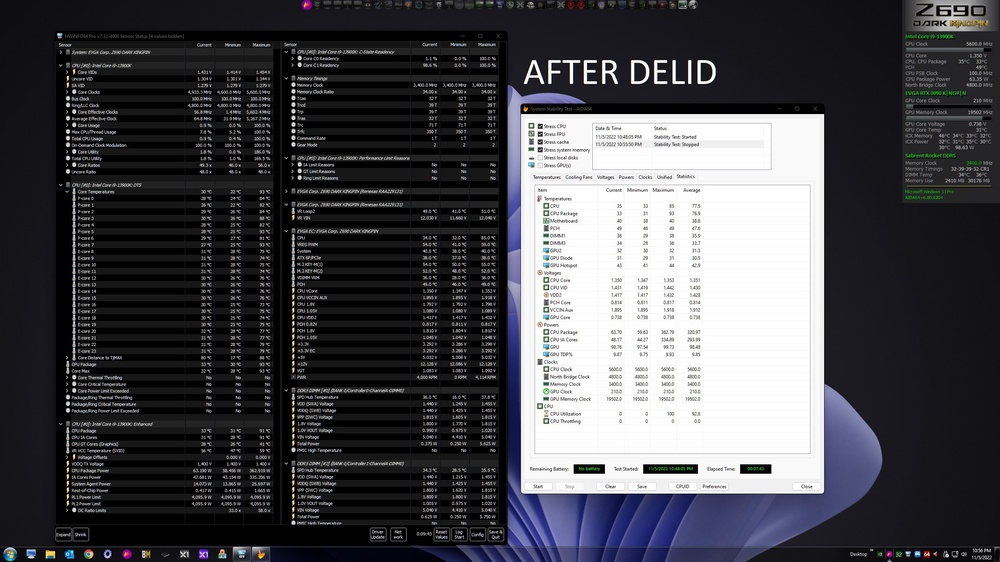

I also have probably better than average P-cores and not so great E-cores, probably average E-core quality.

Yeah, seems like I must have had a good factory solder job. I've not seen this happen before with an Intel CPU. I always have seen a benefit from delidding until now. The temperatures per core saw essentially no change. I was like "Wait, what? The same?" If I did not mark the before and after screenshots you would not be able to tell which temperatures were delid or stock solder except by looking at the clock in the system tray. It was also more difficult to delid than 12900K/KS. The IHS was trying to rotate counterclockwise instead of pushing straight sideways, and the solder was less brittle than normal. It seemed more like lead and it was a bit stretchy.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

100%. Looking really amazing, bro. How are you keeping the CPU that cool? Is the radiator outside in freezing weather?

I delidded my 13900K this evening and the temperatures before and after essentially did not change at all. They are within 1°C of the same. I regret having wasted my time doing it now. (And, yes... @tps3443 it did bugger up the IHS a bit, as it did with 12th Gen every time. I was able to fix it by sanding the burr off the wing.)

You know where I stand with AMD. I am glad that NVIDIA and Intel have more competition than before. Based on the fact that I haven't had a positive experience with anything from their brand in more than a decade, I am really not open to the idea of taking another chance. I still haven't recovered psychologically or monetarily from my lapse of judgment with 5950X abortion. I am not interested in purchasing a product that runs too hot and doesn't respond favorably to overclocking efforts even when you keep it cool. Based on what I want and expect to receive in exchange for my money, I can see no point in owning such a product.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I know, right. Buying something you don't want just because it is new, almost what you want, and some people love it, is a great way to end up disappointed... and broke. Always better to wait for what you want. Or, if you're going to buy something you don't want due to the certainty that availability will continue to be poor or the price is expected to remain unreasonable, then make it something adequate, but do it as cheap as possible. Your expectations are already in the gutter. If it turns out to be crap, it is exactly what you expected and there is no surprised look on your face. You have validated it to be crap. But, it was cheap crap... not the end of the world. However, if it turns out better than what you expected then you have something to be thankful for and happy about.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

This really clarified a lot. It's hard to make sense of the media nonsense with all of the talking heads and emo crybabies on the internet. They're mostly a bunch of stupid goofballs that enjoy running their mouths more than using their brains.

The quest for low power draw is very anti-enthusiast. While the specs might sound high to an uneducated consumer or gamerkid, the 4090 doesn't have a higher TGP or pull more watts than our Kingpin GPUs and shunt-modded cards have for several years. I view the high power draw, high TGP for a GPU and high TDP for a CPU as being very positive, not negative. You just aren't going to get more horsepower without it. You can gain efficiency and do more with less, but you won't accomplish as much as you will by doing more with more. Drawing less power almost always means less performance than you would find by using more power.

I always have been, and always will be, an advocate of doing more with more. Doing more with less only applies to gaining efficiency from where you used to be compared to where you are now. I'd much rather go the route of using as much or more power than ever before and taking the performance higher than is possible if you're doing more with less. Achieving similar performance using less power is progress, but it should also be a cue that you're not doing nearly as much as you could be by limiting yourself to the same amount of power as before instead of pouring on more power than you ever have in the past and just pushing the performance even higher along with it. The "efficiency" should be used to facilitate that rather than using less power than before.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

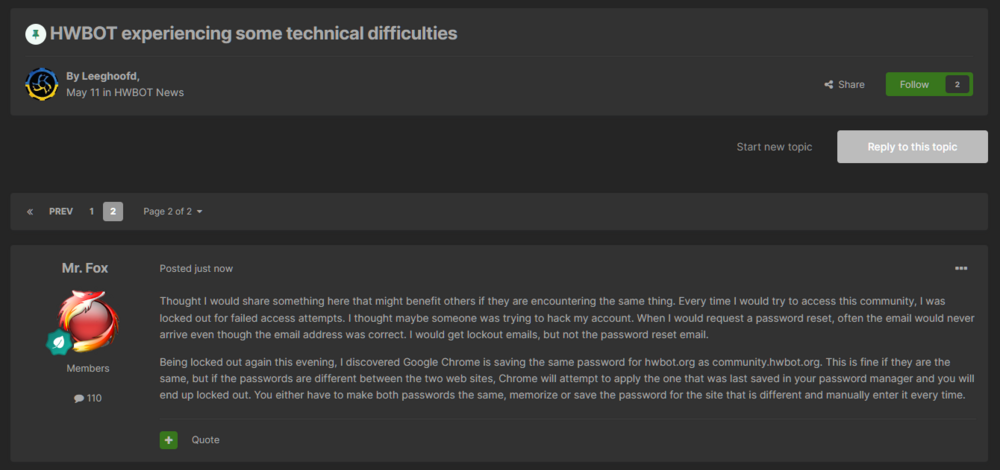

FYI - in case it helps someone else avoid the frustration... user error on my part... sort of. To avoid issues, recycle the same password for both accounts. Being smart and using a distinct password for each account doesn't work. Chrome thinks they are the same. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

If it comes out of Azor's mouth then it should be considered a lie until proven otherwise. AMD hiring a worthless snake like that clown makes them a less trustworthy company and harms their brand credibility on face value. Not mine... I've got no use for compromised garbage like that. Gateway to turdbook city in a non-portable form factor. No thank you... hard pass. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

That would not have required a mod firmware if there were still such a thing as a Kingpin GPU. 1000W power limit and unlocked voltage would have been taken for granted with the standard XOC vBIOS. Sad days with us being left with nothing but gamerboy crap. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

https://hwbot.org/submission/5113085_ | https://www.3dmark.com/3dm11/15355153

https://hwbot.org/submission/5113080_ | https://www.3dmark.com/3dm11/15355078