Posts posted by electrosoft

12 hours ago, Mr. Fox said:

For the lovers of Crocs it's not about looks. It's about how it feels. But I agree with Fernando in that it is better to look good than to feel good.

🤣

-

2 hours ago, Raiderman said:

What the hell man!!!! Et tu, @Raiderman?!?! 🤢

On the other hand, the system looks great as long as you remove that abomination from the foreground.... 🤣

-

Decent video showing once again the power of X3D, but more importantly the overall futility of 6000 vs 6400 vs 8000 on AM5, along with the strength of 2DPC boards vs 1DPC boards with 800 series chipsets....

I'd like to see this run back on non-X3D chips....

-

10 hours ago, electrosoft said:

Nice!

I'll have to give these a once over and see what they do on my Hero x870e and 9800X3D w/ T-Force 8200 sticks and B650i Aorus Ultra (1DPC) and 9600x.

I've just been running 2200/6400 (Hero) and 2000/6000 (Aorus).

I forgot to mention I also have those Kingspec 8400 sticks, currently running tight CL28 2000/6000 on the B650i, that I'll also test.

9 hours ago, Papusan said:

Where are the equally cheaper 5090s? Sub-$1800 would be much easier to swallow.

GeForce RTX 5080 now 10% below MSRP, RTX 5070 and 5060 Ti down 13–14% at MicroCenter

See also my latest post here..... Everyone should be able to be offered something better from Redmond R***ds.

Sheesh, my local MC has 25+ of those 5080s in stock for $899.99.

I'm still waiting for 9070 XTs to bottom out a bit more to pick up another one for my SFF testing.

8 hours ago, tps3443 said:

Who would have ever thought that a 5090, even at a 0.895V undervolt, still uses over 630 watts for 1080P Furmark! Lol.

Furmark is excellent for stability testing very quickly. (If your card is shunt modded that is)

I have avoided and will continue to avoid Furmark like the plague, but it's always fun to see others run it for shiggles.

9 hours ago, win32asmguy said:

Starting to run the Pro Max 18 Plus through its paces.

https://www.3dmark.com/spy/58934149

It has full W10 driver support which is great. Surprisingly enough, WoW runs properly on the P-cores without any micro managing via Process Lasso just like it does on W11. SuperPI also selects the best P-core when run as well so CPPC / Thread Director must be working better now with Arrow Lake.

I have heard the GPU can sustain 150W and that leaves around 50-60W for the CPU in combined loads. However, when running OCCT while I was working on another project, I noticed it was down to 180W combined load after a while. It was also draining the battery (12% over the 1-hour test), so the advertised 200W really is not sustainable or good for battery health. I am hoping the "Optimized" performance mode is one where it keeps limits out of the battery drain range.

Whew, CPU still toasty? What was your final analysis of the Alienware 18 5090 you have (had?), overall and in comparison? WoW on both systems?

Full W10 support on the newest hardware is nice! I didn't realize it came with the 285HX either.

-

3 hours ago, Mr. Fox said:

The latest Strix X870E-E BIOS improved the memory tuning slightly. Finally able to get the latency down from about 58ns to below 56ns. Nothing spectacular, but a small improvement is better than a small regression. I'll play with retightening the tRCDWR to see if I can eke a little more mojo from it without losing any stability. It's interesting that it's not better, considering the overhyped "Nitropath" gimmick ASUS chirped about and played up to be such a great invention. It has that feature but I've yet to identify any real or tangible value that it added.

It is still not as good as the AORUS Master, but I have been too lazy to move the 5090 over to it and install the 4090 in the Strix. I haven't felt like messing with the cooling system and the tubing/fitting orientation is different. When I get around to it I am going to try to normalize the two configurations so I can freely move the GPUs back and forth without any kind of rigmarole. The Strix has the better of the two 9950X CPUs in it, so I should probably swap those out while I am at it. Maybe I will get ambitious when I install the EVC2 mod on the 5090 and do all of that at once.

Nice!

I'll have to give these a once over and see what they do on my Hero x870e and 9800X3D w/ T-Force 8200 sticks and B650i Aorus Ultra (1DPC) and 9600x.

I've just been running 2200/6400 (Hero) and 2000/6000 (Aorus).

-

3 hours ago, Papusan said:

This is how @electrosoft's favorite brand wants to push their trashware on consumers. Tempted, brother? 😀

GamingLaptops moderators warn of MSI marketing bots flooding threads on Reddit

I'm not surprised. Targeted and blanket marketing across many companies and spectrums, like many things nefarious, will use the tools available to them and now it happens to be AI and bots.

Still sad to see from MSI 😞

(Hugs Vanguard while rocking back and forth and asking MSI, "Why? WHY?") 😂

3 hours ago, Papusan said:

Steam Beta brings TPM/Secure Boot checks to System Information

Secure Boot and TPM 2.0 have become requirements for an increasing number of games, mainly those with stricter anti-cheat systems. Recent examples include EA’s Javelin anti-cheat in Battlefield 6 and the upcoming Call of Duty: Black Ops 7, both of which will not launch without these security features enabled.

Steam beta helps players prep for games requiring Secure Boot or TPM

Steam Hardware Survey will also start collecting Secure Boot and TPM data

As always. No love for Gigabyte

If you're that concerned, your best bet is a games-only install of W11/10 with nothing else of value on it. The low-level access afforded to anti-cheat software is pretty alarming, but it shows the lengths some gamers will go to cheat, and the lengths companies will go to stop them.

-

15 minutes ago, Mr. Fox said:

I suspect it is all part of the Green Goblin's evil master plan to eff things up for everyone in the world except for themselves, but especially AMD. Status quo. Best time to make a strike against the enemy is when they are at their weakest. And keep hitting them until they are dead. Playing fair and allowing them to come up for air is a stupid idea if you're playing for keeps. Dead enemies don't fight.

Seeing as AMD offers a complete vertical offering with CPU and GPUs, it makes sense even if it makes me feel a lot of icky.

I suspect over time, based on how things go, Nvidia will continue to purchase more of Intel and could actually acquire a majority stake if not buy them outright at some point. This is the logical conclusion and AMD vs Nvidia is headed to final boss mode showdown.

Heck, maybe Nvidia can right Intel's CPU division down the road.

Let's not forget Nvidia is an American company too as is AMD.

A lot of this is on Intel....

And a lot of this is on AMD....

-

2 hours ago, tps3443 said:

Updated Nvflash for flashing FEs is possible now?

11 minutes ago, Mr. Fox said:

I saw something on overclock.net where a guy had done something to override the flash blocking, but it was not clear if it works on FE. I saw someone ask, but he had not responded last time I checked the thread.

Only one way to find out @tps3443. Give it a whirl. If it works, that would rock as you could run the gigachad!

----

Gigabyte Windforce 5090 on sale at MC atm for $1999.99. I know my local MC has 5 in stock.

Best Buy had a small drop of 5090 TUFs for $1999.99 today. They're all sold out in my region though.

-

As predicted, by mid-Sept at the latest, price drops on the 5090 bottomed out (don't say I didn't tell ya a month or so ago). Now stock is slowly drying up and prices are slowly trending back up on several models, especially MSI cards, which are also out of stock everywhere across many models, including the Vanguard, which has gone back up from $2499 to $2599/$2669. Even the Ventus is back up to $2399.99.

On the plus side, Asus Astral has dropped to $3199.99 (yay?) at Da Egg and Amazon atm....

9070 XTs continue to come down in price, and a few models are back to MSRP, including the Taichi 9070 XT at Microcenter for $729.99 ($693.49 with the MCC discount). I might snag one, as the Taichi yet again has the most robust build this time around, for my Jonsbo Z20 fun times. My SFF test rig is already rocking a dirt-cheap, open-box Cooler Master 850 SFX w/ 12VHPWR connector.
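
Quick sanity check on the Microcenter card math, as a minimal Python sketch. It assumes the MCC discount is a flat 5% off the shelf price, applied before sales tax, and `mcc_price` is just a hypothetical helper name for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumption: the Microcenter card (MCC) discount is a flat 5% off the
# shelf price, applied before sales tax.
MCC_DISCOUNT = Decimal("0.05")


def mcc_price(shelf_price: str) -> Decimal:
    """Hypothetical helper: shelf price after the assumed 5% MCC discount, rounded to cents."""
    discounted = Decimal(shelf_price) * (Decimal("1") - MCC_DISCOUNT)
    return discounted.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # Shelf prices quoted in this thread for the Taichi and Reaper 9070 XT.
    for card, shelf in [("Taichi 9070 XT", "729.99"), ("Reaper 9070 XT", "650.00")]:
        print(f"{card}: ${shelf} -> ${mcc_price(shelf)} + tax")
```

Using `Decimal` instead of floats keeps the cents exact, so the rounding to the penny is predictable.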

-------------------------------------------------

On 9/16/2025 at 2:28 PM, Rage Set said:

What's up everyone! I am sorry I have been away for a while. I am trying my hand at YT and hopefully building a large enough following to get sponsored by a CNC maker or continue to build my reserve to buy one.

I haven't had the urge to upgrade any of my PC's to the latest and greatest (although I am thinking about the 9950X3D). I came across some local listings on a 5090, that weren't exactly deals (used, still above MSRP). The days of great used hardware prices are dead - people want 80 to 110% of their purchase price, and with new cards closing in on their MSRP's, I don't know who is buying used at these prices.

I think that if Intel releases the B770 and it's under $400, I'll pounce on that card. I only need something good enough for video editing and CAD.

I hope you and your families are doing well. I'm back here fully, doing my normal lurking.

Glad to see you check in @Rage Set! Hope everything is going well in life, and yeah, 5090s and even 4090s are still commanding a pretty penny. As stated above, prices are slowly trending back up on 5090s in the retail channel along with stock dropping. I'm seeing many 5090 models out of stock at Newegg and Microcenter now, where a month ago almost all of them were in stock everywhere.

-------------------------------------------------------

12 hours ago, Papusan said:

Let 'em burn with the overpriced cards that no one wants. Let 'em bleed money.

Estimates by JPMorgan and Morgan Stanley suggest Nvidia may be on track to produce between 1.5 and 2 million of these GPUs before the end of the year, potentially leaving it sitting on a huge stack of unwanted cards.

Funny to see China sour on Nvidia a bit lately, especially with all the improper channels to get cards into the country at better prices. As long as they can memory mod 4090s and 5090s, in tandem with the cost of a 6000D, Nvidia will win out in the end either way. They can just slow production and redirect dies elsewhere as needed.

12 hours ago, Papusan said:

Only $1,119 USD. For a 5070 Ti. Disgusting. Asus can't stop maximizing profits from stupid consumers/gamers. Amazon exclusive, LOL

ASUS TUF Gaming RTX 5070 Ti 16GB GDDR7 Released – Amazon Exclusive

ASUS has incorporated a range of reinforced electrical components, including TUF chokes, MOSFETs, and advanced 5K capacitors, engineered to sustain higher temperatures and provide greater stability than standard solutions.

Its Amazon-exclusive release strategy underscores a targeted distribution model while providing gamers with a GPU optimized for stability, thermal management, and next-generation GDDR7 memory performance.

If stability is a problem with cheaper SKUs.... why make cards that ain't stable? Here in Norway we have a 5-year warranty. Some places even 7 years. Good luck A$$us if you cut down on quality & QC. There is something called "payback" time. There is no place here you can offer/sell pure trash. Not here at least 🙂 Good luck, greedy bastards! Our very good consumer laws will crush you. And that will help the rest of the world's consumers. Go back to the drawing board and make products that will last 7 years, or you'll lose money. Not this scam. God bless real consumer laws 🙂

Two points here.

#1. The Japanese Yen is very weak atm so I would expect higher costs.

#2. Making a better / more robust version of something doesn't mean the original isn't still competent. Look at PSU tiers, where Gold is more than enough even with Platinum and Titanium on offer. Look at Asus's own GPU tiers, all with the same die, and the price variance based on the surrounding components. This is no different.

On the other hand, this is Asus...... 🤣

12 hours ago, Papusan said:

Modern times. Exactly what Jensen said... Pay more, get more.

All you have to do is use DLSS and FG..... (massive eye roll here)

But in all seriousness, this is how it goes (sans poor coding/optimization). Devs push the boundaries with upper-end settings. Modern cards struggle to run those high-end settings (4K, High/Ultra, etc.) as is. As time passes and hardware advances over X amount of years, what was once nearly impossible to run eventually runs with ease.

I like to call this the 'Crysis Paradox' 🤑

-----------------------

16 hours ago, Reciever said:

So I finally have a formal staycation lined up for Christmas time, the first time in maybe 6 years... So naturally I have some work lined up lol

2x GTX 690 w/ accelero xtreme

1x ATi HD 5870 w/ accelero xtreme

2x GTX 280's (1x w/ accelero xtreme)

2x 9800 GTX's w/ accelero xtreme (Having to modify, doesn't line up perfectly)

2x ATi HD 5970's (1x accelero xtreme)

1x AMD R9 280X TRI-X

1x AMD R9 390X TRI-X

1x ATi 4870x2 Reference

1x GTX 295 Dual PCB

1x GTX 970 MSi Gaming

1x RTX 3090Ti

1x 7900 XTX Red Devil (Never Finished)

1x GTX 1060 3GB (Reference)

I might pick up a few more GPU's if the price is right.

Thanks to @Mr. Fox's writeup I'll likely start to pick up some of the water cooling equipment so I can put the 7900 XTX and 10850K (also courtesy of The Fox!) under water and get a bench built around that next to my Daily Driver. Luckily I am still working more than I sleep, so picking these parts up should be more than plausible...

If you guys have any 3rd party heatsinks for older cards feel free to reach out, I seem to have a fascination with accelero xtremes 🙂

At this rate, you're going to be in competition with @Papusan for collecting and benching older cards!

Do either of you have a dedicated wall kind of like JayZ2C has for his EVGA card collection for your collections?

-

4 hours ago, Mr. Fox said:

This is what happens when you build a desktop GPU like a turdbook instead of a one-piece PCB. This is why I instantly rejected it when I saw how it was made. Predictable outcome with a product deliberately designed with multiple points of failure.

Final verdict:

"Too many points of failure"

"Stick to something traditional"

I'm trying to figure out how the card was damaged to that point. They said UPS damaged it, but given the way it's designed, there's no way the core comes away like that with zero damage anywhere on the outside and zero damage on the external PCIe connector, yet clearly bent/damaged pins on the internal mating connector and core contact damage.

My guess is someone messed up blocking it and/or trying to reapply LM.

The best thing is Tony learned a lot from working on one, which will refine his process for future 5000 series FE work, including knowing to charge more for those repairs due to the complexity and headaches.

------------------------

*Sigh* and another scam over on OCN......

https://www.overclock.net/threads/asus-tuf-rtx-5090.1817558/page-2?post_id=29509662#post-29509662

You have to understand that with so many compromised accounts, many are still in the proverbial chamber for bad actors trying to get people to pay with Zelle, PayPal F&F, or Venmo F&F.

You should never, ever use these methods unless you personally know the person. I was in discussion for a few GPUs recently and the breaking point was payment. I'm never sending F&F, no matter how good the deal is....

You suck it up and offer G&S like every other real business and entity offers.

-

1 hour ago, Clamibot said:

Well, looks like 9070 XT prices are finally coming down. They're available at my Dallas Microcenter for $650 as of the time of this post. There are lots of them in stock too!

Yup, and HUB dropped a nice, timely video revisiting and comparing all the models across every spectrum.

King of the hill overall for cooling is yet again XFX Mercury OC, but that is also one of the largest. It is $809 right now down from a high of $929 but still crazy expensive. Best GPU and hotspot temps. It can fit in a Jonsbo Z20 though.

The Reaper is a good compromise of price and form factor, along with a sub-1000g weight (~905g). The next closest in weight is the Asus Prime at 1100g. That puppy is asking for some seriously small SFF loving. $650 is icing on the cake. Toss in 5% off with the Microcenter CC and you're looking at $617.50 + tax.

Performance wise, they are all within ~6% of each other at most and usually much closer from the best to the worst.

Steve also addresses the Reaper model specifically in this video: why it went out of stock for so long (PowerColor, like many AIBs during the initial rush, was focusing on higher-priced, bigger-margin models) and why everything is coming back into stock now.

I might scoop up a Reaper. It checks a lot of the boxes for SFF builds and still gives you basically the same performance on the desktop overall.

-

1 hour ago, win32asmguy said:

I would say the same about the AMD models. For a while there I had the Clevo NH57 which actually ran fine with a 3950X installed and CCD1 disabled. It was much more efficient than any of the other 8-core CPUs and was also hitting DDR4-3600 with the 32gb B-die kit.

I think my Alienware 18 may be one of the ones with a poor CPU paste job, as it's reaching 100C under load. The GPU stays under 80C which is fine, although the palm rest temps get up to 105F so it's kind of warm to use.

I have gotten used to being able to tune almost everything on the Hydroc G2 including GPU TDP from 95W to 175W. Having something that can do less feels not as nice. I guess if a default profile happens to be what I want and comfortable to use then its fine.

The MSI Raider 18 would have the exact same issue with retaining RGB, along with Acer. It's a shame they treat it that way; as it stands, only Clevo and Eluktronics seem to have fully configurable RGB including boot override.

I am surprised yours goes up to 175W on the 5070ti. I thought those were limited to 140W including boost.

The default RGB would drive me nuts. Having to run MSI Center and SteelSeries GG software irritated me more than just running AWCC along with a lot of other unneeded software. Once I was done with my eval, I blasted all that and did a clean install, and scores in TS and SN immediately went from "good" to "excellent" just from the clean install. I can live with just AWCC. It isn't nearly as crippling as Asus's AC on the two Strix 18 4080s I tried and returned.

My next step is to try and get ahold of the newest Intel APO to give a whirl on this and the wife's 14900KS SP109 for WoW.

---

Yikes, yeah that's not good at all on the Alienware. I know samples are supposed to be seeded like regular line models, but only an idiot wouldn't assume samples sent out for evals/reviews get double-checked a bit to make sure they don't get dinged, or even that better-performing samples make their way out the door.

Here is a run I did on my Alienware 18 with the 5070ti. You can see the 5070ti is pulling ~175w. This is in balanced mode. If I switch to overdrive/performance mode, the 5070ti drops back to 140w to give the CPU more juice. CPU topped out at 80c and GPU at ~63c in the Timespy run. With the fans left on their default profile, they went to maybe 50% max? I initially thought this was all they could do and went, "wow, that's quiet!" Then I switched to performance later and thought to myself, "Ah, there they are!" Nowhere near as bad as the Raider or other laptops I've tested though.

I'll be curious to try some overclocking given the 175w budget and already cool running temps later.

---------------------------------------------------------

In performance mode, letting the CPU run free it pulled up to 170w and the fans were automatically aggressive and temps topped out at ~68c. In this mode the 5070ti takes a back seat in testing and tops out at 140w.

-----

And just as a refresher, here is a 275HX in my Predator 16 OLED eval unit throttling like a champ: on an ice-cold run it clocked in at 33k, which quickly dropped to 26k by run 3, whereas my Alienware stayed the same throughout....

It is a much smaller form factor though. I'm upgrading the wife to this one from her Asus Vivobook 16 w/ Ultra 185 and 3050 OLED for the back half of our travels this year. Even dialed down, WoW was crazy chunky on that Asus at 1080p. That was pre-Tazavesh.

Ice cold run:

3 runs:

-

4090 vs 5090 @ 8K: the 5090 is anywhere from 24% to 58% faster.....

The 4090 runs out of VRAM in DOOM: The Dark Ages, so toss out that 67% difference.

Overall average difference: ~43% faster on the 5090.

------------------------

On 9/6/2025 at 1:29 AM, Mr. Fox said:

So, as best I can tell, just playing a game like DOOM: The Dark Ages with no overclock, the GPU is pulling around 700W now and between 950 and 1000W from the wall. Boost clock is a little over 3000 MHz with no fluctuation.... just a flat line. Speedway is the same: stock boost is a flat-line 3000 MHz at about 1100W from the wall. The power cable is not even getting a little bit warm to the touch.

I ordered a new Cyberpower 1500W UPS because both of the 800W units that I have power off under 100% 3D load. They used to beep once in a while with the 4090 but never powered off. They clearly cannot handle what this shunted 5090 is requiring of them.

I also ordered a new WireView Pro with the down-facing connector so I can actually see how many watts are getting pulled through the 12VHPWR cable. GPU-Z is showing around 480W playing DOOM, so it may be more than 700W just stock. Both of those will be here tomorrow.

Here's the stock clock flat-line graph from Speedway. Before the shunt mod, a stock run was something like 2850-2870 boost clock and constantly fluctuating. So, without touching anything, it is running much more aggressively now.

While I am waiting for those items to arrive I am going to switch to the stock vBIOS and see if the behavior is the same, better or worse compared to the GigaChad vBIOS after the shunt mod.

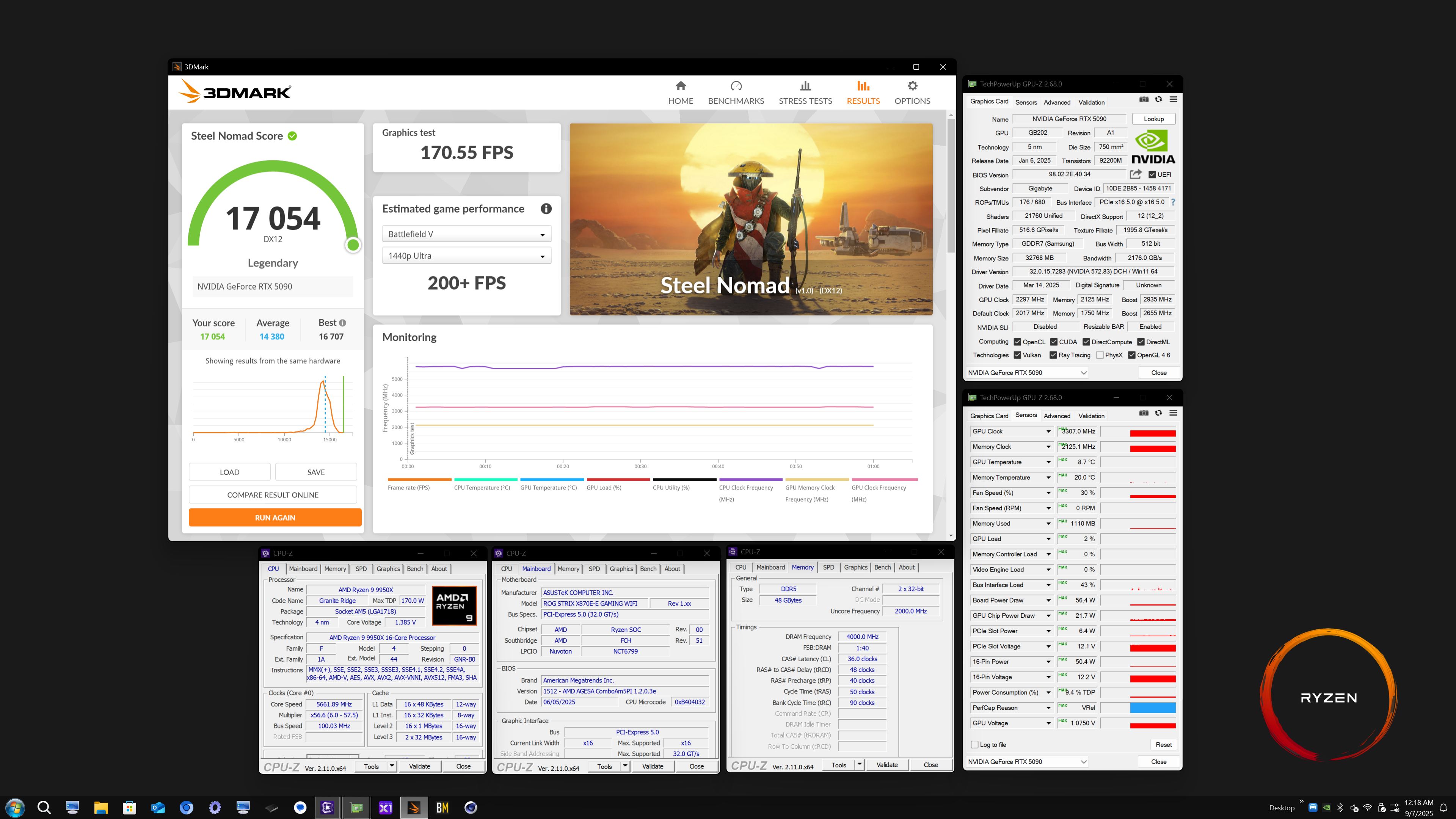

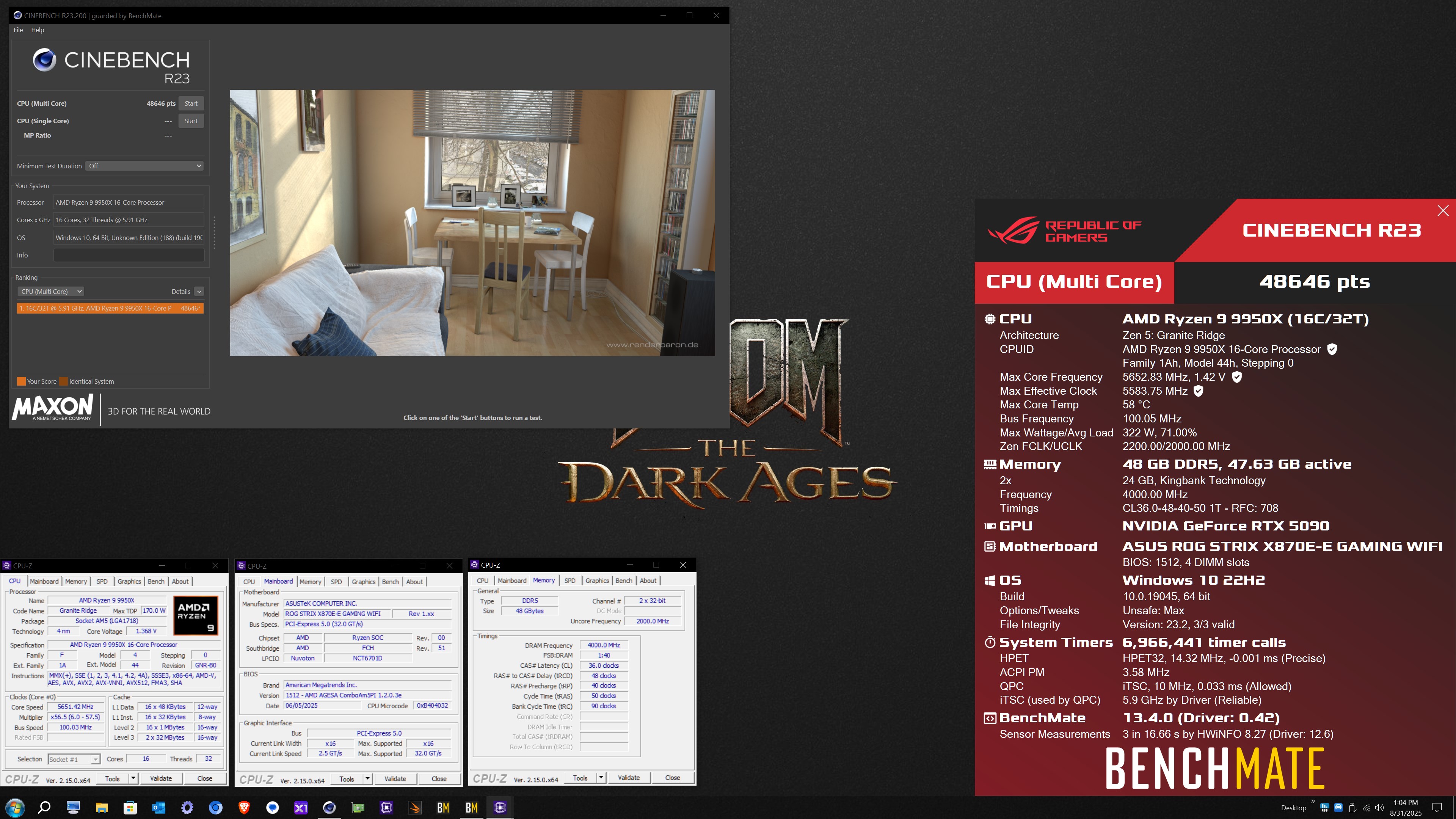

13 hours ago, Mr. Fox said:

Nice! Breaking the 17k mark on SN. Are you going to push for higher numbers? Chilled 4090 vs chilled 5090.

I'll be curious to see how the WireView works out for you too. As @tps3443 alluded to, it is hit or miss for temps, but so many variables are in play, and as always PEBKAC is a factor, so let's see...

-------------------

On 9/6/2025 at 11:12 AM, win32asmguy said:

Started testing out the AW 18.

It came with Sunon fans that are not whiny, which is nice. I agree the pitch is improved, and the noise levels in general. I can also have this sitting on my laptop stand without it causing whine or a pitch change, which is much better than the M18 R1. It's definitely a better air cooling solution than the Hydroc G2.

The RGB was Aurora by default and overriding to teal still does not set it as a boot effect. Ideally I want no RGB on any subzone by default (may have to unplug the mobo connectors) and white only on the keyboard. I could even live with solid teal everywhere but it seems like the RGB controllers don't have the few KB needed to store a profile to memory...

Installed the Kingston Fury XMP 6400 kit. It booted at 4800 but after enabling XMP it was not booting at all. I have heard that SREP still works on these so maybe manual tuning is possible. It will be painful though as not even a RTC reset is recovering from these memory brick scenarios. There is supposed to be a validated ADATA 7200MT 2x16GB XMP kit coming for these but I cannot find any info about it other than a part number in a support document.

It's a shame these still ship with E31. This is a perfect example of why I think they should ditch it and use PTM7958SP, just too much risk of it leaking through the barrier. I would certainly be fully removing the board and carefully replacing it before it became an issue. I still hear reports of wide temperature variance on these new models, which is likely from poor QC with E31 application.

Yeah, the noise levels (especially sitting next to my former Raider) were immediately noticeable. I also think it is an AMD issue with their mobile variants even back to AM4. They just boost super aggressively and are overly aggressive with their cooling profiles. I've had 4 AMD laptops in my possession and the fans are very present. Three Acers, one MSI.

Overall, the cooling system is very good on the new Alienware 18. Looks like Alienware might be playing BIOS customization games depending on model color and theme. My teal model defaults to solid teal no matter what unless I override it with ACC.

Not retaining settings is the new tactic by AIBs and OEMs to force you to use their data harvesting software at all times. Asus literally removed the retention mechanism in a BIOS update on the Z690 Strix D4 to try and force Armory Crate down our throats. Maybe SignalRGB will work.

Glad to know I didn't crack it open and install those sticks just for it to not work. Score one for other projects and life keeping my attention atm.

My Alienware was an eval review model so while not always valid, I do tend to think the OEMs tend to double and triple check their samples sent out so there's that.

How are your temps and scores so far? The 5090 is more powerful, but the 5070ti pulls 175w too, so the overall cooling scenario should be about equal unless your 5090 is pulling more.

-

6 minutes ago, Papusan said:

The 5050 should be Nvidia's only card this gen with 8GB VRAM. And the 5060 with 16GB VRAM shouldn't be much more expensive than the 8GB variant. Maybe +$25 on top at best.

In an ideal world, absolutely, but Nvidia will eventually be brought kicking and screaming into more baseline VRAM, both mobile and desktop, from top to bottom.

With that being said, are there actually any games that can't run at 1080p medium with 8GB of VRAM?

I do think Nvidia shot themselves in the foot when they offered the 3060 with 12GB. They opened Pandora's box a smidge... 🤣

If Nvidia is moving the baseline with the 070 class on up now with Supers, and slowing orders of 8GB 060ti variants, eventually it would make sense to:

050 = 8GB

060 = 12GB

060ti = 16GB

070 = 18GB

070ti = 24GB

080 = 24GB

090 = 32GB

The only fear of course is when 6000 series drops they regress back to non Super configs.

-

20 hours ago, Papusan said:

Nvidia have sold more 5000 series cards than anyone thought at the beginning of the year. Another nail in the AMD cards' coffin. Right into the 9070XT heart. And they need to stop pricing their products so close to Nvidia. Won't work. Fire Azor!!! Today.

Rumor: Nvidia to Replace RTX 5070 Ti and 5080 With SUPER Models in October 2025

Published 13 hours ago by Hilbert Hagedoorn

If true, this move suggests Nvidia wants to lock down the market for graphics cards priced above $550 while future-proofing them with larger memory pools.

Nvidia is doubling down against AMD and at least finally admitting gamers want more VRAM: 18GB and 24GB variants, along with the 5070 getting a performance boost. It will close the gap between the 5070 and 9070 and give the 5070 more VRAM.

24GB variants of the 5070 Ti and 5080 just put the nail in the coffin for the 9070 XT atm unless prices seriously change.

19 hours ago, Mr. Fox said:

Too bad for the dumb-dumbs that already wasted money on the latest GPUs with only 8GB. Sucks to them, but also not cool on NVIDIA's part to screw them over like that.

For anyone that believes NVIDIA actually wants to futureproof (i.e. sell fewer) GPUs, I have some ocean beach property in Phoenix that I am selling very cheap.

5060 8GB makes sense for budget minded buyers. 5050 is a farce. At least AMD and Nvidia give buyers a choice between 8GB and 16GB models of the 9060 and 5060ti. Recent market data shows buyers are greatly gravitating towards the 16GB variants....shocker.

Yeah, Nvidia wants to lock down the market even more. We need AMD. They keep Nvidia on their toes at least a little bit. AMD is the only reason we're getting Supers with memory bumps, along with maybe a bit of stagnant sales atm from those who want more VRAM.

Future proof? I'm sure that is blasphemy in the halls of Nvidia....

18 hours ago, Mr. Fox said:

Alienware has met its match... they no longer hold the title to "worst" thanks to HP. They are now the runner up.

"No one can out screw up a known boutique mash up like Dell and Alienware!!!"

**HP and Voodoo have entered the chat**

🤣

2 hours ago, Mr. Fox said:

We are surrounded by stupid.

Here is some new well-deserved Razer hate... https://youtu.be/8w8m1UuLsEQ

I received the shunt resistors yesterday so I will probably do the mod tomorrow.

w00t! Looking forward to it! Seeing results from stock, AC, water, chilled and now shunted shows a nice progression.

-

2 hours ago, Papusan said:

Nvidia have sold more 5000 series cards than anyone thought at the beginning of the year. Another nail in the AMD cards' coffin. Right into the 9070XT heart. And they need to stop pricing their products so close to Nvidia. Won't work. Fire Azor!!! Today.

Rumor: Nvidia to Replace RTX 5070 Ti and 5080 With SUPER Models in October 2025

Published 13 hours ago by Hilbert Hagedoorn

If true, this move suggests Nvidia wants to lock down the market for graphics cards priced above $550 while future-proofing them with larger memory pools.

The scary part: Q1, OK, I get it, since AMD had not released the 9070 XT yet and Nvidia was the only new game in town, so 12%->8%. But in Q2, with AMD's brand-new cards fully on the market, Nvidia's market share grew yet another 2% while AMD's contracted by that same 2%.

AMD has to get it through their thick skull that they will need to be better and priced super competitively to wrestle away customers, because if not, why would those customers entertain switching? I mean, I held onto my 9070 XT because it is an absolute 1440p WoW beast with the newest drivers and WoW updates. I am blown away by its performance and smoothness on the wife's 32" 165Hz Asus display.

Even back in the 5090 camp, I still stand by the 9070xt handling the lows better than the 5080 and 5090 in WoW. That hasn't changed.

But those prices are going to continue to hold AMD back.

-

Well, this tracks with the Steam Survey and AMD's new cards basically having no breakthrough while NVIDIA continues to grow. NVIDIA now owns 94% of the market, up from 88% this time last year. Insane....

But it makes sense considering how closely the 9070 XT has been priced to the 5070 Ti. At $599 vs $749, the 9070 XT makes sense. At any other pricing, it's a no-brainer for the most part to go with a 5070 Ti.

Other tiers are just too closely priced to make an AMD purchase a potential no brainer. AMD needs to compete on price and performance.

Understand, for every 100 GPUs sold, 94 go to Nvidia and 6 go to AMD. I'd like to see numbers dialed down explicitly to gaming but those numbers are bonkers.

Newest Steam survey released just yesterday shows the 5060 is the fastest growing GPU and yet again AMD has not made enough of an impact to enter the charts.....

https://videocardz.com/newz/jpr-nvidia-discrete-gpu-market-share-reaches-94

-------------------------------------------------------

Insane gains at basically the same price, $299.99 (even cheaper once you factor in inflation)....many will still complain because it's their nature....

-

It was so strange a few days ago to see 5090 FEs available on Best Buy almost all day, with the "See Details" button blue, before they eventually sold out. I didn't want one (explained below), but it was still good to see. Makes sense considering in the EU they are actually being discounted a bit now in certain regions.

------

Check out the temps, which are great too.

The Astral has almost the exact same dimensions as a Vanguard 5090. This case was in my top 3 before, but after seeing it can fit a Vanguard 5090 sized card and actually seeing it in action here at QuakeCon from a gent in the BYOB line, I'm sold on it. It seems damn near perfect and checks all the boxes..... plus it's only $99.99 now. I especially like the no-riser design.

Timestamped to the gent in line.....

It can handle monster quad slot behemoths like the Astral, Vanguard and more. Looks like a fun case to play around with too.

---------------------------------------------------------------------------------------

20 hours ago, Mr. Fox said:

I figured I had better do it while I had the wild hare up my butt or I'd end up deciding it wasn't worth the effort. 🤣 I wheeled everything into the living room and benched from the comfort of my recliner.

Definitely power-limited. There is no point in repeating these benchmarks without a shunt mod because it's maxed out on the power budget now. But the clocks are holding a lot higher with the chilled water. These were pulling less than 1.000V under load. +300 core offset. I will have to order the resistors Brother @tps3443 mentioned since all of my spares are too high resistance. Travis, do you have a link where you bought yours? Digi-Key or Mouser?

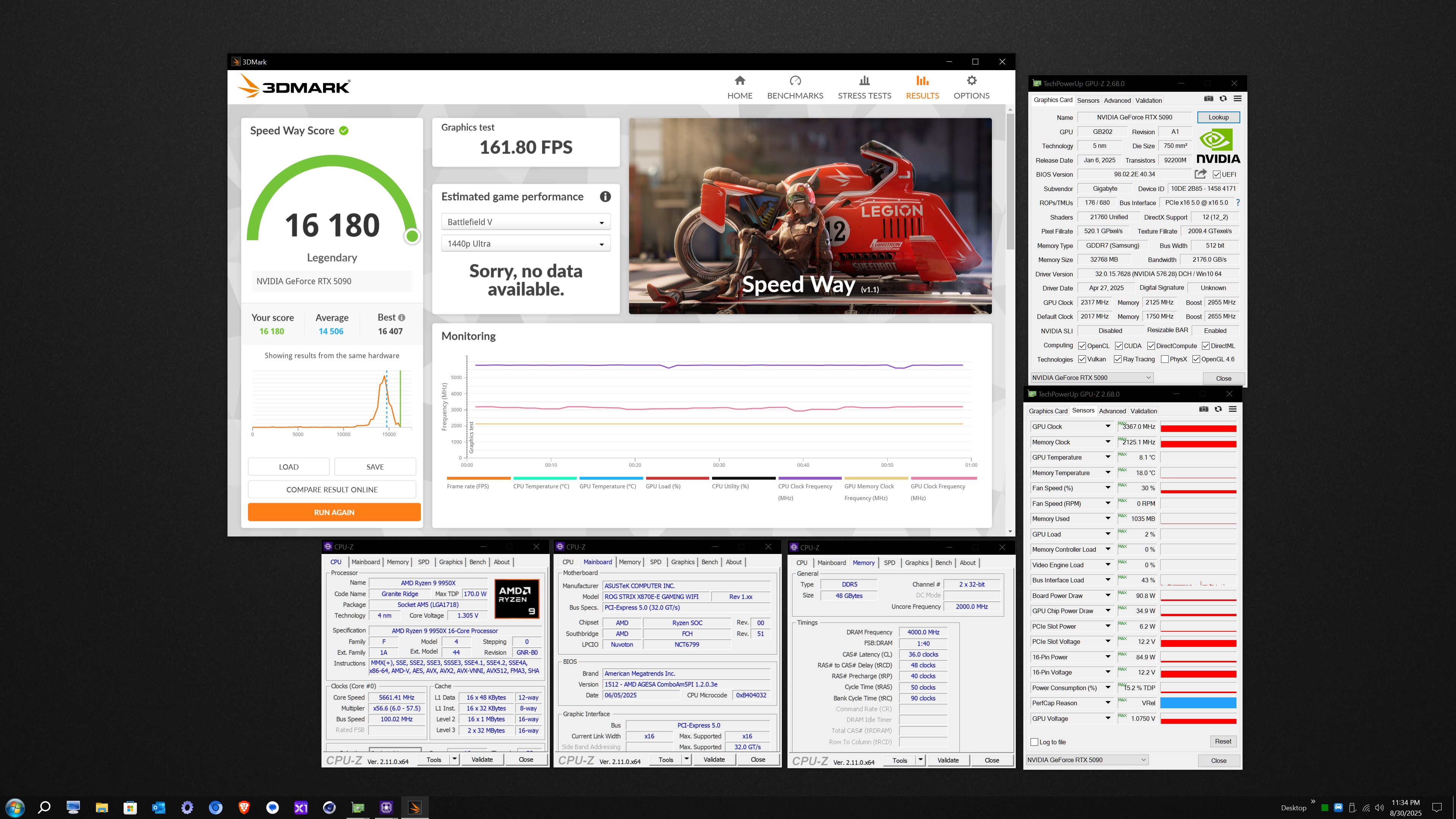

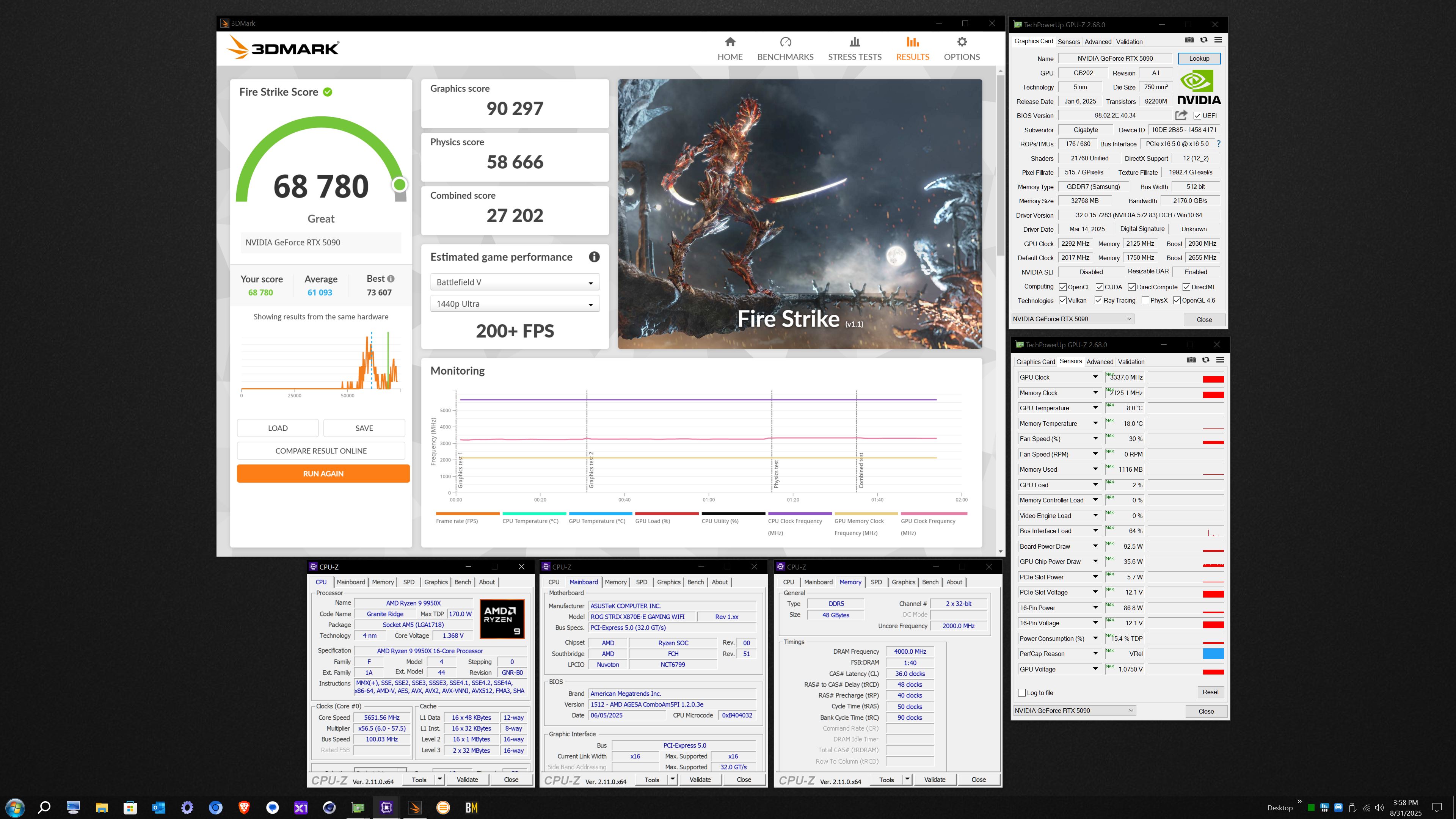

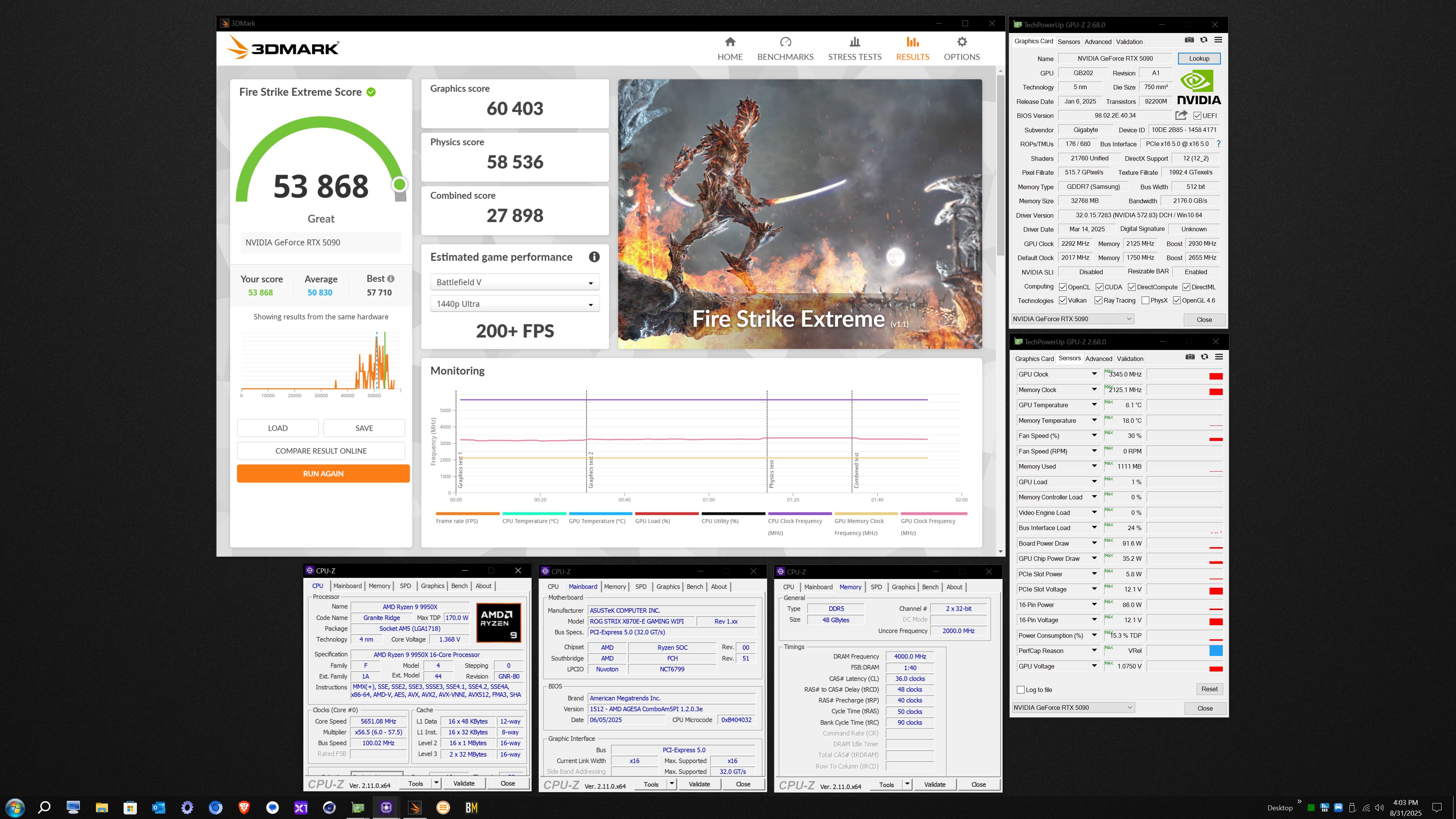

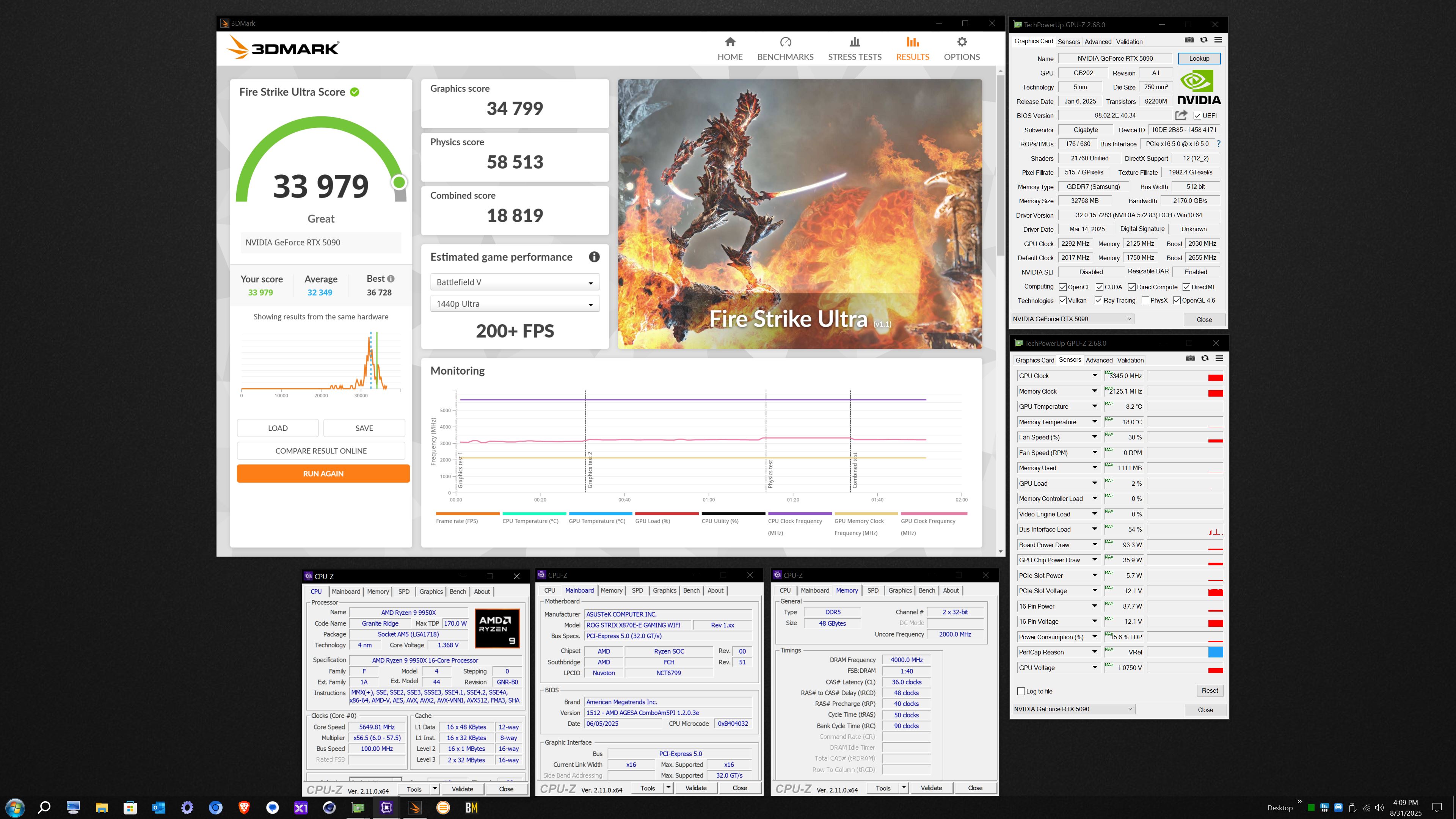

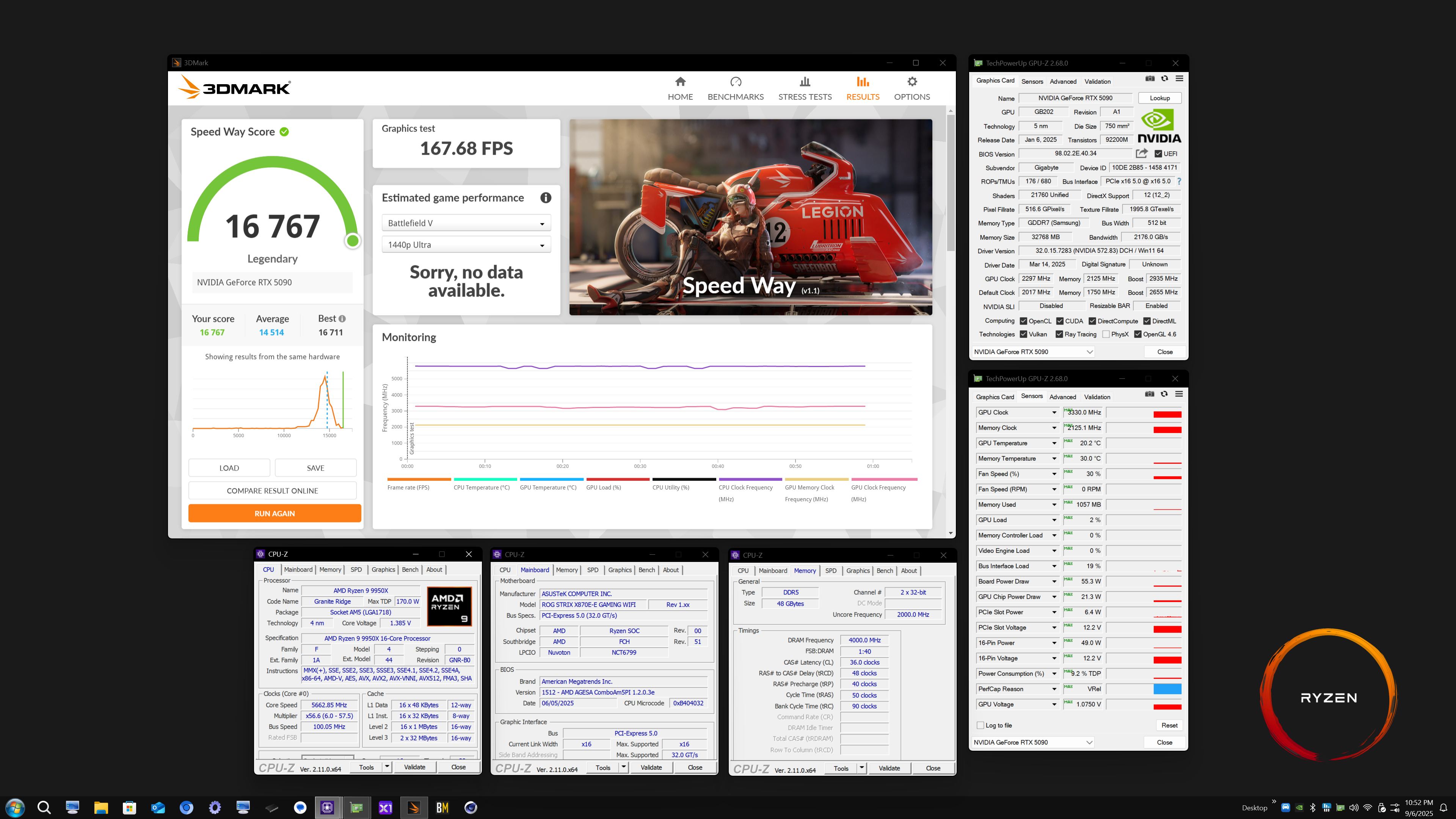

Speedway - https://www.3dmark.com/sw/2656446 | https://hwbot.org/benchmarks/3dmark_-_speed_way/submissions/5888556

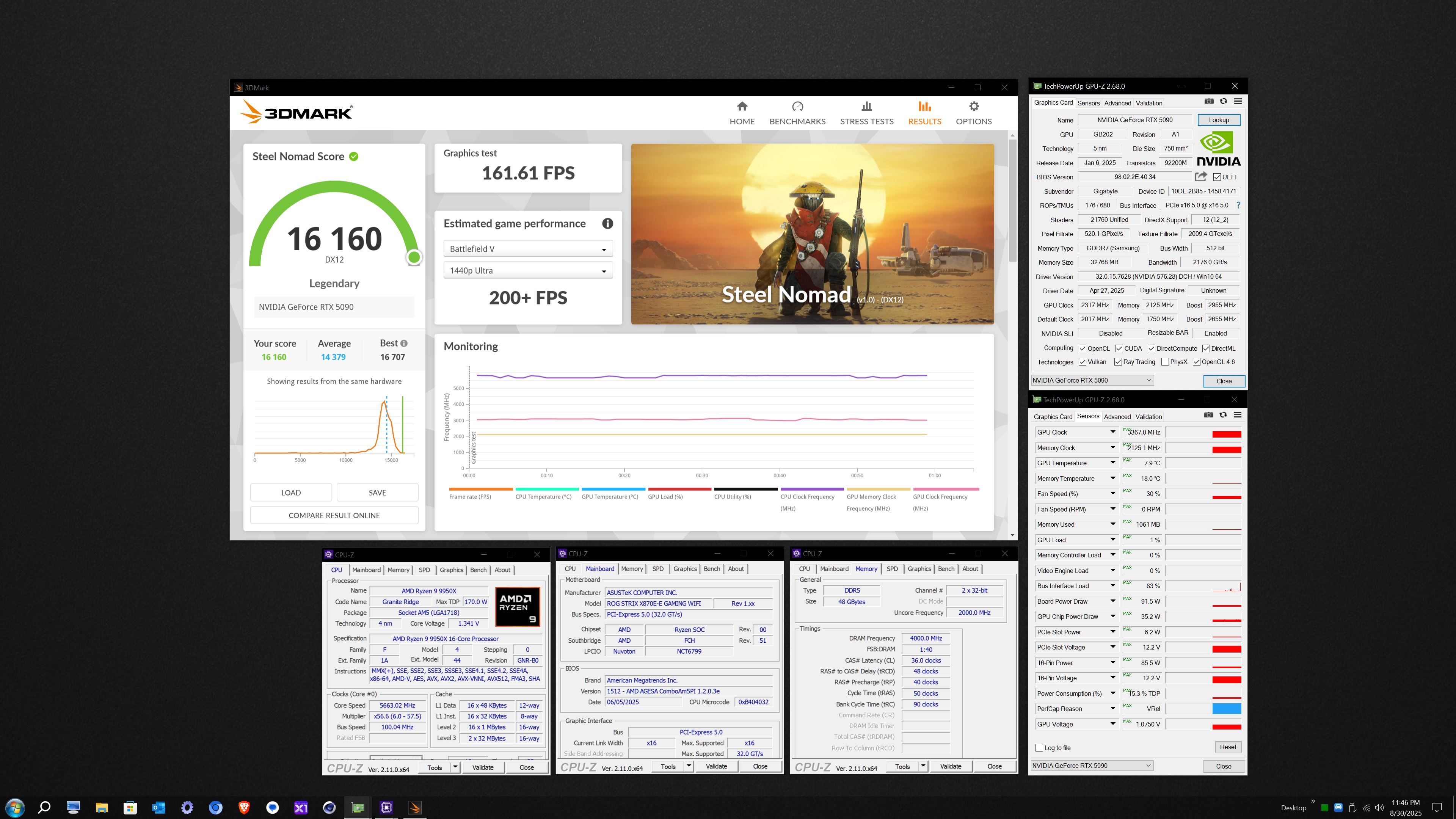

Steel Nomad (DX12) - https://www.3dmark.com/sn/8377250 | https://hwbot.org/benchmarks/3dmark_-_steel_nomad_dx12/submissions/5888557

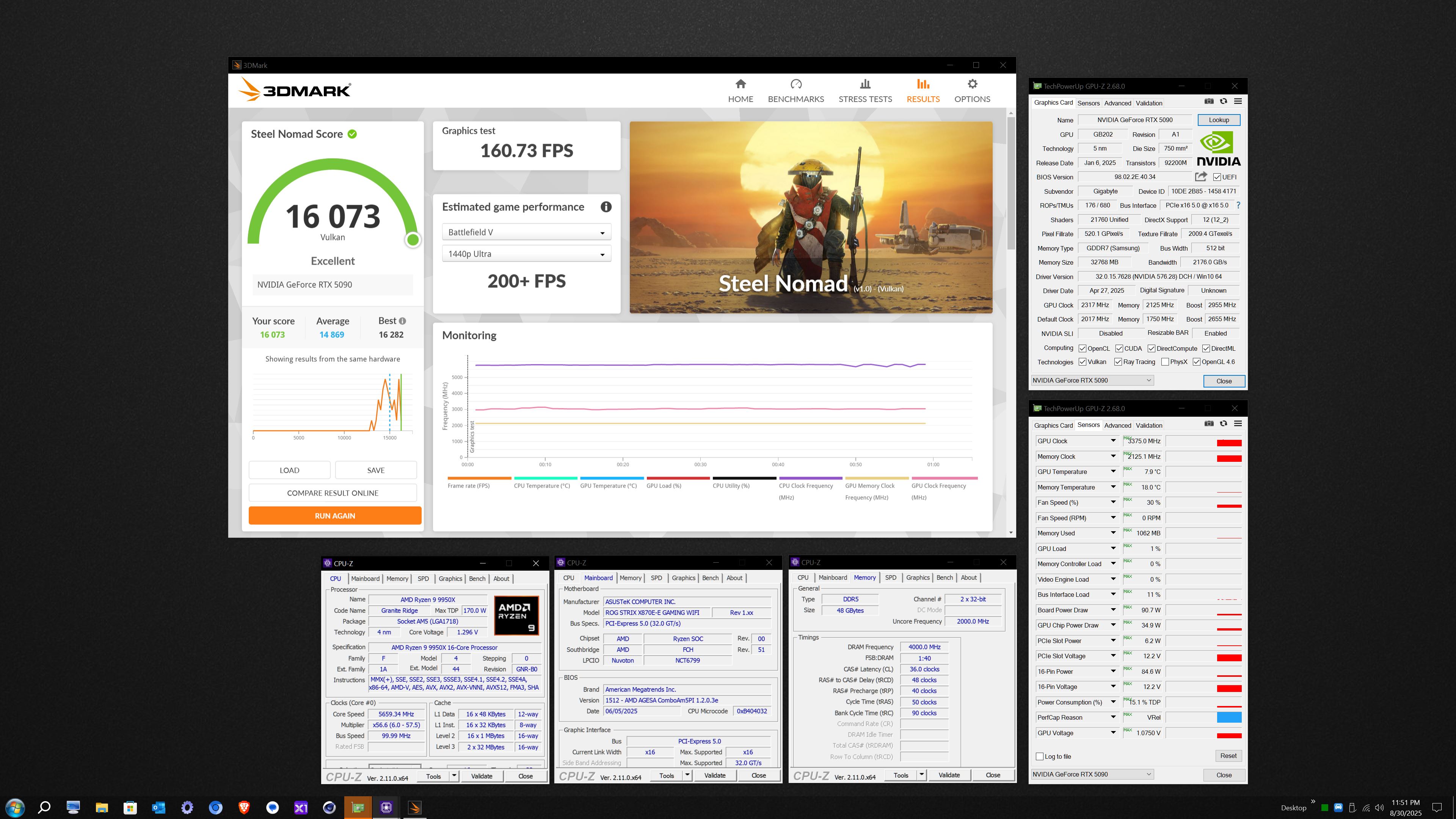

Steel Nomad (Vulkan) - https://www.3dmark.com/sn/8377374 | https://hwbot.org/benchmarks/3dmark_-_steel_nomad_vulkan/submissions/5888558?

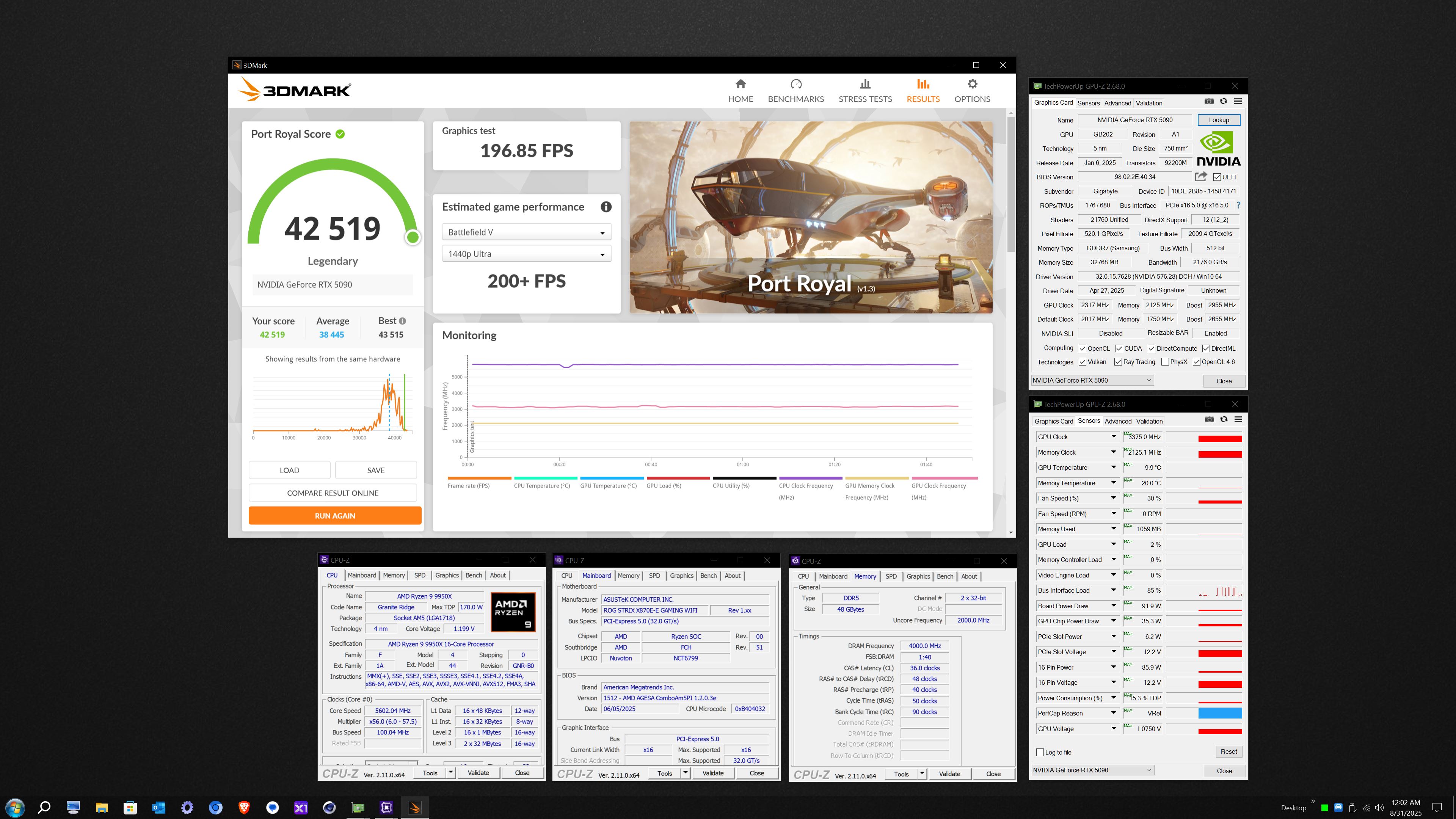

Port Royal - https://www.3dmark.com/pr/3623727 | https://hwbot.org/benchmarks/3dmark_-_port_royal/submissions/5888560?

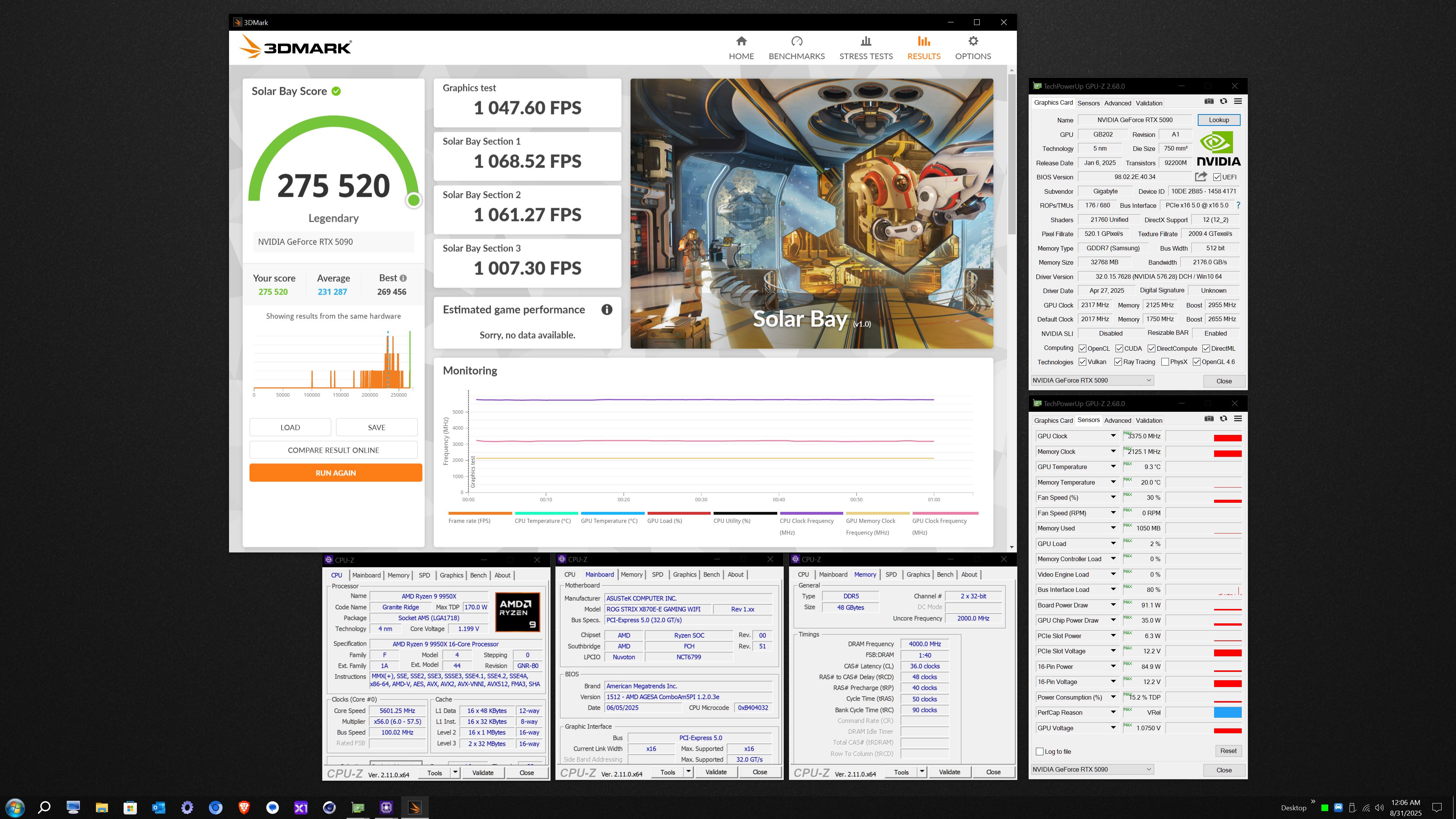

Solar Bay - https://www.3dmark.com/sb/367617 | https://hwbot.org/benchmarks/3dmark_-_solar_bay/submissions/5888561?

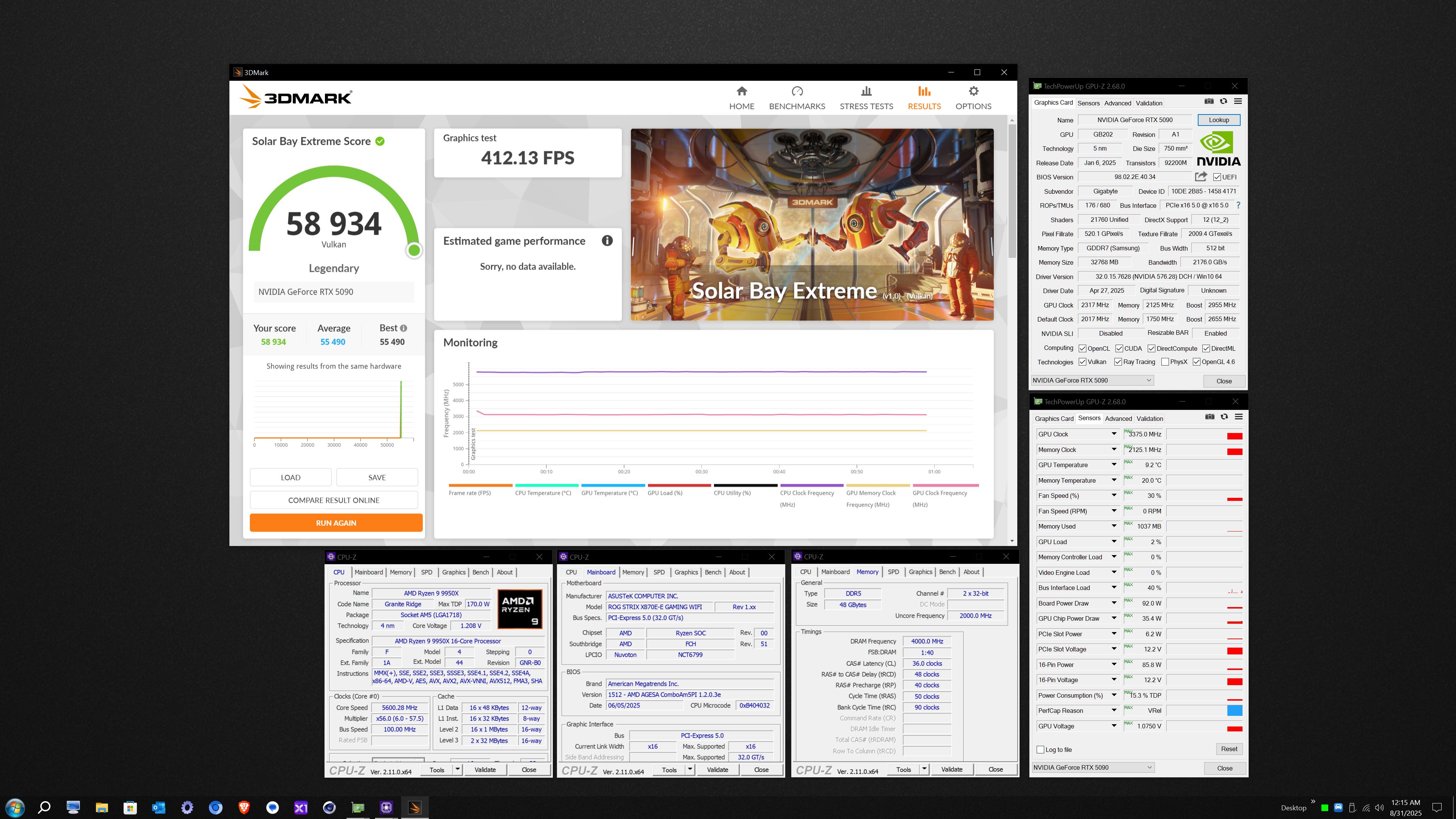

Solar Bay Extreme - http://www.3dmark.com/sb/367638 | https://hwbot.org/benchmarks/3dmark_-_solar_bay_extreme/submissions/5888562

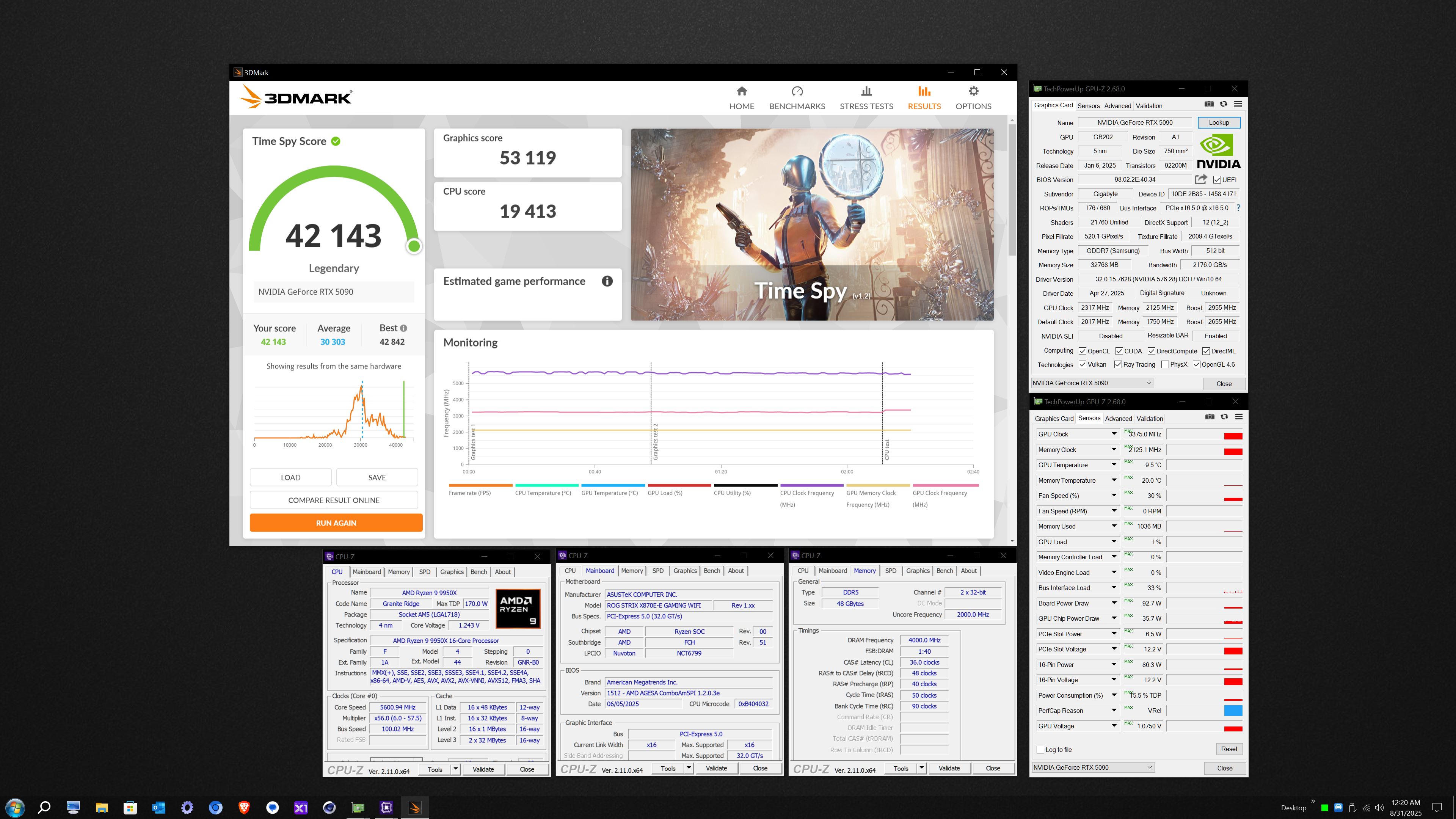

Time Spy - https://www.3dmark.com/spy/58438069 | https://hwbot.org/benchmarks/3dmark_-_time_spy/submissions/5888565

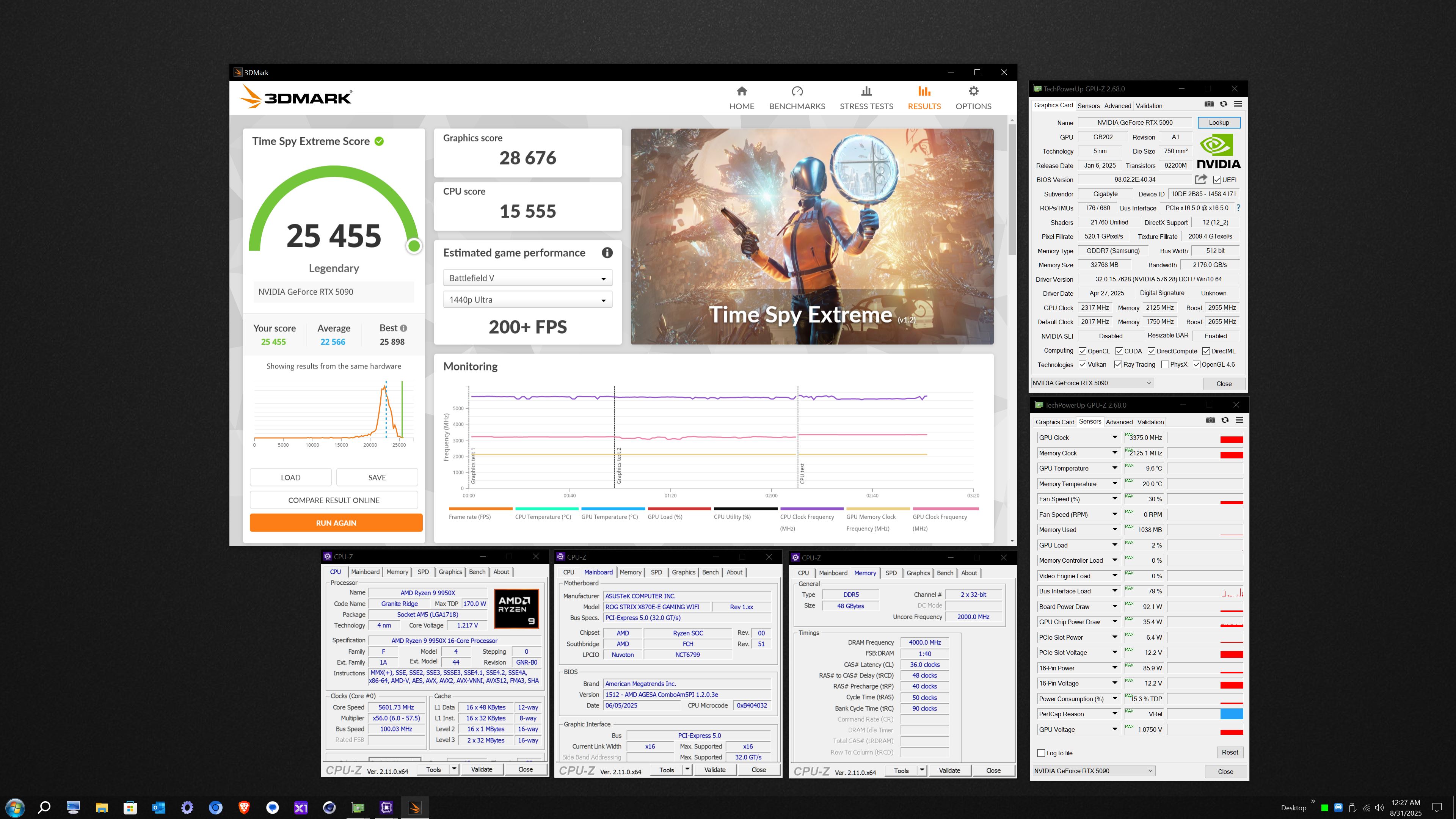

Time Spy Extreme - https://www.3dmark.com/spy/58438160 | https://hwbot.org/benchmarks/3dmark_-_time_spy_extreme/submissions/5888567

8 hours ago, Mr. Fox said:

4 hours ago, Mr. Fox said:

OK I think I am done now

Fire Strike - https://www.3dmark.com/fs/33747306 | https://hwbot.org/benchmarks/3dmark_-_fire_strike/submissions/5888849

Fire Strike Extreme - https://www.3dmark.com/fs/33747317 | https://hwbot.org/benchmarks/3dmark_-_fire_strike_extreme/submissions/5888851

Fire Strike Ultra - https://www.3dmark.com/fs/33747324 | https://hwbot.org/benchmarks/3dmark_-_fire_strike_ultra/submissions/5888853

Chiller mode ACTIVATED...

The Mrs. was ok with a living room benching session? 😁 My wife gives me deliberate side eye when I start to use the dining room table for projects.....

45 minutes ago, Mr. Fox said:

I only need two, but I will have spares. I am going to try the Jufes no-detection, easy-reversal sneaky technique this time instead of solder and see how that works. If it doesn't, I can always solder it after the fact. If we eventually get a 1kW XOC vBIOS leaked, then I can just peel them off without a trace and without any effect on the warranty. If it works well, I will send you a couple for your 5090 @electrosoft

https://www.mouser.com/ProductDetail/755-PMR100HZPFV2L00

Looking real good! Once that shunt is in place, that Zotac is going to really start to sing at full volume....

lol, me and shunting.... 🤣 Right after @Papusan and @Talon shunt theirs first. 🙂
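For anyone wondering why stacking a resistor on the shunt raises the effective power limit, the arithmetic is just two resistors in parallel. This is a minimal Python sketch under stated assumptions: the 5 mΩ values and the 575 W reported figure are hypothetical example inputs (not measured values from the Zotac board or the Mouser part linked above), and it assumes the card still divides the shunt voltage by the stock resistance, so readings shrink by R_eff / R_stock.

```python
def parallel(r1_ohm: float, r2_ohm: float) -> float:
    """Equivalent resistance of two resistors in parallel."""
    return (r1_ohm * r2_ohm) / (r1_ohm + r2_ohm)


def shunt_mod(stock_ohm: float, stacked_ohm: float, reported_w: float) -> tuple[float, float]:
    """Return (reading scale factor, estimated actual power in watts).

    Assumption: the controller computes current as V_shunt / stock_ohm,
    so after the mod it under-reads by a factor of R_eff / R_stock.
    """
    r_eff = parallel(stock_ohm, stacked_ohm)
    scale = r_eff / stock_ohm
    return scale, reported_w / scale


# Hypothetical values: 5 mOhm stock shunt with an identical 5 mOhm stacked on top.
scale, actual = shunt_mod(0.005, 0.005, 575.0)
print(f"card reads {scale:.0%} of true power; {575:.0f} W reported ~ {actual:.0f} W actual")
```

Stacking an equal value halves the reading; a lower stacked value cuts it further. It only shows how the reported number gets scaled; real draw is still bounded by voltage, cooling, and the card's other limits.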

14 hours ago, Papusan said:

This is what you said while Intel almost says Core Ultra (Arrow Lake) was a failure as desktop chips 😎 You and Intel's CFO can't both be correct, brother, LOL

One of you has to be wrong😂

As you know, we kind of fumbled the football on the desktop side, particularly high performance desktop side. So we’re as you kind of look at share on a dollar basis versus a unit basis, we don’t perform as well and it’s mostly because of this high end desktop business that we didn’t have a good offering this year.

Intel Confirms Arrow Lake Weakness, Bets on Nova Lake to Compete With AMD Zen 6

At the Deutsche Bank Technology Conference in 2025, Intel’s CFO David Zinsner explained that the company sees Arrow Lake as a step that didn’t quite hit the performance target for high-end desktops.

Well, he is clearly talking about the general (m)asses who didn't take the time to properly tune it.

It is 13th gen vs 7800X3D all over again. Everybody was screaming X3D superiority among the general end users and channels, and when I decided to tune them both up, guess what? The 13900KS made massive performance improvements and the 7800X3D only marginally so, since its main strength lies in that cache.

I'm not saying an Ultra 285k is going to slay a 9800X3D, but it can definitely gain a lot of ground when properly tuned. I'm just fighting the urge to do it all over again like before because that itch is picking up.....

6 hours ago, win32asmguy said:

Got the little Claw 8 set up with WoW on the stock install, and performance is pretty impressive so far! It holds around 108fps at your testing spot near the Tazavesh entrance. That is unplugged with 20W/19W PL1/PL2. ConsolePort (the addon to streamline using the game with a controller) needs a bit of setup, so I just hooked up a keyboard/mouse to test. I also have Amazon delivering a screen protector and case for it today and will swap to a 2TB WD SN770m along with a clean install of W11.

For fun I ran AIDA64 on it and memory latency was 96ns, better than most Arrow Lake HX I have seen! So maybe the LPDDR5X is doing ok here. In this case the device has 32GB soldered which is sufficient and the form factor does not have space for SODIMM or CAMM.

Eluktronics has also posted their 9955HX3D laptops for sale, but only in pre-built configurations due to memory compatibility issues:

https://www.eluktronics.com/HYDROC-16-9955HX3D-MLED-5090-32G-2T

Hopefully once Premamod is available they can do a proper Intel vs. AMD comparison with both systems tuned. My guess is the HX3D will do better than Intel in performance but have worse battery life. I wonder if it would consistently have better performance than the 275HX during world bosses / raids / busy Dornogal. From your positive testing with the 9800X3D it seems like it very well could. I even get a lot of stuttering upon initial login while switching between alts for profession activities and it would be nice if it handled that better as well.

Whoa! I see BB has them for $1k. Man, it does look sweet. Let me know if you're able to fully sort out the console controls to actually play, because if not, it would still make more sense to get a lower powered, but capable, laptop and use that while driving in the car. If console controls can actually translate to a real, valid gaming experience, even just for dailies, farming, WQs, etc., that is definitely something I would think about picking up.

Crazy that the latency is better than both my 275hx laptops lol.

Man, I wish they offered that in an 18" model. Right now, I plan on riding this free Alienware 18 for a while. I might give the Raider 18 9955HX3D a try down the road once prices become less crazy, or even the Raider 285hx + 5090 since it routinely drops to $2999.99. Wouldn't mind snagging an open box at MC and then using their credit on top of it for another 5% off.

The fan noise difference when testing in store really caught me off guard, and I'm not sure if MSI switched to slightly different fans or the 285HX is just boosting more sanely than AMD models tend to in laptops, which makes them harder to control even with extra BIOS functionality.

Are you experiencing stuttering on your Hydroc G2 playing WoW? I really haven't had a chance to "play" on my Alienware 275hx + 5070ti to see if it stutters or has issues. I know that Raider 18 7945HX3D didn't stutter during raids or gaming on the newest BIOS.

2 hours ago, Mr. Fox said:

Well, my "official" benchmarks were with a portable AC ramming 34°F (1°C) air directly into the GPU, so the water cooler is not going to get it that cold due to the ambient temperatures. I can compare against the benchmarks I ran without the AC unit. I think I have a few of those. Boost clocks are absolutely staying higher on the waterblock than they were on normal air cooling. The GPU and memory are about 20°C cooler under load, the memory about 8-10°C and essentially equalized with GPU core temps now. The memory was generally 10-15°C warmer than the core. I hate NVIDIA's "normal operating temperature" GPU boost limits as much today as I did when they introduced that crappiness into the mix. Things were better when clocks ran full blast until you reached TJMax. Now that stupid cancer has spread to CPUs.

I did not take pictures and figured I would do that when I do the shunt mod. The resistors are very close to the 12VHPWR connector, so I am going to have to be very careful not to get that too hot. I had multiple doctor appointments yesterday so I was trying to hurry to get it done. I am assuming I need to shunt the two right next to the power connector and no others.

I am considering the idea of doing a shunt mod and installing an ElmorLabs EVC2SE for voltage control. Part of me wants to do it real bad and part of me doesn't care anymore. I haven't decided yet. I am less motivated to do some of these things than I used to be. There are days that I question my intelligence for even buying the 5090. It was definitely not something I needed and my passion for this is starting to dwindle. But, when I am playing with it I am glad I did.

Ah, ok. I didn't know if you collected some data pre "AC to the face" of your 5090. Maybe ambient vs AC direct intake vs blocked and cooled ambient.

I do think that as nodes shrink to insanely small sizes, thermals continue to climb and the red line recedes, the safety margins are getting tighter and tighter, to the detriment of those of us who like to overclock. Exploding AMD chips and 13th/14th gen issues aren't helping enthusiasts....enthuse properly either. Throw in natural boost algorithms, and the gap between natural boosting and hand-tuned OCs gets smaller every generation.

Once you shunt, you will quickly know if your voltage max is what it is now or if there is a little bit of extra in the tank that will open up once you give it that extra juice. I've long said that voltage matters, even though a few have argued against it, and as time passes we are finding over and over that the higher your voltage max, the better your card will perform on average, which is only logical (in tandem with other factors, of course).

There is a reason most of the leaderboard members are using Elmor EVCs to help pump up their voltage. Like I said weeks ago on the OC forums, "voltage matters."

So an Elmor EVC might not be a bad idea if you're truly looking to push your card, but then again a chiller would also go hand in hand. 🤣

But again, we won't know where it lands till after the shunt.

2 minutes ago, Mr. Fox said:

Yet again, cooling is king.... It will definitely be interesting to see the shunt results with the exact same settings....

What were the results with AC blasting in? Have you entertained the thought of maybe moving back to a chiller?

32 minutes ago, Mr. Fox said:

I just finished installing the 5090 Core block. It's pretty decent and it made a huge improvement in temperatures. It is not nearly as polished and does not have the same degree of aesthetic elegance as the 4090 Core. (It does not conceal the PCB and have the same high-end feel to it, but it is still really nice.) The only thing Alphacool did that I would consider stupid is put the warranty void seal on the front, directly in plain view, instead of on the motherboard side. After I have used it long enough to know there is no problem with it I am peeling that off... idiots. I wish the block assembly was 1.5-2.0 inches longer. It is just barely long enough to reach the GPU support bracket attached to the motherboard. (It is about a half inch narrower than the motherboard.) But, I bet SFF jockeys are probably glad it's not longer.

I am going to have to order shunt resistors. I checked my stash and mine are more than 2 mOhm. Tearing down the Solid GPU I have to say that I am highly impressed. The GPU and the air cooler build quality are every bit as excellent and high precision quality as the 4090 Suprim. I still think it was a poor value, but I am glad that I bought this particular model and not one of the other "inexpensive" options. Zotac did an amazing job on it. The "Solid" name is very fitting.

You can see that stupid decal to the left of the fitting manifold, straddling the cold plate and acrylic.

Some bubbles still need to find their way out.

Looking good!

I'll be interested in your air cooler vs blocked results before the shunt mod obliterates both of them.

Any pics of the bare PCB before block mounting?

8 hours ago, Papusan said:

Without Gold but still pricier than the hyper expensive Astral.

Asus will go the full mile to maximize profits due to the special design. Only $100 extra and you could buy an MSRP 5090 card instead of this greedy move from Asus. $1899, or 90% above MSRP, for a 5080 is the bottom of the barrel from Asus.

Want it @electrosoft? Maybe you also win the coil whine lottery this time. Maybe worth it then?🤐 No one will see if you have a 5080 or 5090 in your pc box. They probably think you bought a 5090 for cheap. Open box as they say 😁

I'll step aside so you can get it @Papusan. Maybe Asus will offer a 5080 Dhahab Crocs Edition. 🙂

Sheesh, $3500 for a BTF edition of a standard Astral 5090 = $3731 in my neck of the woods.

$1900 for a 5080 is INSANE.....
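For the record, Papusan's "90% above MSRP" math checks out against NVIDIA's launch MSRPs ($999 for the RTX 5080, $1,999 for the RTX 5090). A quick Python sketch, using only those two MSRPs and the $1899 Asus price quoted above:

```python
# NVIDIA launch MSRPs in USD.
MSRP = {"RTX 5080": 999.0, "RTX 5090": 1999.0}


def markup_pct(price: float, msrp: float) -> float:
    """Percent above MSRP (negative if the price is below MSRP)."""
    return (price - msrp) / msrp * 100


asus_5080 = 1899.0  # the Asus 5080 price quoted above
print(f"5080 at ${asus_5080:.0f}: {markup_pct(asus_5080, MSRP['RTX 5080']):.0f}% over MSRP")
print(f"Gap to an MSRP 5090: ${MSRP['RTX 5090'] - asus_5080:.0f}")
```

Both of Papusan's figures (about 90% over MSRP, and $100 short of an MSRP 5090) fall straight out of the arithmetic.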

6 hours ago, win32asmguy said:

Well, Best Buy has the Alienware 51 18 with the 5090 on sale this week for the same as Micro Center, so I am going to try that out instead! If it's on par with the Hydroc G2 and does not have any issues, I would probably end up pulling it apart and replacing the E31 compound with PTM7950.

That testing spot definitely maxes out the GPU even without RT enabled.

Nice! I'll be looking forward to the results to draw some comparisons especially that 5090 stomping on the 5070ti in mine. I wouldn't mind either going back up to MC to play with that Raider a bit more at $2999.99 or picking one up to test drive.

I think you'll be pleased with the thermals and noise especially compared to the Raider.

And yeah, Tazavesh takes NO prisoners, including the 5090. It hits harder than any other area overall in the game. Makes for good static testing without player data muddying the results.

I still stand by Deus Ex: Mankind Divided DX12 runs at 4K with everything maxed; they also give some good, old-fashioned raster-only results and cap out a 5090 too.

3 hours ago, Mr. Fox said:

It was difficult and stressful to resolve. Thank goodness there are always a few good people that care enough to help, but sometimes finding the right button to push is difficult. Sometimes you never do. I think I got lucky this time.

I called her out by name in the survey that wasn't positive other than her involvement.

Whew, all's well that ends well. It still boggles my mind when you're trying to get something solved and have to keep going through CSRs who should all know how to fix it, until you finally stumble upon one who is competent at their job.

In the past, I would get their extensions or ask for a way to contact them directly to help resolve future problems too.

Now the real fun begins! Bring on the results. 🙂

((Cue Paul's Hardware News Intro lol))

------------------------------------------------------

Newest round of 5090 price cuts old and new:

* = new

Gigabyte Windforce 5090 was $2639 now $2199*

MSI Ventus 5090 was $2449.99 now $2299

Zotac Solid OC 5090 was $2399.99 now $2249*

Zotac AMP Extreme 5090 was $2599 now $2349*

MSI Vanguard ($2669) and Gaming Trio ($2559) now $2499

Gigabyte Gaming 5090 was $2919 now $2599*

MSI Suprim 5090 was $3199 now $2699

Gigabyte Master 5090 was $2999 now $2799

MSI Liquid 5090 was $3279 now $2999*

Finally the mighty MSI Liquid 5090 drops in price and the Windforce drop is pretty substantial along with Zotac trending down back towards MSRP. Gigabyte Gaming finally became more realistic in light of Master prices already trending down by $200.
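To put numbers on the cuts above, here is a quick Python sketch that computes the dollar and percent drop for each card. It is plain arithmetic on the prices listed in this post (the Vanguard/Gaming Trio line is split into two rows, since both land at $2499); nothing is pulled from a retailer.

```python
# (card, old price, new price) in USD, as listed in the post above.
PRICES = [
    ("Gigabyte Windforce 5090", 2639.00, 2199.00),
    ("MSI Ventus 5090", 2449.99, 2299.00),
    ("Zotac Solid OC 5090", 2399.99, 2249.00),
    ("Zotac AMP Extreme 5090", 2599.00, 2349.00),
    ("MSI Vanguard 5090", 2669.00, 2499.00),
    ("MSI Gaming Trio 5090", 2559.00, 2499.00),
    ("Gigabyte Gaming 5090", 2919.00, 2599.00),
    ("MSI Suprim 5090", 3199.00, 2699.00),
    ("Gigabyte Master 5090", 2999.00, 2799.00),
    ("MSI Liquid 5090", 3279.00, 2999.00),
]


def price_drops(rows):
    """Return (card, dollars off, percent off), largest percent drop first."""
    drops = [(name, old - new, (old - new) / old * 100) for name, old, new in rows]
    return sorted(drops, key=lambda d: d[2], reverse=True)


for name, dollars, pct in price_drops(PRICES):
    print(f"{name:<26} -${dollars:>7.2f}  ({pct:4.1f}% off)")
```

Sorted by percentage, the Windforce's $440 cut (about 16.7%) edges out the Suprim's bigger $500 cut (about 15.6%), and the Gaming Trio's $60 drop (about 2.3%) is the smallest.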

Watched a 5090FE take longer than it should to sell over on the OC forums (and Reddit and elsewhere) which was surprising as it was priced at $2250 shipped.

Insane markups, market uncertainties, the economy still slowly teetering on a recession, and many people realizing the pricing on these cards is just stupid are all in play, along with other factors.

6000 Pros are everywhere (literally), and usually you can call and get a good discount even off the $8k price (down from the initial $10k).

---------------------------------------------

Again, Intel is quietly making nice inroads with the Ultra series chips. In handhelds they are really coming into their own.....

Ultra desktop processors are actually well rounded if you don't fixate on "gaming brah!", and even there we're seeing that, properly tuned, they are actually pretty decent.

-------------------------------

22 hours ago, win32asmguy said:

Yeah I was thinking about that too. This:

$529 - 285K (Micro Center)

$449 - Asrock Taichi OCF (Central Computers)

$149 - KingBank 2x24GB 8400MT (Amazon)

Although it would mean selling off the Xeon and 12900KS setup. I am not sure how a tuned 14900K would compare to the 285K but my current Z690 board may hold it back.

How is the Kingston Fury kit working in the Alienware? XMP profile working? Memory latency hopefully under 100ns? You might be able to use XTU to tune D2D and NGU for a little more boost.

It seems like the Dell Pro Max 18 Plus is probably not happening. They cancelled my order due to configuration issues. It was a quoted order from a business sales rep so I am not sure what to think.

I'm trying to decide if I want to go for a super cheap 265k + MB combo since I have these KB sticks on the shelf atm. Micro Center has some sub $500 combos with memory, MB and chip. I would just use the 6000 sticks in an AM5 buildout with a 9600x + B650 ITX board sitting on the shelf atm.

Still haven't had a chance to get to it yet on the Alienware with other stuff on deck atm, but soon. 🙂

Are you going to try and re-order it in a different config? What were the config issues with your particular order?

------------------------------------

Yes, your Mac Studio can be a monster power hog pulling 300W+, which is also why I always recommend a wall meter to see how much you're actually pulling.

Remember, the PSU in the Mac Studio M4 is rated at 480w. There's a reason for that....

----------------------------------

New testing spot in WoW: step right into Tazavesh and a little bit forward to get the gate blur off screen. It hits almost 50% harder than the Oribos spot, and many places in Tazavesh routinely cap out the 5090 at 100% utilization at 4k Ultra 10, RT High (max):

Same spot with the Ventus, with each card set to its stable OC (+300 Vanguard, +350 Ventus), capped out at 130fps.

PL100 vs PL104 made no difference here for comparison to the Ventus at PL100.

*Official Benchmark Thread* - Post it here or it didn't happen :D

in Desktop Hardware

Most modern users, even over on OC, prefer clean cable runs to their 5090s and below. Most are either using the supplied 12vhpwr PSU cable or custom ordering one for their PSU with the connector included.

Just about every model in the Nvidia consumer stack now uses it.

Who in this forum is using the tentacle and running it from 4x PCIe 8-pin connectors to the 5090 besides me?

Unfortunately we're three generations in now on Nvidia and the 12vhpwr connector.

Nvidia dominates the market, so it makes sense that PSU makers are starting to default to having one on their PSUs.

What I would like to see, though, is always having at least 4x 8-pin connectors alongside it, especially on 850W+ models, but the bean counters have run their numbers and some models are sacrificing them for $$$.

For lesser wattage models I can kind of give it a pass.

So far we've only seen two AMD models with it: The Taichi and the Nitro+ 9070xt models. I suspect more will adopt it for future models. 😞