Posts posted by electrosoft

-


3 hours ago, Mr. Fox said:

Yes it is. Not sure why. I think there is something wrong with the benchmark to be honest. I think it is doing something weird with cache or memory. It's not as stressful as Cinebench R23. My CPU doesn't get as hot as R23, but it still takes lots of extra voltage for some reason. It's strange. I haven't tried lowering the cache ratio or decreasing the memory overclock to see if the behavior changes, but something unusual is going on. It seems to waste a bit of time "preparing" for the benchmark before it starts. Not sure what that's about. Sometimes it won't start rendering. Sometimes it stops responding without crashing or freezing. Other times it runs normally. I think it's just buggy.

On top of that, I find it really annoying to have to remember to manually change it from a timed stress test to benchmark using the "off" option. I just tried to open it and got a BSOD a minute ago. Yet, I can run any other version of Cinebench, OCCT or AIDA64 stress test without issue, so yeah, it's buggy.

Does it exhibit the same behaviors at complete stock? It might be buggy (wouldn't be the first time) or it might be rooting out mild little instabilities in overclocks somewhere.

-


5 hours ago, jaybee83 said:

yeah thats the tachyon though, seems like. barely available anywhere, but good to know u have more than one 😄

i for one just crapped out on the cpu imc and/or the mobo, cant get 6400 or 8000 stable for the life of me. so now im tightening things up for 6200 and 7800 instead.

i do see significant improvements in timings tho with the new 8200-48gb kit vs. my previous 6600-32gb kit.

3 hours ago, Raiderman said:

If you can run PBO voltage curve at -30 all cores with CB 23, I would say it's your board and not imc. I am bench stable at 8000 xmp on, and 6400. I have benched 6600, but not stable. Of course that's only with very limited time playing with it.

3 hours ago, Mr. Fox said:

If both of your motherboards are 4-DIMM and the Tachyon is 2-DIMM that more than likely accounts for most, if not all, of your limited memory overclock capacity if the scenario is the same as Intel. I'm not aware of any amount of skill that allows a 4-DIMM Intel mobo to match 2-DIMM models. I think it's totally illogical that they even manufacture 4-DIMM enthusiast mobos. I would avoid 4-DIMM boards like a plague. This is why the Tachyon, Unify-X, Dark and Apex boards rule, at least on the Team Blue side of the house. They should stop manufacturing 4-DIMM boards for gaming and enthusiast applications. They are a waste of money.

My 12th Gen Celeron 2C/2T netbook CPU runs 8000 stable on the Dark and Apex. IMC and mobo quality certainly matters, but not as much as topology.

100% spot on.

This cannot be overstated when you're really pushing your memory, regardless of CPU platform.

When the new gear settings were announced, I posted quite a while back, even calling motherboard quality into the equation as a factor now that frequencies are so high, along with what real-world results we would actually see. So far, outside of benchmarks, those seem to be low/limited for AMD, but hey, take every bit you can get!

-


Jufes doing a fully tuned 13900ks vs 7800X3D comparison with tuned memory and an OC'd 4090 vs 7900xtx, swapping the GPUs between platforms to boot.

-

2

2

-

-

1 hour ago, Talon said:

Starfield seems to absolutely love dual ranked ram. I am seeing about 10fps higher than my single rank ram that is running at higher speed.

Microcenter had some of the 2x32gb stuff in stock and open box for $153 this morning, insane deal. I reserved some and ran over and unfortunately when I arrived and checked the package, it was M-Die. They had more open box kits in the back for the same price so I decided to go check those. Sure enough they had some A-Die kits for $153. I grabbed them and swapped them into my Dark Z690 with my crappy 13900K (crap IMC).

Running 7400 CL34 dual ranked 64gb for $153. The package had 3x returned stickers all over it, and even a RTV (return to vendor) and RRTV (tested by GSkill and Refused Return To Vendor). They looked sus lol. Sure enough they are rockstars like my other kit I got earlier this year for $270?.

Hoping the IMC is god like. Apparently Asus has stopped production of OG Z790 Apex and a refresh is coming with even higher memory tuning. I saw a product listing for the new Gigabyte boards claiming 8800+ memory OCs.

This would make sense, as Fallout 76 on the Creation Engine absolutely loves dual-rank RAM too. Just switching sticks from single-rank M-die 2x16GB to A-die 2x32GB resulted in a ~13% increase in performance, both at 6000 on the 7800X3D with similar timings. I then tightened up the A-die even more for a ~15% increase overall.

Which G.Skill sticks did you get exactly?

If Asus progresses with memory like they did from the Z690 Apex to the Z790 Apex, the Z790M Apex should be a monster, especially if Intel poured what they couldn't do in overall frequency bumps into a much better IMC with 14th gen.

-


Asus Matrix 4090 unboxing preview (no benchmarks). Definitely visually pleasing, but I want to know how it performs.

-


3 hours ago, ryan said:

this. I get 30fps because I chose to get a 3060 midrange laptop, I knew what I was getting into before I bought it. I'm not going to buy an electric vehicle then complain I don't have a 1000km range whilst driving from vancouver to toronto. like I think if you're going to talk about something you should not do like the old saying judging a book by its cover. It's a good game, is it game of the year? heck no. but that is true of all games except for the game of the year game.

Gets old when certain members just chime in to argue using their opinion as a fact, and the logic of, I thought of this therefore it must be fact. Not everyone will like this game, and it's a miracle I like it, as I don't play rpgs. more into crysis esque games. but I'm not going to comment on a game I haven't given any time to.

I see this time and time again, user posts an opinion and someone else has a contrary opinion its like they treated it as an attack on their character, and respond by personal insults the same way a 5 year old child would.

When I find someone's argument has lost logical cohesion and/or it is getting personal, I just stop responding and move on. Many times I have something to say but I choose not to and reserve my brain pan for better pursuits. It's OK to not engage. It is also OK to not need the last word. I still consider them my "net friend", no hard feelings. It is just that the current thread is headed in the wrong/non-productive direction. I've adopted this approach for well over two decades now across all platforms and have doubled (or tripled) down on it over the last few years, and it has worked wonders and made social media so much more fun to use.

3 hours ago, Reciever said:

If someone is personally insulting you or anyone on this forum, there is a report function and just as I have in every event, will evaluate whats happening before taking action. If this is in regards to Reddit and/or Youtube Comments, well...

Good Luck!

Notebooktalk is like the kiddie pool compared to the vitriol and rancor that is Reddit and Youtube comments! 🤣

-


34 minutes ago, johnksss said:

30 minutes ago, Mr. Fox said:

He's got some mad soldering skills so if he can't do it I bet it can't be done.

Nice shirt brother.

25 minutes ago, johnksss said:

I break it out on special occasions.😁

He was working on a video for soldering pins back on a 5900 AMD CPU. 😲

The shirt was the first thing I peeped, before I even noticed it was Alex... I don't know if that's a good thing. 😂

-


1 hour ago, seanwee said:

Bethesda has always been infamous for buggy and unoptimised games, so nothing new here. They *might* get some fixed when Starfield Special Edition comes out in 5 years.

*Lol*

39 minutes ago, Mr. Fox said:

Yet people keep giving them money. Rewarding bad behavior guarantees the continuance of it.

I'm glad it came free with my 6700xt purchase. 🙂

I haven't had time to really settle in and play beyond an hour or two between other game stuff, work stuff and project stuff, but I'll eventually get in there and play through. It took me a full year to finally play the last Deus Ex.

I figure with patches and driver optimizations it can only get better.

-


Very nice video showing Starfield performance with memory and CPU tuning/overclocks on the 5800X3D, 7800X3D and 13900k. It also shows DDR4 vs DDR5 differences on the 13900k, from low settings to tighter tuned memory and higher frequencies (up to 8200 tuned for Intel, 8000 for the 7800X3D and 4100 on the 5800X3D).

DDR5 has really come into its own and those early days of DDR4 having pockets of superiority are rapidly drying up.

The performance gains can be pretty substantial depending on system tuning. He also shows the 7800X3D with memory from 5600 up to 8000, and what I suspected is showing: going from 6400 to 8000 yields hardly any in-game increase for AMD (results near the margin of error).

-


4 minutes ago, Etern4l said:

That was never quite in question. Those presumably NVidia sponsored influencers are just trying to misdirect us here. The underlying issue is the market dominance - we don’t want NVidia or any other company to be in that position, or they will obviously abuse it.There is no point worrying about what AMD, never mind Intel, would do if they achieved a dominant position in the GPU market, they are nowhere near that point. Right now NVidia is way ahead in gaming (taking into account hardware and software), and enjoying almost strict monopoly on the compute side. The optimal state does not involve another company taking their place, but more than one company offering comparable products in every segment - this would automatically optimise prices as well, unless they tried to form a cartel, which is a criminal offence/ felony (unless it’s OPEC lol).

Right now, Nvidia is king. You are seeking some type of rule, competition or consumerism to thwart them from pricing their items as they see fit?

Rule = I definitely do not want the government stepping in unless they are breaking a known law or hold a true monopoly. Having ~80% market share isn't it.

Competition = Tell AMD and Intel to step up their game. It isn't Nvidia's fault they are still so behind.

Consumerism = We've covered this already. Free will and buyers can buy what they want based upon their own criteria. A buyer is free to purchase from any of the three or just go buy a console.

The market is working as intended.

They don't have a monopoly just because they have the superior product. What they do have is superior market share because of their superior products.

Now, if we find out they are using strong-arm tactics like Intel did years ago to try and stop Dell and other OEMs/VARs from selling AMD, or similar thug-like actions, that's another story: it is completely unacceptable and they should be punished appropriately.

-


22 hours ago, Papusan said:

I don’t want support this with my money. Nope. Not me. And AMD try always follow Nvidia’s prices. Yep, slightly lower but if they have the chance they will do as they did with Ryzen 9 5950X. First mainstream processor matching $800 due no competition. They are not any better than Nvidia. Greedy bastards that aren’t willing to try be on top. Just tune prices so high they can vs what they offer. And what with the performance uplift for some of their SKUs. 3-5% above it’s predecessor two years ago. A joke! And the last nail… I won’t support help paying Fu. Azor’s paycheck. Nope. Not me.

Yup. No in hell I support Azor. More than good enough reason to avoid AMD.

Value and Conclusion

Only small gen-over-gen performance improvement.

but AMD has placed a non-functional dummy die here instead, to provide structural stability.

-

Oc’ing

From here on slowly reduce maximum voltage until your card becomes unstable running benchmarks or games. Undervolting is a must for RDNA3, because that will free up some power headroom for the clock algorithm to push frequencies higher. These cards typically get unstable around 950 mV, so there's a lot of headroom (the default is 1.15 V).

https://www.techpowerup.com/review/powercolor-radeon-rx-7800-xt-hellhound/40.html

Yep, I've always said AMD is no different: if they were in control of the Golden Rule, they would extract as much value out of their products as possible, as we saw with AM4 and 5950X pricing. If they suddenly had the dominant GPU, those prices would go through the roof.

Brett sums it up nicely here (timestamped for your convenience):

-


3 hours ago, tps3443 said:

I’m considering getting a RTX4080 or a 7900XT Nitro+

The 7900XT Nitro+ competes dead on with my 3090 Kingpin in all synthetic benchmarks. Timespy/Timespy Extreme/ Port Royal etc. But, I think it would be better because far less power usage, and faster Starfield performance lol.

The RTX4080 is faster in everything for the most part. Both cost about the same.

I feel sick buying a RTX4080 lol. And I’m not buying a 4090. I’m never spending more than $1000 on a GPU ever again.

Any thoughts? Is this even worth it?

I'm not seeing that as a worthy upgrade. 7900xt 20GB is a hard no and the 16GB capped 4080 is even worse.

Stick with your 3090, get a 4090 or get a 7900xtx.

You'd get the 7900xt with less VRAM and basically 3090 raster performance, for a game that just launched with sloppy Nvidia code and/or AMD optimizations (it's sponsored by AMD) and that will undoubtedly get refinements which could go... well... any which way. I would just wait a few months and see how things pan out patch/driver-update-wise, THEN decide if you want to get a new GPU.

If...

IF you are going to upgrade, grab a 4090 or 7900xtx.

Since you say you're never spending more than $1k on a GPU ever again then the answer is pretty clear: 7900xtx.

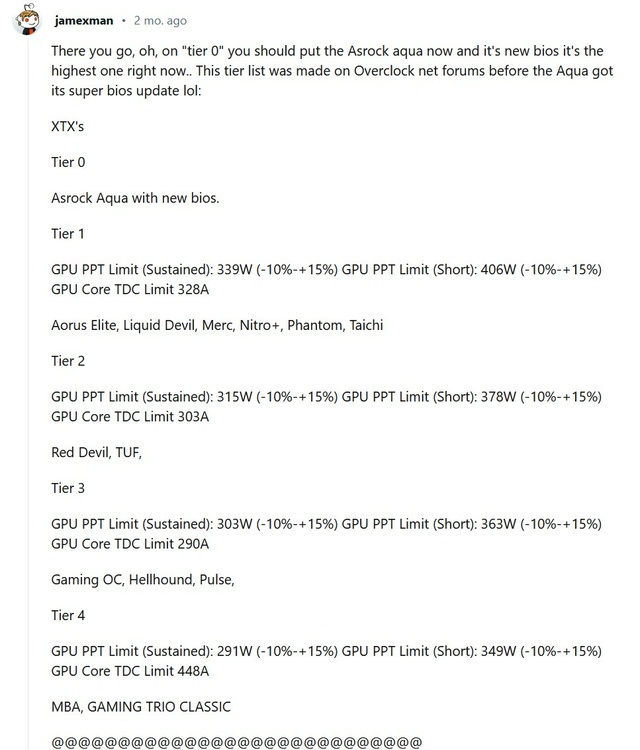

If you go with a 7900xtx, make sure to get a model with max power limits since I assume you're going to block and OC it.

Tier 1 favs for me are Taichi, Nitro+ and Devil. I'd pass on the Phantom and Elite.

You can flash the Aqua vBIOS on the Taichi and maybe some of the other cards, but it might require a programmer for the initial Aqua vBIOS flash; after that you can flash the Aqua Super/Extreme BIOS if you want. Blocked and chilled, you would definitely be able to extract some righteous performance from it.

If you go 4090, sky's the limit as you just basically get the most powerful, dominant card on the market but it also costs quite a bit more and grossly exceeds your $1k threshold.

Best bang:buck is seriously the 7900xtx especially for Starfield atm....

But I suggest taking @Papusan's suggestion:

3 hours ago, Papusan said:

Maybe wait it out. Starfield is very bad optimized for Intel and nvidia. 3090 isn’t a bad card for gaming.

https://www.tomshardware.com/features/starfield-pc-performance-how-much-gpu-do-you-need

-


3 hours ago, Papusan said:

isn’t it stupid? The only brand went their own way and used 12VHPWR connectors that have the connectors opposite way. Maybe Asus needs all those weird shaped connectors themselves due huge lack of them? Why go own way? What did they gain with this? Awful for those that have a very good bin.

Re-read my previous post about “ticking bomb”. Then read this one. Weird people can’t read or maybe they are just stupid. Not sure what’s correct bro @ryan

“It seems unwise to let the old adapter in, but it hasn't failed me in more than 5 months.”

Happy bro @electrosoft listened 🙂

I lost faith when my original cable failed but I know I would have yanked it early on when burned connectors started popping up.

I don't blame MSI for not repairing a card with a burned connector using a third party adapter.

I'm ok with the four pronged Tentacled monster of power. 😂

-


3 hours ago, Raiderman said:

Not jumping in to defend AMD's drivers, because they do somewhat suck. I miss the old CCC days for sure, as I didnt have to reset my PC if the driver crashed while benching (most of the time) The modern app model is broken, and pointless IMO, and should have been abandoned years ago. I do, however, like the update frequency in which AMD releases their drivers. On a side note, I am very pleased with the purchase of the 7900xtx, and think its a fun card to bench, especially with a water block installed. (of what little time I have had with it)

Update: Newegg has not asked for the extra DDR5 8000mhz ram back, so I have some 2x16gb Trident Z5 available on the cheap.

https://www.newegg.com/g-skill-32gb/p/N82E16820374449?Item=N82E16820374449

You definitely picked the best 7900xtx of the group IMHO. It is the one I would get (and I keep it in my basket on Newegg). I'm really tempted to give it another looksy, 8 months removed from my first go-around with that XFX 7900XTX (4X gonna give it to ya!) edition.

Whew, those sticks are still ~$250. Let me know how much you're asking.

2 hours ago, tps3443 said:

The nvidia control panel has been almost the same since like 2004. 😂 lol. I guess if it ain’t broke don’t fix it. I too remember the Catalyst Control Center though at least they had the built in overclocking which was only good for quick out of overclocking on a new OS. My last AMD GPU was a RX480 from launch day. Amazing GPU. I miss really those days. I paid $250 dollars for a 8GB GPU on launch day that could game 1080P/1440P really well. But, that was also when 30-60FPS was accepted lol. So maybe we’re all being duped after all.

Anyways, I think any of these GPU’s will get the job done. I wouldn’t mind giving the 7900XTX liquid devil a try.

I'm sure if you picked up a 7900xtx you would block it so fast... lol. Plenty of blocks available. The Liquid Devil is close to the ASRock 7900xtx for best VRMs and board design. I believe it only has one or two fewer stages.

-


18 hours ago, Etern4l said:

Sure, we know there is a premium to pay, the problem is that NVidia raised prices of products across the board to create an illusion of necessity to purchase the top end card, as everything else seems like bad value. This and the other unhealthy practices have hurt the entire PC market, which is already in a worrying state of decline. So Level 2 thinking here would be to avoid supporting a company which acts to the detriment of the entire community (if not humanity). Sure, people can be selfish, myopic and just pay up the extra $400-500 over par, if that makes them feel as if they were driving a Ferrari among plebs in packed Camrys lol, but will this help move the world in the right direction?

How they choose to conduct business is their prerogative. Nvidia isn't the first nor will they be the last company to purposely make one of their products look like a poor buy to push consumers to another, more expensive one. It is a well known marketing strategy. They are not the first to have overall ridiculously high profit margins. They are in the business of making money while advancing technology. They are the GPU market crown jewel at the moment and they know it and will capitalize on it as much as possible. The golden rule is in full effect.

The consumer then reviews their product line and decides what fits in their budget and if they want one of their GPUs or perhaps go elsewhere to AMD, which has a pretty nice overall product stack of their own. Like I said, no one is forcing anybody to purchase one of their products. If they choose Nvidia in the end, I would not be so arrogant as to think they are being selfish or myopic just because their criteria are different from yours.

As noted before, we can see Nvidia reacting to market conditions and competition with the 4060ti 16GB and I am sure down the line the 4060ti 8GB may be re-positioned price wise in light of the 7700xt and even the 4070 re-positioned to compete directly with the 7800xt.

18 hours ago, Etern4l said:

You probably meant to say "this is a free market". Well, it's not exactly, because NVidia is a pseudo-monopolist. They are in a position to rip people off by charging excessively for their products. They are doing so without regard for us, the enthusiasts, or the PC market, because Jensen has his head stuck high up in the clouds, hoping for AI-driven world dominance. It's only us, the consumers, who can bring him down a peg or two.

No, I selected capitalism specifically to zero in on corporations (Nvidia in this instance), not the idea of a free market. While they share many of the same values, they are not the same: https://www.investopedia.com/ask/answers/042215/what-difference-between-capitalist-system-and-free-market-system.asp

Jensen is technology and profit driven above all else including us enthusiasts. Shocker. 🙂

News flash: So is AMD and the vast majority of corporations out there.

18 hours ago, Etern4l said:

People make their decisions based on a plethora of fuzzy factors: their knowledge, their CPU characteristics, the information in their possession, their habits, and crucially - their emotions. Arguably, what's often missing is long-term thinking. I mean we know for example that people make decisions they later regret, buyer's remorse is a thing. But sometimes, there is no remorse even if the consequences are bad - that's flawed human nature, we tend to love ourselves the most, sometimes in situations where actually helping others would be more beneficial.

Agreed. Welcome to humanity.

18 hours ago, Etern4l said:

Well, words are cheap, I don't own any AMD GPUs either (harder constraints unfortunately), but the least we can do is avoid helping NVidia by hyping up their products.

See, I actually try to push AMD products, even initially skipping the 4090 and trying to go with the 7900xtx, but it was a poor performer (scroll back to January 2023), so I returned it and picked up a 4090. I ran a 5800X as my main rig and now a 7800X3D. I picked up a 6700xt over a 4060ti for my ITX rig. It's well known I have a soft spot in my heart for them, always have, and I've used their GPUs and CPUs quite frequently over the last 20 years. My main GPU during Turing was even a 5700xt anniversary edition for over a year.

18 hours ago, Etern4l said:

Yes, based on the theoretical performance numbers sources from the links I posted earlier. I have used techpowerup GPU specs page quite a bit when comparing GPUs - if there is a major flaw in the methodology, I would like to know.

You can see for example that the 7900XTX has a higher pixel rate (the GPU can render more pixels than NVidia's top dog), but lower texture rate (NVidia can support more or higher res textures, by about 25%). Compute specs are mostly in favour of the 7900XTX, except for FP32, where the 4090 is in the lead. The differences either way can be fairly large, which suggests there exist architectural differences between the GPUs which might also explain differences in game performance depending on the engine or content type.

Based on the specs alone, the 4090 should be priced maybe within 10%, certainly not 60%, of the 7900XTX. The 50% excess constitutes software and "inventory management" premiums, neither of which should really be applicable in a healthy market (to be fair, the software part of it could - to some extent - be AMD's own goal as per @Mr. Fox, I have yet to see bro @Raiderman jump in to the drivers' defence).

If your theory is that paper calculations show they are relatively equal in rasterization performance, and that the discrepancy in the vast majority of benchmarks and reviews showing the 4090 clearly superior overall in rasterization (and then basically everything else) comes down to software and/or Nvidia dev optimizations, then I believe you are in error.

Feel free to support your theory with hard evidence outside of tech specs on paper. I'm always open to changing my mind when presented with hard evidence.

-


51 minutes ago, tps3443 said:

Not gonna lie, just my RTX3090 KP at 4K is extremely comparable to a 7900XT. Of course, not in Starfield though 🫣

This and more.

When I had my 7900xtx it was decent, but with RT on it only equaled my 3080. In pure raster it was about 26% faster than my stock 3080 10GB, which wasn't cutting it for WoW at 4K Ultra.

47 minutes ago, tps3443 said:

@electrosoft How do I even test 1% lows and 99% lows? Do I set a frame cap? Adaptive sync on? It seems this number can be so easily influenced. I can set adaptive sync with a frame cap with lower graphics and it’s gonna be really good. VS no frame cap will hurt the lows a little.

I don’t even know where to start, I get I’ll be testing against my self, but I appreciate any tips for best results.

I figured you would use AB/RTSS with benchmark capture. Since your hardware is fixed, pick a repeatable save-spot run of your choosing and, without changing timings from your 8800 settings, scale frequency down in 400 MHz increments to 5200, so that initially it's a pure frequency test, and see how much it changes.

If you really want to get in depth with timings vs frequency, maybe pick a sweet spot for your memory (6000-7200) that really lets you tighten things up even more, and test 6000, 6400, 6800 and 7200 with loose vs tight timings.
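For anyone wanting to script the run order, here's a minimal Python sketch of the two test plans described above. It's only a bookkeeping helper: the function names are hypothetical, the rates are treated as MT/s (which is what the "MHz" figures in this thread actually are), and "loose"/"tight" are just labels for whatever timing sets you settle on.

```python
# Hypothetical bookkeeping helper for the memory test plan (not an existing tool).
# Assumption: rates are DDR5 data rates in MT/s; "loose"/"tight" are placeholder labels.


def frequency_sweep(start=8800, stop=5200, step=400):
    """Pure frequency test: same timings, stepping down from start to stop inclusive."""
    return list(range(start, stop - 1, -step))


def timing_matrix(rates=(6000, 6400, 6800, 7200), profiles=("loose", "tight")):
    """Sweet-spot test: every data rate paired with every timing profile."""
    return [(rate, profile) for rate in rates for profile in profiles]


if __name__ == "__main__":
    for rate in frequency_sweep():
        print(f"sweep: {rate} MT/s, timings unchanged from the 8800 profile")
    for rate, profile in timing_matrix():
        print(f"matrix: {rate} MT/s with {profile} timings")
```

The sweep works out to 10 runs (8800 down to 5200) and the matrix to 8.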

You can then bar-graph it or post screenshots (or both) to see how much frequency matters with everything else fixed and OC'd.

1080p, 1440p, 4k.

But anything you want to present would be awesome. Your system is so OC'd that I'm sure a large bulk of the higher-resolution results will be GPU bound.

The most important thing is to keep everything as absolutely consistent as possible (turn on the chiller!) while yanking your memory to and fro.
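On the 1% lows question: capture tools don't all compute them the same way. Below is a minimal Python sketch of two common conventions, assuming you've already exported per-frame frametimes in milliseconds from your capture (how you export them depends on the tool, so that part isn't shown, and the function names are made up for illustration). Whichever convention you pick, use the same one for every run.

```python
import math


def avg_fps(frametimes_ms):
    """Average FPS as total frames over total time (not the mean of per-frame FPS)."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def percentile_low_fps(frametimes_ms, pct=1.0):
    """Convention A: FPS equivalent of the (100 - pct)th percentile frametime.

    Uses the nearest-rank percentile on frametimes sorted fastest to slowest.
    """
    ordered = sorted(frametimes_ms)
    rank = math.ceil((100.0 - pct) * len(ordered) / 100.0)  # 1-based nearest rank
    return 1000.0 / ordered[max(rank, 1) - 1]


def worst_frames_avg_fps(frametimes_ms, pct=1.0):
    """Convention B: average FPS over the slowest pct% of frames (at least one frame)."""
    slowest_first = sorted(frametimes_ms, reverse=True)
    count = max(1, int(len(slowest_first) * pct / 100.0))
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)


if __name__ == "__main__":
    # Made-up example capture: 99 smooth frames at 10 ms plus one 50 ms hitch.
    capture = [10.0] * 99 + [50.0]
    print(f"avg: {avg_fps(capture):.1f} fps")
    print(f"1% low (percentile): {percentile_low_fps(capture):.1f} fps")
    print(f"1% low (worst-frames avg): {worst_frames_avg_fps(capture):.1f} fps")
```

With one hitch in 100 frames, the percentile version still reports 100 fps while the worst-frames average reports 20 fps, which is exactly the kind of gap that makes numbers from different tools hard to compare.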

-


17 minutes ago, Reciever said:

Tried to see if my RAM would hit that but no dice, like, at all.

If there is interest I can try the 4400Mhz DDR4 kit from @Mr. Fox kit that he sold me some time ago. Im not sure why but some people on OCN were saying to increase the IF speed to 1900Mhz+ but from what I understand there is only one CCD so, no resource sharing. Would it be worth it to try 4000Mhz with 2000Mhz IF perhaps? Likely would be instable which is why I would guess that 3800/1900 tends to be the goal?

I would definitely try the 4400 sticks to remove that as a bottleneck and see how far you can push the 5800X3D keeping IF:FSR 1:2 intact. The good thing is that while your CPU will give up the ghost at 3800-4000, you can then tighten the timings on those sticks to really dial them in with the 5800X3D.

3800/1900 was routinely the cap for the bulk of AM4 chips, with some dips into slightly higher frequencies. Some could hit 4000, but they basically had golden IMCs. I know my 5800X capped out around 3866, IIRC, on known-good B-die sticks, even in a SR 2x8GB config to lessen the load on the IMC.
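For the 3800/1900 pairing, here's the arithmetic as a small Python sketch. I'm reading "IF:FSR 1:2" as the Infinity Fabric clock sitting at half the DDR4 data rate, which is the usual FCLK:MCLK 1:1 setup (DDR4 does two transfers per memory clock, so 3800 MT/s is a 1900 MHz MCLK). The 1900 MHz default ceiling is just the typical cap mentioned above, not a guarantee for any particular chip, and the function names are hypothetical.

```python
# Assumption: FCLK runs 1:1 with MCLK; the 1900 MHz ceiling is a typical AM4 figure, not a spec.


def ddr4_clocks(data_rate_mts):
    """Return (MCLK, FCLK) in MHz for a DDR4 data rate in MT/s, assuming 1:1 FCLK:MCLK."""
    mclk = data_rate_mts / 2  # double data rate: two transfers per memory clock
    fclk = mclk               # coupled 1:1 mode
    return mclk, fclk


def fits_fclk_cap(data_rate_mts, fclk_cap_mhz=1900):
    """True if running 1:1 at this data rate stays at or under the assumed FCLK ceiling."""
    _, fclk = ddr4_clocks(data_rate_mts)
    return fclk <= fclk_cap_mhz


if __name__ == "__main__":
    for rate in (3600, 3800, 3866, 4000, 4400):
        mclk, fclk = ddr4_clocks(rate)
        verdict = "within assumed cap" if fits_fclk_cap(rate) else "above assumed cap"
        print(f"DDR4-{rate}: MCLK {mclk:.0f} MHz, FCLK {fclk:.0f} MHz -> {verdict}")
```

A 4400 kit at 1:1 would need a 2200 MHz FCLK, which lines up with the suggestion above to spend those sticks' headroom on tighter timings instead.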

-


3 hours ago, Reciever said:

Any idea if they apply any tuning to the 5800X3D? Had to use the PBO2 Utility to apply the -30 offset to hit 4.450 otherwise its all over the place.

Not really interested in the title perse, just curious. There are definitely games that just prefer the raw Mhz over L3 Cache

Bethesda and their Creation Engine is a great example of the blessing and curse of the 3D cache model.

Creation Engine 1 (ES5/Skyrim along with Fallout 4/76) was a blessing: it was extremely single threaded, but more importantly, since it was basically a ~12-year-old engine, instructions and assets were so much smaller that everything could sit in the cache and performance got a big bump. Even now, I'd recommend a 5800X3D or 7800X3D system for Fallout 3/4/76.

Creation Engine 2 has been massively overhauled and upgraded with regard to multithreaded support and, more importantly, much larger asset and per-thread instruction handling, and it clearly can't sit in the cache in a large enough or meaningful enough way to impact performance to a major degree. We can see this as the 7800X3D is ~10% faster than the 7700X. I'd like to look more in depth at the 7950X3D vs 7950X and their near-identical scores, as this may be a case of massive thread utilization with some threads on the cache-enabled CCD and others not, or Game Bar issues. I'd like to see Process Lasso at work there just as a checksum.

Based on how Starfield feels and plays, they clearly massively overhauled CE1, probably the same way Blizzard overhauled their WoW engine to modernize it while it still has its roots in its original 2004 design.

I'd like to see how some tight 3800 on a 5800X3D along with an optimized PBO and/or CO deals with it, but there obviously won't be any miracles to close that massive gap (108 fps vs 65 fps).
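To put a number on that gap, here's a quick Python sketch of the percentage math on the two results quoted (108 fps vs 65 fps); the helper names are just illustrative. The slower result would need roughly a 66% uplift to match, which is why no amount of 3800/PBO/CO tuning is likely to close it.

```python
def percent_faster(fast_fps, slow_fps):
    """How much higher fast_fps is than slow_fps, as a percentage of slow_fps."""
    return (fast_fps / slow_fps - 1.0) * 100.0


def percent_slower(fast_fps, slow_fps):
    """How much lower slow_fps is than fast_fps, as a percentage of fast_fps."""
    return (1.0 - slow_fps / fast_fps) * 100.0


if __name__ == "__main__":
    fast, slow = 108, 65  # the two Starfield results quoted above
    print(f"uplift needed to match: {percent_faster(fast, slow):.1f}%")
    print(f"shortfall vs the faster result: {percent_slower(fast, slow):.1f}%")
```

Note the asymmetry: the slower result is ~40% behind, but it needs ~66% more to catch up, so which way a percentage is quoted matters when comparing reviews.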

-


10 minutes ago, tps3443 said:

I’ll play around with it today. My whole system fell apart recently stability wise. I kinda let it go in to the abyss of normal life and using it for just work and no play. Then it just started crashing left and right under normal loads with DDR5 8800. And CPU freezes in R23. My SP rating actually dropped in the bios to SP96 Global lol. It said my E-Cores were like SP60 😂 (Never seen anything like this before) (Not even kidding)

(1st) issue: Super Cool Direct Die cold plate was clogged completely.

(2nd) issue: Liquid Metal was hard as stone. Oops.

I put new LM, cleaned my direct die fins, put all back together SP rating said the same thing. I pulled the chip again and pulled the battery on the motherboard and it finally came back to life as an SP104. And it’s solid again yay 🙂. Seriously though, I thought my cpu just up and fell over on me. But this SP104 is still a beast. And she’s back to 100%. This really was strange seeing a SP just up and go down on only the E-Cores. Temps were always pretty good during all of this. So I’m not sure why it happen.

Whoa, but yeah, the smallest (or not so small) deviations when running such tight tolerances can derail your setup. See: the MSI AIO clogging debacle.

The same happened to my Hyte R3 13900KS setup: out-of-the-blue crashes and runaway temps, and I finally had to abandon that chassis. I'll put up a post later about what happened.

-

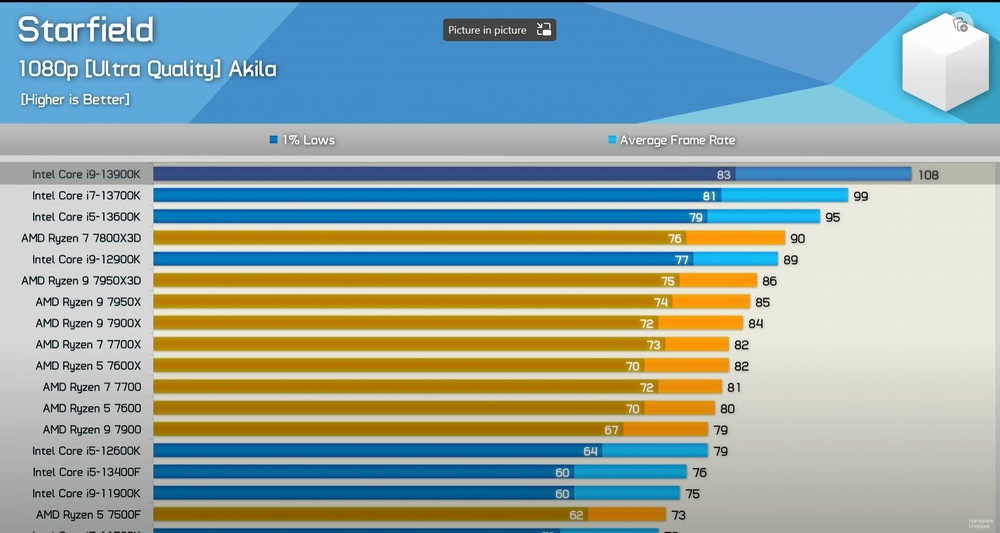

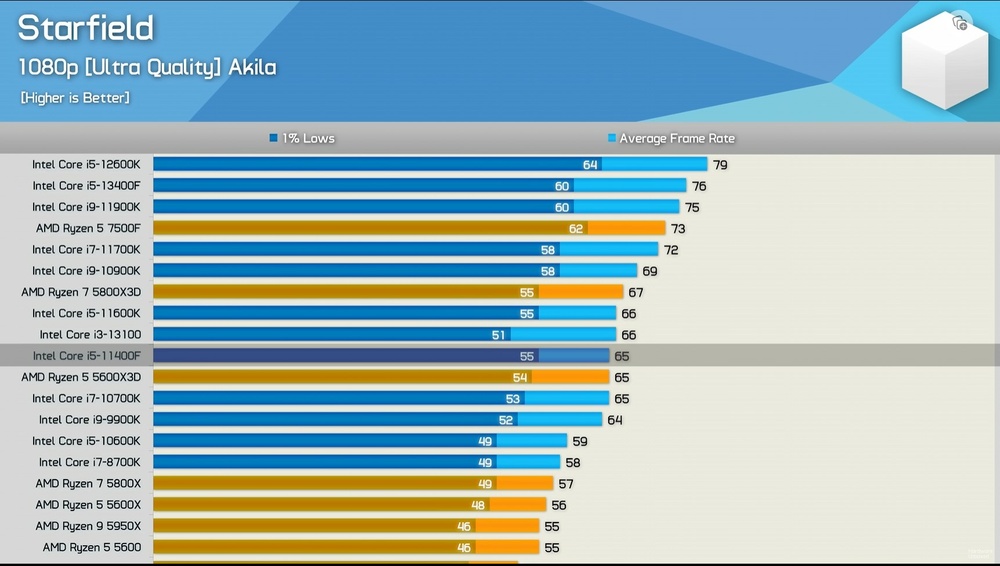

HUB confirming what GN found about CPU performance in Starfield.... It is a very heavily CPU-influenced title, and Intel rules the roost.

The 5800X3D especially just gets wrecked....

Seriously, Starfield loves Intel CPUs.... That has to be a weird win some / lose some for AMD, who sponsored the PC version.

(Eyes the SP115 13900KS sitting on the shelf in the MSI Z790i Edge motherboard atm doing nothing)

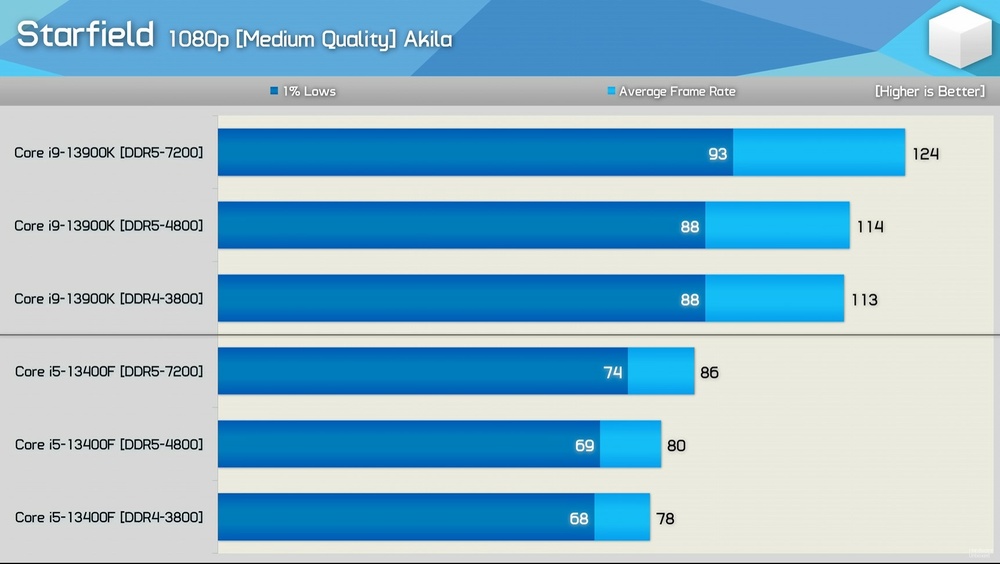

Memory findings are in line with GN's, meaning much smaller gains going from 4800 -> 7200 (but again, I'd like to see 7600 or 8000+. @tps3443, maybe take a looksy at memory benefits and let us know).

12 hours ago, jaybee83 said:

nice writeup, thanks for that. haven't gotten around to watching it yet, but it's on my playlist. this sounds like Starfield profits way more from raw jiggahertz than it does from increased cache sizes. curious to see how its performance numbers will develop with upcoming patches. especially on the cpu side there seems to be A LOT of performance still left on the table....

HUB just confirms and extends some of GN's results to really dial in these hard truths:

--

Intel CPUs dominate for Starfield.

AMD GPUs are competitive with Nvidia (7900xtx vs 4090 especially).

DDR5 memory bandwidth is not nearly as important for performance gains, but there are some.

--

We've reached a point where if you're serious about Starfield, a 7600 > 5800X3D for gaming.

I'd say the best bang:buck system is a 13700K + cheap B660 + cheap 6400-7000 DDR5 + 7900XTX.

As always one of my favorite things to see unfold is how driver and game updates change the performance landscape.

-

2 hours ago, tps3443 said:

Level 12 in Starfield so far! While there are many aspects of the game that I didn’t like at first, the game has my attention and it has really grown on me. I play it and enjoy it for many hours at a time. I haven’t had a game to dump endless time into like this. The game is solid! No complaints with performance either. I’ve bumped my DLSS slider to 80% of my 4K resolution (looks far better than 67% DLSS), and I still maintain over 60 FPS at all times, with shadow quality on high instead of ultra (worthy trade). I feel like I’m playing a new version of Fallout 4 with slightly better graphics and engine. Nothing groundbreaking in this game. I honestly would have preferred if Bethesda kept it in dev for like 2-3 more years and gave us the whole experience. But I guess we gotta wait for ES6 for all that goodness. Either way, it’s a good game, and much better than Cyberpunk 2077. I did beat Cyberpunk 2077, but after the story I just couldn’t get into it. They had that bubble you were always in, and no action happened outside of this invisible bubble. You could not snipe people at all, and no bombing cars from farther away. It was so silly.

Yep, it looks, feels and plays like FO4/76. That's not a bad thing. I was able to jump right in. Graphics are clearly updated and I expect many hours of fun when I can fully dive in.

As for performance, Steve and GN did a CPU benchmark/bottleneck video with a 4090, plus a checksum top end with the 7900xtx, to show where CPUs give up the ghost. He also did some memory scaling (after the Buildzoid video) showing not much is gained from 6000 up to 7000 (~2 fps), but I would have liked to have seen some 7600 and 8000+ testing just because....

This game seems to really like Intel 13th-gen CPUs, especially the 13700/13900, which clearly outpace the X3D models including the 7800X3D, 7950X3D and especially the 5800X3D.

Dropping resolution down to stupid levels (720p) shows not much of a difference between the 7900xtx and 4090 CPU-wise (~4 fps), but forcing the 13900K into a bottlenecked situation still shows the 7900xtx winning vs the 4090 at all useful resolutions (i.e., resolutions and settings that can force a CPU bottleneck).

-

9 hours ago, Etern4l said:

With Ada, we lament NVidia pricing and product offerings across the board.

I'm focusing on top-tier pricing for those who want the best, referencing @tps3443's post, but this is absolutely true.

I still stand by my assessment that the 4070 and 4090 are the only two viable cards price-wise in the lineup this time around, but if the 4060ti 16GB somehow falls to $399.99, it isn't a bad pick.

9 hours ago, Etern4l said:

It is an expensive hobby because NVidia are ripping us off. If AMD died and Intel GPU failed, then Jensen would probably jack up the prices even more. We can pretend nothing can be done about it; however, that is patently false.

It is an expensive hobby because the law of diminishing returns is grossly in play at the top end, same as if you want a Ferrari over a Camry or first class over economy. To have the best, you pay for the best, sometimes crazily so.

Nvidia isn't ripping anybody off. Nobody is forcing you to purchase their GPUs. This is a capitalistic world. You look at the product and determine if you want it or not at the price offered based upon your own criteria. All Nvidia can do is offer their goods at their selected price points and let the consumer decide.

I don't subscribe to the nonsense that consumers don't know what they're doing. They're making educated purchasing decisions based upon their own purchasing power, preferences and buying criteria. Their preferences and criteria are allowed to be different than yours. It doesn't make yours right or theirs wrong.

I never said or intimated there is nothing that can be done. As always, you vote with your purchasing power. Enough have spoken to let Nvidia know their pricing is still acceptable. When it is not, and/or they feel enough competition or threat, they will adjust accordingly, as we just saw with the 4060ti dropping to $430 after AMD's announcement of the 7800xt and lackluster sales. Hopefully we see more of this.

9 hours ago, Etern4l said:

Err, not so fast, let's look at the raw specs:

https://www.techpowerup.com/gpu-specs/geforce-rtx-4090.c3889

https://www.techpowerup.com/gpu-specs/radeon-rx-7900-xtx.c3941

We see that the cards trade blows when it comes to texture and pixel rates, and NVidia is indeed faster in lower precision compute, while AMD does better in high precision (less important in gaming).

The manufacturing process is the same, memory bandwidth numbers are very similar, so are caches.

We don't have any theoretical performance specs on the RT cores (I am not sure comparing core counts across architectures is meaningful), so perhaps NVidia holds the theoretical upper hand there. Maybe.

That aside, it follows that the difference in realised performance basically comes down to software. If things are optimised for AMD, it evidently has the upper hand, much to NVidia fans' self-defeating dismay. If a game leverages NVidia software features (DLSS etc) the tables turn. You typically see games leaning one way or the other - my guess is this is based on which manufacturer has a deal with the given dev house. I would assume that most PC titles and benchmarks are optimised for NVidia simply because it's the more prevalent platform, hence you see the dominance in benchmarks and PC titles.

Just for clarity before proceeding, architecturally speaking, you are claiming the 7900xtx ~= 4090 in raw rasterization and the 4090 overall wins because of software and optimizations?

9 hours ago, Etern4l said:

That brought back a lot of memories. I grabbed the first Voodoo though, if I remember correctly (for the sake of our Canadian colleagues, let's not forget about Gravis Ultrasound lol).

I remember skipping the Voodoo because the Verite was still a relatively new purchase for me, so I needed a cooling-off period before pulling the trigger on another upgrade! 😄 Games I played back then, like Fallout 1 and 2 and Arcanum, along with Quake, didn't need 3D acceleration.

WAY back in the day, I did have a Gravis joystick! 😄

-

45 minutes ago, tps3443 said:

It does kind of suck having to stay on top of the upgrade wagon if you always want that peak performance. Sometimes it’s nice to just use something for what it is, to get the job done. I started getting into shooting rifles as a hobby, and I built a rifle with a nice $2K scope on top; it’s nice knowing it won’t be obsolete in 4-5 years or even 10+ years haha.

We aren’t going out and buying new cars every single year. We typically use what we have. And even now, having the latest and greatest still doesn’t always pan out, especially seeing what the 7900XT/XTX does against 4080s/4090s in AMD-optimized games like Starfield. Even if we do have the best hardware-wise, we aren’t always gonna get the best driver and optimization service from our brand of choice. I know the AMD guys are enjoying their tech right now, considering how a 6800XT competes at stock RTX 3080-level performance in this game right now lol.

PS: My first ATI GPU was an ATI 9600XT and then an ATI 9700 Pro soon after; I was too young to afford the Nvidia 6800 Ultra or ATI 9800XT. Back then we could do a lot with BIOS flashing, though. I remember flashing an X850 Pro to an X850XT with a floppy disk.😂

Well, there is a reason the bulk of GPU sales are mid- to lower-tier cards: most buyers tend to shop there. We lament the 4090 and its pricing, but this cycle (and most previous cycles), top-end cards were reserved for and purchased by a much smaller group of enthusiasts, not Joe Consumer.

Even then, Joe Consumer usually buys a mid-tier card with mid-tier hardware at best and will then use that system for at least 3-5 years before doing anything to it, either through finally wanting an upgrade or games/software running so poorly that they decide to upgrade to....yep....whatever is that cycle's mid-tier hardware.

If you perpetually want to have the best performance at all times that comes at a cost. It's an expensive hobby but it is what it is.

If cars experienced the performance/efficiency gains CPUs and GPUs have experienced, we would probably be getting 500 mi/gal at this point and going 0-65 in less than a second.

I've been saying for quite some time that the 7900xtx is the sleeper bang:buck card, especially if you go for a $999 or less model. Is it as good as the 4090? No. Not even really close when all things are equal. If you see any game where the 7900xtx equals or surpasses it in performance, that is a reflection of optimized AMD code and/or poorly written Nvidia code. That usually comes from sloppy porting of AMD console code: due to the controlled, limited nature of consoles, developers HAVE to write leaner, more efficient code that targets AMD optimizations to extract maximum performance, and that carries over to AMD hardware.

When devs then port their code to PC and don't take the time to take advantage of Nvidia's optimizations, coupled with focusing on AMD optimizations (as they should for the console end), you end up with some charts showing the 7900xtx outpacing the 4080 by 15-20% in Starfield and matching or even beating the 4090, especially at lower resolutions.... That tells you everything you need to know, since we know hardware-wise the 4090 is flat out a better card in every aspect (except price).

---

As for first GPUs......

You young whipper snappers. 🙂

My first standalone GPU was a Tandy EGA ISA card for my Tandy 4000 386-16MHz back in 1990 (this was also my first PC, which I bought while working at Radio Shack for 3.5 years during college). I upgraded to a VGA card and a Sound Blaster a little while later to fully soak in Wing Commander at max settings.

My first 3D-esque video card was a Rendition Verite 1000 card so I could run VQuake.

My first all-purpose real GPU was a 3dfx Voodoo2 so I could run GLQuake (I then gave my Rendition card to my brother).

My first ATI GPU was a Radeon 7000 series card circa 2000, whose heatsink I modified and augmented; I externally cooled it and OC'd it to the max to eke out every fps I could from Deus Ex (I again gave my Voodoo2 to my brother).

My first Nvidia was a BFG 6800 Ultra circa late 2004 for my Power Mac G5 Duo to play WoW on my brand new 30" Apple Display.

From 1996 to 1999, my brother and I were hardcore into Quake, playing online and going to cons for matches/competitions, so I took our hardware pretty seriously, making it run as quickly as possible while keeping details as low/sparse as possible to see everything clearly. We both ran Sony CRTs.

-

Official Clevo X170KM-G Thread

in Sager & Clevo

Posted

What make is it? Throw it up in the For Sale forum with as much detail as possible and pics, and I'm sure you will get some offers.