Posts: 2,343

Days Won: 54

Posts posted by jaybee83

duuude LOL, how? why? 😄

-

welp "excited" would be a strong word, since im not an apple user. but i always applaud any steps taken (even when forced by EU legislation lulz) to ensure compatibility and reusability of other devices 🙂

-

haha sounds like you just fell in love 😄 those are quite the thin bezels for sure...

what kinda specs and price tag are we talking here?

-

On 9/30/2023 at 4:00 PM, ryan said:

theres a new star wars series out, I think 8 episodes. going to check it out tonight. anyone have a review of it? its called Ahsoka

On 10/1/2023 at 11:49 AM, serpro69 said:

Saw a couple episodes so far. It's not great by any means, but it's still fine. Definitely better than most (if not all) of SW movies that came out after Disney took over, but not better than, say, Mandalorian, IMO

ha, was planning to watch this with my wife after we finish book of boba fett 🙂

-

On 10/1/2023 at 2:32 PM, ryan said:

bought the worlds cheapest lenovo laptop. ideapad 1 with the athlon 3050u/1366x768/4gb/128gb eMMC/15.6in/6 hours battery life. there was literally no reason to buy this but I just had to lol. want to see how much I can tweak it and ooze out the most performance. so far I have ram usage down to 1.4gb and atlas os on it with eso on tweaked settings and its pretty fast now, but stock no one in their right mind should buy it and not tweak it, it was stuttering at 1080p on youtube now it does 4k videos without any slowdowns. its really amazing what someone can do to a computer with the right knowledge

lol, YOU, my friend, have now entered the point of no return in the rabbit hole realm of benching old hardware 😄

-

On 10/7/2023 at 2:50 AM, Rage Set said:

On one hand, I am happy that AMD is supporting their past hardware with new features that aren't "hardware limited", on the other hand I want them to fix the issues that have been plaguing their cards for months now.

If @Mr. Fox ever gets tired of the 6900XT and AMD, let me know. If I can't get a 7900XTX or 4090 for a good price, I will be searching for a 69XX XT card again.

what kinda pricing are you aiming for? are you also considering refurbs or second hand with warranty? just curious 🙂

On 10/7/2023 at 10:18 PM, Rage Set said:

So. Is Nvidia going to release refreshed cards or not? Most of the rumors up till now claim they weren't going to release anything to best the 4090 until 50 series...and I thought that was odd (yes even in today's economy).

I guess I will wait until the new year to make a decision.

welp, nothing really changed with regards to the 4090, exactly because of no competition at the perf. level. but the 7900XTX is trading blows with the 4080, sooooo a 4080 Ti makes sense here (for Ngreedia).

On 10/7/2023 at 11:03 PM, Mr. Fox said:

I really am looking forward to seeing how Battlemage works out.

If I were buying a GPU today and wanted the best performance, 4090 is the only logical option. The price is idiotic, even for the cheapest one, but still "worth it" in the general sense that it is better to overpay for something good than to overpay for something not so good. If I could not purchase a 4090, the next option would be 4080 if I needed one today. It is a poor value and I would try very hard to come up with the extra $400-$500 to purchase a 4090 instead. But, I would not even consider the other options new and I would settle for something used from last generation rather than settle for an AMD GPU or something castrated like a 4070 Ti scam product.

Well, Kryosheet is crap. Doesn't work worth a damn, unless you think normal high end thermal pastes are good. In that case it is probably awesome. It just can't handle an overclocked 13900KS at all. It performs on par with popular thermal pastes, which is unacceptable for an overclocked CPU. I view it as a totally unusable product for that. I saved it with zero damage and may try it on my laptop someday when I run out of things to do. @Papusan you were curious. How 'bout no, LOL.

Brother @Talon sent me a tube of Honeywell PTM7958. Thank you so much! It performs about the same as KPX or other popular thermal pastes. So, yeah... not good enough for an overclocked 13900KS. It performed almost exactly the same as the Kryosheet. So, back to the liquid metal. No other good option available.

This stuff was not fun to spread. It is like clay, softened wax or softened kneaded eraser, and it takes a lot of pressure to even get it out of the syringe. It is not very sticky. It is much firmer than the thermal putty EVGA used on the 3090 KPE VRMs. I did spread it and got excellent coverage. It will be amazing for a laptop or a GPU that doesn't pull as many watts. There is no question in my mind that it will be durable, and the perfect solution for sloppy-fitting turdbook heat sinks. This is perfect for that. It is easily removed using a thermal paste spatula. It comes off like soft wax. When I need to repaste the 4090 I will probably use the PTM7958 paste. The pad equivalent worked amazing on the 6900 XT and the 4090 doesn't get nearly as hot as the 6900 XT. Removing my water block, it pulled the CPU (that was taped down for bare die) out of the socket. It stuck well enough that the tape could not hold it down.

I got it more than hot enough to melt, and it absolutely melted and got phenomenal coverage. I let my system cool overnight to normalize with ambient temperature and tried it again this morning and the temps were as unacceptable as they were last night. I did not save a screenshot. I must have forgotten. But, there's nothing to see. Look at the Kryosheet temps (above) and shuffle the core order and that's what it was. Same thing basically.

kool writeup bud, thanks for the data 🙂 no surprise there tho that LM still reigns supreme 😄 point of the kryosheet is the fire and forget strategy plus no risk of corrosion / leaking leading to shorts. i could imagine that being good selling points for a lot of people.

On 10/8/2023 at 2:06 PM, cylix said:

Nice prices😁. So now the i5 costs like an i7 from last gen. This is NOT the way🙄

https://videocardz.com/newz/intel-core-i9-14900k-and-i5-14600k-cpus-listed-by-spanish-retailer

too early to tell, usually those "first online shop prices" are way above the regular availability pricing. hoping for the best, expecting the worst as usual! 😄

On 10/8/2023 at 10:12 PM, Raiderman said:

@jaybee83 @electrosoft So, how is it that I am 10 loops stable with memtest64, but I get random restarts during a 24 hour period? Maybe its not a memory speed thing, and more of a bios thing still?

On 10/9/2023 at 12:31 AM, Raiderman said:

Yes, as soon as I hit the reply button to the above post, my screen went black, and rebooted. Fresh install of Win 10 22H2 LTSC. I leave my system running all weekend, and it will reboot for no reason. I figured I was having stability issues with the 8000mhz ram. I think I will set everything to auto in the bios, and see if it happens. This board has been a little sketchy for me, doing some weird stuff, like images showing the drive order in the bios being corrupted, not saving certain bios changes, hanging after hitting "save and reboot", resetting cmos not loading defaults.

huh, this is indeed some funky behaviour (which is to be expected with RAM tuning 😄). as others have already suggested, i would check with TM5 (anta777 absolut) as well, to verify RAM stability. also be sure u set everything else to stock, especially CPU. GPU is more unlikely in my opinion, but better to be safe than sorry. also good idea with setting everything to "Auto" in the Bios, in combination with a bios reflash and cmos reset. ive read about some instances where some bios settings would retain their last manual values even when reset to Auto. keep us updated on how things go!

in the meantime, im currently in the last stretch of 6200 Mhz tuning (tightening timings one by one and verifying stability). next step will be max clocks at 2:1 ratio. ill give 8000 mhz another shot, but willing to downclock to 7800 at tightened timings if i cant get it stable otherwise.

8 hours ago, Papusan said:

Isn't it nice bro @Mr. Fox If you jumped on the 4080 you'll be screwed two times. First time you pay 72% more over 3080. Then you'll lose several hundreds of $$$ when you sell it within its useful lifespan (2 years). So double screwed, LOOL

Yup, the 4080 is the worst card you could jump on. And with the 4080Ti around the corner it will be an even worse deal. Good job Nvidia.

@electrosoft I can't grasp why nvidia would release the 4080Ti after new year. No one will buy any of the 4080s if these rumors are correct. The sales will go further down the drain. Why not release the 4080Ti at the end of November to take more of the AMD 7900XTX sales in the Christmas/New Year holiday season? And a 900$ 4080 would also be much easier to up-sell in the Holidays. Is it because the AIC partners have their shelves full of un-sold 4080s at +1200$ ? Hmm

Only 5x PCIe 8-pin power connectors. 3 for the MB then you have two for the GPU. Then nothing. There are a few Radeon 7900XTX that have 3x8-pins. Then you are forced to use the unwanted pigtail setup, with the drawback of dropping voltage on one of the rails. And god forbid you want 8 pin connectors to power other peripherals. Nice with new modern tech.

welp to be fair, an 850W PSU can nowadays be considered "midrange" and not highend anymore 😄 so nobody in their right mind would pair a midrange PSU with a highend GPU, right? so cant expect it to have a full range of connectors, they gotta cut costs somewhere.

2 hours ago, electrosoft said:

If I were Nvidia, I'd use the Holidays to mark down the 4080 to $999 to clear (or at least lessen) inventory then slot in the 4080ti in Q1 2024 at the same MSRP as the 4080.

Drop the 4080 officially to $999, slot in the 4080ti at $1199 (old 4080 MSRP), drop the 4070 to $499 to meet the 7800xt head to head, 4070ti to $699 and 4060ti to $349 and $399 to combat the 7700xt but Nvidia is greedy so I only expect some of this to come true. 🙂

If they did the above, they'd gain back some consumer love and really box AMD, which has lost market share since the Crypto-demic era, into a corner.

sure sure, but then Ngreedia would LOSE MONEY. theyll probably just slap a 1400-1500 USD price tag on the 4080 Ti, not change ANYTHING ELSE in their lineup and call it a day lulz

-

21 hours ago, Developer79 said:

Yes, the electrical power comes directly from the 2 graphics card cables! I have developed a complete internal power supply for the P870xx... Yes, the cooling will be as thick as the vapour chamber of the P870xx... The CPU will be difficult because the generations use different power protocols... 10th gen still works, the others would take a lot of effort...

dude that sounds amazing. keep us updated on your progress, im sure the community would be VERY interested in this 🙂

-

5 hours ago, Aaron44126 said:

Exactly right. There are tons of choices with various pros and cons. Only you can decide what is the best option for you.

What can I say .....

I got fed up! Fed up with Windows. Fed up with the state of high-end laptop offerings (specifically from Dell but many of the issues are "global"), compromises involved with them, thermal issues, power management issues (specifically random GPU power drops because of it trying to balance CPU/GPU power and not doing it right), and general lack of attention to detail all around. I've posted about these issues elsewhere on the forum. (Never mind the issues I have with the direction that Microsoft is going with Windows 11, I tried switching to Linux for about two months and posted in that thread about some frustrations I was having with Windows; and later my rationale for switching from Linux to macOS, and I've also written about my frustrations with the Dell Precision 7770 which I was initially so excited about.)

So I went to a Mac because it seemed like the only place to go that made any sense. (Apple's recent attention to the gaming space did help push me over the edge.)

What I have found that I didn't fully expect going in is how %@#$ good of a laptop a MacBook Pro is. I'm not talking about "as a PC", but "as a laptop" specifically. It offers a balance between high performance when needed and cool/quiet/long battery life the rest of the time that you simply can't get from anyone else, where you have to pick between one or the other when deciding which model to get. And it offers other things that you'd want a laptop to have; quick battery charging, a really good display panel, good speakers as well, and a solid mostly-metal build.

And yes, there is definitely a compromise when it comes to both "tweakability"/"upgradeability" and "absolute top performance". For the latter, I am settling for "good enough" performance (which is pretty darn good) because, to me at least, the benefits from this system more than make up for it. And, I mean, I played through Shadow of the Tomb Raider at 1510p resolution and "highest" graphics settings preset, it had no trouble maintaining a stable 60 FPS, and that was running through the Rosetta 2 x64->ARM CPU emulator to boot, so I have nothing to complain about with regards to gaming performance. I might not be able to run at 4K/120 or use the latest ray tracing glitz, but I can play games fine and they look nice enough for me.

With regards to the former, it's sort of something that I've just accepted that I have to deal with. But, laptops have become less upgradeable all around so if I'm going to be pushed down that road no matter which manufacturer that I go with, I might as well get something that works as a good laptop rather than something that tries to be a mini desktop and, as a result, is on the bulky side and runs noisy and hot. (I'm really at a point where I can't have my computer stuck in one place, so actual desktops are not under consideration for me right now.) Still, while I don't mind having to pay up front to max out the CPU/GPU/RAM, the lack of at least replaceable/upgradeable storage is indeed ridiculous.

To be clear, I have found multi-threaded performance to be not quite as good as a 12th gen Core i9 laptop CPU (never mind a 13th gen which is notably faster). The M2 Max doesn't top the chart in CPU performance, but it is up there near the top, and certainly can trounce anything more than a couple of years old. (Intel sure does have to throw a lot of power at the CPU to beat the M2 Max, though; M2 Max probably wins in terms of power efficiency.)

Something I've been mulling over lately. What's the difference between a translation layer and "native support", anyway? Modern games are largely just bits of computer code that target a set of APIs, and if you swap out those API implementations with something else that works the same... is it a "translation layer" or just an "alternate execution environment"? Could a "translation layer" still offer "full performance"?

...Leaving Mac aside for a moment — obviously it is running a whole different CPU architecture so it must be translated if you want to run x86/x64 games. Let's look at trying to run Windows games on Linux, on a PC with a typical x64 CPU.

Modern Windows games are generally just x64 binaries (with supporting x64 libraries & data files) that make calls to various Windows APIs to handle various things that they need (open files, play sounds, network chatter, etc.) and use the DirectX 11 or 12 APIs for graphics.

On Linux, running on an x64 CPU, running the code from the game binary should not be any slower than it is on Windows. What you have to worry about are the external API calls that the game makes, which flat out don't exist on stock Linux.

Valve, CodeWeavers, and others have basically cooked up replacements for the APIs (Wine, plus DXVK & VKD3D for DirectX/graphics). The game doesn't care if it is making calls to "real" Windows+DirectX or to Wine+DXVK, as long as the implementation is good and the right stuff happens, it just executes the same code and the game chugs along. And, if the "alternate API implementations" are good enough, there is no real reason why it should be "slower" than running a game on Windows directly. After all, it's still just the same sort of CPU churning through the same x64 game code, in the end, just the parts where it calls out to the OS to do something have been swapped out. And, you'll find that under Proton, many games run just as well as they do on Windows ... and sometimes, even better.
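To make the "swapped-out API" idea concrete, here's a toy sketch in Python. Everything in it (the `GraphicsAPI` interface, the two backends, `run_game`) is made up for illustration and is not how Wine, DXVK or VKD3D are actually structured. The only point is that the same "game code" runs unchanged against either implementation, as long as both behave the same.

```python
from typing import Protocol


class GraphicsAPI(Protocol):
    """Hypothetical stand-in for the API surface a game targets (think DirectX)."""

    def draw(self, mesh: str) -> str: ...


class NativeBackend:
    """Pretend 'real' implementation that ships with the original OS."""

    def draw(self, mesh: str) -> str:
        return f"drew {mesh}"


class AlternateBackend:
    """Pretend replacement that forwards each call to some other lower-level API."""

    def __init__(self) -> None:
        self.forwarded: list[str] = []  # record of calls handed to the other API

    def draw(self, mesh: str) -> str:
        self.forwarded.append(mesh)
        return f"drew {mesh}"


def run_game(api: GraphicsAPI) -> list[str]:
    """The 'game code': identical no matter which backend is plugged in."""
    return [api.draw(mesh) for mesh in ("player", "enemy", "level")]


native_frames = run_game(NativeBackend())
alternate = AlternateBackend()
alternate_frames = run_game(alternate)
print(native_frames == alternate_frames)
```

If the alternate backend ever returned something different for the same call, that mismatch is the toy equivalent of a game breaking under the replacement implementation.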

There are issues obviously when the behavior of the alternate implementation doesn't match up with Windows's behavior, which is why some games are broken (DirectX 12 in particular since that is newer), but if you compare Proton today to just like three years ago you will see that they have made incredibly rapid progress in fixing that up.

On the Mac side, to pull this off you need to both have a replacement backend for the OS APIs (something like CrossOver with D3DMetal) and you have to translate the CPU instructions from x64 to ARM (Rosetta 2). The biggest performance hit seems to come from translating graphics API calls right now (D3DMetal or MoltenVK), but that has been doing nothing but getting better over time. D3DMetal performance has actually gone up like 20%-80% (depending on the game) over just the past three months or so. On the CPU translation side, Rosetta 2 performance actually seems to be really good, again looking at my experience playing Shadow of the Tomb Raider which has not been compiled for ARM. It does have a one-time performance penalty hit when you first launch the game, as it basically trawls through the executable and translates all of the x64 code that it can find to ARM code. It might happen again when you hit new bits of code (i.e. when getting from the menus to the game proper for the first time). The results are cached, so you don't have to wait for the same translation a second time (unless the game is updated). This is probably why I originally complained that loading times seemed longer than they should be early on, but that problem quickly went away.
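The "translate once, then reuse" behaviour described above can be modelled with a tiny cache. This is only a sketch of the general idea, not how Rosetta 2 is implemented: the `TranslationCache` class, the fake `_translate` step and keying the cache by a hash of the code bytes are all assumptions made for illustration.

```python
import hashlib


class TranslationCache:
    """Toy model: pay the translation cost once per distinct chunk of code."""

    def __init__(self) -> None:
        self._cache: dict[str, bytes] = {}
        self.translations_done = 0  # counts how often the slow path ran

    def _translate(self, code: bytes) -> bytes:
        # Placeholder for the expensive x64 -> ARM step (here: just reverse the bytes).
        self.translations_done += 1
        return code[::-1]

    def run(self, code: bytes) -> bytes:
        # Key by content hash, so changed code (e.g. after an update) misses the cache.
        key = hashlib.sha256(code).hexdigest()
        if key not in self._cache:
            self._cache[key] = self._translate(code)
        return self._cache[key]


cache = TranslationCache()
cache.run(b"menu code")       # first launch: slow path
cache.run(b"menu code")       # second launch: served from the cache
cache.run(b"gameplay code")   # new bit of code: slow path again
cache.run(b"menu code v2")    # game updated: has to be translated again
print(cache.translations_done)
```

Only the first run of each distinct chunk hits the slow path, which lines up with load times being long early on and then settling down.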

So, is there performance overhead? ......... In some cases, yes, though it is shrinking as these technologies mature, and there are groups of people that are working really hard on "unlocking" games from the environment that they were written for.

Honestly, the whole approach of making alternate implementations of backends that games need (and ideally, open source ones) is critical for long-term preservation. There's been chatter recently following this study that, unlike other media like music and films where translating from one format to another is relatively easy, the vast majority of games ever made are currently unavailable to general users. If you are like me and like playing older games as well as new ones and don't want to have to maintain a collection of old hardware, then you've gotta get used to making your own backups and playing in an emulator or translation environment of some sort.

Heh. That's all of my off-topic ranting for now!

bro @Aaron44126 be like

-

19 hours ago, Mr. Fox said:

Nothing is too crappy for the lowest common denominators and their chintzy disposable hardware trash.

hahaha i actually meant no surprise there, they wouldnt release "15th gen" just 2 short months after "14th gen"....notice how im including the air quotes 😄 still! planning to upgrade my lady's machine with a 14900k with some prema love in the mix 🙂

15 hours ago, tps3443 said:

duuuuude they look MASSIVE! noice.

@Mr. Fox u know whats also noice? following ur advice on going straight with the 1000W Asus XOC vbios. also updated my igpu and dgpu drivers next to the chipset. while playing control i noticed the gpu going up to 750W and finally nooooo mooooore power limit reason popping up. happy camper!

-

2 hours ago, cylix said:

oh yeah total shocker....not 😄

-

1 hour ago, Csupati said:

Apple ARM chip and os is just way better, way simpler than windows, its kind of ludicrous how a 60w single chip can outperform a 135w i9 and a 165w 2080.

its always about trade-offs. sure, apple can achieve way higher efficiency in their walled garden approach cuz they got complete vertical integration from silicon design up to software and os. but id never want to give up on flexibility and modding capability, thats why ill also never switch from android to crapple 😄

-

aaah bro papu our resident reminder to update our software, nice one! 😄

this one is actually VERY cool, have to check this out with my Samsung microSDs hahaha

- Magician now supports all of Samsung's branded memory storage products, including internal SSDs, portable SSDs, SD cards, and USB flash drives.

-

17 hours ago, Mr. Fox said:

Nope. I hate it when one AIB partner does something stupid/different than the rest with things like ports. The standard is 3 DP and 1 HDMI and they should all stick to that. I use all three of my DP ports so that would screw things up. In the past I have tried to use both types of ports in a multi-display setup and the color and brightness never matches 100%.

plus this makes crossflashing of vbios versions more annoying. different port configs mean that u might end up with one of the gpu ports not working...super unnecessary!

-

19 hours ago, Csupati said:

Or go to an Apple ARM M series chip, 60w consumption and you get the same gpu performance as a stock mxm 2080. The cpu power is greater than the i9.... If they manage to release DirectX support, then the gaming business will be completely broken.

welp that opens up a whole other can of worms: walled garden, even MORE BGA and add to that software locks (for which apple is infamous) plus apple still has to prove themselves as a "friend to gamers". sure, they started doing at least SOMEthing, but theyll need to stand the test of time first. and yea, lets not even start talking about price to performance here.... 😅

18 hours ago, Aaron44126 said:

Ha ha... (As a guy who recently dumped Windows laptops for a MacBook Pro, and has been happily using it for gaming...)

- As you may be aware, in June, Apple released beta software "Apple Game Porting Toolkit" (GPTK), which is basically a custom packaging of Wine with an additional library called "D3DMetal" which translates DirectX 11/12 calls to Metal calls. It was presented as an evaluation tool for game devs considering a possible macOS port, but folks on the Internet had full Windows titles like Elden Ring up and running in a matter of hours. GPTK was released with a tight license, preventing it from being used with any third-party products or game ports.

- The guy at Apple driving GPTK is Nat Brown, who used to be a graphics & VR guy at Valve.

- Since then, Apple has been releasing updates to GPTK every few weeks, and performance has gone up a bit with each release.

- Tooling built up around GPTK like CXPatcher and Whisky, which made it a bit easier for non-technical folks to try to use it.

- In August, Apple changed the license to allow redistribution of the D3DMetal library. (Still can't be used to ship a game port, though.)

- In mid-September, CodeWeavers released a beta version of CrossOver (23.5) which integrates D3DMetal, making it much easier for "anyone" to use this to run Windows DirectX 11/12 games. Install CrossOver, install the Windows version of Steam, toggle D3DMetal on, install a game, and run it.

- Later today, macOS 14 "Sonoma" is launching and all of this stuff should be heading out of beta.

Definitely an interesting time to be a Mac gamer. (There's also really interesting stuff going on in MoltenVK.) There's still a notable hole, though; there is currently no way to run games that use AVX instructions on Apple's ARM CPUs, because neither Rosetta 2 nor Windows 11's x86-to-ARM emulator supports AVX instructions. (Ratchet & Clank: Rift Apart, The Last of Us: Part 1, et al.)

Not to derail the thread, this will be my only post on the matter, but there has been some chatter over here.

blasphemy! how dare you jump over to the apple side of life! 😮 GAIZ, GET HIM! /s 😄 seriously tho, what made u switch?

jokes aside, interesting to see that Apple has made at least some strides in the right direction. in the end, its still going through translation layers and losing performance vs. native support. plus, as mentioned above, apple will need to prove they mean "gaming business" in the long term, so not just a quick n dirty project that quickly falls off the wagon come next hardware / macOS gen (after Sonoma)... so i remain a bit skeptical in that regard.

-

On 9/23/2023 at 9:00 AM, electrosoft said:

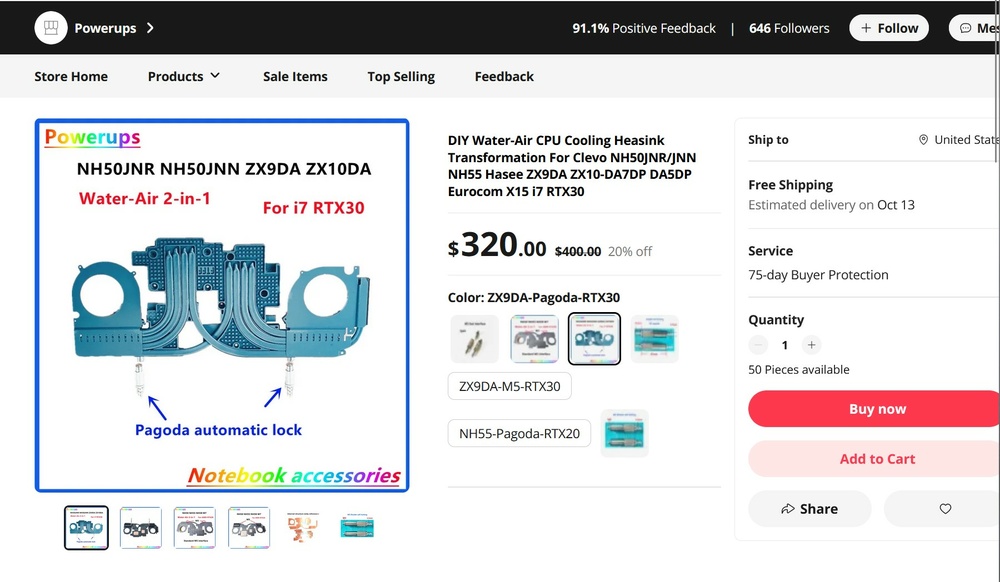

Looks like there *might* be an after market CPU/GPU cooler for the NH55 finally:

https://www.aliexpress.us/item/3256805211337886.html?gatewayAdapt=glo2usa4itemAdapt

On 9/23/2023 at 9:57 AM, FTW_260 said:

@electrosoft this custom heatsink is almost a year old, as are custom fans for this model.

better late than never! hahaha

any review data on it yet? with those hybrid air/water coolers im always a bit skeptical as to their air only performance...definitely looks way beefier than the stock cooling though, especially on the cpu side!

might be something worthwhile to consider with the upcoming 14900K...

-

welp, one can dream...i like the way that OP is thinking. however, in the end, Clevo is now following the money trail, thin n light BGA crap FTW! 😢

-

16 hours ago, electrosoft said:

Scott Herkelman leaving AMD:

https://videocardz.com/newz/scott-herkelman-announces-departure-from-amd

And we now are getting Meteor Lake for desktop 2024....

https://videocardz.com/newz/intel-confirms-meteor-lake-is-coming-to-desktops-in-2024

With a ho hum 14th gen "refresh" and nothing from AMD, Intel or Nvidia on the horizon, settle in and enjoy your hardware. 🙂

ha, u beat me to the punch, wanted to post the same @HMScott 😄

but heres another beautiful future to dream about: away with rasterization and ray tracing, full on neural rendering with "DLSS 10" 😄

in theory pretty cool, i mean u can basically "make up" the game as u go along (integrated chatgpt letting u "wish" stuff into existence lulz), but, OF COURSE, NGreedia would put all this behind a proprietary paywall and BEST cloud based so that you own NOTHING. yay, lets go!

-

14 hours ago, Raiderman said:

I have done zero tweaking with Ram settings yet. All I have done is set the voltage correctly, and enabled Expo/XMP.

Edit: I do not think its stable even passing 10 loops of memtest 64.

While trying to decompress a 100gb file, it will error out. Tried several times, and checked file integrity multiple times, yet it would not decompress. Decided to check if the IMC was at fault, and sure enough, I lowered the RAM speed to 6600, and the file decompressed without issues.

hmmm interesting that ur VPP was bumped to 1.95V, might be something to look into on my side.

and i feel ya, juuuust when u think u got stability figured out, bam RAM comes back and bites u in the butt haha 😄

-

4 hours ago, razor0601 said:

Hello, I have an AU B173ZAN01.0 4K Display in my P775TM1-G with a few stripes. So I want to change the LCD. Are there other cheap LCD‘s which will work?

as long as u get the dimensions, the mounting holes and the display connector type correct, u can install any other display that ud like 🙂

-

2 hours ago, Raiderman said:

Umm, duh... Lack of sleep may have contributed to this. I have no idea what I was doing that morning.

I pass 10 loops of memtest 64@8000mhz

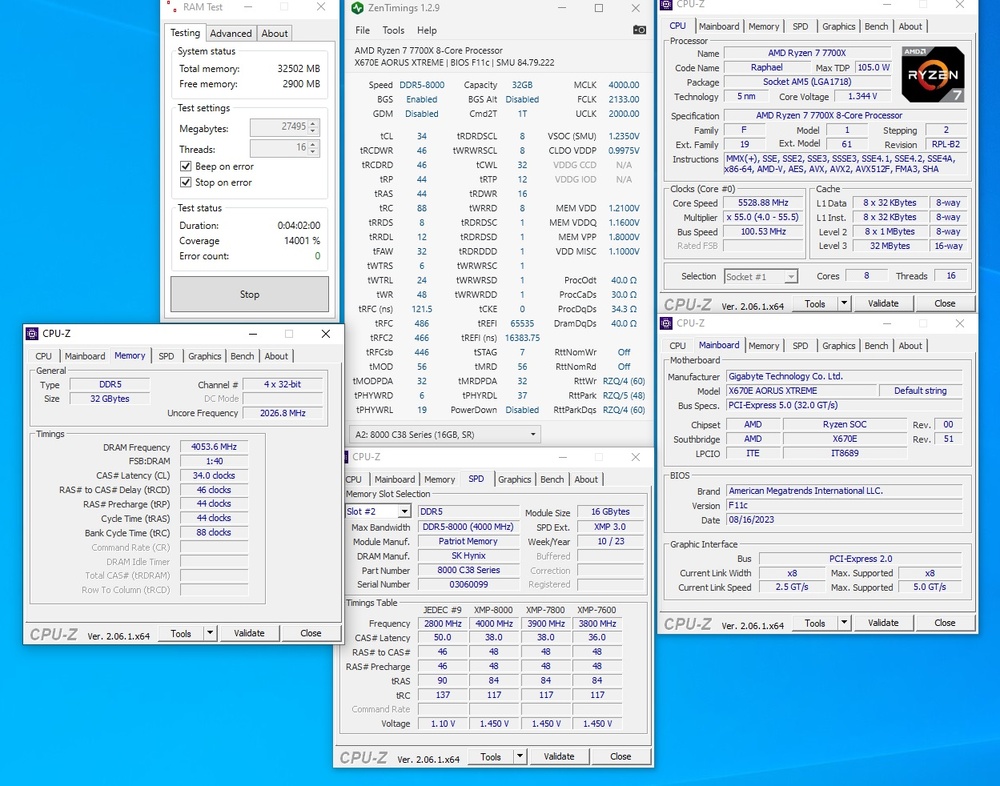

no worries mate. good to hear about ur stability, feel free to throw ur settings my way 😄 currently still tuning my 6200 timings, on the cusp of overtaking my previous best results with the 6600 kit and still a looot of timings to tune, looking promising!

-

just stumbled upon a pretty impressive interview with uncle Jensen:

https://www.tomshardware.com/news/nvidia-ceo-shares-management-style-always-learn-make-no-plans

I was positively surprised to see how he manages Nvidia and fosters continuous improvement, as well as keeping flat hierarchies and asking for input from all company levels. On the other hand, looking at Nvidia's history, with this kind of flexible management style its actually no wonder theyve been so far ahead for decades now. Basically start-up mentality inside a bigass conglomerate, pretty cool stuff!

-

53 minutes ago, ryan said:

You fellas wouldn't happen to know how to get into the advanced bios of the omen 16 11800h version would you. All this talk about ram timing is making me itchy. Everything on my laptop is modified except screen and ram..man this laptop is built like a tank. Been running max fans and overclocking it for 2 years and 0 issues

i assume uve already gone through all available bios options? if theyre not in there, they would need to be manually exposed by modifying the bios and flashing it....

-

19 hours ago, Raiderman said:

If you can run PBO voltage curve at -30 all cores with CB 23, I would say it's your board and not imc. I am bench stable at 8000 xmp on, and 6400. I have benched 6600, but not stable. Of course that's only with very limited time playing with it.

havent really started with tuning the CPU yet tbh 😄 might be a stupid question but: how would an undervolt on the cpu cores say anything about the IMC? different dies....

as for stability, sure, benching is no problem with 6400 and 8000, can do that all day long. im testing with anta777 absolut tm5 tho, and thats not passing haha. i dont want to end up with random BSODs down the line popping up in a few weeks / months time...

13 hours ago, tps3443 said:

I ordered my Super Cool DDR5 waterblock kit (Real memory watercooling)! 😁

My 2x24GB M-die’s will be on chilled water now 😂

Man oh man. I’ll be able to pass just about any memory stability test for however long so easily lol. 🤣

I am seriously excited for some DDR5 running like 15C lol. This is gonna be so stupidly glorious 😭

9 hours ago, tps3443 said:

it includes everything. I’d order asap but only 2 left. I’ve been waiting on these for 1.5 years. Finding them used is close to impossible. (It’s actually impossible lol) people apparently asked him to make another batch of these for ram, and he finally had enough request(s) and he actually did it. His stuff is amazing though.

The ram kit includes the blocks for (2) ram sticks for both sides, and the top manifold that directs water and mounts to the both ram blocks. He has a cheaper kit also which is a full kit too, but includes the less expensive clear polymer manifold instead of the solid copper manifold.

And @Mr. Fox you only need (1) and it’s an entire kit, so either the one for $79, or the one for $93.

This is the $79 dollar kit.

9 hours ago, Mr. Fox said:

Ordered. Thanks for the heads up. It's about twice as much ($112 USD including shipping) as my other liquid cooled memory components cost me, but it will be interesting to see if keeping the memory below 30°C improves overclocking. If not, then I will know not to spend extra next time. Also nice that now I can get rid of the nasty G.SKILL RGB heating blankets and rainbow puke for good. I really hate RGB memory. Such a stupid thing, LOL. Finding good memory options without RGB garbage should be much easier.

lulz, please keep us updated on your results guys. curious to see if going even lower in temp will improve OCability at all. or might behave like current vRAM and lose clocks once u reach certain low temps...

1 hour ago, chew said:

welp, im slowly thinking my bottleneck is the classic PEBCAK 😄 now please do the same with the Asus Extreme 😛

so what kinda bandwidth and latencies are we talking here, anyways? have u compared max clocks between 1:1 and 1:2?

-

11 minutes ago, ratchetnclank said:

Playing Hades. Really enjoying it, challenging enough to be engaging without being insurmountable. Beat it 3 times so far and trying to get the rest of the story and tryout new builds.

nice i was actually thinking of giving that one a try.

-

I just saw the most beautiful Asus G18 Laptop (in Asus)

damn, had no idea that mini LED screens have already reached laptops! by any chance, u know the res/size, display model and how many background lighting zones it has?

edit: ah its a QHD screen, just realized 😄 the rest of the specs would still be nice tho 🙂 curious