Developer79

Member | Posts: 359 | Days Won: 2


Everything posted by Developer79


No, this laptop cannot be upgraded beyond the GTX9xxM series! That would only be possible with an MXM card in the old format carrying a newer GPU such as the RTX 20/30 series, but I don't know of any such card!


clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

Yes, that's the idea: the desktop GPU is to be installed inside the P870xx. Of course, a matching cooling system then has to be built. I'm leaning towards an RTX4080; the RTX4090 is oversized in every respect :-) I think the P870xx would already be the fastest laptop with an RTX4080 anyway :-)

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

It would almost be logical if 4 NVMe drives were supported...

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

A photo of the back side would be better, and very close up! :-)

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

If you take a close-up photo of the MXM NVMe board, I can tell you exactly what it supports at most. For the P870xx it would be 8 lanes. I can't say for the GTx!

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

However, the MOD only works if 8 lanes of the MXM port are used; otherwise it does not work.
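The lane arithmetic behind these posts (8 lanes on the MXM port, 4 NVMe drives, a 16-lane desktop card) can be sketched in Python. This is a minimal sketch assuming a PCIe 3.0 link (8 GT/s per lane, 128b/130b encoding) and a hypothetical carrier board that splits its lanes evenly between drives; the function names are illustrative only, and the figures ignore protocol overhead, so real throughput is lower.

```python
# Minimal sketch: PCIe lane arithmetic for an MXM-based NVMe carrier board.
# Assumption: PCIe 3.0 (8 GT/s per lane, 128b/130b line coding); protocol overhead ignored.

PCIE3_GT_PER_S = 8.0        # line rate per lane, in GT/s
PCIE3_ENCODING = 128 / 130  # fraction of line bits that carry data


def lane_gbytes_per_s() -> float:
    """Usable one-direction bandwidth of one PCIe 3.0 lane in GB/s."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING / 8  # 8 bits per byte


def link_gbytes_per_s(lanes: int) -> float:
    """Usable one-direction bandwidth of an xN link in GB/s."""
    return lanes * lane_gbytes_per_s()


def lanes_per_drive(total_lanes: int, drives: int) -> int:
    """Hypothetical even split of a link across NVMe drives.

    Raises ValueError if the lanes do not divide evenly or the result is not
    a standard M.2 NVMe width (x1, x2 or x4).
    """
    if drives <= 0 or total_lanes % drives != 0:
        raise ValueError("lanes must divide evenly across the drives")
    per_drive = total_lanes // drives
    if per_drive not in (1, 2, 4):
        raise ValueError("an M.2 NVMe drive uses an x1, x2 or x4 link")
    return per_drive


if __name__ == "__main__":
    for lanes in (8, 16):
        print(f"x{lanes}: {link_gbytes_per_s(lanes):.2f} GB/s")
    for drives in (2, 4):
        per = lanes_per_drive(8, drives)
        print(f"8 lanes / {drives} drives -> x{per} each, "
              f"{link_gbytes_per_s(per):.2f} GB/s per drive")
```

Under these assumptions, 8 lanes shared by 4 drives gives each drive an x2 link of roughly 1.97 GB/s, while a full x16 link, as used by a desktop card, offers about 15.75 GB/s.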

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

Almost standard from my point of view :-)

Price: approx. 150 euros or best offer
Condition: new
Warranty: no
Reason for sale: no need
Payment: PayPal or bank transfer
Item location: Germany
Shipping: yes
International shipping: yes
Handling time: -
Feedback:
Specification:
Proof of ownership: time-stamped pictures
Of course, the adapters work for all Clevos with GTX10xx/RTX20xx series MXM! I have not photographed all heatsinks for a long time; shown here only as examples are the P870xx normal and vapour chamber heatsinks. Feel free to PM me if you don't know whether your heatsink works. You don't need to buy new heatsinks: everything works with the original heatsinks after machining.


In principle, any display will work as long as it has the 40-pin connector, so you can use panels from all sorts of manufacturers. Many people keep asking me about frame rates in 4K! With the RTX30xx series, I have consistently achieved good, high frame rates. The level of detail is also a matter of taste; bear in mind that everything looks great in 4K even at low settings. Nevertheless, I run most games at the highest settings and they still run very well. As for the worry that a game delivers fewer fps than the display's refresh rate: in my opinion it still looks smooth. I think this problem is overrated! You can use the Samsung OLED; it's supposed to be very good. In any case, if you want real UHD/4K at a high refresh rate, only AUO will do! At lower resolutions that are still above FHD, panels from all sorts of manufacturers will do.


1. It is actually not difficult. It is not a big problem if a display does not have the appropriate holes; it just depends on how much effort you want to put in. I built mine with brackets made using a special jig. Razer only uses tape. I think my solution is more professional, but to each his own 🙂
2. The cable must have 4 lanes for 4K and be the right length. That's it.
3. You can choose panels from all kinds of manufacturers, with or without touch. As I said, 4K at 17.3" will be a problem at higher refresh rates; only AUO can do that! At 15.6" it looks different again, and there are plenty of manufacturers to choose from! :-)
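Why the lane count matters for a 4K panel can be checked with a rough bandwidth estimate. This is a minimal Python sketch assuming an eDP link at HBR3 (8.1 Gbit/s per lane with 8b/10b encoding), 8 bits per colour channel (24 bpp), no display stream compression, and blanking intervals ignored; the function names are hypothetical, and real panels and cables may differ.

```python
# Rough eDP bandwidth check for a laptop panel.
# Assumptions: HBR3 link rate (8.1 Gbit/s per lane), 8b/10b encoding,
# no Display Stream Compression, blanking intervals ignored.

HBR3_GBIT_PER_LANE = 8.1
ENCODING_8B10B = 8 / 10


def pixel_gbit_per_s(width: int, height: int, refresh_hz: int,
                     bits_per_pixel: int = 24) -> float:
    """Uncompressed bandwidth of the visible pixels only, in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def link_gbit_per_s(lanes: int, gbit_per_lane: float = HBR3_GBIT_PER_LANE) -> float:
    """Payload capacity of an eDP link after 8b/10b encoding, in Gbit/s."""
    return lanes * gbit_per_lane * ENCODING_8B10B


def fits(width: int, height: int, refresh_hz: int, lanes: int,
         bits_per_pixel: int = 24) -> bool:
    """True if the visible-pixel bandwidth fits into the link capacity."""
    need = pixel_gbit_per_s(width, height, refresh_hz, bits_per_pixel)
    return need <= link_gbit_per_s(lanes)


if __name__ == "__main__":
    need = pixel_gbit_per_s(3840, 2160, 120)
    for lanes in (2, 4):
        print(f"UHD 120 Hz needs {need:.2f} Gbit/s; {lanes} lanes carry "
              f"{link_gbit_per_s(lanes):.2f} Gbit/s -> fits: "
              f"{fits(3840, 2160, 120, lanes)}")
```

Under these assumptions, UHD at 120 Hz needs about 23.9 Gbit/s, which fits into 4 HBR3 lanes (about 25.9 Gbit/s) but not into 2 (about 13 Gbit/s); the exact margin depends on colour depth, blanking and whether compression is used.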


clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

Every donation is welcome! I can always use the support 🙂

Exactly, they don't match and are not interchangeable. You can either buy new heatsinks for the RTX30xx series, if they are available for the P750DM2, or use my adapters, which make it possible to screw the heatsink onto an RTX30xx card. The P750DM2 went up to the GTX10xx series, so this is possible! Greetz


Clarifying Questions on upgrading P870DM3 and P750DM2

Developer79 replied to 0anassa0's topic in Sager & Clevo

Hi, all displays that fit into the LCD housing will work, regardless of refresh rate. You just need the right cable: length and lanes are what matter, meaning 4 lanes and, of course, the right length. UHD is possible up to 144 Hz; more is not available in this size. I have UHD 120 Hz, which looks very cool. I might install the UHD 144 Hz panel soon, we'll see. Or perhaps your question was aimed more at 15.6". There are even more options there than for the P870xx or P775xx. I once asked a manufacturer about the availability of 17.3" OLED panels. The manufacturer said that there are simply more devices with 15.6" than with 17.3" screens! Well, I think UHD at up to 144 Hz is very impressive, and fast action looks really cool :-) I hope that answers your questions. Greetings

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

Development would also go much faster with more money available. I'm still looking for companies or sponsors to support this. The projects cost money, not just time :-) With more funding, we could get everything done faster... It might also be possible to upgrade the CPU, but for the reasons mentioned above, things don't always work out the way you want...

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

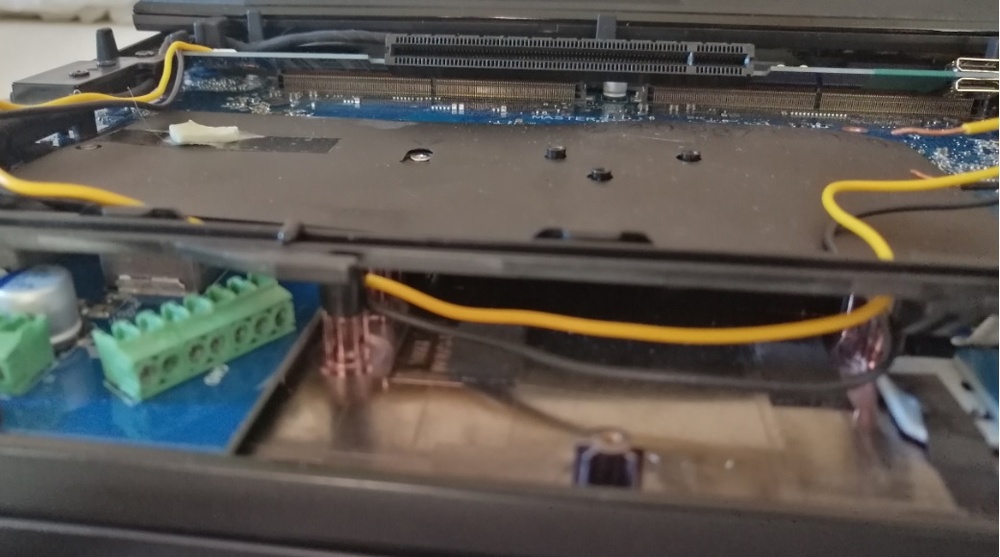

Just think about it for a moment :-) When you first insert an MXM card into the slot, at what angle does it sit? Always at an angle to the motherboard. Once you screw the card down, it is parallel to the motherboard. It is exactly the same in the picture: of course my adapter board will be parallel once a desktop card is installed. The photo is only meant to show a certain perspective. The plan is to have everything closed, like the original! The desktop card will not stick out at an angle to the motherboard but will be just as parallel as an MXM card, which will allow the P870xx to be closed with the bottom cover... :-)


clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

You also have to make special BIOS modifications, but it works in any case. In terms of switching control, the 10th-gen notebook CPU has the same requirements as the 9th generation...

Clarifying Questions on upgrading P870DM3 and P750DM2

Developer79 replied to 0anassa0's topic in Sager & Clevo

I'll take that as a compliment :-) Yes, the CPU conversions are still "relatively" simple! Of course, some work is involved, that's clear. Unfortunately, the P775xx is quite limited in terms of space, so only the CPU MOD would work there. The new generations (13th/14th Gen HX) might also work; I have checked the technical requirements as far as possible. Besides the hardware side, the BIOS side would also mean a lot of work.

Clarifying Questions on upgrading P870DM3 and P750DM2

Developer79 replied to 0anassa0's topic in Sager & Clevo

No, you are not limited to 144 Hz; that is only what is currently available in UHD, for example! When UHD 240 Hz panels come out, they would also work. I developed these adapters myself and have refined them more and more over time; the current version is the third. Yes, a lot is really possible with the P870xx. In my opinion, it has some of the best technical prerequisites for future GPU solutions. A CPU upgrade would of course also be nice, but I am an individual developer and my financial means are limited. So I can only wait until the situation improves and then possibly continue. Thanks again for the moral support. It would be nice if Clevo refreshed the P870xx motherboard; that would save a lot of work. But that's wishful thinking. Thanks :-)

Clarifying Questions on upgrading P870DM3 and P750DM2

Developer79 replied to 0anassa0's topic in Sager & Clevo

This works without any major problems; you just have to build a few brackets. This works up to UHD 144 Hz.
I have developed adapters for the P870xx and P775xx that make it possible to use all the old heatsinks for these laptops. They only need to be machined on a milling machine. You don't need heatsinks from AliExpress or anywhere else; it works just as well with my adapters. I have already developed the third version of these adapters and have been using them for a long time, ever since the RTX30xx series came out.
Of course you can. This heatsink can be installed in the P870DM3 without any major problems. The P870DMG is more difficult.
I have finished the electrical side of the adaptation and may show a photo of the adapter soon. So far I have tested the RTX4070, and that alone is amazing: this card is already much faster than an RTX4090 laptop. I also tested the RTX4090 FE last weekend. It's a real monster, too much for the P870xx. The RTX4080 would be my favorite; it fits very well, but the RTX4070 also fits very well. The benchmarks in the thread show the outstanding performance: a real 16-lane connection and full desktop performance.
An acquaintance recently asked me about installing the 13900HX and 14900HX. I have checked it as far as possible, and it might work, but I would have to develop 2 boards for the P870xx series. I'm not going to do that for now 🙂 Anyway, cooling is more important for now. I don't have much time at the moment, and money is of course also a factor. See my comments on the P870xx.
By the way, the vapour chamber heatsink cools best in the P870xx. I achieved the following temperatures with this combination: RTX3070 52 °C and RTX3080 approx. 60 °C max. For the P775xx, the RTX3070 would be the best option, with high performance and less heat. To summarize: yes, see what has already been said above and what is possible.
Unfortunately, Clevo has given in to commercial pressure and now only builds laptops without a desktop CPU or a desktop GPU (MXM). My idea was to create an extension for the P870xx, as it has the best prerequisites from an engineering point of view. I have tried to implement this, and it works for the GPU. My tests show that the P870xx is still up to date in 2023 in pure gaming performance (even with MXM RTX30xx at UHD), and with my extension it is much faster than RTX40xx laptops, including an RTX4090 laptop. I am also curious how the new RTX50xx series will turn out. I hope Nvidia makes the same brilliant progress as with the RTX40xx series, which in my opinion is the best in many years. I was blown away by the performance of the RTX4070 desktop card, and the RTX4090 is absolutely amazing in a laptop. The RTX4080 is the upper limit from a technical point of view, but its performance is also far superior at any resolution!

clevo p870tm/tm1-g Official Clevo P870TM-G Thread

Developer79 replied to ViktorV's topic in Sager & Clevo

I have now checked the CPU upgrade to 13th/14th Gen HX! It would work, but the effort is relatively high. You would probably need 2 new PCBs, specifically for socket 1151, plus an extension for the motherboard.