Leaderboard

Popular Content

Showing content with the highest reputation since 01/09/2026 in all areas

-

While I missed the boat on the best pricing for 8000MT+ RAM, I am happy I spent $150 to $180 on each of the nine 4TB NVMe SSDs I bought over 2025. I went nuts on the Acer GM7000 SSDs. I did a quick calculation: I have over 100TB of SSDs (spread across 8TB, 4TB, 2TB and 1TB drives) in operation. If I were to buy them at today's prices, I'd have to spend over $30K. Damn! I guess being a frugal data hoarder has its benefits once in a while.

6 points
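The per-terabyte math behind that is easy to check. Here is a small sketch that uses only the numbers in the post: nine 4TB drives at $150 to $180 each, and "over $30K" for "over 100TB" today. Both of the latter are lower bounds, so the implied current price per TB is only approximate.

```python
# Per-TB cost of the 2025 drive purchases vs. the rough replacement cost quoted in the post.
drives = 9
capacity_tb = 4
price_low, price_high = 150, 180   # USD per 4TB drive, as quoted

spent_low = drives * price_low     # total for the nine drives at the low price
spent_high = drives * price_high   # total at the high price
per_tb_2025 = (price_low / capacity_tb, price_high / capacity_tb)

# "over 100TB" would cost "over $30K": both are lower bounds, so this ratio is approximate.
per_tb_today = 30_000 / 100

print(f"Nine 4TB drives cost ${spent_low:,} to ${spent_high:,}")
print(f"2025 price per TB: ${per_tb_2025[0]:.2f} to ${per_tb_2025[1]:.2f}")
print(f"Implied price per TB today: roughly ${per_tb_today:.0f}")
```

That works out to $37.50 to $45 per TB in 2025 versus roughly $300 per TB now, about a 6.7x to 8x jump.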

-

I've applied for a lot of jobs that I am ideally suited for and could do with my eyes closed, and they have not worked out. Ageism is illegal, but it is more real than anyone wants to admit. I have my third interview today for one that looks even better; I pray it works out, because it would probably be the best one I have applied for. The promising one you referred to is not off the table, but the President wanted to see how the financials looked for 2025 and how January revenue looks before adding a new C-suite resource to staff. On the WireView Pro... when you get around to it, this screenshot might be useful if you run into any complications getting Windows to correctly identify it. I think the key is manually installing the driver in Device Manager, then immediately powering off and unplugging the PSU long enough for the board and everything attached to lose power. It appears there was some sort of delivery failure. I replied and it shows as sent, but you evidently cannot see the reply on your end. Thanks for checking on me, brother. I really appreciate it.

6 points

-

It's not every day I see the editors/writers from PCWorld use their brains: “If you buy Razer’s insane $1337 mouse, I will be very disappointed in you.” I remember the first time I bought a Razer mouse. Inside the box was a letter printed on fancy vellum paper. It opened with, “Welcome to the cult of Razer.” It appears that this isn’t just a cheeky marketing slogan; Razer genuinely means it. Because only brainwashed cult members would pay $1337 for a mouse. It is, in a word, repugnant. In a more accessible word, it’s greedy. In a more all-encompassing and entirely appropriate word, the Razer Boomslang 20th Anniversary Edition is bullshit. Razer is taking pre-orders for the mouse in four days. If you buy one, and I want you to imagine this in the most overbearing and judgmental dad voice possible, I will be very disappointed in you.

5 points

-

The least they could do is pretend they love us and say nice things while they are choking us and pulling our hair.

5 points

-

soooooo i used my last three days of sick leave to get some shit done at home, including computer stuff 🙂 > applied full backplate coverage TG Putty Pro - check! if anyone is interested, you need about 250-300 grams worth of putty to cover the whole backside of an air cooled 5090. > while the gpu was out, i used the opportunity to also install the wireview pro II and replace the gpu power cable (angled back to straight, both seasonic) > good news: both cable and socket were still pristine, not even a whiff of discoloration or burn marks. > good news 2: did a quick max OC test in alan wake at max. settings with the gigachad vbios, max. variance i saw between pins was 1.1 amps (7.5 vs. 8.6) under load, that i can totally live with! > also finally got around to delidding my second 9950X3D and put it under the TG high performance heat spreader with LM > while i was at it, did some dusting and cleaned the tempered glass windows, clear views to the RGB rainbow puke once more 😄 still need to do testing with regards to gpu / cpu temps after the changes, as well as check how the RAM OC was impacted. you might remember that i suspected a suboptimal cpu mount as the reason i couldn't reach my previously stable 8000 setting. fingers crossed!

5 points

-

Hey Guys! On the Z490-H for now until the replacement motherboard arrives. I have also acquired the GPU risers to split the x16 slot into x8/x8 so I can run 2 GPUs off the single slot via bifurcation. The 3090Ti will be coming out of retirement to some degree for Lossless Scaling, but in the interim I did test it conceptually with the 3090Ti as the raster GPU and the 1080Ti as the frame gen GPU. It seems to work pretty well in the couple of titles that I have tested. Allegedly you don't want the raster GPU to be fully saturated, as that increases the time it takes to send the frames to the secondary GPU. Honestly, I am pretty surprised how well it worked given the software's price point. The main games I have tested were Monster Hunter Wilds and Borderlands 4; both games have piss-poor engines. I was able to hit 120 FPS in both. B4 did crash a couple of times, but I was mucking with the settings, as that game can't run without some scaling or FG. I did also try Horizon: Forbidden West, but I should have gone to the DLC area as it's more GPU intensive. For people that don't want to pony up the cash for new GPUs, I think this may be a viable option on the table, much in the way SLI/Xfire used to be for me in the old days. Nvidia still pisses me off though. If I install the 591 driver, the 1080Ti doesn't work; if I install the latest driver for the 1080Ti (581), then the 3090Ti doesn't work. I have to let Windows install 560 in order for both to work until I figure out another way to go about it.

5 points
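For a sense of scale on the bifurcated slot, here is a rough sketch under assumptions that are not from the post: the Z490 slot runs at PCIe 3.0 speeds (what a 10th-gen Core CPU provides), and each rendered frame crosses the bus once as an uncompressed 8-bit RGBA image. How Lossless Scaling actually moves frames may differ, so treat this as an order-of-magnitude check only.

```python
# Rough estimate: can a bifurcated x8 link carry rendered frames from the render GPU
# to the frame-gen GPU? ASSUMPTIONS: PCIe 3.0 signalling (8 GT/s per lane,
# 128b/130b encoding) and uncompressed 4-byte-per-pixel frames copied once each.

def pcie3_bandwidth_gbps(lanes: int) -> float:
    """Theoretical one-direction PCIe 3.0 bandwidth in GB/s, before protocol overhead."""
    gt_per_s = 8.0            # giga-transfers per second per lane
    encoding = 128 / 130      # 128b/130b line-encoding efficiency
    return lanes * gt_per_s * encoding / 8  # bits -> bytes

def frame_traffic_gbps(width: int, height: int, fps: float, bytes_per_pixel: int = 4) -> float:
    """GB/s needed to copy every rendered frame across the bus once."""
    return width * height * bytes_per_pixel * fps / 1e9

link = pcie3_bandwidth_gbps(8)
for w, h, fps in [(2560, 1440, 60), (3840, 2160, 60)]:
    need = frame_traffic_gbps(w, h, fps)
    print(f"{w}x{h} @ {fps} fps: {need:.2f} GB/s of {link:.2f} GB/s ({need / link:.0%})")
```

Under these assumptions, even 4K at a 60 fps base rate uses roughly a quarter of an x8 PCIe 3.0 link, so the frame copies themselves leave headroom.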

-

I can smell it, too. This gal lets a Jensen in the car. Or, is it a Nadella? They smell the same. And, I can feel it.

5 points

-

5 points

-

Hey fellas. Yeah, I’m just burned out on barely gett’n by is all. I’m 35 years old now and have been contemplating life a lot lately. Me and my wife both work full time from home, and life is good in the new house. Bills are paid, but that’s it lol. Things are getting really expensive lately (daughter’s new braces 😬), and the kids will need cars in 2-3 years (I’m broke). I need to do something on the side and make some major moves. About ten years ago I used to buy and sell junk cars, and this was extremely lucrative. There were times when making $2,500 on a good day wasn’t impossible. I have been complacent and living on autopilot for several years now, doing the minimum. The 5090 had to go, though; I needed the funds for a truck + car trailer + winch. In this case, not a truck but close: I bought a clean and well-kept used Tahoe Z71 4x4. Lately I’ve learned that we’ve got to step outside of that comfort zone and take some risks in order to gain something or see change. If nothing changes, nothing changes. Life is hard, none of us make it out alive. 😂

5 points

-

It is definitely overclocking season - I am about to take my new purchase (yes, I went the dumb BGA route and got a 275HX/5090 Lenovo 7i Pro laptop) into the snow. I got a great deal on it with 64GB of 6400MT RAM. I kept haggling with the seller and he kept coming back - 64GB of laptop RAM costs around $500 to $600. However, I got it for $2,800 (before taxes), my max budget. I am hoping I can sell my old 7i (11980HK/3080 (16GB VRAM), 32GB of DDR4 RAM) for around $800 to $1,000 to offset the cost. February is the best time to sell things, because people are going to be spending their tax returns. My area is projected to get around 21 inches of snow at 5°F (-15°C), with a wind chill of -10°F (-23°C). @Papusan I know this snowy weather is tame compared to what you have, but us people (well, most of us) in the Northeast are celebrating. We haven't had snow like this in years; normally our max is 12 inches of snow.

5 points

-

THIS is why I love MSI...... MSI just contacted me about my RMA on the AI1300P to inform me they have zero stock of the newer revision (AI1300P PCIE5) and asked whether the following PSU would be an acceptable replacement: https://us.msi.com/Power-Supply/MEG-Ai1600T-PCIE5/Specification Uh, yes, that will definitely be a suitable replacement!! I'm going to wait till it gets here and then rebuild my system with it, along with the swap to an Ultra 265K, WVP2 and custom 140mm fan cooling for the DDR5.

5 points

-

OK. Enough of the silly gamerboy normie crap. Back to speed trumps everything.

4 points

-

ha not with me they didn't! upgraded all the way from a 6700K to a 9900K in my Clevo machine 😄 suckaZ! and big thx to bro @Prema for that one 😛

4 points

-

i'm 3rd in Time Spy with my CPU/GPU combo... and even in the 7950X + 9060 XT rankings i would be 3rd too, and in the 9950X + 9060 XT rankings i would be 8th 😄 no new CPU needed when u have DDR4 with B-Die, totally optimized 🤤 and just noticed this score was with my old B350 board, i should do a run with the new B550... AMD Radeon RX 9060 XT 16 GB graphics card benchmark result - AMD Ryzen 9 5950X, ASRock AB350 Gaming-ITX/ac

4 points

-

Oh, it's just the waterblock (EK-WB), not the GPU proper. If someone listed a 3090Ti for 70 USD, I would assume it's a scam, be it from the seller's end or my own lol

4 points

-

Which 9070 XT do you have, Brother @Rage Set? (I think you mentioned it before but I do not remember.) I got bored and decided to mess with slower memory speeds to see how fast I could make them go. This is stable, so I will see how low I can get tCL before it unravels. 6400 1:1 feels snappy even though it is a bit slower on read/write/copy.

4 points

-

my dudes, we can consider ourselves lucky to have covered all our bases with regards to hardware. our 4090s/5090s will stay at the top of the pack until 2028, let's just hope they don't go up in flames / melt before then 🤣 boy am i glad that i got my 32TB of pcie4 m.2 ssds plus took care of the ram binning with 10+ kits back in the summer of last year. prices were at rock bottom then ("good old times"). and even though i overpaid for my 5090 at +25% above minimum pricing (3300€ vs. 2650€ incl. 19% VAT and shipping), a whopping two thirds of it (2100€) was covered by the sale price of the 4090 😅 besides, even when the suprim currently IS available, it's offered at 4000+€, absolutely insane... long story short: i guess compared to other unlucky users who are currently planning on building a rig, we're pretty well off, at least for the time being... always good to count and be generally aware of your blessings 🫠 mixed news on the ram tuning front: still cannot reach anything above 7600 stable, but at 7600 i'm now reaching way way tighter timings than before, actually the best i've had thus far overall on this mobo. final results pending, as u all know ram tuning takes quite a while...

4 points
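The percentages check out roughly as written. A quick sketch using only the euro figures from the post (all incl. VAT and shipping):

```python
# Back-of-the-envelope check of the GPU upgrade numbers quoted in the post (EUR).
paid_5090 = 3300        # what was actually paid for the 5090
cheapest_5090 = 2650    # minimum price at the time, per the post
sold_4090 = 2100        # resale price of the old 4090

premium = paid_5090 / cheapest_5090 - 1   # fraction paid above the minimum price
covered = sold_4090 / paid_5090           # share of the 5090 funded by the 4090 sale
net_cost = paid_5090 - sold_4090          # out-of-pocket cost of the upgrade

print(f"premium over minimum: {premium:.1%}")
print(f"covered by 4090 sale: {covered:.1%}")
print(f"net upgrade cost: {net_cost} EUR")
```

That gives about a 24.5% premium (the "+25%") and about 64% of the price covered by the 4090 sale, a little under two thirds, for a net upgrade cost of 1200€.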

-

Cracks in Nvidia's armor starting to emerge.... "Exclusive: OpenAI is unsatisfied with some Nvidia chips and looking for alternatives, sources say" OpenAI wants to move to SRAM-based inference (like Apple uses for their M architecture, FYI) and is exploring alternatives... Google is already turning inward, using their own custom-designed chips.... AMD is starting to chip away at Nvidia's AI market share.... Microsoft is questioning the profitability of AI..... I can smell that change in the air ever so slowly....

4 points

-

5090s are back in stock, shipped and sold by Newegg, reflecting the beginning of the new pricing insanity incoming once they are stocked..... In their defense, Walmart is selling the PNY EPIC 5090 (shipped and sold by Walmart) for $4,299.99, so this is the way things are going for 5090s..... The MSI Liquid 5090 is also listed on MSI's site at $3,699.99....

4 points

-

an angry thunderstorm now and then. @Papusan -21°C is not too terrible. We will get that here in Idaho from time to time. 60mph on a snowmobile is not as much fun when it's that cold though!

4 points

-

Sorry to hear about the adverse weather. Your temperatures look to be about the same as Brother @Rage Set's, and a significant portion of the Eastern US (even the Deep South) is experiencing brutal and abnormal ice and snow, downed power lines, etc. I suspect that Brothers @tps3443 and @electrosoft are also getting hammered pretty severely right now. The Arizona summer heat can be deadly, but Arizona (and most other states in the West) very seldom has severe or catastrophic weather events. Most of the horrible and catastrophic weather events occur in the Central and Eastern states. Brother @Raiderman and I do not see very many temper tantrums from Mother Nature.

4 points

-

I'm going to try a bit more tonight. Luckily, I can unplug a few cables and pull the whole thing out, but it's still a pain to work in due to the water loop and how it's routed. The problem is that work is rampant due to the winter storm, so I just don't have the time to troubleshoot. There was a local listing for an X570 Strix, but it's 3 weeks old so I don't expect to hear back. I think for the time being I'll set up the Z490 system just to prevent myself from making an impulse buy; I can still play with Lossless Scaling with the 3090ti + 5700XT in the interim. It also gives me a chance to play with my Pixel 10 Pro and see how well it handles desktop mode on Android, since Unraid handles most of the grunt work. But that's all just me trying to make lemonade.

4 points

-

4 points

-

Someone used the wrong screw and drilled through the card @Mr. Fox lol what the heck. People are something else, man. I’ve had mine apart like 4 times now. You would have none of these issues though. That’s not a screw that would be included with it either. All the screws in that section do not look like that at all, and they all match.

4 points

-

I feel like if cable makers really didn't cut corners, it would at least put a nice dent in many of these problems. Watching that brand-new Corsair cable be absolute trash, from its loose fit to its readings, was alarming. It seems like Cablemod learned their lesson the first time around with all the issues on 4090 cards, and really came back with a high-quality cable with a snug fit and good amperage distribution. Trash connector that needs perfect conditions to properly thrive. Trash AIBs shaving pennies on quality adapters, even to their own detriment. Trash end users treating it like it is as robust as 8-pin and twisting and pulling it with reckless disregard.... I DO think this connector is here to stay, but I do feel like with a few more refinements it will be declared "safe". lol, I tried to avoid that club! 🤣 lol, well I'm going to let you know it doesn't get any better in your 50s! 🤣 I turned the corner today and my fever finally broke after 4 days, but I know this wet cough and general malaise are going to be with me for at least another week. Speedy recovery, brother! -------------------------------- @Mr. Fox It's like we talked it up..... 3090ti vs 5090. The 5090 is two generations newer and fully 66 to 133%+ faster than a 3090ti, with much of the low end of that range down to an inability to fully utilize the 5090 even at 4K....it would be even worse with a 3090. The 5090 is just a beast. I am glad we were able to get ours at a somewhat sane (in the realm of 5090s) price... --------------------------------- Zotac is first out, letting us know massive price hikes are coming specifically to the 5060 and 5090 and kind of throwing Nvidia under the bus, but they are the reason so.....yeah.....

4 points

-

If you do end up trying another, the only logical model is the Red Devil. You get the highest phase design, 3x 8-pin and, I would say, one of the best and quietest air coolers, but we know you would rip that off ASAP. Even though it is a kick in the performance sack, I wouldn't be averse to running a KPE3090 again if it came to that and a 3090 is where you wanted to go. We didn't realize that was truly the last of a magical time in GPUs. 😞 Based upon how AMD implemented mobile considerations with their effectively transplanted desktop chips, I think you are better off with the 275HX. I have yet to encounter an AMD laptop that doesn't have poor fan tables with fans revving up, versus the three 275HX-based laptops I've now tested. Even the hot box Acer Nitro 15.6" with a 5060 and 275HX, which thermal throttles under heavy load, runs relatively even and quiet vs the Acer 16" AMD OLED, Acer AMD 7325 + 4050 15.6" and my former Raider 7945HX3D. Unless AMD changes something in their mobile implementation, I am going to stick with Intel moving forward. Since the launch of their Ultra chips, both true mobile and desktop parts shoehorned into laptops just run quieter and more evenly for D2D use than AMD....at least in my testing/use cases. We only ended up with a paltry 6 inches of snow. So disappointing..... (Sorry @Papusan! 🤣) I was wondering if you preferred one card over the other, but as Nvidia stock dries up there are plenty of 9070xt cards available even though prices jumped. Hopefully AMD can make some more inroads. lol, thanks! It really is the best model on paper out of the 9070xt lineup for several reasons. I didn't even really give it a serious look till you went with one and I went "Hmmm, if @Rage Set is going with it, maybe there's more to it that I missed," and then read some reviews, watched some videos and realized it is the best 9070xt this time around overall IMHO. Ugh, Thursday night after the gym I had a scratchy throat and itchy sinuses.
I woke up Friday feeling like someone hit me with a baseball bat. High temp, throat feeling full of glass shards. By Saturday, it had moved down into my lungs, my chest was hurting and my nose was flowing like the Nile. Sunday my temp spiked to 103+ and now I have a tight, juicy cough with lots of green stuff that would make even Slimer proud. Took a home test and yep, Flu A. I skipped my flu shot this year too, and this was a nice reminder why I shouldn't skip it. My wife got her flu shot and she picked up Flu A from me, and besides some mild sniffles she's fully functional, while I am coughing and hacking up all types of stuff with spiking fevers going on day 4 and I'm just wiped out.....luckily I have enough energy to at least play FO76 and WoW 🤣 Like you, and I am sure the bulk of us who are married, I dunno how we would survive without our significant others. I will say this though, I will take the flu over Covid any day of the week.....Covid wrecks me in a way no other sickness ever has, and I'm down for the count for 6-8 weeks depending on how close to my vaxx window I contract it, and even after that I am weakened for quite a while. I've had Covid 5x now in the last 6 years (including at "launch" in early 2020). My wife caught it with me last February and it was the sickest she's ever been in her life. In her words, "I now see how this crap can take someone out...." I think one reason I tend to skip my flu shot from time to time is that even at its very worst it is maybe a 5/10 vs Covid for me. Oh, and you're relatively young. You're what? In your 30s? Yes, it gets worse as you get older.... 🤣

4 points

-

If only we could get some moisture here in the PNW. Normally the valleys would be coated with white stuff this time of year, but we have not had any measurable snowfall going back a year. It was, however, 14 degrees this morning in Nampa, Idaho, which would be nice for some OCing.

4 points

-

Hey guys, after looking at the Lossless Scaling app a bit, I'm thinking my next daily driver system should be built around it for my 7900 XTX and 3090Ti, since I'm not too keen on the newer connector and the pricing of it all. Are there X3D variants of Threadripper in the rumor mill? If so, I might start saving up... I got the 512GB of Persistent Memory (Intel Optane 2666MHz, 4x128GB) installed in my C622 server. Gigabyte was kind enough to not include a switch to dedicate it to system memory, and I can't activate it via a Linux VM, so I won't be able to see the RAM pool until tomorrow (family is asleep).

4 points

-

As already stated...MSRP is a fat lie. Nvidia has now stopped subsidizing the MSRP cards. Gigabyte has "improved" the RTX 5070 Ti Windforce OC with a V2 to fit into more of the modern tiny trash boxes. What could go wrong going the cheapo way like greedy Asus? I have seen similar from Asus before. They preferred to cool half the VRAM with nothing more than hot air from the fan on their 1060/1070 Strix models. Older mid-tier models also came with half-baked cooling designs. The worst part with Gigabyte... They did it right with the V1 models. So they "improved" the card for the worse to save a few $ and sell more cards to uninformed people with cute mini PCs. First let em bleed... NVIDIA is being sued for using 500 TB of pirated books to train its AI. Today we learned that NVIDIA is facing a lawsuit filed in the Northern District of California, USA, for using pirated books to train AI models.

4 points

-

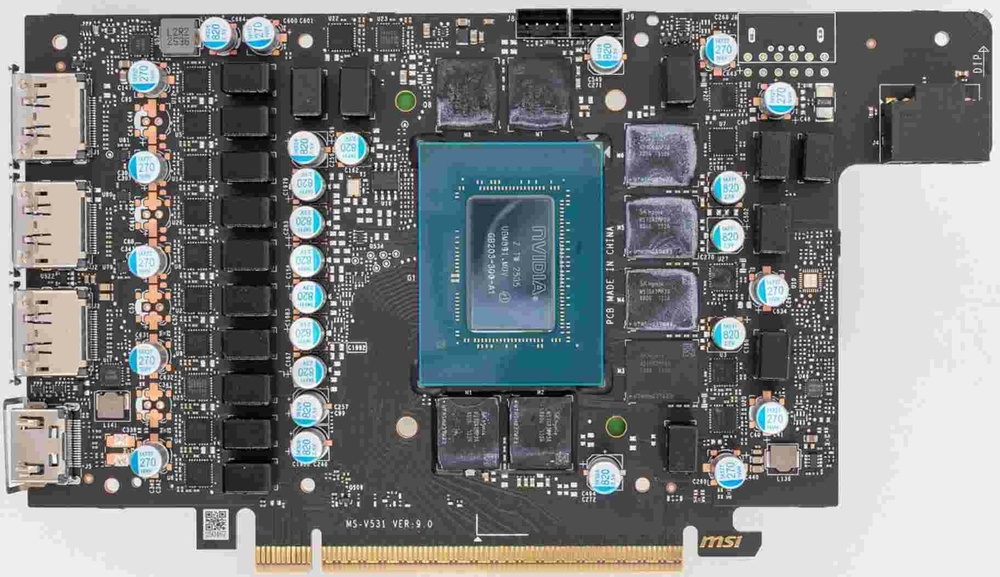

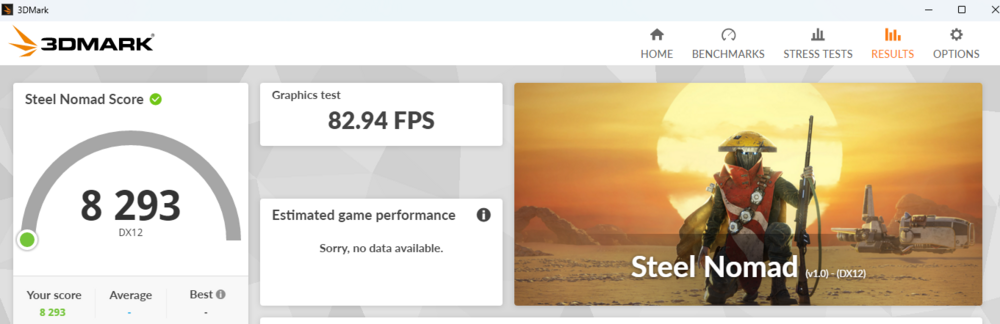

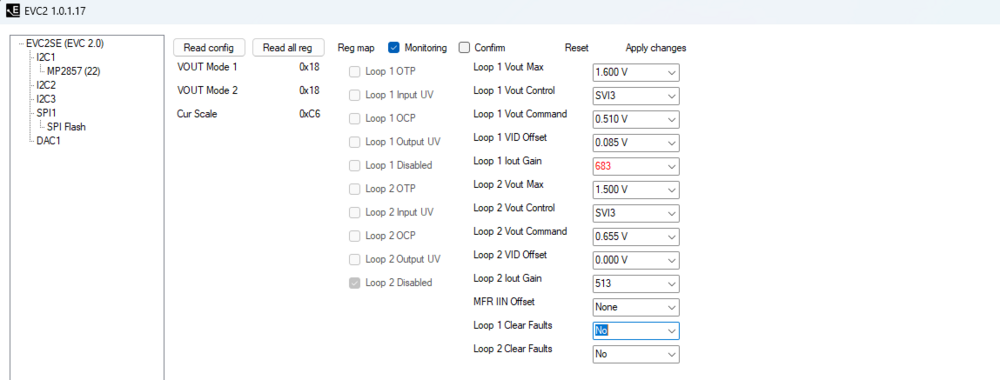

This evening's efforts: IOUT gain was stuck at 300, so effectively I was able to push the card again, to around 650-700W. I wasn't able to change the IOUT gain from 300, so I ran as is. Next time I'll try 250-275. Core 3475, Mem 2764, Voltage 1.158. The card is stuck at 683 again after one of the failed attempts, so I guess that's all for tonight. Wasn't able to breach 3500 core. :(

4 points

-

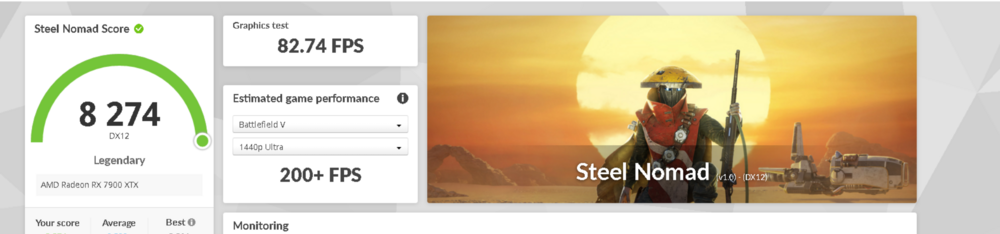

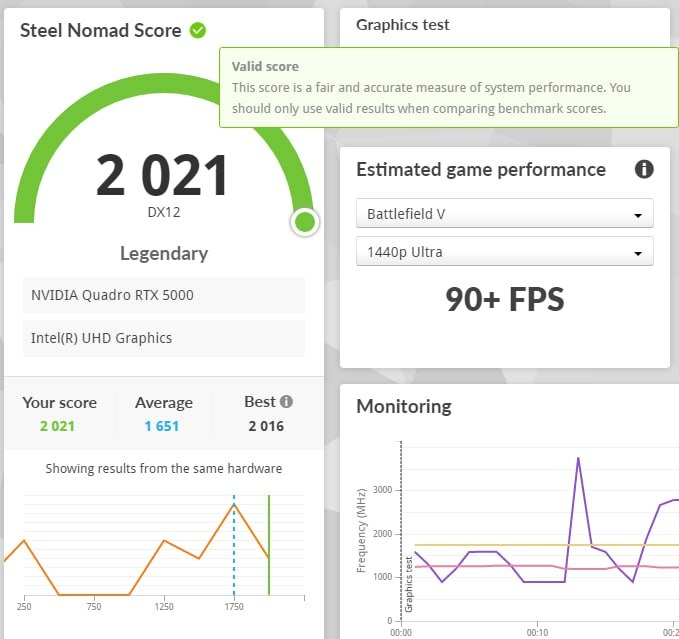

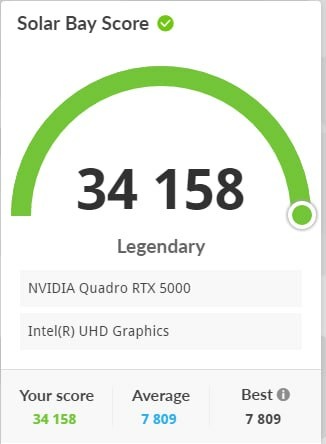

I always make things more complicated than they ought to be, like using 3x PSUs, one for each 8-pin, on the bottom shelf, and an ITX system, since HEDT has been more or less dead to me since the X58 days (though I'm sure there have been options since, poor decisions didn't allow them to be obtainable), so anything beyond ITX or cheap laptops like the M4600 was simply not even considered. Then naturally a UT45 makes perfect sense in my hodgepodge of parts. Since the 5800X3D sips power and is locked, a cheap Thermalright PA120SE does fine for gaming, though if I go the 9800X3D route I could definitely see a second UT45 in my future. During the era when I surfed OCN, AIOs were still called CLCs and were mostly targeted at ITX users, who later got to deal with the pumps dying or tubes coming undone and leaking over everything. I never considered CLCs since they were considered "Cheap Low Cost". Anyway, it feels like I have been typing this post for a week now. I was making progress when Perplexity recommended changing the power plan in Windows. Ever since then I have not been able to get the EVC2SE to change the register for the IOUT gain, so it's stuck at 683, which is the default. I tried a couple of fixes, but I am going to call it a night. Core: 3450, Mem: 2764. Now my clocks are back to stock, and until I figure it out, I can't get them past 2850 in Steel Nomad, a far cry from 3450. I was working on getting 3450 fully stable before moving on to 3500 and then seeing what I could do about the memory. With a 16°C ambient, 21°C idle, and 30°C peak load temperature, I feel like I still have some performance to get out of the 7900 XTX. Hot spot was "bad" from a differential standpoint (a 30°C delta at its peak, so it peaked at 60°C), but that is still well within margin for benching (105°C throttle). Since it wasn't working before, I tore down the loop and took apart the GPU to reflow the solder. It worked great after that. I also checked on the LM application and all was well on that front as well.

4 points

-

In for a penny, in for a pound eh @Reciever? 🤜 Very nice! You really embraced water cooling nicely....I wouldn't be surprised to see a chiller on premises in the near future at this rate.... 😁

4 points

-

4 points

-

Dropping tRFC to 388 shaved 1 ns off memory latency, but it was otherwise unremarkable by other measurements. There is very little difference between 6400 1:1 and 8000/8200 2:1 on Ryzen due to architectural limitations. Adding 3D cache makes it even less relevant. y-cruncher is about 1.0 second slower at 6400.

3 points
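One way to see the architectural limit is to compare the memory, controller and fabric numbers side by side. This sketch is an illustration under assumptions that are not from the post: dual-channel DDR5 (128-bit total bus), FCLK at 2000 MHz, an Infinity Fabric read path of 32 bytes per FCLK per CCD, and a tRFC of 388 set at DDR5-6400.

```python
# Sketch: why DDR5-6400 1:1 and DDR5-8000 2:1 land close together on Ryzen (AM5).
# ASSUMPTIONS (not from the post): dual-channel DDR5 = 16-byte total bus,
# FCLK = 2000 MHz, 32 bytes of read bandwidth per FCLK per CCD.

def clocks(mt_s: int, ratio: int) -> tuple[float, float]:
    """Return (MCLK, UCLK) in MHz for a DDR data rate and MCLK:UCLK ratio (1 or 2)."""
    mclk = mt_s / 2            # DDR: two transfers per memory clock
    return mclk, mclk / ratio

def dram_bandwidth_gbs(mt_s: int, bus_bytes: int = 16) -> float:
    """Peak DRAM bandwidth in GB/s for a data rate and total bus width in bytes."""
    return mt_s * 1e6 * bus_bytes / 1e9

def fabric_read_gbs(fclk_mhz: float = 2000, bytes_per_clk: int = 32) -> float:
    """Peak read bandwidth of one CCD's fabric link in GB/s."""
    return fclk_mhz * 1e6 * bytes_per_clk / 1e9

def trfc_ns(trfc_cycles: int, mt_s: int) -> float:
    """Convert a tRFC value given in memory clocks to nanoseconds."""
    return trfc_cycles / (mt_s / 2) * 1000

for rate, ratio in [(6400, 1), (8000, 2)]:
    mclk, uclk = clocks(rate, ratio)
    print(f"DDR5-{rate} {ratio}:1: MCLK {mclk:.0f} MHz, UCLK {uclk:.0f} MHz, "
          f"DRAM peak {dram_bandwidth_gbs(rate):.1f} GB/s")
print(f"One CCD fabric read ceiling: {fabric_read_gbs():.1f} GB/s")
print(f"tRFC 388 at DDR5-6400 = {trfc_ns(388, 6400):.2f} ns")
```

Under those assumptions, both configurations offer more DRAM bandwidth (102.4 vs. 128 GB/s) than one CCD can read through the fabric (64 GB/s), while 2:1 halves the memory controller clock. That is consistent with the two ending up close together.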

-

I have the PowerColor Red Devil 9070XT. I am trying to convince myself not to buy a waterblock for it. I am also limited by RAM, so my goal of getting into the top 15 in Time Spy (for my CPU/GPU) might be out of reach.

3 points

-

Hi, many thanks to Darius. I'll pick up the card on Monday and continue building and developing. The first small subproject is the internal and external cable, so that an internal display and an external display can be connected. I've already developed the schematic and, with Darius's help, I can now determine the exact length of the FlexPCB and start routing once I know the exact geometry. The cables should work for all future generations. The second project will be the cooling system. In the short term it will be water cooling, but there is also the option of making a vapor chamber like the original. However, I don't know the exact costs for that yet. That's all from me for now. Thanks for your support.

3 points

-

Yep, more than what they do for their GPU division.....

3 points

-

PCWorld generally is not a good resource for useful technical information. I am not interested in the biased thoughts and personal opinions of their editors. I only care about what I think and seeing how many others share my opinions. It used to be a good source, but not for a long time. They do not allow readers to comment on their reviews and articles, which delegitimizes them in my opinion. I think any media outlet that does not allow readers to comment to support or rebut has an agenda of some sort and they want to control the narrative. I posted my thoughts on the TweakTown article yesterday.

3 points

-

I'm very happy to see a fellow community member using Lossless Scaling in the exact fashion I do. This is something I've been promoting for a while, and I'm happy to see someone else interested in using Lossless Scaling to relive the days of SLI/Crossfire. This really is the way to better performance in games if you're super hungry for those frames but don't want to spend anything on new hardware. We can instead use what we already have! Cluster computing to increase performance in games is awesome. I use a combination of Lossless Scaling + Apollo + Moonlight to use two machines to render a game. Being able to take advantage of the combined performance of 2 computers to run your games is awesome! As an experiment, I think I'm going to see if a Tri-SLI/Trifire setup is possible this way, as I haven't tried that sort of setup yet. I got a Radeon 9070 XT and a Radeon 9060 XT from Micro Center just before prices started to skyrocket, so I was able to get some hardware at those sweet discount prices. The 9060 XT was for my dad, but I'll be installing the 9070 XT in my desktop sometime soon when I find extra time. Currently, my desktop has a Radeon 6950 XT and an RTX 3080. I'm going to install the 9070 XT in my desktop and use that as my new render GPU, then use the RTX 3080 as my frame gen GPU. I'm going to install the 6950 XT in my second desktop (which currently has a GTX 1060), pass those frames to my second desktop using Apollo + Moonlight, then perform another layer of frame gen on that input using a second instance of Lossless Scaling running on that machine. BAM! Triple GPU rendering baby! With some really awesome newer monitors coming out, I'm going to want the 1000 fps experience in every game, which should be achievable through a triple GPU setup using Lossless Scaling + Apollo + Moonlight. I actually really want the Thermaltake Core W200 case for my next build, which I will inevitably do sometime in the future, but it seems it's nowhere to be found anymore.
I may have to just stick with 2 separate cases. Does anyone know of any other cases that can fit 2 ATX motherboards?

3 points

-

Your ambient temperature in this photo is 10°F cooler than mine. Congrats on the PSU upgrade.

3 points

-

Replacement PSU has finally arrived. This box is a chonker.....thank you MSI for the upgrade, especially considering the original AI1300P was an open box Amazon Warehouse pickup for $144.55 to my door. Every (and I do mean EVERY) RMA I've ever had to deal with at MSI has gone perfectly. The only knock would be the turnaround time, which I could see being a problem if it is a critical component. If they had a cross-shipping service for RMA parts (not sure if they do?), they would be near flawless. Time from RMA generated to replacement/repair received is on par with others I've had before. Timeline: RMA generated 1/8; original PSU shipped 1/9; marked received by MSI 1/22; out of stock and replacement agreement outreach 1/23 (Friday); replacement shipped 1/26 (Monday); new unit received 2/2. I will continue to recommend MSI for many reasons, one being their service and support. ------------------------------------------------------------ Testing out the lower 265K + Z890 AYW tuned up at 8000 rock solid with the Red Devil 9070xt and putting an open box Corsair SL1000 SFX PSU through its paces. I can see why the Corsair SL line of SFX PSUs are the GOATs. Very cool, quiet, and the very thin, soft, properly measured modular cables make this thing a class above the Lian Li and Cooler Master SFX models I've tested so far. I'll be using the Corsair in my own personal SFX builds moving forward.... What makes the Red Devil stand out is how quiet the fans are even under load. That, along with very low coil whine, makes this thing crazy quiet even running Time Spy or testing WoW/FO76. Out of the box, clocks boosted up to 3275MHz, ~100-150MHz higher than the Gaming 9070xt out of the box. It is also much quieter (both coil whine and fans under load) than the Gaming 9070xt, but this is to be expected since the Red Devil has a much better heatsink. ~30k stock is about right for the three 9070xts I've tested so far, which were all 3x 8-pin designs.
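For anyone weighing the turnaround, the timeline breaks down like this. A minimal sketch using Python's standard `datetime`; the year 2026 is an assumption, inferred because 1/23/2026 falls on a Friday and 1/26/2026 on a Monday, matching the weekdays in the post.

```python
from datetime import date

# RMA milestones from the post. ASSUMPTION: year 2026, inferred from the weekdays given.
milestones = [
    ("RMA generated", date(2026, 1, 8)),
    ("Original PSU shipped", date(2026, 1, 9)),
    ("Marked received by MSI", date(2026, 1, 22)),
    ("Replacement agreed", date(2026, 1, 23)),
    ("Replacement shipped", date(2026, 1, 26)),
    ("New unit received", date(2026, 2, 2)),
]

# Days elapsed between each pair of consecutive milestones.
for (prev_name, prev_day), (name, day) in zip(milestones, milestones[1:]):
    print(f"{prev_name} -> {name}: {(day - prev_day).days} days")

# End-to-end turnaround from RMA creation to the new unit arriving.
total = (milestones[-1][1] - milestones[0][1]).days
print(f"Total turnaround: {total} days")
```

That comes to 25 days end to end, with the inbound leg (1/9 to 1/22, 13 days) the longest, which is the part a cross-shipping program would mostly eliminate.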
-------------------------------------------------------------------- I'm no fan of AIBs overall, but they can only sell what Nvidia gives them, and without the OPP program in place from Nvidia, you have the perfect storm of limited quantity + higher prices overall right from Nvidia. It is disingenuous to keep comparing AIB pricing to Nvidia MSRP pricing. The correct comparison will always be each individual AIB model's initial MSRP versus its pricing now. With the lack of supply, I wouldn't be surprised if many of these limited edition cards are nearly impossible to get, especially with small runs. 1000 Matrix cards? 1300 Lightning cards? That's nothing, and they get snapped up pretty quickly, especially the Lightning cards. I had zero desire for a Matrix; that Lightning is much more tempting, but I still wouldn't drop $3k+ on a 5090. If you're in the market for a GPU right now? Whew, I don't know what to tell buyers. AMD is still the best value atm even with inflated prices. I'm not a huge fan of the Challenger 9070xt, having owned one briefly. Compared to the Gaming 9070xt and Red Devil 9070xt, it felt light and cheap, only had 2x 8-pin leads and clocked much lower out of the box than the other two, but bang:buck it is at this moment the best buy at $699.99 at MC; everywhere else it is now $719.99. When it was $569.99 at MC, that was just a killer deal......

3 points

-

Brother @Papusan it looks like there is a solution to use the Matrix BIOS on your Astral. I am using it on my Solid OC and it is the best one. https://www.overclock.net/posts/29553948/

3 points

-

Employers that do not value their employees often view them as a liability or overhead expense rather than an asset. Companies like that do not deserve to be profitable or successful. They deserve the same treatment as the employees they abuse.

3 points

-

Hopefully the idiots like Jensen, Lisa Su, Nadella and the rest of the AI cartel will be the first robot-murdered human beings when the machines take over.

3 points

-

Definitely interested, but at this stage it would be logistically difficult to set up without dumping the heat back into my room. One of the family members may be moving out and getting their own place next year; if we keep this place, I can put basically all the heat generation and removal in that room and run some longer hoses and pumps. If we get a different place, then I'll just look at getting a bigger bedroom. It would also give me some time to go through what I have for GPUs and start a passive search for more waterblocks to replace the stock heatsinks I have, as I already know I'm going to stay on water. The 7900 XTX is purring at 3.1GHz for now; I think I'll need to use the EVC2SE for 3.2GHz. It's crashing at 3150, but I'm hoping that's just due to sudden spiking outside the power limit. Hopefully I can push 3500 for synthetic benching.

3 points

-

Initial test with the ASUS Z890 AYW and a "new" open-box 265K:
Installed the newest BIOS (the one on the board was from May 2025).
Booted to G4 9200 with no problem.
Has no problems doing G4 8667.
Unlike the first open-box 265K, the new one booted right up at G2 8667 on stock settings. The old one wouldn't boot past 8200 on auto, and even pushing voltages up to ~1.45 V, it couldn't do more than 8400. For 8400 to pass, the old 265K required 1.41/1.40. It flat out wouldn't POST at 8600 no matter what you threw at it.
The new open-box 265K passed G2 8667 on auto settings with no problem. So far, just testing on the Z890 AYW, the new 265K will boot up to G2 8800 on auto but won't POST at 8933, so I will give it some juice later. Next I'm moving the new 265K over to the Strix, as the $95 Z890 AYW has more than met my expectations so far. For 99% of users, that first 265K will be absolutely fine and run their 6000-8200 memory just fine, but for my criteria it is a dud.3 points

-

I haven't seen an A02 version of the board yet; I've only ever seen A01. I assume that board was returned because of that. I had a board from Amazon that was terrible. The NitroPath design ASUS is using has made 4-DIMM boards viable now. I wish they had done some magic like that for the 2-DIMM boards. $300 for a Z890 AYW and a 265K is absolutely insane.3 points

-

I'm thinking of migrating away from Win 11 to a Mac Mini/MBP 14 because of choppy video playback and the number of things each monthly update breaks, even on Copilot-certified hardware. DaVinci Resolve 20.3.x is broken, and the December update made it much worse. Even with an NVIDIA RTX 4070S, playback is choppy with FHD 30/60 FPS footage. I had to edit the same video on my phone with iMovie. @Mr. Fox, is Adobe Premiere affected? I couldn't find any threads online. I'm thinking of switching from DaVinci Resolve Free to Premiere Elements 2026 and Photoshop 2026; Premiere Pro is overkill for me. I checked with other Resolve Studio users who have NVIDIA RTX cards, and they reported the same issue. Adobe allows its software to be used on iPhone, Mac and Windows with a single-user license. @Mr. Fox @Papusan @jaybee83 @Dr. AMK @Prema @saturnotaku I'm excited to announce that I crossed 1K subs on YouTube last week. https://www.youtube.com/channel/UCpCSkED9eOAQCVFEORQ_qJQ 3 points

-

Lol, nothing's worse than multiple teardowns trying to fix cooling issues, but it all ended up great, and it makes you wonder what thermal pads ASUS is using..... What thermal compound are you using on the GPU core? Nova Lake looks so good. Hopefully they stick to their "late 2026" launch and don't drag it into 2027.3 points

-

Hi, I have a ZBook 17 G5, which has an identical chassis to the G6 version. I'll quickly list the pros and cons that matter to me (you can ask me about other stuff too):
PROS:
- Last workstation laptop to have a Blu-ray player, which can be swapped out for an HDD. Not available on the 15-inch version.
- Last workstation laptop to have a standard MXM 3.0 connector. Not available on the 15-inch version. This means upgrades up to an MXM 4090 are possible.
- All-metal chassis. Feels very solid, with rounded edges and zero flex.
- Screwless maintenance hatch gives access to 2x RAM slots, 2x SATA/NVMe M.2, 1x 2.5-inch SATA drive, the DVD player, and Wi-Fi + WWAN.
CONS:
- Big screen bezels.
- The other 2 RAM slots and the 3rd NVMe slot are behind the motherboard, meaning you need to remove the entire motherboard to access them (the dumbest workstation design I know of).
- Okay keyboard, kind of shallow compared to my Precision 7720.
- RAM limited to 2400 MHz by the BIOS, with no option to change it.
- Undervolting only possible with the v1.12 BIOS from 2019, and downgrading is a pain.
- Best CPU option is the 9980H (non-K). The 8950HK is also possible, but it's 6 cores.
Currently running the 8850H undervolted -140 mV, with boost unlocked to 4.4 GHz, a 60 W power limit, and 75 °C in games.3 points

This leaderboard is set to Edmonton/GMT-07:00