Posts: 467

Days Won: 4
Everything posted by Clamibot

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Yeah, the guide I wrote is more for maxing out gaming performance than benchmarking. It'll be really useful when I eventually get a 480 Hz monitor. I really like how these new ultra high refresh rate monitors look so lifelike in terms of motion clarity. One of my good buddies has joked on multiple occasions that Icarus keeps flying higher (referring to me) whenever I get a new even higher refresh rate monitor. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

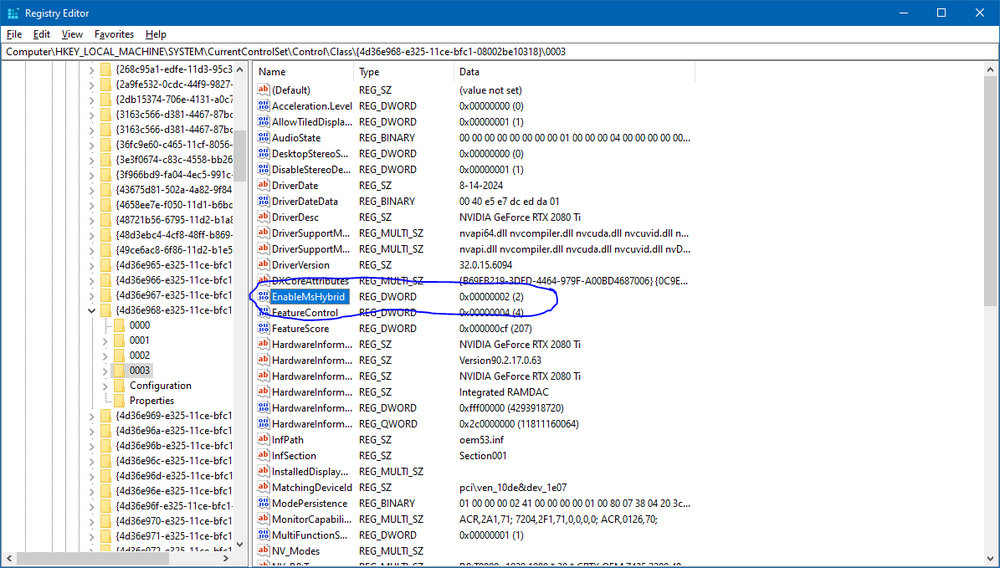

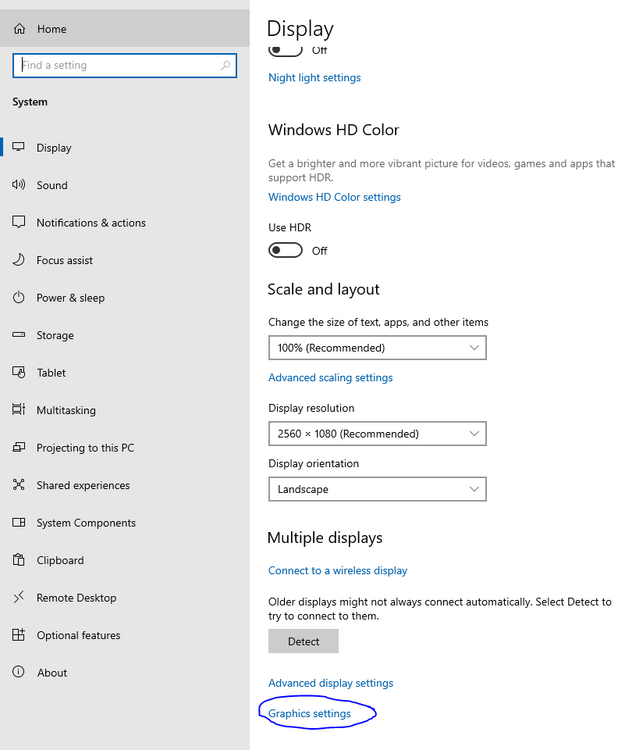

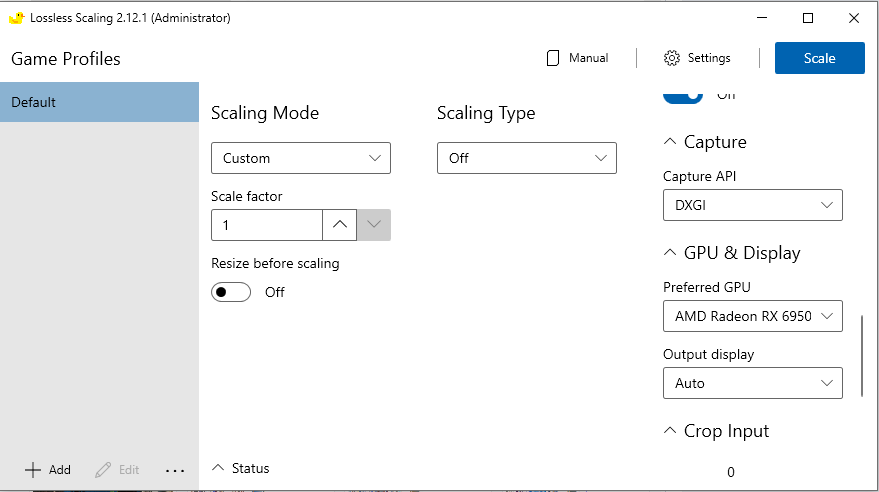

I don't have any SLI capable motherboards, but I can offer you an alternative if you can't get SLI working. You can use Lossless Scaling instead to achieve pseudo SLI with much better scaling in the worst case. I posted some instructions on how to set this up a while back on this thread. You can even use a heterogeneous GPU setup for this and it works great! -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Looks like the 9070 XT is a really good card. It's super enticing since its MSRP is only $20 more expensive than what I paid for my 6950 XT about 2 years ago, and it's a big upgrade over the 6950 XT. I know this is FOMO, but I think I'd like to get 2 of these before tariffs hit and relive the days of Crossfire, but with near perfect scaling through Lossless Scaling. I'd be set for a very long time with 2 of those cards giving me a worst case scaling of +80% in any game. I have come to like AMD graphics cards very much after getting used to their idiosyncrasies and learning how to work around those, and I plan on only buying AMD graphics cards in the future. They are a better value than Nvidia and offer superior performance vs their Nvidia equivalents at all performance tiers, at least in the games I play. They work pretty well in applications I use for work too. Usually I do upgrades mid generation and do so once every 3 GPU generations at least, but I may make an exception this time if prices on everything are going to skyrocket. Makes me wonder if the tariffs are just a ploy to jump start the economy by inducing FOMO in everyone to buy stuff up now, therefore drastically increasing consumer spending. I hope this is the case but am prepared for the worst. I'm no stranger to holding onto hardware for a long time as that is already my habit, but it's rare for me to upgrade so quickly, and it kinda feels like a waste of money if I don't need it. However, I also don't want to pay more later, so it may be better to just eat the cost now. -

The most powerful CPU supported by this laptop is an i9 10900K. Depending on your workload, it may be worth upgrading to, as the 10900K is a 10 core CPU vs the 8 cores in a 10700K. That's a 25% increase in core count, so a 25% increase in theoretical multicore performance. The RTX 3080 is the most powerful GPU that can be installed in this laptop. Unfortunately, Clevo abandoned this model really quickly and never made any further upgrades available. That's really sad as I got this laptop specifically for its upgradeability. Given the cost of a GPU upgrade (since a new heatsink is also required), it may not even be worth upgrading this laptop (not that I need one right now, but still). You can overclock the 2080 Super fine in this laptop. I can do a 10% overclock, which is the max I can do without the GPU drivers crashing, and the heatsink is able to adequately cool the GPU. As for the CPU side, it is possible to run a 10900K full bore at 5.3 GHz all core depending on workload. I can run that speed while playing Jedi Fallen Order, which becomes a very CPU intensive game when you have insanely high frame rate requirements like me. I have about a 200 watt power budget for the CPU side before it starts thermal throttling. A few things to note about my CPU side cooling in this laptop: I got the laptop from zTecpc, so it was already modded from the factory. I also use a Rockitcool full copper IHS, which is flatter and has 15% extra surface area over the stock IHS, allowing for improved heat transfer. I also use liquid metal on the CPU, both between the CPU die and IHS and between the IHS and heatsink, so basically a liquid metal sandwich. Also, this laptop does have a USB-C port with DP support. It's right next to the USB-A port on the right side of the laptop. You should see a strange looking D above it.
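For anyone who wants to check the core count math, here's a minimal sketch. The function name is made up for illustration, and it only computes the theoretical ceiling. Real multicore gains depend on clocks, power limits, and how well the workload scales across cores.

```python
def core_count_gain(old_cores: int, new_cores: int) -> float:
    """Percentage increase in core count (a theoretical upper bound on multicore gain)."""
    return (new_cores - old_cores) / old_cores * 100.0


# 10700K (8 cores) -> 10900K (10 cores)
print(f"{core_count_gain(8, 10):.0f}% more cores")
```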

-

I'm sorry to hear you got hurt but am glad you're ok. I wish you a speedy recovery! Like me, you are still very young so you will most likely make a quick full recovery along with some physical therapy. Scratch that, you WILL fully recover. Mind over matter. A positive attitude makes a world of difference. Although you don't know any of us here on the forums in real life, we're still here for you and care about you. Good luck in life brother!

-

Anyone want a robot dog? https://www.aliexpress.us/item/3256805736266551.html?src=google&pdp_npi=4%40dis!USD!475.88!433.05!!!!!%40!12000035049332711!ppc!!!&gatewayAdapt=glo2usa

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Well that would explain why I can't get wifi working with my Asus Maximus Z790 Apex Encore no matter what I do. That's been making me feel like an idiot, but that would make total sense as the reason why it isn't working for me. Fortunately that is also the only component that doesn't work, and I have a few USB wifi adapters laying around, so I'm just using my wireless AC one. It works well enough. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Clevo is going back to making modular laptops again? Awesome! Or is that a Tongfang model? Laptops just keep pulling me back in. I can't get away from them because the allure of portability is impossible to resist. Having said that, I also don't have a tolerance for laptops that are not modular, so I wouldn't buy such machines anyway. It takes something special like this to pique my interest, and it piques my interest HARD! Let's hope we get a proper 18-19 inch DTR with that modularity + external water cooling. That'll be fun for benchmarks and awesome for max performance in an ultraportable form factor (yes I consider 18 inch laptops ultraportable). Speaking of which, I'll need that special water cooling heatsink for my X170 to perform an upgrade to the RTX 3080. That should be interesting. -

I would say if you're going to replace the Flex ATX PSU with a Pico PSU, then being able to house 2 of those HDPlex 500W Pico PSUs would be awesome as that would allow for very high power builds using a dual PSU config.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

If the 5070 truly shifted performance up by 2 performance classes vs the previous generation, then the pricing seems a bit easier to swallow, but I'm still not going to allow them to condition me to higher prices. A 70 class card should still not be that expensive, but I digress. I'm conditioned to Pascal era prices. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Now just gotta wait for the Clevo X580 reveal, whenever that is. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Happy new year my friends! -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Looks like Clevo is starting to pull their heads out of their butts. Still soldered crap, but it's a massive upgrade over the X370 abomination that is completely undeserving of the Xx70 moniker: https://videocardz.com/newz/clevos-x580-next-gen-laptop-specs-leaked-arrow-lake-hx-cpu-and-geforce-rtx-50-gpu This is a nice 18 inch Clevo DTR, so it's good to see them starting to go back to what they once made. It doesn't have an upgradable CPU or GPU, but they increased the drive slots from 3 back to 4 and also increased the RAM slots back to 4. Gives me hope that Clevo may return to full socketed models one day. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Merry Christmas everyone! This is my most favorite holiday of the year! Bummer it only lasts one day, but we still have about a good 6 hours left (at least where I'm at)! -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

I just swapped my 144 Hz screen on my X170SM-G with a 300 Hz one. It was a pain in the butt to find the display cable for it, but it was worth it. I ended up finding the part on Aliexpress and then waited around for the cable to arrive. I just did the panel and cable swap today. I also modded the screen to make it glossy. It looks amazing! I have a nice glossy 300 Hz screen for my X170SM-G now! The colors are better than on the 144 Hz screen and everything looks so much smoother on the 300 Hz screen (as it should). I think I'm going to keep going higher and higher with my screen refresh rates as time goes by. My ultimate goal regarding screens is to acquire one where motion on it looks like real life (super duper ultra smooth). The 220 Hz screen I have for my desktop pushed me to get this 300 Hz one for my laptop. I'm very happy I got it! -

This is awesome! Now we just need a version of this for Clevo laptops. The upgradeability of my SM-G is begging to be utilized.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Also if anyone needs extra cotton swabs for applying liquid metal, they're these Japanese ones: https://www.amazon.com/Tifanso-Cruelty-Free-Biodegradable-Chlorine-Free-Hypoallergenic/dp/B07R8B93GL/ref=asc_df_B07R8B93GL?mcid=e042f9f5c5c2392481644c9da3b526ea&tag=hyprod-20&linkCode=df0&hvadid=693127596188&hvpos=&hvnetw=g&hvrand=14693873930929850647&hvpone=&hvptwo=&hvqmt=&hvdev=c&hvdvcmdl=&hvlocint=&hvlocphy=9026808&hvtargid=pla-757827286496&th=1 Don't waste your money on ordering extra swabs from Thermal Grizzly. They're just doing an insane upcharge on the swabs I mentioned above. The swabs are "special" in the sense that they're more densely packed than q-tips, which helps a lot with spreading the liquid metal, but they're just regular cotton swabs otherwise. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

I'm very interested in your end results! I just bought some Conductonaut Extreme since I used the rest of my Conductonaut on the build I did for my buddy over the weekend. This liquid metal here looks like it has even better thermal conductivity. -

Pull the cable straight up using the black tab that's attached to it. This is the eDP cable. You'll need to pinch that black tab with your thumb and index finger. It should lift off pretty easily once you pull straight up.

-

New Member! My Alienware Collection

Clamibot replied to KOVAH_ehT's topic in New here? Introduce Yourself

I love seeing people being passionate about the things they love and enjoy. This is quite the collection you have! I used to be super into Alienware laptops as a kid and it was my dream to own one. I finally got an Alienware 17 R1/M17X R5 when I was in high school and really enjoyed it. I upgraded the GPU to a GTX 1060 and I still use it to this day alongside my Clevo X170. The old Alienwares were built to last! My most favorite backpack ever is my Alienware Vindicator backpack I got in 2015. I've used the crap out of it and the rubberized face is peeling off, and my mom snapped one of the zippers by accident since the rubberized zipper tips have become quite brittle, but the rest of the backpack itself is still in really good shape. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

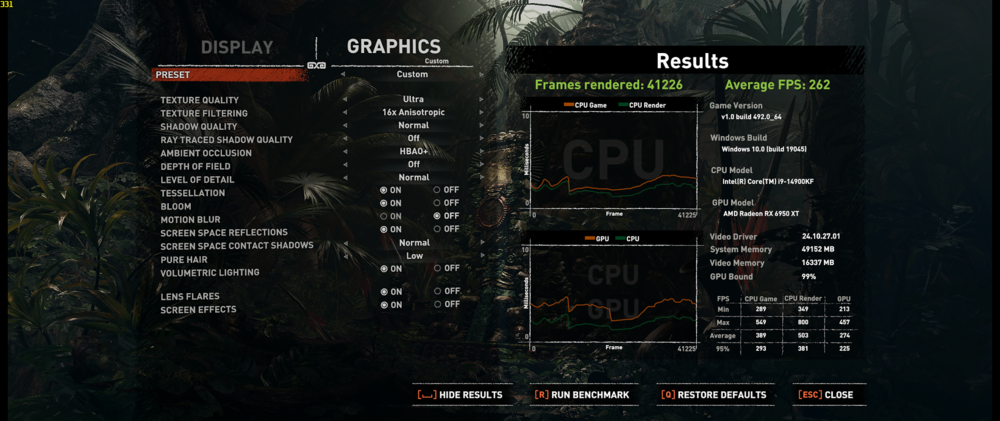

Yep, high binned 10900Ks can do some pretty amazing all core speeds if they're kept cold enough. The cold also significantly reduces their voltage requirements and power draw, further increasing your overclocking headroom. You saw the results that just a TEC is capable of getting out of this chip. I was able to do 5.5 GHz on mine in games initially but could never keep the chip cold enough to keep it 100% stable. Any temperature spike too high and the machine crashed. One thing I really like about the 14900KF system I just built last weekend is that the system doesn't crash if I overclock just a little too high. Programs crash instead, which makes the trial and error a lot quicker since I don't have to wait for system shutoffs and reboots every time. I do want to get the V2 of the MasterLiquid ML 360 Sub Zero, called the MasterLiquid ML 360 Sub Zero Evo, as that will enable me to continue my sub ambient overclocking adventures with the 14900KF. Funnily enough, Skatterbencher did some overclocking using a TEC on almost my exact same setup (he used a 14900KS instead of the KF) and got really good results out of it. He was able to easily do 6.2 GHz all core in Shadow Of The Tomb Raider. Video if anyone is interested: -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

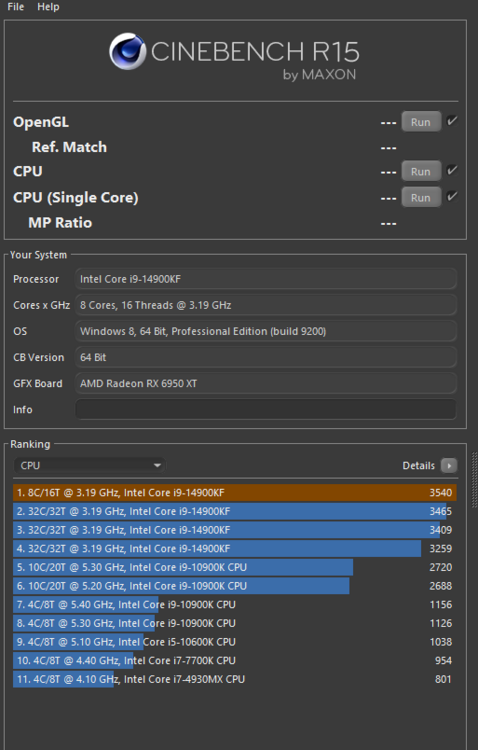

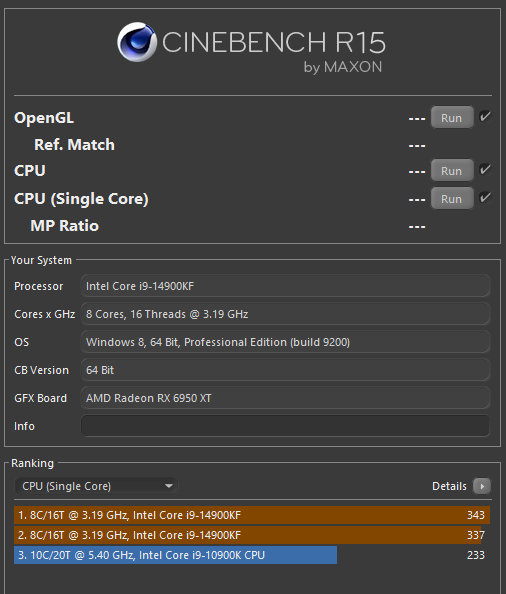

I'm using Windows 10 22H2. I'm specifically using the WindowsXLite version for maximum performance. Cinebench R15 is able to use the E cores, just not at the same time as the P cores. I can make it use either core type, just not both at once. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Yes, the cooler sucks until you enable the TEC. There are 2 different control center applications you can use for it. You can either use the Intel official Cryo Cooling software: https://www.intel.com/content/www/us/en/download/715177/intel-cryo-cooling-technology-gen-2.html Or you can download the open source modded version that doesn't contain the stupid artificial CPU model check, which allows the TEC to work with any CPU: https://github.com/juvgrfunex/cryo-cooler-controller I prefer the open source version. Once you get either one of these applications set up along with the required drivers to make them work, just enable the TEC and watch your CPU go brrrrr (literally, the CPU can get pretty cold). If you use the Intel official version, I'd recommend setting it to Cryo mode for daily driving, and enabling Unlimited mode only when benchmarking in short bursts. If you use the open source version, setting the Offset parameter to positive 2 is equivalent to running Cryo mode on the Intel official software, and setting that Offset parameter to a negative number is equivalent to running Unlimited mode on the Intel official software. Once you enable the TEC, the cooler is good for a CPU power draw up to about 200 watts before you start thermal throttling. I also used liquid metal between the CPU die and IHS, and also between the IHS and the cooler coldplate nozzle. Note: DO NOT use liquid metal between the IHS and coldplate on the MasterLiquid ML 360 Sub Zero if your IHS is pure copper. Use nickel plated IHSes only! If you use a pure copper one like I did, the liquid metal will weld both copper surfaces together over time with the TEC active, as the colder temperatures from the TEC coldplate and the heat from the CPU accelerate the absorption of the gallium from the liquid metal into both copper surfaces, which eventually bonds them together. Alternatively, you can pre treat both copper surfaces with liquid metal to prevent this from happening.
Stupid me, now I can't get the cooler off my golden 10900K. It really needs a repaste.😭 I was able to do 5.4 GHz all core in games on this thing using the TEC, 5.6 GHz single core boost on my particular chip. It can hold all core speeds over 5.4 GHz indefinitely as long as the temperature is kept below 140°F (60°C). -
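If your monitoring tool reports in Fahrenheit, here's a rough sketch for checking readings against that 60°C ceiling. The function and constant names are hypothetical, and the limit is just the figure from my particular chip, not a general spec.

```python
STABLE_LIMIT_C = 60.0  # assumption: the ceiling quoted above for this one chip


def fahrenheit_to_celsius(temp_f: float) -> float:
    """Convert a Fahrenheit reading to Celsius."""
    return (temp_f - 32.0) * 5.0 / 9.0


def within_stable_limit(temp_c: float, limit_c: float = STABLE_LIMIT_C) -> bool:
    """True while the reading stays strictly below the limit."""
    return temp_c < limit_c


reading_f = 131.0  # example reading, not a measured value
reading_c = fahrenheit_to_celsius(reading_f)
print(f"{reading_c:.1f} C, within limit: {within_stable_limit(reading_c)}")
```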

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

5.8 GHz P core only all core run in Cinebench R15: 6 GHz P core only single core run in Cinebench R15: Shadow Of The Tomb Raider Benchmark with the 14900KF at 5.8 GHz all core: I can do 240 fps raw on this system, mwahaha! -

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

I'm using the BIOS profile that was on the motherboard when I received the parts. Also I'm benchmarking using Cinebench R15. Also funnily enough, my system feels significantly more responsive and snappy with the E cores disabled. I haven't done any official benchmarks for this, but it just feels faster. Looks like it'll still serve as a good stability test for me then. I've always used Cinebench R15 to test my overclocks. If they pass a benchmark, they're pretty much always stable in games.