Everything posted by electrosoft

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Are they doing an 18" version? A 16 inch screen makes it DOA for me. I love that NH55 (especially now with the BIOS unlocking), but the screen size is the only negative on it.

-----

This is my favorite spot to benchmark WoW in the new Xpac, in Hallowfall. I've used it across numerous systems. It will max out the GPU, even an overclocked 4090, and I can't hit my own personal frame cap there.

Comparing my overclocked 4090 (GPU only, not memory) at 3135 MHz with fans at max vs my stock 5080 out of the box, it is 135fps (4090) vs 122fps (5080) on absolutely identical system specs, so the 4090 overclocked to 3135 MHz has a ~10% lead over the stock 5080.

Settings: 4K, 100% render scale, Ultra 10, RT enabled. Everything set to max.

4090: 5080:
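For anyone who wants to redo the percentage with their own numbers, here is a minimal Python sketch of the comparison. The two fps values come straight from the post; the function name `percent_lead` is just made up for illustration.

```python
def percent_lead(a_fps: float, b_fps: float) -> float:
    """Return how far a_fps is ahead of b_fps, as a percentage of b_fps."""
    return (a_fps - b_fps) / b_fps * 100.0


fps_4090_oc = 135     # overclocked 4090 at 3135 MHz, figure from the post
fps_5080_stock = 122  # stock 5080, figure from the post

lead = percent_lead(fps_4090_oc, fps_5080_stock)
print(f"4090 OC leads the stock 5080 by {lead:.1f}%")
```

The lead is measured relative to the slower card, which is the usual convention when saying "X is N% faster than Y". Measuring the deficit relative to the faster card would give a slightly smaller number.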

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Especially if you're not wanting the Ultra series in your gaming laptops, that is as good as it gets for 14th gen laptops. Every bell and whistle you can think of and then some. Plus we know 5090 mobile is going to barely be better than 4090 mobile, just like 2080 Super mobile to 3080ti mobile was a nothing burger. Actually, 3050 mobile all the way through 3070ti mobile to their 4000 series variants was a letdown too. The only major uplift was 4080 and 4090 mobile.

Plus, outside of custom variants, MSI has the best mainstream mobile BIOS on the market hands down.

For me, it's all about 18" displays. I want the biggest displays possible on my laptops when able.

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I mean, I guess that isn't terrible comparatively.... Better than the Asus combo which I can't say I'm shocked sold out within 5 min even at 6200 (~$6610 after tax)....sheesh. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

There was an Astral 5090 combo on Newegg a few hours ago. It was ~$6200 and included the Astral, Asus PG32UDM, Asus Z890 Hero OR Asus x870E Hero, 1200 watt PSU, Asus Keyboard, Asus Mouse and their $600 Router. It was a legit bundle from Newegg. They lasted about 5 minutes actually but someone bought them! All 5080 Bundles are gone now too. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Looks like Gigabyte has raised their prices now too across the board based on NE prices. @tps3443 water blocked 5090 from them is now $2809.99 (or $2996.15 after tax for moi). Which models are you gunning for specifically? Or do you plan on grabbing whatever is available? -
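As a rough sanity check on the after-tax figures quoted in this feed, here is a small Python sketch that backs out the sales tax rate implied by the $2809.99 / $2996.15 pair above and applies it to the ~$6200 bundle mentioned in the neighboring posts. The variable names are hypothetical, and the rate is only what those two figures imply, not an official number.

```python
pre_tax = 2809.99   # Gigabyte water blocked 5090 price, from the post
post_tax = 2996.15  # same card after tax, from the post

# Implied sales tax rate from the two quoted figures
implied_rate = post_tax / pre_tax - 1
print(f"Implied sales tax rate: {implied_rate:.3%}")

# Apply the same rate to the ~$6200 Astral bundle price from the feed
bundle_pre_tax = 6200.00
bundle_after_tax = bundle_pre_tax * (1 + implied_rate)
print(f"Bundle after tax: ${bundle_after_tax:,.2f}")
```

The bundle result lands close to the ~$6610 quoted for it, so the after-tax numbers in these posts look consistent with a single rate.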

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

True, the 3090ti had it. I remember seeing it with my KPE 3090ti but I can't recall seeing it on my KPE 3090. We'll have to track down an elusive 5090 owner to see if it is there. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Nope, nothing for the 5080 I can see. Was there an option for the 4080? I thought it was only the 4090. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Yep, and it starts....and of course the poorest are going to feel it the worst, as confirmed by numerous sources. I can grumble grumble because some luxury toys are now even pricier, but I can weather the storm pretty easily. Others not so much.

-----

In related news, looks like Newegg might have had a few 5090s drop tonight. Specifically the Gigabyte Aorus 5090 and Gaming RTX along with the Zotac Gaming Solid.

Tomorrow is MSI's "official" launch day, so if you're wanting one, get ready at 9am EST for Newegg and especially Best Buy. Best Buy and Newegg haven't changed their prices yet, but I'm sure they will before launch.

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Yup, prices are officially rising from the AIBs: https://videocardz.com/newz/msi-and-asus-hike-geforce-rtx-50-series-prices-in-official-stores-now-up-to-3409-for-rtx-5090

Plus, with the tariffs in play, prices are rising on a lot of other computer items here in the US. I noticed even Asus peripherals have gone up in price in the last few days.

I'll pocket my $1000, rock my 5080 FE, which like my 4090 is being held back in WoW and Fallout 76 (9800X3D results not in yet), and keep it moving unless by some rare chance I snag a $2k MSRP card. Anything else is just not worth it: that's paying a roughly 200% markup for 50% more performance on a card that is being bottlenecked in my two main games anyhow atm. I have several SFF builds in mind I can't wait to slap this into.

100% markup for 50% more performance? Ok, I can maybe swallow that....kinda, but that requires an alignment of the stars, and it doesn't rule out Nvidia raising the prices on their FE editions to $1100/$2200 or more.

I'm not paying $2868 for a 5090 Suprim or $2975 for a Liquid 5090 after tax. That's insane. Absolutely insane. That is literally almost $2k more than I paid for my 5080 FE.

Astral 5090 is $3284 after tax. No.

Astral 5090 Liquid? $3635 after tax. **** no

Just......no.

---------------------------

For those of us who also enjoy laptops, I'm loath to think of the new prices that we'll get. If Asus has already raised the price of the incoming 5090 laptop to $4199.99 BEFORE the tariffs, that is going to be $4500.00 for a 5090 laptop. No idea what MSI will be charging, but they're routinely not that far behind. I'm sure the Titan will cost at least one kidney and a weekly quart of blood for a down payment.

-----------------------------

It might be time to use common sense and just sit back and enjoy what we have. Or, if you're really hell bent on getting a 5090, just suck it up and pay out the wazoo as soon as possible and hope to recoup a lot of that cost reselling your 4090....if you can score a 5090.
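To put the markup rant above into numbers, here is a minimal Python sketch. The after-tax card prices are the ones quoted in the post; the $1012 baseline is the after-tax 5080 FE price the author gives elsewhere in this feed; and the 50% performance uplift is the post's own working assumption, not a measured result. All names in the code are hypothetical.

```python
BASE_PRICE = 1012.0  # 5080 FE after tax, figure quoted elsewhere in this feed
PERF_GAIN = 0.50     # 5090 assumed ~50% faster than a 5080, per the post

# After-tax 5090 prices quoted in the post
cards = {
    "5090 Suprim": 2868.0,
    "5090 Liquid": 2975.0,
    "Astral 5090": 3284.0,
    "Astral 5090 Liquid": 3635.0,
}


def markup_pct(price: float, base: float = BASE_PRICE) -> float:
    """Extra cost over the baseline card, as a percentage of the baseline."""
    return (price - base) / base * 100.0


def relative_cost_per_perf(price: float, base: float = BASE_PRICE,
                           perf_gain: float = PERF_GAIN) -> float:
    """Cost per unit of performance relative to the baseline (1.0 = same value)."""
    return (price / base) / (1.0 + perf_gain)


for name, price in cards.items():
    print(f"{name}: +{markup_pct(price):.0f}% cost, "
          f"{relative_cost_per_perf(price):.2f}x cost per unit of performance")
```

Anything above 1.00x in the last column means you are paying more per frame than with the baseline card, under the stated 50% assumption.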

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

See? And that's why I'm glad you're here and actually bought one. 🙂 All I have to go off of are other people's results. So having owned and played with it for quite some time now, what is your overall comparison versus your tuned 14th gen and 9800X3D? Pick 3-4 games that favor either or and? -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I will give the AIBs a pass on this one because of how Nvidia treated them, leaving them in the dark till the last minute and literally giving them ~2wks to actually get the cores and do some semblance of QC testing.

But Nvidia? They had ample time to work out the kinks with the 5000 series. They had already pushed it back from its normal launch cycle (~2yrs). They could have easily waited till June or even pushed it back another full year to the fall and launched it properly.

If it helps, my 5080 FE is running smooth as silk with zero problems. Just the normal 4090 issues, with the 14900KS still bottlenecking it at 4k more often than not, where before the 4070 Super was sitting at 99% the vast majority of the time. Raids at Ultra 10 with RT on leave the 5080 FE sitting well below 80% almost all the time and sometimes even lower.

I am pleasantly surprised at the performance level of the 5080 at stock. I haven't even begun to OC it yet.

One last round of testing with the 14900KS and 8200 tuned before I slap in the 9800X3D tuned 2200/6400 1:1 for its turn and relegate the 14900KS to the test bench system till it learns its fate. (insert ominous music HERE).

I love the comment section. A lot of salty, butt hurt 4090 owners getting testy where it really isn't needed....

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I imagine this on loop while you refresh over and over in the darkness of your computer room..... -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Potential plan of attack for myself:

----------------------------------------------------------------------------------------------------------------------

- Sold mine for $2100, and prices are absolutely insane right now, hitting $2700+ for the liquid
- Picked up a 4070 Super to tide me over w/ 60 day BB return window
- Snagged a 5080 FE on launch
- Returned 4070 Super on very last day eligible (Feb 1st)
- 5080 FE has a return window till April 2nd (like that would happen)
- Plan on rocking the 5080 FE till an MSRP 5090 potentially drops
- If I manage to snag a 5090, hopefully selling off the 5080 FE can cover most of the expense, since I blew the last of my Fall sell off budget picking up @win32asmguy's beastly 18" 4090 laptop to travel.

-----------------------------------------------------------------------------------------------------------------------

If you can stomach going without a GPU, sell it for a bit of profit while the market is ultra dry. Pick up a holdover GPU if needed. You had $$$ set aside for the 5090 anyhow, so use a good chunk of the 4090 sale and address other upgrades and needs or toys. 🙂

I know with the 5080 FE, those 99% utilizations I had all over the place with the 4070 Super are gone again and I'm back in 4090 territory, with the 5080 FE being bottlenecked all over the place by the 14900KS. So the next big project is rebuilding my main rig with the x870e hero + 9800X3D, which is finally tuned up as tight as I can get it for my needs. Then let's see if this 5080 FE keeps laughing.

I don't mind being GPU-less for a few months or rocking a much lesser GPU. I rocked that 4070 Super for a solid two months and survived. When I sold my 3090 before, I rocked a 3060 for a month or two and survived.

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Regression in performance for Ultra 9 285k even tuned a bit for gaming:

----

I was thinking the same thing having just watched Roman's video. So many issues and oddities with the 5090s, but with most AIBs having at most a few weeks to actually slap the chips on and test their designs, it makes sense. I would also expect more issues with the 5090 vs the 5080 since the 5090 is basically redlining right out of the box.

Meanwhile, Nvidia has had the entire lead up time to vet their engineering and design and get it right, and it is still having some issues.

I'm just not seeing a realistic scenario without dual 12VHPWR ports for a true enthusiast 5090.

lol, it's true! All you need to do is look around and realize overclockers are an ever shrinking minority in the sea of hardware users. It's evident in how companies have taken over with boosting algorithms to give every end user access to the bulk of their CPU/GPU's performance out of the box.

Yeah, too much myopic focus on gaming and benching (present company included). Sometimes we forget all the other advantages and upgrades in the 5000 series, especially for content creators and AI, along with better display standards for starters, never mind all the gaming "enhancements" it received.

I think when all is said and done, the 5080 is going to be the overclocking gem of this generation since there are no real limitations to power out of the box like the ones the 5090 is brushing up against even at stock. My biggest fear is Nvidia analyzed the cross BIOS flashing vulnerabilities and has locked down the 5000 series even tighter.

New ranking for desired 5090:

#1. Gigabyte Windforce (good cooler, MSRP)
#2. Suprim Liquid (Why fix what ain't broke?)
#3. Suprim Air (Like a Liquid, but easy to move case to case)
#4. Astral (Because if you're being stupid with your money, why not be EXTRA stupid?)
#5. 5090 FE (MSRP, but reports of crazy coil whine and weird noises and issues seem to keep increasing)

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Brett ranting about people ranting about the 5000 series basically sums up how I feel (timestamped):

-----

MSI 5090 Liquid quiet as always and less coil whine:

-----

Insane shortages everywhere. Even the stock trackers are showing basically zero restocks since the drop for US eTailers, for both 5090 and 5080 cards. MC shows some trickles coming in on trucks.

People paying $2k for 5080 FE cards on eBay is insane, but hey, it's their money! Imagine funding a 5090 FE snag by reselling a 5080 FE....

------

I'm probably one of the few who enjoyed the 5000 series launch video from Microcenter. The guy doing the longest interview, talking about how people are overlooking the encoder/decoder upgrades on the 5000 series, has a valid point. For content creators, the 5000 series is a massive step up, even the 5080. AI upgrades are pretty substantial in some models too.

Looking at SO many happy faces getting some beastly upgrades with the 5080 and 5090 is great to see. The guy upgrading from a 1050ti to a 5080 has no idea the insane difference in performance he is about to experience.

Times like this, I realize we truly are a small minority in a big sea of Joe Gamers and Carla Content Creators of the world.

-----

5080 OC up in stock 4090 territory in TS:

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Well, mystery solved. It was the memory. Pulled the 2x32GB G.Skill A-die and slapped in the 2x24GB 6400 kit that was a freebie with the 9800X3D bundle and just like that everything is working right as rain in WoW and everywhere else. Those sticks were problematic on the x670e hero and x870e hero. I'm starting to think they are failing (or at least one of them). Here is what it looked like when the 2x32GB sticks were installed: -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I enjoyed the hunt actually. It was fun frantically trying to nab one from NE and BB while also getting real time reports from a few buddies camped out at the St Davids and Parkville MC to keep me in the loop on how that was going (it didn't lol).

All of us in our own little personal hardware forum here was fun too, as in real time we smashed ourselves against Nvidia's rock...

I mean, we all came out with nada but it was fun!

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

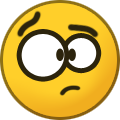

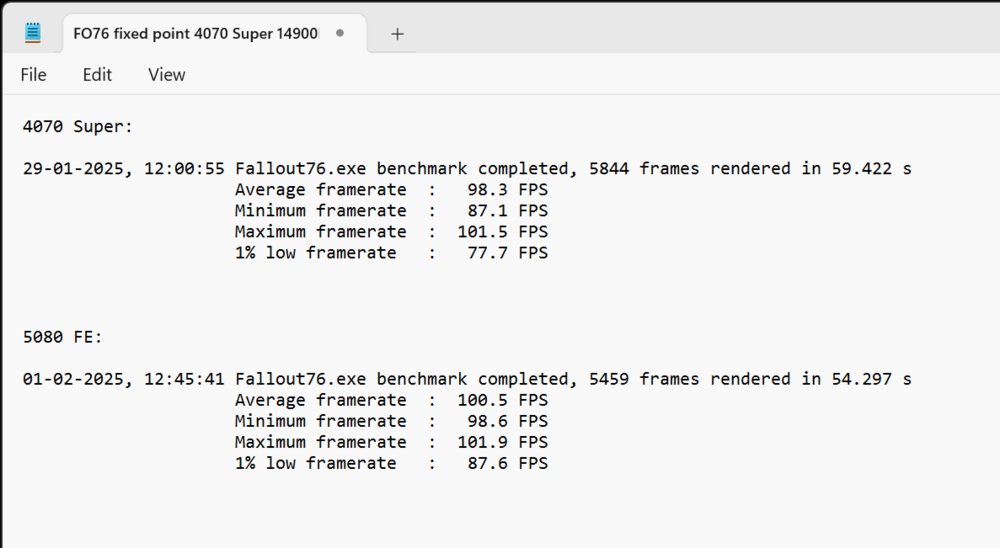

This is the way.....

-------------------------------------

5080 FE has arrived:

Even better news, ZERO coil whine. I mean none. I let Fallout run over 1000fps in the menu and got zero, and zero during Steel Nomad, Timespy or World of Warcraft. Out of the box it is boosting close to 2800, so that bodes well for some overclocking magic.

This is **4** GPUs in a row now (3090ti KPE, 4090 Liquid, 4070 Super, 5080 FE) with different motherboards, CPUs, memory and SSDs with basically no coil whine, and all have been on my EVGA 1600w P2. Like I said before, my old 3080 Strix has coil whine in the wife's system with the Seasonic 1000w, but when I swapped it into my system after repasting it to retest it, it had none.

Bad news is I'm getting some serious artifacting in WoW with my CPU >5.4 GHz, and Timespy errors out on the last GPU test. I drop it to 5.4 and everything is smooth sailing. The only difference now vs then is that this last week I swapped in those 6000 2x32GB dual rank sticks while I tune up the TG sticks with the 9800X3D. I'll swap in the extra set of Corsair 2x16GB sticks to see if that makes a difference, but I got a funny feeling.....

----

Lastly, the 4070 Super seems to be the sweet spot for Fallout 76, as I'm getting CPU bottlenecked just the same in the hardest-hitting static spot I can find, where I do my testing at 4k with everything maxed. The 4070 Super hits ~99% utilization in this spot, but the 5080 FE is hitting ~54%. I know FO76 loves X3D and was a monster on the 7800X3D, so hopefully the 9800X3D (pictured left above, coming down the home stretch for tuning the TG 8200 sticks at 2200/6400) will bring this 5080 to its knees, but we will see. Min and 1% are up though with the 5080.

Here is a historical comparison to show how much the 14900KS w/ 8200 tuned up to 5.9 improved performance, but more importantly the absolutely dominating performance of the 7800X3D in Fallout 76.

The 13900KS was doing 5.7 all core and the 8000 sticks weren't tuned on the level of the TG 8200 sticks, so even though that run had a 4090, my 5080 had better results, but that 7800X3D....WHEW.

Oh and lastly, today was literally the last day I could return the 4070 Ventus, so I did. Now it's me and this 5080 FE.....

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I think this is a case of the 2000 to 3000 series debacle again where minimal gains were achieved. Even 3000 to 4000 saw nothing really below the 4080 mobile. Seeing as outside of the 5090, nothing is massive in terms of improvements this is about what was to be expected. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I agree 100% (I usually do with what you write @Papusan) I would love to see that, but we both know Nvidia is not going to do that. They definitely targeted putting their boot even harder on AMD's neck and the 7900xtx and preparing to counter the 9070xt without giving too much back to consumers. Nvidia just extended the 4080 Super with newer technologies, GDDR7, a modest bump in performance and a new compact design and kept the price the same. No more; no less. Good or bad, value wise, it is one of their better picks. That just tells you overall their price:performance is not great across the stack so you pick your poison and keep playing their game. But truly this is the ultimate solution: -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

100% Agreed. I said it before, but for Asus to purposely go out of their way with a BIOS update to no longer allow settings to be retained without their software was a dirty move. To proactively force you to have their software installed is terrible. Everything should default to OFF or at worst a static white, fixed and saved.

I've been watching various videos and reading write-ups on this, and here is the problem as I see it. Nowhere did Nvidia promise or imply that the various tiers would equate to a certain number of cores or certain comparative performance metrics. I can't find where Nvidia said:

- 090 (previously 080ti) tier = X cores
- 080 tier = X-A
- 070 tier = X-B
- 060 tier = X-C

This looks to be some type of model concocted by users, journalists and influencers who noticed a trend and used it to determine what each tier should be, not what is reality. I also don't remember Nvidia promising certain tiered performance metrics per generation either. You get what you get and decide if you want to purchase it or not.

We complain a lot, but yet we keep lining up and buying Nvidia. The one device that speaks the loudest to Nvidia, our wallets, routinely opens right up and spews forth cash to get their products. Present company included.

I'm not defending Nvidia, as their objective has been and always will be to give you less for more while nudging the overall performance forward. They spoiled us with the jump from the 2080 to the 3080 at such a good price. They spoiled us again with the jump from the 3080 to the 4080 performance wise, but snatched that right back by raising the price by $500, at which point consumers actually fought back with their wallets and Nvidia course corrected. I shudder to imagine how they would be if AMD didn't exist. I used to think the same with Intel and AMD too.

So what do you want? Another jump in performance like 3080 to 4080, but with the 5080 costing $1500? Because if you keep thinking we're going to get another 2080->3080 jump at almost the same price, you need to wake up. Never mind the fact Blackwell is stretched to its limits as is.

In regards to humanity as a whole, in the words of Gene Hackman: "Like I always say. Put a fox in a hen house and he'll have chicken every night."

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I agree, no crying. The market is what it is, and when I don't get the product(s) I want, I literally shrug my shoulders and toss in an audible "ah well" each and every time, and it's out of mind as quick as it was in there and life goes on. 🙂

I say this knowing that a good chunk of GPUs went to scalpers, or knowing bots were used, or knowing insiders had first dibs for themselves or.... (insert another reason here). It is what it is. Life is too short to get worked up over luxury items that only a very select few can afford anyhow.

I know I am in a blessed position in life and I can roll this stuff off my shoulders and keep it moving. When you can relate contextually and realize how much better off you are than most, life becomes a much more positive, wonderful existence....(or at least that has been my experience).

(The BIG fist bump! 😁)

------

On a related note: Is it me, or does the Asus Prime 5000 series lineup have beautiful, very industrial designs? I really like the look of the 5080. And it is $999 MSRP.

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I put nothing past businesses to constantly increase profits. Absolutely nothing. Newegg routinely takes advantage of current market conditions by charging more over MSRP along with suddenly adding in shipping fees. Let's not forget their combo "deals" (well, in their defense, some of the deals are nice). -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

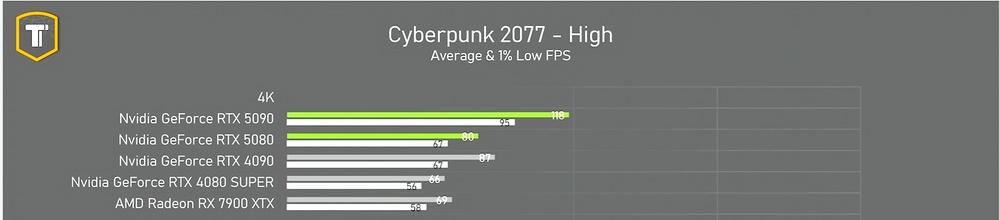

All that matters is your frame rates/benchmarks with the undervolt and how much you're saving from the wall versus your performance metrics.

I stopped worrying about clock stretching / ghost clocks and started focusing on my actual bench/performance results and stability versus what my meter reads being pulled from the wall, and drawing my conclusions there while undervolting and increasing clocks/mem to find the perfect power:performance point.

@tps3443 rerun your tests with Timespy or an in game benchmark to find that proper UV sweet spot where performance takes the least hit. I just used TS with my 4090 to hit 2825 MHz at 0.950v with power usage right around 340w in WoW. Below that, clocks and performance really started to suffer, and I had to either dial back my +175 on clocks or give it a wee bit more power, or I would get lesser performance or crashes/lock ups.

I monitored and kept numerous 7900xtx's in my basket on Newegg. Each was in anywhere from 150+ baskets (Sapphire Pulse 7900xtx) to a modest 300+ baskets (Asrock Taichi) up to 450+ baskets (Nitro+). I also kept track of several 7900xtx models on Amazon and BB too. They were routinely in stock, if not always in stock. This was also true of the 4070 Super.

The 5000 series drops, sells out instantly, tons of people can't get a 5080 or a 5090, and suddenly after the launch the 7900xtx and 4070 Supers sell out fairly quickly.

Translation: Some buyers were holding off buying a 7900xtx or 4070 Super (realistically the top cards left in stock at MSRP at many places from each company), and once they couldn't get a 5000 series card and needed something, they snapped up an alternative. You also have the fear of Tariffs kicking in and those prices skyrocketing.

I guess this is your first taste of an Nvidia launch in quite a while. 🙂 This one was worse than the 4000 series launch by far though, as some 4090's lasted a few days at MC before selling out.

5090 was ready to launch. I mean, the cards and architecture are there and out. It wasn't ready to launch in volume, but it was definitely ready to launch. 🙂

I think Nvidia also wanted to get in before the potential Tariffs kick in and the cost of all these cards suddenly goes up by at least 25%, if not more, for consumers unless an exception is made. With a baseline tariff, you're suddenly looking at $1250 for a 5080 and $2500 for a 5090 for FE models, and the prices just scale up from there. $3000 for a Suprim Air. $3500 for an Astral. This is all before sales tax.

I know for myself, if Tariffs kick in before I get a potential 5090, I will most certainly pass. Even now I'm still "meh" (as I was looking all morning....I know right?).

I'm going to do some hard numbers when my 5080 FE comes in. At basically $1012 after tax w/ discounts, it will be hard to beat on price:performance. I'd prefer a 5090 FE or other MSRP model for $2k or, if I need to spend more, a Suprim 5090. That's about it realistically, but sometimes you have to let common sense kick in (or not 🤣)

When I see numbers like this:

It really shows the 5080 at $999.99 justifies its place in the price:performance pantheon. ~9% behind a 4090 for a fraction of the price. Do I wish it was faster? Of course, but there's no denying it's more performance and features for the same price.

5090 is just off in its own world dominating everything.....

I've done this in the past. You just have a family member or friend order something for you from the same location so you can get more than one of whatever. It's no biggie, and I'm sure Dom (and others) have done it to get multiple units. On the other hand, purchasing before the embargo is another thing, as is swooping in and buying dozens or more.

I guess I just don't get my shorts in a bunch over things like this. I never have. I didn't score a 5090. I just shrugged my shoulders and thought, "Ah well, there's always tomorrow." 🙂
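The baseline tariff numbers in the post above can be reproduced with a quick Python sketch. The 25% rate is the "at least 25%" figure from the post and the MSRPs are the $999.99 / $1999.99 FE prices; the function name `with_tariff` is hypothetical, and the sketch assumes the whole tariff is passed straight through to the buyer, which may not be how retailers actually price it.

```python
TARIFF_RATE = 0.25  # "at least 25%" baseline from the post (assumed pass-through)

# FE MSRPs before any tariff or sales tax
msrp = {
    "5080 FE": 999.99,
    "5090 FE": 1999.99,
}


def with_tariff(price: float, rate: float = TARIFF_RATE) -> float:
    """Price after adding a flat tariff, before sales tax."""
    return price * (1.0 + rate)


for card, price in msrp.items():
    print(f"{card}: ${price:,.2f} -> ${with_tariff(price):,.2f} before sales tax")
```

Sales tax would then be applied on top of the tariffed price, so the out-the-door number ends up higher still.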

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I thought this was a slight discount on the 5090 variant for that price then saw the 5080 and chortled. 😂 Update: It now says "Notify Me" so someone wanted it! WoW.