
Posts: 2,805 | Days Won: 130


Posts posted by electrosoft


Just now, tps3443 said:

From the research I did on this, this is happening because of the random latency and access times of our modern Gen3/4/5 M.2 SSDs. They are just terrible with random latency response times. I have seen that an Intel P5800X can completely alleviate the issue altogether, which was why I kinda got all in to wanting to get one. But we’re talking about owning crazy expensive 400GB/800GB Enterprise drives that would only hold a couple games and cost a lot of money.

Refer back to my post about SSD streaming.

18 minutes ago, electrosoft said:

Not much they can do. Even the world's best SSDs can't help....yet. The dream goal is to seamlessly stream assets from SSD->Vram. We may in the future get to a point where we can sacrifice fidelity for fluidity if at all.

This is the issue, and I am not sure even one of those drives would eliminate it entirely. It may help in cases where it is right on the edge, but for larger transfer/load scenarios? No.

-

10 hours ago, tps3443 said:

Hey everyone, I bought Wukong Black-Myth. The game is great so far!

Running fully maxed out, and it is a very engaging game. The Steam reviews sold me on it. Usually games aren't reviewed this well at launch; the reviews are, as Steam says, "Overwhelmingly Positive." But it is fun so far. Story seems great! The 4090 strong-arms it, so I average about 80+ FPS at 4K DLSS Quality+FG with Cinematic graphics. Barely touches the CPU. The game has issues at times where GPU usage drops for no reason from 99% to 95%. I was walking one time and it hit 89% when it was rendering a boss in the background, which makes no sense because I am 100% GPU limited here, and it's using 6-10% of my CPU lol. But most of the time it is 99% GPU usage. When this happens, though, the 1% lows tank for a moment. They need to fix that. But I am just being picky here.

Very common in newer games with insane asset sizes. Less common in older games on newer hardware, but it was always an issue. Best experienced when a game has a speedy travel mechanism that asset streaming can't keep up with, and you get a nice "chunk" as they load in non-seamlessly.

Not much they can do. Even the world's best SSDs can't help....yet. The dream goal is to seamlessly stream assets from SSD->Vram. We may in the future get to a point where we can sacrifice fidelity for fluidity if at all.

@tps3443 in this scenario, the way games are designed, even 24GB of Vram won't fix the issue, as assets come in fixed sizes and scale down based on the Vram available. Turning down resolution/detail level can sometimes avoid it, since smaller assets hand off or stream more easily.

WoW has long suffered from this since its engine redesign (more of an overhaul) 5-6 years ago. Recently it has really come to light with dragonriding, which allows burst travel almost 3x faster than the fastest normal flying. Depending on terrain and position, you can trigger this "chunk" and watch your fps drop in half for a split second at repeatable locations in the game. If you drop your resolution from 4K to 1080p and/or details to med-low, it magically gets better or flat out goes away.

Back in the day, we called this "look ahead streaming" "dynamic draw fill" and other nifty terms to describe the process. It is also why many games offer "view distance" options to not only take a load off your hardware but to minimize asset loading and make it more manageable.
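Back-of-the-envelope, the "look ahead" problem looks like this: if the game keeps everything within the view distance R loaded and you move at speed v, a disk of radius R sweeps roughly 2·R·v square meters of new ground per second, and all of it has to be read, decompressed and uploaded before it is drawn. A minimal sketch under that assumption; the asset density, asset size and effective I/O figure are hypothetical placeholders, not numbers from WoW or any other engine.

```python
def required_bandwidth_mb_s(speed_m_s: float, view_distance_m: float,
                            assets_per_m2: float, asset_mb: float) -> float:
    """Rough streaming demand in MB/s for a circular load radius moving forward.

    A disk of radius R moving at speed v uncovers new ground at 2 * R * v m^2/s;
    every asset on that new ground has to be loaded before it can be drawn.
    """
    new_area_m2_per_s = 2.0 * view_distance_m * speed_m_s
    return new_area_m2_per_s * assets_per_m2 * asset_mb


def will_hitch(speed_m_s: float, view_distance_m: float, assets_per_m2: float,
               asset_mb: float, effective_io_mb_s: float) -> bool:
    """True when demand outruns what storage (plus decompression) really delivers."""
    demand = required_bandwidth_mb_s(speed_m_s, view_distance_m,
                                     assets_per_m2, asset_mb)
    return demand > effective_io_mb_s


if __name__ == "__main__":
    # Hypothetical numbers, picked only to show how demand scales.
    io = 300.0  # assumed effective MB/s under small, random reads
    for label, speed, size_mb in [("normal flying", 40.0, 2.0),
                                  ("burst travel (~3x)", 120.0, 2.0),
                                  ("burst, lower detail", 120.0, 0.5)]:
        demand = required_bandwidth_mb_s(speed, 1000.0, 0.001, size_mb)
        hitch = will_hitch(speed, 1000.0, 0.001, size_mb, io)
        print(f"{label}: {demand:.0f} MB/s, hitch={hitch}")
```

With these made-up inputs it prints 160, 480 and 120 MB/s: tripling travel speed triples demand and tips it over the assumed 300 MB/s, while smaller assets (lower resolution/detail) or a shorter view distance cut it back linearly, which is the same pattern described above.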

@D2ultima "Traversal Stutter" I like that! 🙂

-

2 hours ago, Etern4l said:

Hello, hope everyone is well.

I wish I had listened to that advice. While the initial PTM7950 (later changed to the 7958 paste) was durable enough and lasted for several months, I can confirm the temps are nowhere near as good as what's achievable with Conductonaut.

Temps eventually degraded and the CPU started suffering from instability, so I just bit the bullet and replaced it with LM on both sides. Unfortunately I'm pretty sure my 13900K actually suffers from the degradation issue, as it's not stable at stock speeds (even with temps under 90C); however, the good news is that it's stable after downclocking and undervolting, which works for me. I think the mental block was caused by my imagining LM as this mercury-like thing that would almost surely end up running all over the components lol. Cool and stable after a couple of weeks, so very happy, and wanted to thank @Mr. Fox for trying to ease me into this 🙂

Hey @Etern4l!

Did your 13900k used to run fine at auto but now won't pass those same tests? Did you change BIOSes? Does it fail on 0x129 using the Intel Enforced Limits? Usually for a degraded CPU downclocking and a positive offset are required. The fact it responds well to an undervolt is encouraging it may be ok or the degradation is minimal.

Delidding a CPU and not using LM really defeats the purpose of replacing the stock sTIM, especially with the stock lid, as there is usually no absorption/hardening (a 3rd-party copper top can be a different story).

-

Wukong on a CL. 5.9/4.5

Vcore load up to 1.43 seems a bit high?

I'll have to go back in and recheck my profiles. Is that 1.43 under load on auto, or fixed?

-

5 hours ago, Mr. Fox said:

I watched about 4 or 5 minutes of that and now I know for certain I won't be buying that game. Based on my personal preferences it looks extremely boring to me.

Funny, I came away with the same conclusion. 🙂

"Oh, here's something for @tps3443 to aspire to" and "Yeah, I'm good with the benchmark"

1 hour ago, tps3443 said:

I can actually do this. Don’t make me do it lol. I am already trying to resist going direct die with my chip lol. 8800c38? No problem! I have built a stable profile for it. As for 6.4/4.9+HT on in a game that uses about 6% CPU usage? Done lol.

With chilled water + Direct die it’s all doable. The one thing I cannot do is 3,150 on my 4090 because it’s on air. And it’s just a turd.

Don't do it! You know you'll regret it! But if you DO do it, make sure to post all the results. 🤣

True on the 4090. I am sure you could hit that on mine no problem seeing as it does 3120+ on stock Vbios and stock AIO cooling. I have no idea where it would go blocked let alone chilled lol.

1 hour ago, tps3443 said:

Been doing some clearing! And my lot is almost ready for the home! Nothing but woods around me. I love it. There is one new home that was built across the street you can see in the pic, but woods to my left, woods on the right, and woods behind me.

The 1st pic is my driveway, and I have water hookup, and a culvert pipe! Electric is next, then the concrete pad for the home. The home has already been manufactured; that process took 4 months after the order was submitted for them to build it.

I know you have to be excited about this bro! Once we downsized, we sold our monster sized house and moved into a Double Wide, costs dropped by insane levels across the board. 15 years later, my lady now keeps sending me listings for "smaller houses...not as giant sized." She wants the privacy of a house and land around it again.

Looking forward to the finished place. You DID make sure it has a dedicated computer/office room for you?

-

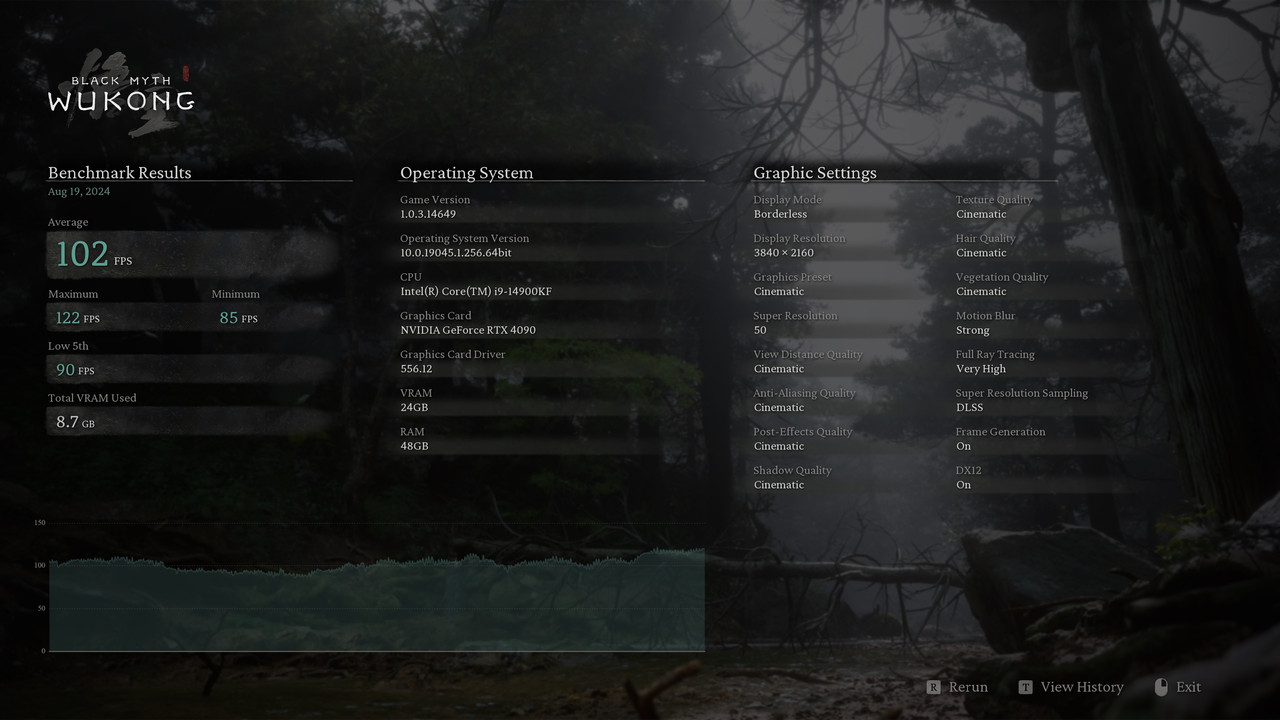

Here you go @tps3443. System gaming stats to shoot for!

4090 - 3150MHz

14900KS - 64x / 49x (SP111 P126 / E81)

DDR5 8800 CL38

-

6 minutes ago, Talon said:

You know I have vacation in Oct for a couple weeks. I’ll be at Microcenter on launch morning lol.

But honestly I’m not so sure this is going to be as great as I had hoped after seeing the leaks. My 14900KS at stock and undervolted with good dual ranked memory is almost as good or better than the result we saw.

I know it’s just Geekbench and gaming could be great but meh.

That won’t stop me from wanting to get one and tune for max perf.

This is what? 10 years now since the NBR forums? I realized years ago that you buy Intel/Nvidia hardware on day one or close to it and give it a really competent set of early testing. Your results tend to stick and align with my approach to hardware, so I always trust them, and they have yet to be wrong....

.....why fix what isn't broke? 🤣

So yeah....I'll be on the lookout for your results. 😜

-

16 minutes ago, jaybee83 said:

nah, no more dispute from my side, seems pretty clear now. scoured through enough reviews now to have a good overall picture for Zen 5. X3D waiting game commences...

Ditto..... If I wasn't into gaming and was new to computers, the 9950X would make sense. I would pick it over 14th gen.

But right now, the smart move is to wait for 15th gen and X3D variants.

-

20 minutes ago, tps3443 said:

So who is pre-ordering the 285K? And who is waiting for the possible 295K?

I'll wait for day 1 @Talon results to see where I'm going.... 🤣

BTW, saw the thread for the SP103 over yonder....yikes that's going to be a poo poo storm and then some.

JayZ on how to check for degraded chips.....

-

2 hours ago, Talon said:

Just downloaded it myself. With my 14900KS with latest 0x129 Microcode BIOS, default performance 253w profile, and -110mV Core and -50mV L2 it compiled without as much as a hiccup.

Unfortunately too many are using the old BIOS and are either scared of a BIOS update, or think they're on the newest BIOS because they put out so many that said "Intel defaults".

Intel really needs to do a better job with a public campaign that sends out emails to consumers via their retailer.

Yeah, I tested it on my 14900KS setup and it compiled just fine with my original profiles. The Wukong benchmark just hit differently, as noted by many here and elsewhere. I also think there is a wide crossover between software issues and hardware issues, and the easy out is to blame Intel atm, as we see below:

5 minutes ago, Papusan said:

The fact it reaches 100% shows it most likely isn't a shader compiling issue; the problem lies elsewhere.

Sometimes people confuse buggy software with hardware issues. This is true for Intel and AMD platforms.

-

1 minute ago, Mr. Fox said:

I can see myself, and some other fine people of equal intelligence, (like a lot of us here), potentially going through a long dry spell and not finding any good reason to purchase new computer tech. Maybe even never finding a good reason in the foreseeable future if the trend continues on its current undesirable downward trajectory. That would actually be a blessing in disguise in some respects. No point in wasting money on downgrades when the result is only newer is ~~better~~ newer.

I have hopes for Arrow Lake.

AMD 9000 really isn't exciting. Slim hope for 9950X3D/9800X3D to provide some type of meaningful uplift.

AMD GPUs are apparently regressing next gen overall but supposed to have stronger RT?

Help me 5090..you're our only hope. 🤣

Right now, I'm financing my daughter's college education and she's in year 2, so any excuse to pump the brakes is ok. The bill from the Bursar's office just hit and it was spicy! 🤑

-

12 hours ago, tps3443 said:

This SP103 is toast! I feel bad for the guy who bought it. I went straight to direct die, didn’t even test it beforehand. But the chip needs like 1.225v just to run 5.6P at 48c package temp. Someone smoked the chip. 5.7/5.8/5.9 are all completely unstable. Launching anything other than a browser will BSOD. Turning off or reducing the E-Cores helps a lot. So, all I could get “Kinda useable” was 5.6P/4.3E/4.5R on direct die+ chilled water. 😂 Wonder if that’s why it was only $400 bucks for a 14900KS. 🤔 Anyways, I tried everything I could with the CPU. It just can't run at all without failing it seems.

The chip refuses to launch R23 under any circumstances. Any light games are closing to desktop. This chip is defective, or it was cooked with so much voltage that it is so degraded it can barely run anymore at all. Since it needed about 1.225V for 5.6P and still would fail to run R24 consistently this makes me think it has been degraded or abused. But, I do not really know. I have never dealt with a CPU like this. I spent a lot of time with it. Not sure what it may be. 🤷‍♂️

3 hours ago, tps3443 said:

I couldn’t even bear it. The chip was just completely unstable on auto/default everything and DDR5 5600. I ran it for like 3 hours last night trying to see if I could get it working properly. And I couldn’t do anything but crash and run in circles. It acted like a 1000% defective CPU to me.

(1) Opening R23 was impossible.

(2) Extracting new R23 file would freeze during extraction.

(3) opening any game would close to desktop after just a second, even games like BeamNG that are easy to run without shader compiling.

(4) The only thing I could run was CPU-Z bench/stress, in which case the temps were really, really good.

(5) Opening a browser would lose internet connection and say, you‘re not connected to the internet.

Using 5.9/4.5/4.5 with DDR5 5600c46 complete auto settings was next to impossible. I increased SA voltage which actually helped stability a little bit. R24 load would shoot load voltage up, and crash before it even started.

It would only run properly if the chip was de-tuned to 5.6P/4.3E. Then it was useable as a chip. I did test memory stability, which passed fine as well. I pulled the chip and put mine back in.

Here are a few pictures of the CPU.

Pity, the 5600MHz V/F is actually decent.

If it won't run anything even on your killer setup with direct die, and it's only stable at those settings and that load voltage, that chip screams defective. I don't think I've ever read of a chip degraded to that level.

Either way, it needs to be relidded professionally and sent in to Intel for an exchange.

-

3 hours ago, tps3443 said:

This SP103 is toast! I feel bad for the guy who bought it. I went straight to direct die, didn’t even test it beforehand. But the chip needs like 1.225v just to run 5.6P at 48c package temp. Someone smoked the chip. 5.7/5.8/5.9 are all completely unstable. Launching anything other than a browser will BSOD. Turning off or reducing the E-Cores helps a lot. So, all I could get “Kinda useable” was 5.6P/4.3E/4.5R on direct die+ chilled water. 😂 Wonder if that’s why it was only $400 bucks for a 14900KS. 🤔 Anyways, I tried everything I could with the CPU. It just can't run at all without failing it seems.

The chip refuses to launch R23 under any circumstances. Any light games are closing to desktop. This chip is defective, or it was cooked with so much voltage that it is so degraded it can barely run anymore at all. Since it needed about 1.225V for 5.6P and still would fail to run R24 consistently this makes me think it has been degraded or abused. But, I do not really know. I have never dealt with a CPU like this. I spent a lot of time with it. Not sure what it may be. 🤷‍♂️

There have been plenty of new, out-of-the-box 14th gen CPUs that failed on auto settings because they were garbage, but this one seems like uber trash. It could be a dud, abused/degraded, or a bit of both.

That is stupidly high load voltage for 48c at 5.6.

How does it do on pure auto? Try incrementing a positive offset to see if you can find a level of stability; since it is DD and water chilled, heat is taken out of the equation.
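The "increment a positive offset" approach is just a linear search: hold the clocks fixed, raise the offset one small step at a time, rerun the same test, and stop at the first step that passes. A minimal sketch; `is_stable` is a hypothetical stand-in for the manual "set offset in BIOS, reboot, run the test" loop, and the step size and ceiling are illustrative assumptions, not recommended values.

```python
from typing import Callable, Optional


def find_min_stable_offset(is_stable: Callable[[int], bool],
                           start_mv: int = 0,
                           step_mv: int = 5,
                           max_mv: int = 100) -> Optional[int]:
    """Linear search over a positive Vcore offset (in mV) at fixed clocks.

    Returns the first offset that passes `is_stable`, or None if nothing up to
    `max_mv` does. `is_stable` is a hypothetical placeholder, not a real API.
    """
    offset = start_mv
    while offset <= max_mv:  # the ceiling stops the search before voltage gets silly
        if is_stable(offset):
            return offset
        offset += step_mv
    return None


if __name__ == "__main__":
    # Hypothetical chip that only behaves at +35 mV or more.
    def fake_test(mv: int) -> bool:
        return mv >= 35

    print(find_min_stable_offset(fake_test))             # 35
    print(find_min_stable_offset(fake_test, max_mv=20))  # None
```

If it comes back `None` before the ceiling you're willing to run, that points back at the chip rather than the tuning.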

-

5 hours ago, Papusan said:

This sums up today's new modern tech. Create disasters to be able to maximize profits. We need bigger chips with the same sort of cores. And more cores if needed for more performance. Not this modern hybrid or glued-together tiles mess.

@Prema Here is the latest Intel ME Firmware Update v16.1.32.2473. Can you see what this brings? Probably new security fixes as usual. Or a re-brand. Thanks

Hmmmm, I think I remember someone basically saying the same thing. Lemme see if I can find it...ah yes....here it is....

On 8/18/2024 at 2:54 PM, electrosoft said:

The real problem was when Intel and AMD started to deviate from a monolithic type design and started offering these hybrid designs both in core differentials (P vs E) and core functionality (X3D vs non-X3D) so OSes could no longer just work with a generic scheduler. We're expecting M$ and AMD/Intel to work hand in hand to properly have their CPUs know what and how to prioritize tasks.

It has gotten somewhat better since Alderlake and 7950X3D/7900X3D introduction but it will always be a work in progress.....

🤣

-

3 hours ago, tps3443 said:

I have a SP103 14900KS that I will be delidding and running on direct die. Will be testing the IMC as well. I want to see what something like this does at 5.8Ghz all core on direct die.

Considering you said your SP99 vs SP108 was fairly close with chiller love (temps rule everything), I am sure you will get amazing results from it too. I'm always curious about the IMC.

-

Apparently the new Final Fantasy XVI Demo on Steam released on the 19th also is giving some users fits with its shader compiles. I just ran it on my main desktop (7950X3D/4090) and no problems at all. I'll try it on my 14900KS/7900XTX setup later to see how it stacks up against the Wukong fun times.

https://store.steampowered.com/app/2738000/FINAL_FANTASY_XVI_DEMO/

-

8 hours ago, Talon said:

This is why I say, for most people the 4070 Super with its paltry 12GB is more than enough. It drives my 4K 120hz OLED TV in the living room for some couch gaming and it's no slouch. The performance and efficiency of that card is unreal. The 4070 Super at $599 for the FE is the best value card of 40 series. The next closest IMO is the 4080 Super FE at $999. The 4070 vanilla isn't worth it, the 4080 OG was a joke in price, and the 4090, while stupid powerful is out of reach for many people. I say this as a day 1 4090 Strix OC owner lol. And I know I'll likely buy the next Titan or 5090 around launch even though I absolutely do not need it. Ugh the sickness.

Yep, I called the original 4070 the sweet spot and the 4070 Super is the ultra sweet spot. It is a no brainer for someone with a ~$600 budget for a GPU (or what we previously called the flagship pricing years ago....). I keep coming back to it for my SFF build on my charts with the 14900KS......

4 hours ago, Talon said:

It's hilarious to hear people scream about Intel power consumption for their flagship high core count CPU, then you look at the latest Ryzen flagship on a cutting edge 4nm node pulling more or less the same power and producing less FPS.

Depends on the title and consumption requirements. Look at Starfield.... you can see as it gets hungrier they start to separate but for mid to lower tier power requirements they all kinda just end up in the same area for the bigger chips. A 7800X3D would wipe the floor with the 14900k for consumption and beat it overall in gaming (it gets trashed everywhere else).

Those results really don't show Intel in the best light even (look at the memory) and it is still winning. I am expecting Ultra 200 series to hopefully bring some heat. The laptop variants are......ok. The top of the line Ultra 9 185 is basically a slightly better 13600k.

The 9800X3D will just step right back in and take the top spot......over the 7800X3D, but not by as much as YT keeps saying because.....

3 hours ago, tps3443 said:

What garbage tuning is this? The AMD chip and 14900K aren’t even pushing the 4090 properly. My system certainly slams my 4090 to the moon at 1080P. Cyberpunk 2077 does not experience any cpu bottleneck until 400fps+ range, yet they are already hitting low GPU usage at like 136 FPS avg? 🤣

......of this. A properly tuned 13th/14th gen can hold its own with the X3D chips but it takes a level of refinement that normal users aren't going to do nor should they have to do. Intel is the tinkerer's chip as it yields the most results from getting under the hood. AMD has a lot of "set and forget" properties which has their merits.

Most users are sitting on air coolers or rando AIOs, using factory thermal paste on the AIO and booting up and going from there with factory defaults. I respect YT channels like Testing Games that present a more realistic "end user" experience but also acknowledge we will never run anything even close to that.

When you run an out-of-the-box experience, Intel suffers the most, as it has much more tunability. Look at the memory settings: the 9950X is running at its 6000 sweet spot while Intel is chugging along at 6000 too.

If you want a more realistic AMD vs Intel vs Nvidia channel, I watch Bang4BuckPC Gamer. He tunes his hardware on a CL, and routinely does comparisons with all his hardware (X3D, 14900k, 7900xtx, 4090, etc...).

1 hour ago, Papusan said:

So even with the MC 0x129 with the new XOC beta bios you got back the SA bug.... Hmmm.

Your reply. I tested the ASUS XOC BIOS that @safedisk posted (v9933) and the SA bug returned to that CPU even with 0x129 MC.

What a disgusting soap opera this has become with the different motherboard manufacturers. 1.6V when loading up the OS with the new so called fixed bios (MC 0x129). Could be a defective board, but who knows.


Intel can push the new MC, but it still comes down to MB makers responsibly enforcing it while also allowing advanced users to disable it if they see fit. I am sure we will see a few more BIOS updates fleshing it out.

As for the SA Bug, when all is said and done, I am still setting my SA back to 1.18 for 8200 because it works and no need to feed more voltage than required. Of course for benching and testing, I let it run free. 🙂

-

2 hours ago, Papusan said:

Not everyone has a Y-cruncher/OCCT profile ready for the new game😁

Despite Intel patch, Black Myth: Wukong devs warn about Core 13th and 14th Gen CPU crashing

A nice mix of Intel and AMD systems crashing in here... https://steamcommunity.com/app/2358720/discussions/0/6149189044492760562/?ctp=3

With the way I tier my profiles based on power requirements for games to boost my clocks and scale, it will quickly show which games are sucking down the most power and require higher tier/lower clock settings on my modest AIO setup.

This was the first game I ran into that all my previous auto/lower power tiers crashed. This was even before it could get to any type of shader loading even. Shaders just kicked it up a notch.

I had no problems running it on my 4090/7900xtx and 7950x3d/14900ks (post miser power acknowledgement lol).

Oh, and happy birthday @Papusan! Hope it was a good one and here's to many more brother!

1 hour ago, tps3443 said:

I already knew this was gonna give people trouble after seeing the 297 watt with my chip at 5.9-6.2/4.8E/5.0R. I am using bios 9901 with Micro code 0123. The shader compiles are getting intense these days.

True, but unfortunately Joe User isn't going to know what it is or how to handle it, and will either refund the game or blame hardware that might be unstable on many fronts. Me personally? I like tinkering and figuring out wth is going on. 🙂

297w on your setup is serious for a game, yikes. This might be a good wake-up call for Intel, and to an extent AMD, going forward to maybe not push their chips so hard trying to one-up each other and get back to static, realistic settings.

-

45 minutes ago, tps3443 said:

Do you have trouble with the Jedi Survivor game as well? That's another CPU intensive one I think.

I do not have that one. Is it free or is there a demo?

With adjustments (using my Y-cruncher/OCCT profile), Wukong works perfectly now. I just didn't know I was going to have to roll out the bigger guns profile to do it, but it's understandable. Even though it pulled only 226w max at 5.6 compiling shaders and running the demo, it still needs 1.31v set (down from 1.32v) and 1.184v under load to run. Hogwarts and Fortnite UE5 worked just fine, but Wukong must have some special sauce mixed in. 🤣

-

3 hours ago, Mr. Fox said:

I would not even be able to test the Intel Application Optimizer on the Z790I Edge if I wanted to. I was going to see if it made any difference with the 3090 Ti but the menu option for Intel DTPF is missing from where it is supposed to be according to MSI documentation (right below the setting for CFG Lock). I wish I knew what the key combo is for MSI desktop firmware developer mode to access all menus. The old key combo that worked on laptops does not work on the desktop motherboard. It is stupid that MSI also hides the IA VR Limit menu. So, you cannot set a cap on the VRM voltage output (and therefore can't limit VCore properly) in their firmware. Super dumb.

Ugh, MSI is supposed to be right up there with Asus in regards to BIOS controls. One thing I can say about Asus is they really do give you the kitchen sink and then some. EVGA was up there too. I always considered MSI next, but watching BZ flip around in Gigabyte's BIOS, it seems pretty competent these days too.

ASRock is missing a lot of fine/granular controls, and I find their FIVR controls a bit lacking. So far, it is Asus and Gigabyte with the ultimate cut-off valve for vrout max settings.

1 hour ago, tps3443 said:

Real world stability testing is always the best. These modern games are stout. People used to pick on the idea of using games as stability testing. Now most of the time systems can pass through stability testing and fail to run games lol.

When it comes to games, I have always run a mix of configs allowing me to scale to the game's demands and reap the rewards in clock settings. It lets me really wring out every last bit of performance per game from my chips without trying to implode them. 🙂

What I usually do is when I find a game or stressor I want/need to run, I will then adjust around it and create another profile tier to run. For example, with WoW, Starfield and FO76, I can even get away with tuned/dialed-in auto and run it up to 6GHz all core if I want, but 5.9 is the perfect sweet spot. I could most likely run FO76 at 6.1GHz all core. CP2077 I can do 5.8 all core.

This Wukong benchmark? I had to go back and use my "big boy" profile at 5.6, which means I'll have to adjust up to find 5.7; most likely 5.8 won't run, but it might. 5.9 is definitely a pipe dream on my lidded AIO setup.

With that being said, I absolutely agree that real world stability is the best as it assures everything will run, but the cost is performance left on the table when a game can run more with less.
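The tiering above amounts to a lookup table: each game maps to the fastest profile it has been validated on, and anything not yet tested starts on the conservative "big boy" tier until it earns a faster one (real-world stability first, then claw back clocks). A minimal sketch with hypothetical tier names; the P-core clocks are the ones mentioned in this post, and in practice the "lookup" is just loading a saved BIOS profile by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    p_core_ghz: float


# Hypothetical tiers; the clocks are the ones mentioned in the post above.
PROFILES = {
    "light": Profile("light", 5.9),    # tuned/dialed-in auto
    "medium": Profile("medium", 5.8),
    "heavy": Profile("heavy", 5.6),    # Y-cruncher/OCCT "big boy" profile
}

# Games that have already been validated on a tier.
GAME_TIER = {
    "WoW": "light",
    "Starfield": "light",
    "FO76": "light",
    "CP2077": "medium",
    "Black Myth: Wukong": "heavy",
}


def pick_profile(game: str) -> Profile:
    """Fastest tier the game is validated on; untested games get the safe tier."""
    return PROFILES[GAME_TIER.get(game, "heavy")]


if __name__ == "__main__":
    for g in ["WoW", "CP2077", "Black Myth: Wukong", "Some New Game"]:
        p = pick_profile(g)
        print(f"{g}: {p.name} ({p.p_core_ghz} GHz P-core)")
```

Defaulting unknown titles to the heavy tier is the trade-off described above: guaranteed to run, at the cost of clocks left on the table until the game gets its own tier.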

Did you ever find out what made your monitoring text turn color and what it meant when running this benchmark? I'm curious.

-

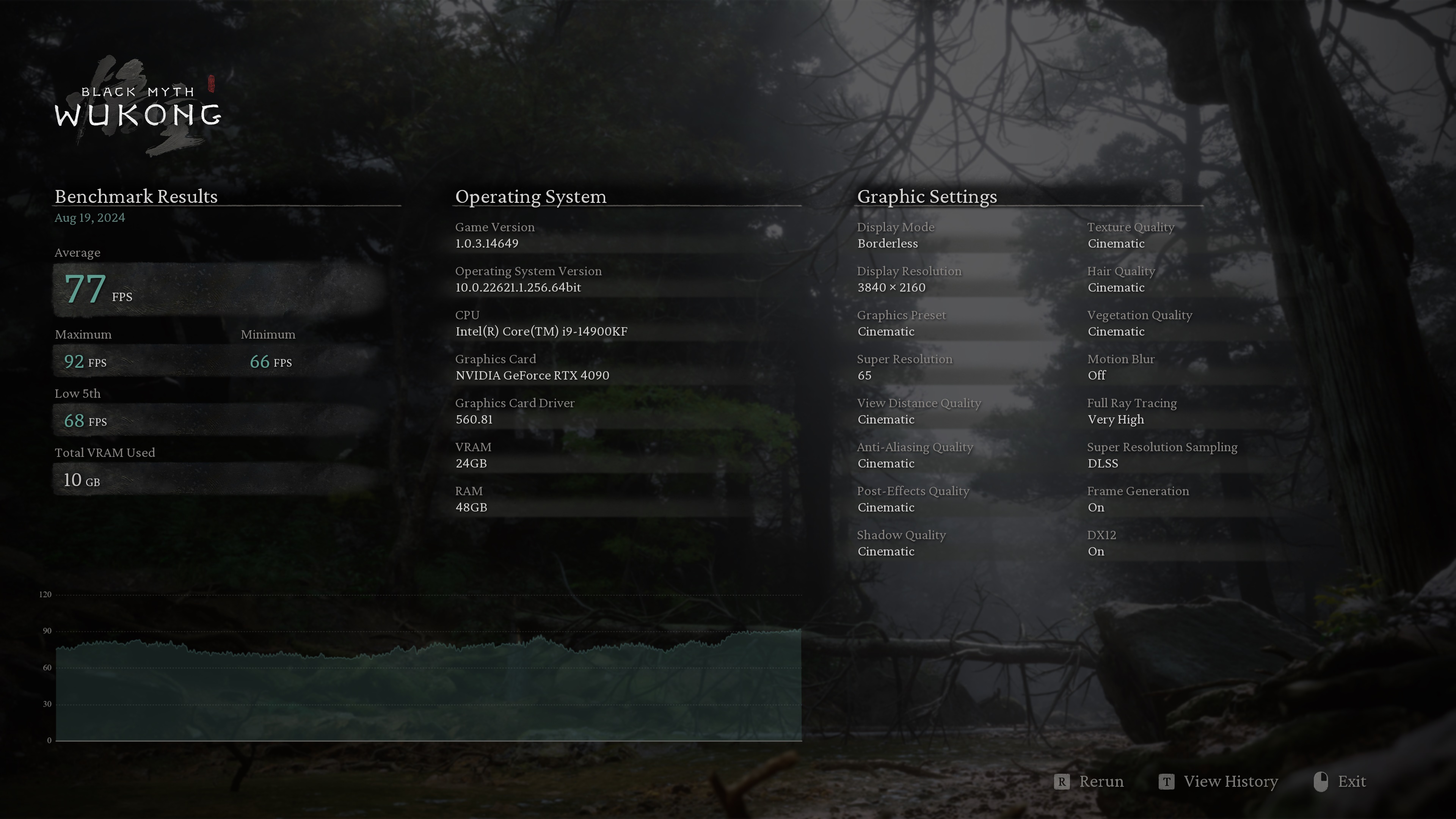

42 minutes ago, Mr. Fox said:

OK this benchmark draws insane amounts of power for some reason if run on Windows 11. And, it is not only during shader compiling. It's from start to finish. Just for giggles I wanted to see if the FPS got better or worse, so I booted into Windows 11. I tripped my circuit breaker twice and had to leave my work computer turned off when testing on Windows 11 for some reason. I almost never use Windows 11 and now I find myself wondering if that (Windows 11) has something to do with the Intel CPU degradation being complained about. This did not happen on Windows 10 (circuit breaker tripping thing). It seems like the power utilization spirals out of control on Windows 11, but does not when I am running the benchmark on Windows 10.

On Windows 11, my framerates are higher by about 5 FPS, but the power draw increases by more than the minor increase in FPS. The WireView meter on the 4090 Suprim shows up to 490W (almost 100W more than Windows 10 does) and almost 100W more on the CPU on Windows 11. My Kill-A-Watt showed up to 790W being pulled from the wall.

That is a bug in the game, not unique to you. I experienced exactly the same thing. To get the scale to 66 in the benchmark I have to set it to 67 or the benchmark runs at 65%. But the scores are identical with 65 or 66. No measurable difference. This benchmark is also very consistent. Back-to-back runs stay the same in terms of FPS and vary by zero to 1 FPS between runs.

65 (settings show 66)

66 (settings show 67)

Yeah, the power draw is one thing but having to actually use my Y-cruncher/OCCT profile to get it to run for a game benchmark is crazy. Using my other settings, it would just exit out before it even got to the shaders compiler screen. I'll have to go back in and figure out where 5.7 and 5.8 can get it to run too unless the pull/heat gets a little too much for my modest setup.

Glad to know I wasn't seeing things as I tried three times to get my adjusted 66 to then show up on the final results screen.

Seems like over on the OCN forums, they have all decided on max everything at 4k, Super Resolution setting of 75 and trying to see how many can break 80fps and by how much. I saw a couple 83fps scores.

14 minutes ago, ryan said:

Consistent here also..buttery smooth 4fps maxed..I prefer this cinematic experience. Goes to show what a lot of advances in tech and obscene amounts of time can accomplish..all kidding aside it is actually a very consistent and accurate benchmark with great visuals. Not much difference from Time Spy's accuracy.

LOL.... I chuckled nicely at that one. 🙂

-

2 hours ago, tps3443 said:

I mean, it’s still 8.5% and your resolution slider was 65% and not 66% 😂 But, I really don’t think it matters about CPU OC, and or RAM OC. I’m pretty sure an i3 with DDR4 3200 would probably yield a similar result as me now with my 4090. My 4090 doesn’t overclock well at all. I saw one 4090 boosting near my max OC only his was completely stock (Very sad) 😭 but you probably will beat me in this one easily @electrosoft. I’d go ahead and do a full on OC run with the GPU OCed. I think this benchmark might come down to whose GPU has best silicon. I didn’t exactly see any action in the benchmark, it looks like a bunch of senior animal ninjas slowly walking around their retirement resort in the jungle. 😂

It's 66. I don't know why it kept showing 65, but when I went back in and manually set it to 66, it would show 65. I actually did the run *3* times trying to get it to show the 66 I selected! 🙂

1 hour ago, tps3443 said:

Thinking about blocking it?

2 hours ago, Mr. Fox said:

The game has great graphics no matter what settings are chosen. Very good appearance. I am so glad the benchmark is free. Now I don't need to buy the game to get the benchmark. (Not a fan of the RPG genre, so it is highly unlikely that I will buy it.)

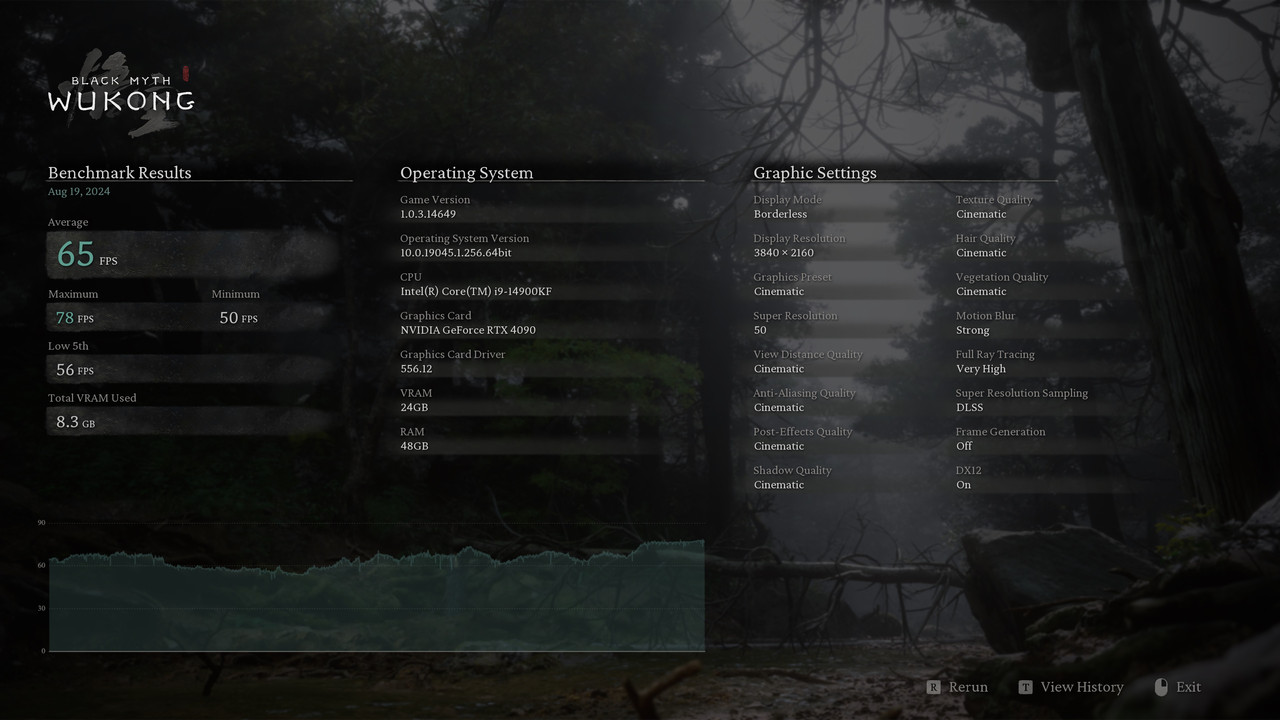

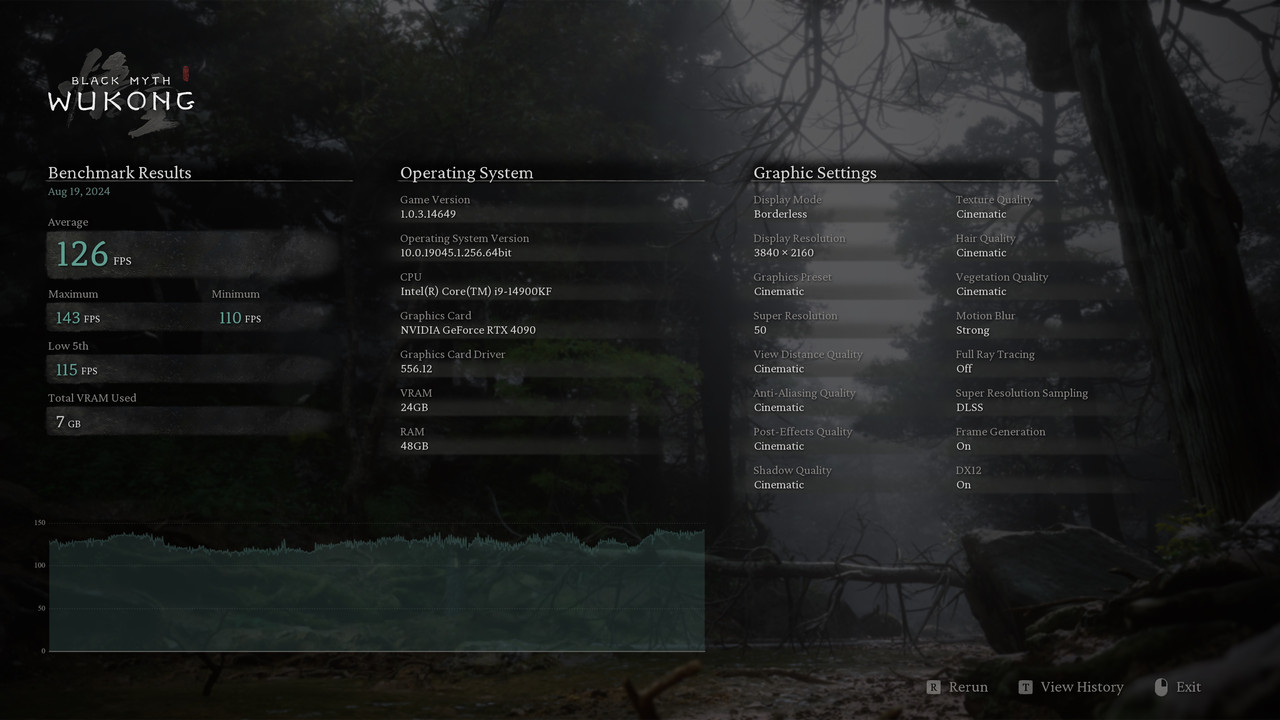

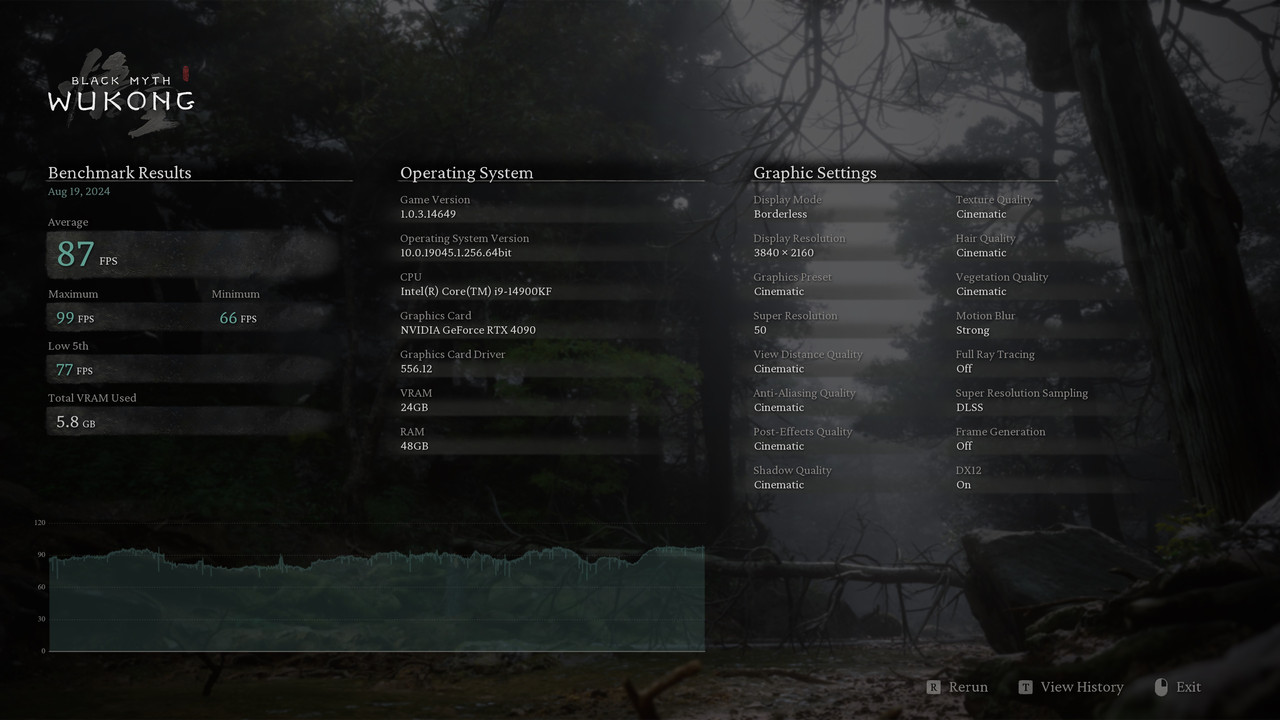

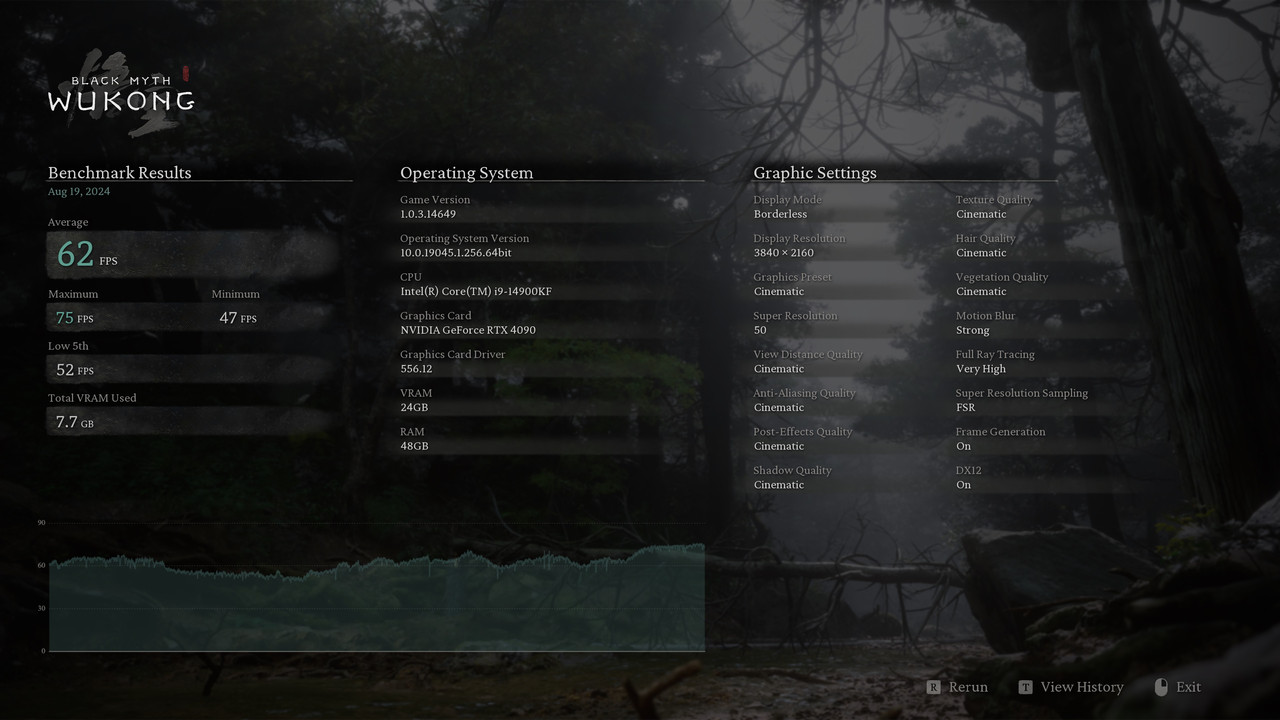

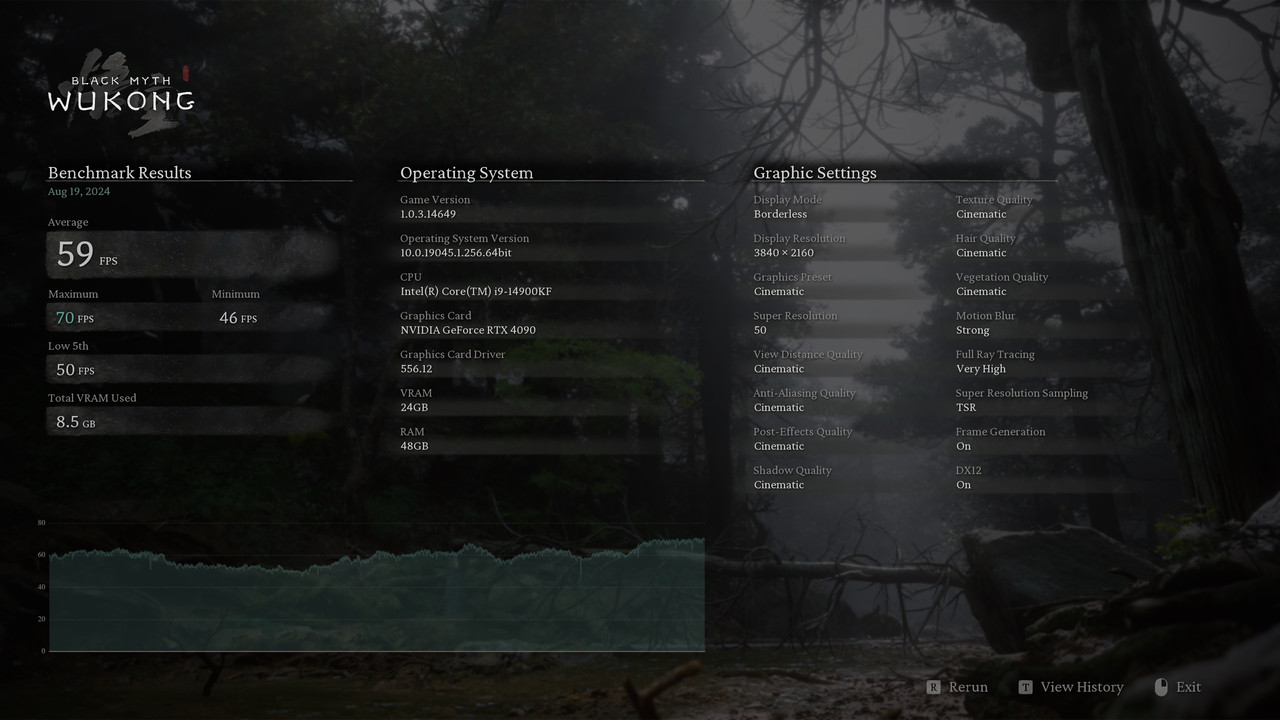

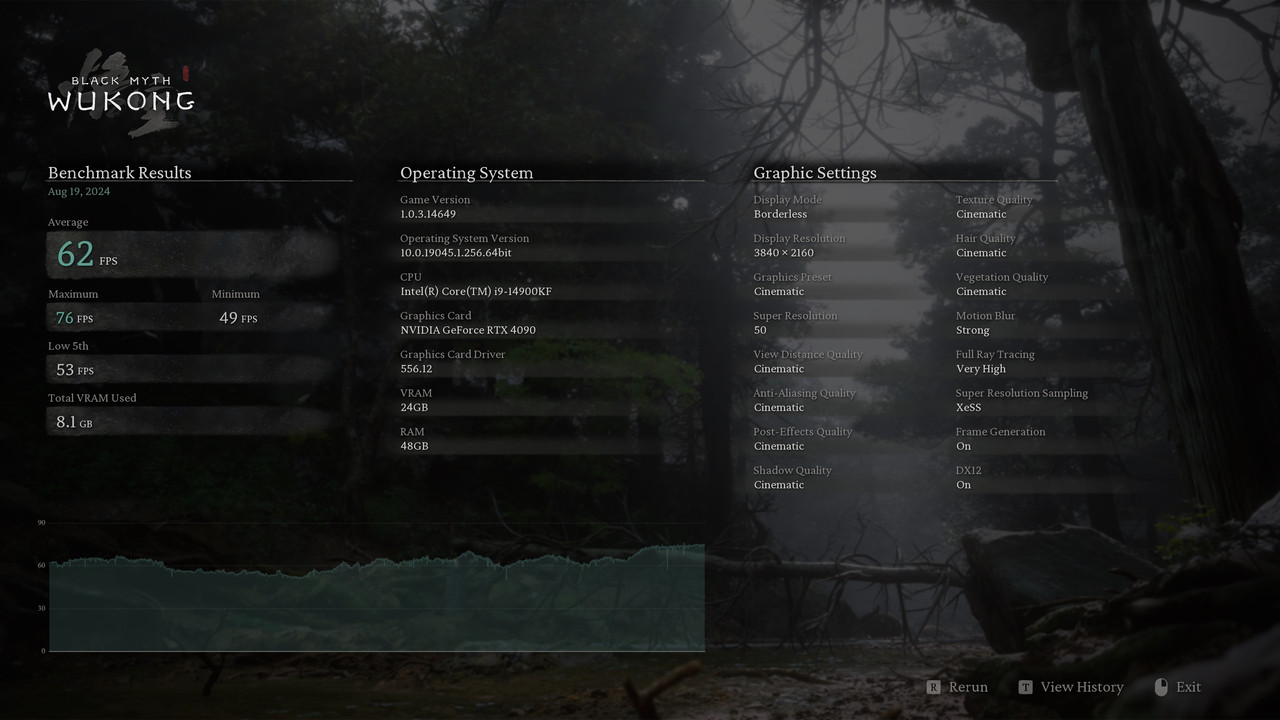

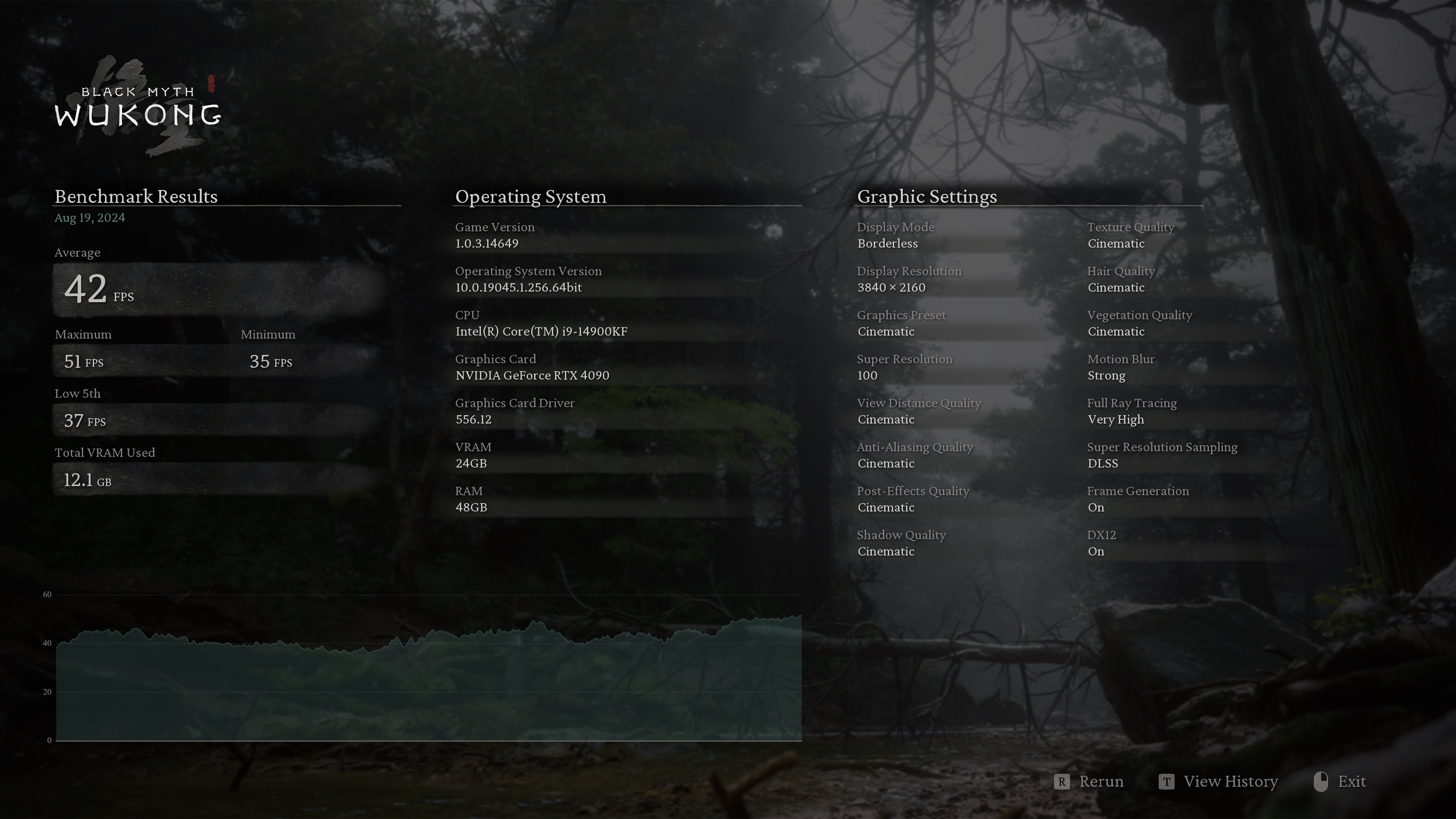

For comparison:

14900KF @ 59x-P, 48x-E, 52x Cache

Memory @ 8400 CL38

4090 Stock

Display @ 4K 144Hz

DLSS, RT On-Very High, Frame Generation On

DLSS, RT On-Very High, Frame Generation Off

DLSS, RT OFF, Frame Generation On

DLSS, RT OFF, Frame Generation OFF

FSR, RT On-Very High, Frame Generation On

TSR, RT On-Very High, Frame Generation On

XeSS, RT On-Very High, Frame Generation On

DLSS SR 100%, DLSS, RT On-Very High, Frame Generation On

Max everything out and set Super Resolution to 66 to see how it stacks up to @tps3443 and my scores at the same settings.

-

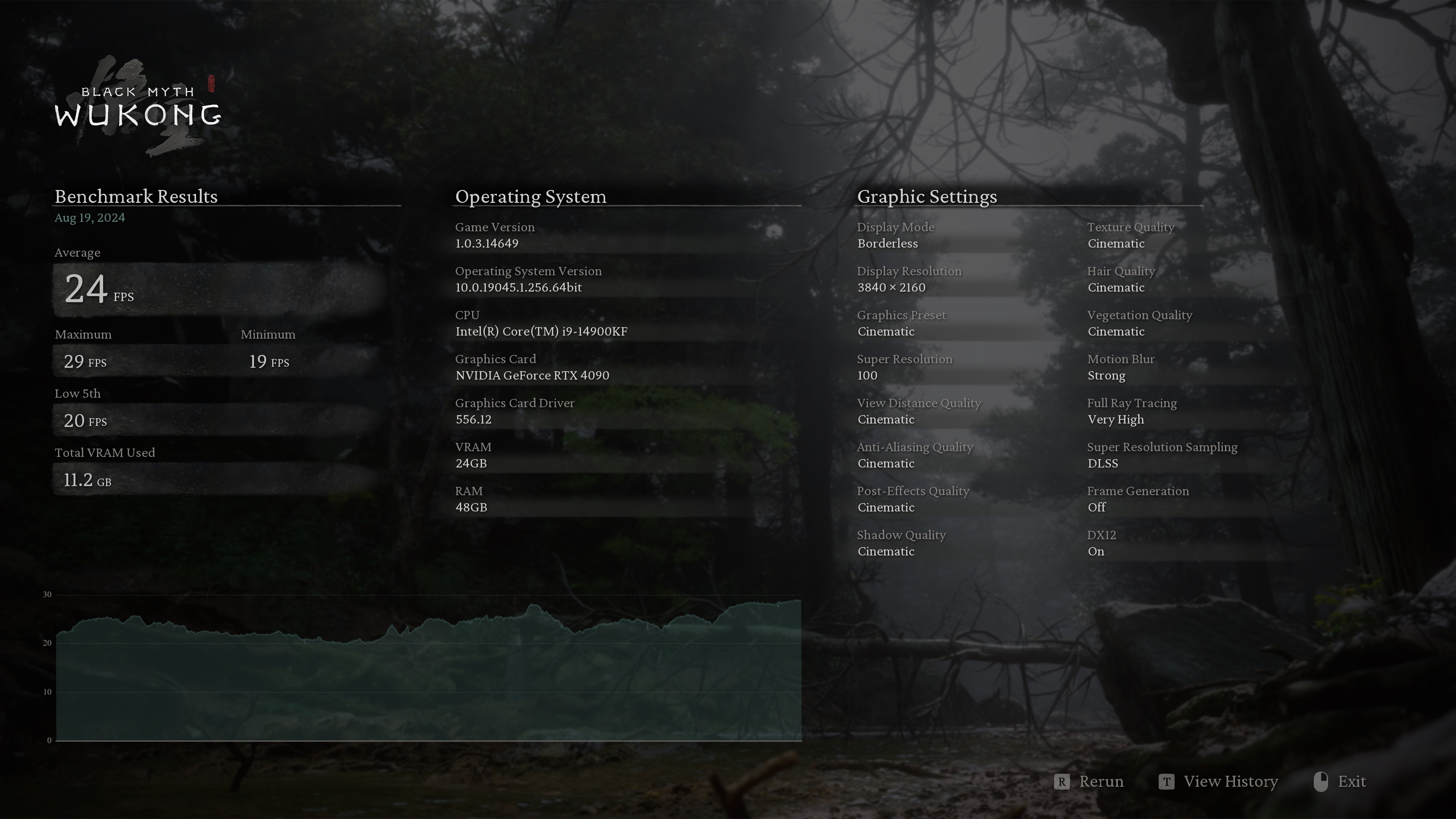

And here are some AMD 7900XTX runs on the 14900KS. I had to actually bump my Vcore up to 1.32 for 5.6/4.5/5.0 (1.2 under load) to get it to run properly, not just with shader compiles but in normal use too. This thing is hungry. I think there are going to be a lot of systems that are going to crash/won't run this on launch. This is the same profile I have to run for even small OCCT and Y-cruncher testing. I can pass CB23/CB15 at 1.29 on the new bios and 1.28 (1.152v load) on the old bios. But this thing required the same profile as the ultra hungry stuff. Temps were good though! 🙂

This is why I like having multiple profiles. I can load up 5.9 all-core auto-tuned for WoW and FO76, and 5.6 as above for anything heavy-hitting.

As for AMD, er, well.... let's just say RT really isn't in the future for them with this game in any meaningful way.

Using the same settings as my previous run on the 4090 (and the same as @tps3443's):

7900XTX stock + 14900KS fixed 56x/45x/50x RT ON:

(Note: this was the first run, so the HWInfo data includes the shader compile.)

7900XTX stock + 14900KS fixed 56x/45x/50x RT OFF:


3 hours ago, tps3443 said:

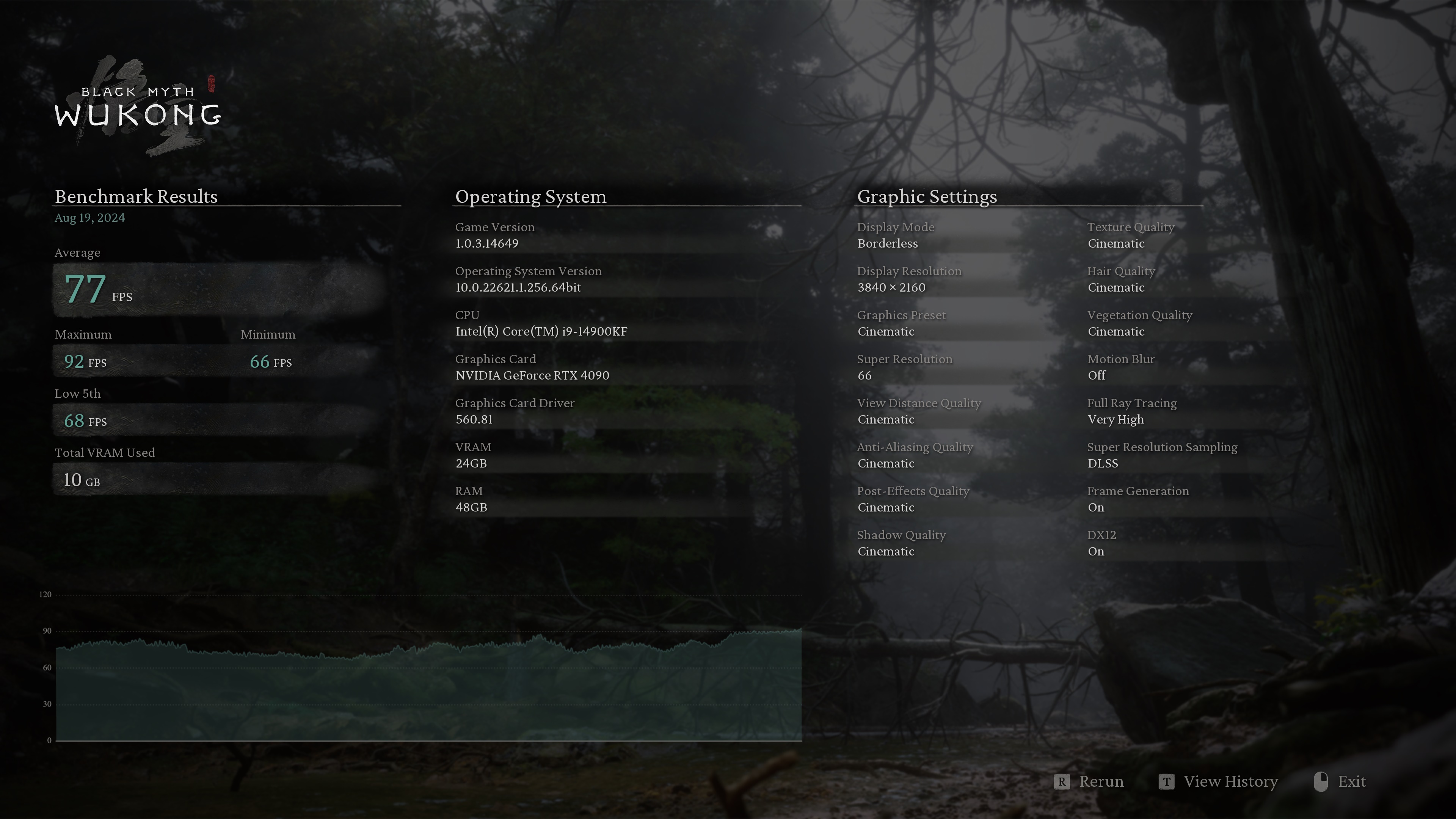

Tested the Black Myth: Wukong benchmark. My 4090 does not overclock particularly well. It held a 2,895MHz boost through the entire benchmark. But here goes. It seems the 4090 will handle this title pretty well maxed out at 4K, and with further updates and optimization I hope it gets even better! I tried disabling motion blur and setting anti-aliasing to low, but this only resulted in a +1 FPS improvement lol. So, I left them on for the run below.

These settings are how I will play the game.

4K / DLSS @ 66% (Quality) / Ray Tracing = ON / Frame Gen = ON

I have to say, a 78 FPS average for a 4090 at the end of its life on a AAA title that's just coming out is really fantastic. It’s definitely doing its job. Thank goodness for DLSS and Frame Gen. (10 years ago, a game like this would just mop the floor with your fancy high-end GPU, and that was that.)

During the shader compile, my CPU peaked at a thirsty 297.7 watts of juice at 5.9P/4.8E/5.0R with DDR5-8600 C36-49. Not sure why the font turned this color. But yeah, we all know some of these modern game shader compiles can use as much power as Cinebench lol.

Happy Birthday @Papusan 🎊

Pure 100% stock CPU and stock GPU, memory at only 6000 but tuned, IF at stock 2000. So a 6 FPS difference? That's it? Hmmm....

And yeah, the shader compiles are brutal. I'm fishing through various setups on my 14900KS on the AIO to find what works best....7950X3D just shrugged it off and said, "and what?" 🤣


*Official Benchmark Thread* - Post it here or it didn't happen :D

in Desktop Hardware


I'm watching this now (I'm really behind on my YT videos with work, life and WoW Xpac lol).

He's crapping all over the 7800X3D, but the "cake and eat it too" answer is the 7950X3D.

I'm surprised he likes the 9950X so much. Bodes well for the 9950X3D. I like that it is more responsive to BIOS spelunking, but set aside Jufes's standard "I found out all these tweaks, but I won't say what they are; come to my Discord and pay for it" BS. Here is the "magic" I expect to see:

Basically, he's going to turn off the weaker CCD, eliminating any and all cross-CCD latency and scheduler issues (since the CPU is new, we get to do the whole 7950/7900 song and dance again), run the tuned, binned CCD with tuned memory, and declare he's created some super magic....the end. Welcome to the 7950X/7950X3D circa one year ago, Jufes.

He's apparently new to AMD's dual-CCD binning strategy, which is kinda, sorta an Intel approach (P-cores are greased lightning, E-cores not so much, but for multithreading that's where the magic is). You get one CCD that is binned magic and then a so-so CCD. This has been around since the 5950X. The weaker CCD was a deal breaker for him; he wanted two binned, magical CCDs. I mean, I don't blame him on that one. For $650, I would expect both my CCDs to be equally binned 🙂

In the end, he's so butthurt over that one weaker CCD that he opts to return it.

He then says that if you have a choice between the 9950X and the 7800X3D and you're an enthusiast, you pick the 9950X. No, you wait and pick the 9950X3D.

Overall, he loves the new architecture but that second CCD is the deal breaker.

I'm very much looking forward to the 9950X3D.....then again I'm also looking forward to 15th gen too. 🙂

"I'm not revealing how to tune the 9950x except to my GOD (discord tier) supporters" ....GTFOH with that BS.

The latency of even Optane is nowhere near the level of DDR5 or VRAM, but go ahead and give it a try.......it can only help. If the pricing were a bit better, I'd give one a spin for testing. 🙂