Leaderboard

Popular Content

Showing content with the highest reputation since 01/27/2026 in all areas

-

For the past 6+ years I have used my own desktop PCs for work, and now I will be using a company-issued turdbook instead. I repurposed my dual Acer 4K 160Hz panels for the work PC since I will need the massive screen real estate for work. I grabbed a 40" Samsung Odyssey G75F 5120x2160 180Hz WUHD monster on sale at Micro Center for $638 (model LS40FG75DENXZA), and it seems pretty great so far. It doesn't get along with the 4090, and neither does my ASUS 4K 120Hz monitor. When using DisplayPort, I have to lower the resolution to avoid a black screen in the BIOS or Windows Boot Manager (the ASUS screen has the same problem with the 4090). Something about 40-series firmware and high-refresh-rate 4K over DisplayPort is glitchy, and there are many examples of people complaining about this online. Over HDMI 2.1 it functions flawlessly. The 5090 doesn't care whether I use DisplayPort or HDMI.

6 points

-

https://www.overclock.net/posts/29562444/ What grade are you in? Are your classmates really mean to you, or is it a parenting issue?

6 points

-

The main problem is that this community has rather few members, and not everyone who is left is tinkering with their laptops anymore. Like me, for example. Normally, I only log in once a week, because there is not much interesting activity. This is not what NoteBookReview once was, unfortunately. In addition, there are not many models available on the market that can be used for modding, almost none with current hardware, which makes such a forum really difficult to maintain.

6 points

-

While I missed the boat on the best pricing for 8000MT+ RAM, I am happy I spent $150 to $180 on each of the nine 4TB NVMe SSDs I bought over 2025. I went nuts on the Acer GM7000 SSDs. I did a quick calculation: I have over 100TB in SSDs (spread across 8TB, 4TB, 2TB and 1TB drives) in operation. If I were to buy them at today's prices, I'd have to spend over 30K. Damn! I guess being a frugal data hoarder has its benefits once in a while.

6 points
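A quick back-of-the-envelope check of those figures, sketched in Python. It treats "over 100TB" and "over 30K" as exactly 100 TB and $30,000, so the per-TB numbers are rough, and it only uses the nine 4TB drives whose prices are stated above; the prices of the other drives aren't given.

```python
# Rough check of the SSD numbers in the post above.
# Assumption: "over 100TB" and "over 30K" are treated as exactly 100 TB and $30,000.

DRIVE_COUNT = 9                   # nine 4TB drives bought over 2025
DRIVE_TB = 4
PRICE_LOW, PRICE_HIGH = 150, 180  # USD paid per 4TB drive


def per_tb(price_usd: float, capacity_tb: float) -> float:
    """Price per terabyte in USD."""
    return price_usd / capacity_tb


spent_low = DRIVE_COUNT * PRICE_LOW
spent_high = DRIVE_COUNT * PRICE_HIGH
paid_per_tb_low = per_tb(PRICE_LOW, DRIVE_TB)
paid_per_tb_high = per_tb(PRICE_HIGH, DRIVE_TB)

# Rough per-TB price implied by "over 30K for over 100TB" at today's prices
today_per_tb = per_tb(30_000, 100)

print(f"Spent on the nine 4TB drives: ${spent_low:,} to ${spent_high:,}")
print(f"Paid per TB: ${paid_per_tb_low:.2f} to ${paid_per_tb_high:.2f}")
print(f"Today's price per TB (rough): ${today_per_tb:.2f}")
print(f"Roughly {today_per_tb / paid_per_tb_high:.1f}x to "
      f"{today_per_tb / paid_per_tb_low:.1f}x what was paid")
```

With those inputs the nine drives cost $1,350 to $1,620, and today's rough price works out to about 6.7x to 8x the $37.50 to $45 per TB that was paid.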

-

Grab it before it's too late 😁 Remember, it's a limited edition. "MSI RTX 5090 Lightning Z GPUs listed on eBay for almost $27,000 — limited edition graphics card demands 500% premium from resellers" As you can see... The world is filled with stupids. I'm sure there are some out there who are willing to pay $27K for the Lightning.

5 points

-

5 points

-

I sure hope so (the return of Intel, back to HEDT and more PCIe lanes). This has been the longest stretch of time I have ridden an AMD donkey. It is the first time the experience was respectable and not plagued with functionality issues, yet with overclocking being my primary source of computer satisfaction, it feels so lackluster, mediocre, subdued and limiting compared to what I had grown used to with Intel. I've also heard some very bad things (like no longer having "unlimited" power and current options in the firmware) and want to see how those things shake out before calling it a win for Intel. My start date for the new job is 2/23. I'm excited about that. My 90-day unpaid "vacation" has been a real test of faith. As an added blessing (thank you, Lord), it will be a compensation upgrade, so as long as the new Intel prices are not off-the-rails, NVIDIA-level stupid and the performance rumors turn out to be true, I will likely be looking to make the move back to Team Blue. I'm not going to shoot until I see the whites of their eyes. In an era where lying and misrepresentation are normal marketing tactics, early adoption isn't very smart. Since Linux developers seem not to be hardware junkies and early adopters, it usually takes a while for Linux support to surface as well. Since Micro$lop Windoze is no longer my OS of choice, that also needs to be looked at before leaping. I fully expect that there will be no driver support for Windoze 10 and that the feces OS (Winduhz 11) will likely be the only OS supported. One step forward, two steps backward.

5 points

-

We are a very small, tight-knit community that started as a cast-off from the original NBR forums, which were already dying to the point that they were shut down entirely. Those forums at one point were so large and influential that representatives from the major laptop makers would frequent them on the regular for interaction and feedback, but with changing market conditions, social media and other means of delivery, coupled with niche and specialized laptops dying out and being replaced by thin, fast and cheap laptops, the writing was on the wall. You can't blame laptop makers for this, as they simply go where the $$$ is, and the vast majority of consumers have zero desire to tinker with their laptops; they just want them to work, have good battery life and be light and portable. Every year, we saw true DTRs dying off and those original representatives basically abandoning NBR, until all that was left was Clevo. Their last somewhat-true DTR based on desktop chips was their 12th-gen hybrid 15.6" model (Clevo NH55), which did poorly enough to signal the end of DTR anything as we knew it, with interchangeable CPUs and GPUs. The first real deathblow was Nvidia basically abandoning MXM and upgradeability standards.

-----

A community such as this is only as good as the enthusiasts who still have a passion for older hardware, and many of those, myself included, have moved on to more modern laptops because all the modding in the world will not approach the power modern models provide. The best you can do with modern laptops is look for models that have, or at least offer the possibility of, a flexible BIOS so you can tune your hardware. Prema still offers his BIOS services commercially on some models, followed by MSI, which still has its excellent unlockable BIOS options. Dell/Alienware does offer some limited options, as does Asus.
You can also look at modding the cooling system itself, from something as basic as upgrading the thermal interface material to modding the heatsink and fans themselves, along with the chassis, to improve airflow. Good luck!

5 points

-

I called this early on: at worst, 8000 has drawn parity with 6000/6400, and at best it is usually a little bit better overall, but nothing to write home about. 6000/6400 still shines in extremely latency-sensitive games, though those are few and far between these days.... For X3D, we've collected enough data and seen enough game benchmarking to know it truly is a case-by-case benefit, with a massive differential ranging from running only a few points better than non-X3D at best to running up to 86% faster than a 9700X, showing how brutal it can get if a game really loves that X3D cache and can comfortably sit in it the majority of the time. Seriously, I'm looking forward to Intel's foray into 3D cache with BLLC..... Tight 6000 and tight 8000 are the sweet spots for AMD. Buildzoid, while taking his first venture into Arrow Lake, talks about some bandwidth and platform memory limitations on AM5. Intel is really where you want to be to push memory and see tangible gains from scaling; even up to 9000 in G2 you will see some gains.

5 points

-

It's not every day I see the editors/writers from PCWorld use their brains.

"If you buy Razer’s insane $1337 mouse, I will be very disappointed in you"

I remember the first time I bought a Razer mouse. Inside the box was a letter printed on fancy vellum paper. It opened with, “Welcome to the cult of Razer.” It appears that this isn’t just a cheeky marketing slogan, Razer means it genuinely. Because only brainwashed cult members would pay $1337 for a mouse. It is, in a word, repugnant. In a more accessible word, it’s greedy. In a more all-encompassing and entirely appropriate word, the Razer Boomslang 20th Anniversary Edition is bullshit. Razer is taking pre-orders for the mouse in four days. If you buy one, and I want you to imagine this in the most overbearing and judgmental dad voice possible, I will be very disappointed in you.

5 points

-

The least they could do is pretend they love us and say nice things while they are choking us and pulling our hair.

5 points

-

Soooooo, I used my last three days of sick leave to get some shit done at home, including computer stuff 🙂 > Applied full backplate coverage of TG Putty Pro - check! If anyone is interested, you need about 250-300 grams of putty to cover the whole backside of an air-cooled 5090. > While the GPU was out, I used the opportunity to also install the WireView Pro II and replace the GPU power cable (angled back to straight, both Seasonic). > Good news: both cable and socket were still pristine, not even a whiff of discoloration or burn marks. > Good news 2: did a quick max OC test in Alan Wake at max settings with the gigachad vBIOS; the max variance I saw between pins was 1.1 amps (7.5 vs. 8.6) under load, which I can totally live with! > Also finally got around to delidding my second 9950X3D and putting it under the TG High Performance Heatspreader with LM. > While I was at it, did some dusting and cleaned the tempered glass windows; clear views of the RGB rainbow puke once more 😄 I still need to do testing with regard to GPU/CPU temps after the changes, as well as check how the RAM OC was impacted. You might remember that I suspected a suboptimal CPU mount for not being able to reach my previously stable 8000 setting. Fingers crossed!

5 points
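For anyone wondering what that 1.1 A spread means in watts, here is a rough Python sketch. It assumes (not stated in the post) that the 12V-2x6 connector carries the load on six 12 V pins at a nominal 12.0 V, and that the 7.5 A and 8.6 A readings were the lowest and highest pins at the same moment. The other four pins are only known to sit somewhere in between, so the result is a bracket, not a measurement.

```python
# Rough bracket on connector load from the two per-pin readings quoted above.
# Assumptions (not from the post): six current-carrying 12 V pins, a nominal
# 12.0 V rail, and both readings taken at the same moment.

PINS_12V = 6
RAIL_VOLTS = 12.0
lowest_amps, highest_amps = 7.5, 8.6

spread_amps = round(highest_amps - lowest_amps, 2)
# How much more current the busiest pin carries than the least busy one, in percent
spread_pct = 100 * (highest_amps - lowest_amps) / lowest_amps

# One pin sits at each extreme; the other four lie somewhere between the two readings.
others = PINS_12V - 2
total_amps_min = lowest_amps + highest_amps + others * lowest_amps
total_amps_max = lowest_amps + highest_amps + others * highest_amps
power_min_w = total_amps_min * RAIL_VOLTS
power_max_w = total_amps_max * RAIL_VOLTS

print(f"Spread: {spread_amps} A ({spread_pct:.1f}% above the lowest pin)")
print(f"Total current: {total_amps_min:.1f} to {total_amps_max:.1f} A")
print(f"Connector load: roughly {power_min_w:.0f} to {power_max_w:.0f} W")
```

With the quoted numbers this lands at roughly 553 to 606 W on the connector, with the busiest pin carrying about 14.7% more current than the least busy one.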

-

Hey guys! On the Z490-H for now until the replacement motherboard arrives. I have also acquired the GPU risers to split the x16 slot into x8/x8 so I can run 2 GPUs off the single slot via bifurcation. The 3090Ti will be coming out of retirement to some degree for Lossless Scaling, but in the interim I did test it conceptually with the 3090Ti as raster and the 1080Ti as frame gen. It seems to work pretty well in the couple of titles that I have tested. Allegedly you don't want the raster GPU to be fully saturated, as that increases the time it takes to send the frames to the secondary GPU. Honestly, I am pretty surprised how well it worked given the software's price point. The main games I have tested were Monster Hunter Wilds and Borderlands 4; both games have piss-poor engines. I was able to hit 120 FPS in both. B4 did crash a couple of times, but I was mucking with the settings, as that game can't run without some scaling or FG. I did also try Horizon Forbidden West, but I should have gone to the DLC area, as it's more GPU-intensive. For people who don't want to pony up the cash for new GPUs, I think this may be a viable option on the table, much in the way SLI/Xfire used to be for me in the old days. Nvidia still pisses me off, though. If I install the 591 driver, the 1080Ti doesn't work; if I install the latest driver for the 1080Ti (581), then the 3090Ti doesn't work. I have to let Windows install 560 in order for both to work until I figure out another way to go about it.

5 points

-

I can smell it, too. This gal lets a Jensen in the car. Or, is it a Nadella? They smell the same. And, I can feel it.

5 points

-

5 points

-

Hey fellas. Yeah, I’m just burned out on just barely gett’n by, is all. I’m 35 years old now and have been contemplating life a lot lately. My wife and I both work full time from home, and life is good in the new house. Bills are paid, but that’s it lol. Things are getting really expensive lately (like my daughter's new braces 😬), and the kids will need cars in 2-3 years (I’m broke). I need to do something on the side and make some major moves. About ten years ago I used to buy and sell junk cars, and this was extremely lucrative. There were times when making $2,500 on a good day wasn’t impossible. I have been complacent and living on autopilot for several years now, doing the minimum. The 5090 had to go, though; I needed the money to help fund a truck + car trailer + winch. In this case, not a truck but close: I bought a clean and well-kept used Tahoe Z71 4x4. Lately I learned that we've got to step outside of that comfort zone and take some risks in order to gain something or see change. If nothing changes, nothing changes. Life is hard, none of us make it out alive. 😂

5 points

-

Maybe someday I can be bothered with memory tuning, but it's not going to be today, or tomorrow, or the day after :) I enjoy overclocking that yields tangible gains in gaming. Looks like PCIe bifurcation works on my Aorus ITX X570. That being said, I had to scrunch up the ribbon cables so much for the test that I think they were rendered non-functional. The 7900 XTX wouldn't show up at all, and the 3090Ti was misbehaving quite severely, reporting the link at PCIe 2.0. But it POSTed! So that's progress of sorts. Got a shorter ribbon (60mm), so hopefully that works; I'll test it this weekend.

4 points

-

Not sure if it'd work on other mobos, but I bet there is a generic tool out in the wild somewhere. What happened to memory prices? Chip shortage? I've got a brand new, unopened 2x16GB Trident Z5 6000MHz RGB kit, and I feel extremely wealthy! 🤣

4 points

-

Niiice, nifty lil tool. Would it work on other mobos as well, or is it ASRock-specific? WTF 😮 I wonder how many IMCs he had to bin for that RAM speed (and how stable it is).

4 points

-

Yeah, got $2900 for it, which is about $500 more than I paid a year ago. 1440p was definitely holding it back. For now I will just run my desktop on the iGPU only, which works fine for what I am actually using it for. All I ended up running on the V-Color kit was 8400 C40, as beyond that it was unstable and I could not pinpoint what was causing the issue. The iGPU may be an issue now with the higher MT as well.

4 points

-

Sugi pushing an AM5 rig to the max: 9950X3D at 5800 on all cores, 8800 C34, and a 5090 running the 2500W Lightning BIOS clocking 3427, pretty much rock solid the entire play session.

4 points

-

4 points

-

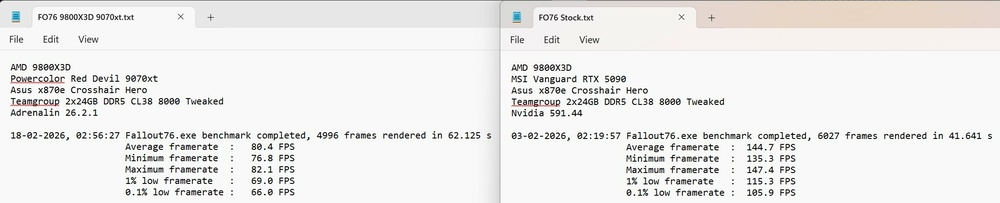

Red Devil is so much quieter and boosts higher than my Gigabyte. IMHO, this is the "premier" card to get this generation of 9070xts, especially since it uses 3x 8-pin vs 12V-2x6. What makes it worse is the positioning of the connector on the Taichi 9070xt, which is overly recessed, or on the Nitro+, where it is on the back of the card and will force a substantial cable bend in every scenario. Best argument for the 9070xt vs the 5070 and 5070ti: Red Devil hitting 3300+ and 3400+ in FO76 at stock.

4 points

-

I returned the X870E Taichi because installing anything in a PCIe slot dropped the GPU to x8. It was installed and running for 30 minutes before I started the RMA. Functionality is the equivalent of an ITX board with only a GPU slot. Very idiotic engineering and lousy bifurcation design decisions are a curse on MOST X870E mobos. We can partially thank AMD for mandating the waste of PCIe lanes on USB4/TB (which most never use and never will). I could see limited use cases for it with a turdbook (like using an eGPU, or having no external display options without it), but USB4/TB is irrelevant and mostly worthless on a desktop with a dedicated GPU.

4 points

-

Had a little time the other day, so I decided to try the Taichi X870. I swapped the Gigabyte Aorus Elite for the Taichi. It took a bit to get the 8000MHz RAM set up, but a BIOS upgrade helped. Did a mild overclock and was able to hit 45k in CB23. If I have a little time this weekend, I may try to break my PB of 46.5k. The firmware is very similar to Gigabyte's (not my favorite); I much prefer the MSI firmware, but it's always fun to play with new hardware.

4 points

-

I bought another x16 to x8/x8 adapter that is a bit more direct and doesn't use the SAS interconnects + daughterboards. If this doesn't work, then I'll just have to eat the L and buy the adapter from C_Payne, as he had tested his adapter with my motherboard years ago as working. Trouble is, the guy is not based in the US, so I'll have to risk overseas shipping + customs. I really want to test the 7900 XTX and 3090Ti together. Though I could go with less, I am entertaining the idea of getting a second 1080mm UT45 for "symmetry" purposes under the AC unit. If so, then I would likely go for a waterblock on the 5800X3D just to make it easier on my fingers. Also bought another PSU for the benching rig: a be quiet! 1000W. If I need more power, I still have 3x 900W for GPUs. Going to be changing up my DD a bit, as the current scenario is not really optimal for benching. God of War Ragnarok took the GPU to 39°C the other night, so I think I am too close to the wall and recirculating air.

4 points

-

Yep! That 285K might have actually been better than the 285K I am running lol. I didn't really test the two fully, but the 285K I sold you did my 8400 CL38 tune at the same voltages, ran 40x D2D with no issue, and only had a very slightly higher P-core voltage and ring voltage. But it still did the same 41x ring and 56x P-cores. The chip I sold you also booted higher E-core clocks on auto voltages lol. I can boot the higher E-core clocks on this chip, but the low-voltage V/F stops it from scaling unless I go in and adjust the V/F offset for point 8. I think that is mostly a function of interpolation failing, while your slightly higher voltage allows for better interpolation and boots with no adjustments. But ya, my 285K can't do 40x D2D unless I run a manual voltage, but I've been gaming on it and testing it for a couple of days now with no issue at 40x. If we had a higher ceiling, you'd likely be able to go higher since you still have voltage room.

4 points

-

4 points

-

Is that 285K that could do D2D 40x at auto voltage the one you sold me? I have not done much tuning with it, sadly, and will probably not end up stretching it much. But it is still a major uplift compared to the 12900KS I used before, especially with encoding jobs. It can even outperform the 24-P-core Xeon 2495X in certain tasks that could not multithread well beyond 8 P-cores. I sold my 5090 a few weeks ago as well, since I still do most of my gaming on my laptop (on which I am now testing out Prema's BIOS, which has been really fun). I have even tested out my 32GB M-die kit, which is XMP 6400 CL32. Obviously not as great as the 48GB M-die, which would do 8400 CL40 out of the box. I need to bench my encoding jobs and see if the faster memory speeds them up. Then again, if I want Nova Lake later this year, the faster memory would probably pair better with it.

4 points

-

I am sorry, but the guy doesn't know what he's talking about. He claimed he was able to "warp" the internal PCB. Clearly, he hasn't watched Northwestrepair; the PCBs on these 5090s are literal heatsinks. He most likely killed the GPU with a mistake in his shunt mod. This is what happens to people who label themselves experts.

4 points

-

We opened this forum so that it could be a soft landing pad where those who still surfed those forums could keep mingling. Personally, I wanted to keep in contact with those of like mind in hobbies that I also enjoy. Forums have become fragmented over time, especially for laptops, as manufacturers become more and more locked down in both software and hardware. The identity of the forum may change over time as a result. That being said, we are able to keep the forum relatively light in cost, so we are comfortable keeping it alive effectively forever. You can always PM me if you have questions or concerns; I do try to be as fair as possible in moderating.

4 points

-

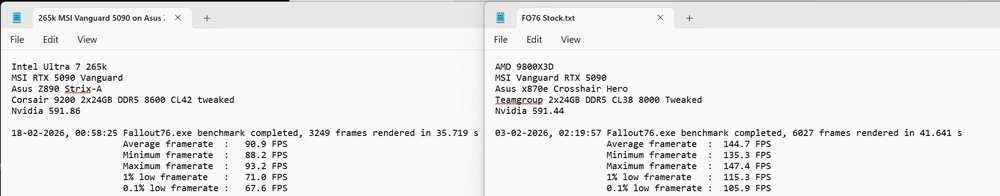

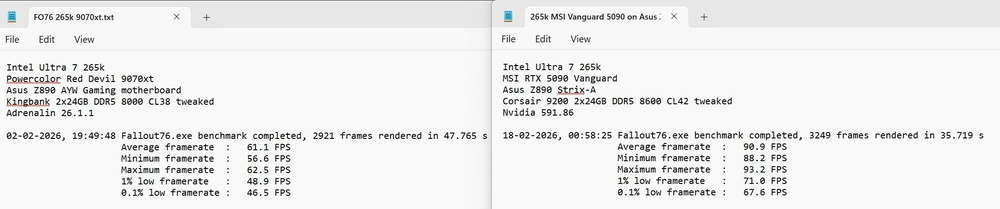

Finally got around to the ole switcheroo for some FO76 and WoW testing.... Welp, my 265k adventures have come to an end for potential on my main rig, methinks. The 9800X3D is just too beastly in Fallout 76. The 9070xt is just too weak on top of that, but that was to be expected against a card that costs 3-6x more; the 9070xt is really a 5070ti/5080 contender depending on games played. Good news is, for WoW at 4k? A tuned 265k is a legit contender. It was holding its own in Hogwarts too. For raids, I found it had almost the same smoothness and control of the lows as the SP109 14900KS in the wife's system. If all I played was WoW, I'd recommend a nice, tuned, cheap 265k on the cheapest board you could find. The problem, of course, is securing 8000+ DDR5 in the current market. Unfortunately, I play equal parts Fallout76 and WoW, both at 4k, as my two main games these days and have for the last 3+ years, and the X3D just massacres all of Intel's offerings in that game, as it always has since I started testing with the 7800X3D several years ago vs the 12900k, 13900KS and eventually the 14900KS. I did manage to eke out a little bit more performance on the Strix with 8600 tuned vs 8000 tuned, with the better 265k being able to tune up quite nicely, but it still couldn't hang. That's about right, considering it couldn't hang even with the 14900k still churning along at 59/45/50 atm. The Red Devil 9070xt was clocking over 3300 at stock in Fallout76, much better than my Gigabyte 9070xt in the wife's system. 265k vs 9800X3D with MSI Vanguard 5090: 9070xt vs 5090 on 9800X3D: 9070xt vs 5090 on 265k:

-----------------------------------------------------------------------------

Up next is a breakdown and cleaning of my main rig, plus installing the MSI AI1600T PSU along with the WV2Pro and DDR5 cooling mod. I plan on holding onto my Vengeance 9200 sticks in anticipation of Nova.....

4 points

-

I still wouldn't run the 2001W XOC as a D2D vs the Matrix vBIOS. I guess if you don't really have a choice (unless you want to whip out a soldering iron to get it working with the Matrix), you use what you can use, but for normal cooling, it is the Matrix 800W for me, or potentially the 1000W Lightning.

4 points

-

The main problem with the (wrong) 2500W XOC version is the default voltage of 1.2v. If you forget to use the curve and reduce voltage, you risk killing the card in longer benchmarks. Not as much with the 2001W XOC, which defaults to 1.150v. And where do you get the MSI XOC tool the chosen ones got for the Lightning? Not sure that one has leaked. +50mV is huge when an already-high 1.150v is at the border for custom cooling with +1000W power limits. I remember using the Galax XOC vBIOS I have for the 4090 HOF. Pumping in +50mV with the Galax tool above the default max voltage can kill the card without proper cooling (preferably a chiller with sub-zero temps).

4 points

-

Speaking of, any indications that the Lightning vBIOS has leaked yet? 😛 I'm guessing they don't have any reason to upgrade to quad channel anytime soon, what with DDR5 (and likely also DDR6) doubling the number of internal "channels" with each new gen. BTW, resolved the bad paste job: it turned out that the Thermal Grizzly AM5 High Performance Heatspreader was the culprit, or rather its missing Z height vs the stock heatspreader. The Arctic LF3 coldplate doesn't make proper contact with it, even though I'm using the TG shortened offset mounting kit. I've tried twice now with crappy results. So it's back to the regular AM5 retention mechanism incl. the stock heatspreader, but at least now I've got a nice clean delid with TG Conductonaut Extreme on it. Done with disassembling my hardware for now, back to RAM tuning! 😄 On another note: received an email from TG; seems like a new firmware update is out for the WV Pro II, including the first official version of the accompanying software, nice 🙂

4 points

-

Totally absurd and such low value. Sad. It is nice that not all tech is crazy overpriced. My wife and I last purchased phones 5 years ago; we have been using our OnePlus 8T phones since early 2021. I finally decided to upgrade them, and our new Google Pixel 10 Pro XL phones are out for delivery today. The price for the new Pixels is essentially the same as what I paid for the new OnePlus phones (within $25). I chose the Pixel for both of us not only because of its outstanding quality, but also because I am going to be using GrapheneOS rather than stock Android. Time to give Google the same treatment as Micro$lop.

4 points

-

OK. Enough of the silly gamerboy normie crap. Back to speed trumps everything.

4 points

-

Ha, not with me they didn't! Upgraded all the way from a 6700K to a 9900K in my Clevo machine 😄 SuckaZ! And big thx to bro @Prema for that one 😛

4 points

-

I'm 3rd in Time Spy with my CPU/GPU combo... and even against 7950X + 9060 XT I would be 3rd too, and against 9950X + 9060 XT I would be 8th 😄 No new CPU needed when you have DDR4 with B-die, totally optimized 🤤 And I just noticed this score was with my old B350 board; I should do a run with the new B550... AMD Radeon RX 9060 XT 16 GB graphics card benchmark result - AMD Ryzen 9 5950X, ASRock AB350 Gaming-ITX/ac

4 points

-

Oh, it's just the waterblock (EK-WB), not the GPU proper. If someone listed a 3090Ti for 70 USD, I would assume it's a scam, be it from the seller's end or my own lol

4 points

-

Which 9070 XT do you have, Brother @Rage Set? (I think you mentioned it before, but I do not remember.) I got bored and decided to mess with slower memory speeds to see how fast I could make them go. This is stable, so I will see how low I can get tCL before it unravels. 6400 1:1 feels snappy even though it is a bit slower on read/write/copy.

4 points

-

My dudes, we can consider ourselves lucky to have covered all our bases with regard to hardware. Our 4090s/5090s will stay at the top of the pack until 2028; let's just hope they don't go up in flames / melt until then 🤣 Boy, am I glad that I got my 32TB of PCIe 4 M.2 SSDs plus took care of the RAM binning with 10+ kits back in the summer of last year. Prices were at rock bottom then ("good old times"). And even though I overpaid for my 5090 at +25% above minimum pricing (3300€ vs. 2650€ incl. 19% VAT and shipping), a whopping two thirds of it (2100€) was made up by the sale price of the 4090 😅 Besides, even when the Suprim currently IS available, it's offered at 4000+€, absolutely insane... Long story short: I guess compared to other unlucky users who are currently planning on building a rig, we're pretty well off, at least for the time being... Always good to count and be generally aware of your blessings 🫠 Mixed news on the RAM tuning front: I still cannot reach anything above 7600 stable, but at 7600 I'm now reaching way, way tighter timings than before, actually the best I've had thus far overall on this mobo. Final results pending; as you all know, RAM tuning takes quite a while...

4 points

-

Cracks in Nvidia's armor starting to emerge.... "Exclusive: OpenAI is unsatisfied with some Nvidia chips and looking for alternatives, sources say" OpenAI wants to move to SRAM-based inference (like Apple uses, FYI, for their M architecture) and is exploring alternatives... Google is already turning inward, using their own custom-designed chips.... AMD is starting to chip away at Nvidia's AI market share.... Microsoft is questioning the profitability of AI..... I can smell that change in the air, ever so slowly....

4 points

-

5090s back in stock, shipped and sold by Newegg, reflecting the beginning of the new pricing insanity incoming when they are stocked..... In their defense, Walmart is selling the PNY EPIC 5090, shipped and sold by Walmart, for $4299.99, so this is the way things are going for 5090s..... The MSI Liquid 5090 is also listed on MSI's site at $3699.99....

4 points

-

Sorry to hear about the adverse weather. Your temperatures look to be about the same as Brother @Rage Set's, and a significant portion of the Eastern US (even the Deep South) is experiencing brutal and abnormal ICE and snow, downed power lines, etc. I suspect that Brothers @tps3443 and @electrosoft are also getting hammered pretty severely right now. The Arizona summer heat can be deadly, but Arizona (and most other states in the West) very seldom has severe or catastrophic weather events. Most of the horrible and catastrophic weather events occur in the Central and Eastern states. Brother @Raiderman and I do not see very many temper tantrums from Mother Nature.

4 points

-

I'm going to try a bit more tonight. Luckily, I can unplug a few cables and pull the whole thing out, but it's still a pain to work in due to the water loop and how it's routed. Problem is, work is rampant due to the winter storm, so I just don't have the time to troubleshoot. There was a local listing for an X570 Strix, but it's 3 weeks old, so I don't expect to hear back. I think for the time being I'll set up the Z490 system just to prevent myself from making an impulse buy; I can still play with Lossless Scaling with the 3090ti + 5700XT for the interim. It also gives me a chance to play with my Pixel 10 Pro and see how well it handles desktop mode on Android, since Unraid handles most of the grunt work. But that's all just me trying to make lemonade.

4 points

-

4 points

-

Good on you, brother! Nothing is as frustrating as "comfortably getting by." Just enough where life is comfortable, but not enough if something were to go sideways or you suddenly wanted to take a break or do something else but can't really afford to do that....cruise control has its pros and cons. Almost 2 out of every 3 people live paycheck to paycheck in America now. It's a somber reality. Here's wishing you all the luck and success in the world, man....now go get it. 🙂 My dad used to tell us all the time, "You know the people who both lose and win the most at life still equally die, right? Immortality is not in the prize pool."

4 points

-

Someone used the wrong screw and drilled through the card @Mr. Fox lol, what the heck. People are something else, man. I’ve had mine apart like 4 times now. You would have none of these issues, though. That’s not a screw that would be included with it either. All the screws in that section look nothing like that, and they all match.

4 points

-

I feel like if cable makers really didn't cut corners, it would at least put a nice dent in many of these problems. Watching that brand new Corsair cable be absolute trash, from its loose fit to its readings, was alarming. It seems like Cablemod learned their lesson that first time around with all the issues with 4090 cards and really came back with a high-quality cable with a snug fit and good amperage distribution.

Trash connector that needs perfect conditions to properly thrive.

Trash AIBs shaving pennies on quality adapters, even to their own detriment.

Trash end users treating it like it is as robust as 8-pin and twisting and pulling it with reckless disregard....

I DO think this connector is here to stay, but I do feel like with a few more refinements it will be declared "safe" lol.

I tried to avoid that club! 🤣 lol, well, I'm going to let you know it doesn't get any better in your 50s! 🤣 I turned the corner today and my fever finally broke after 4 days, but I know this wet cough and general malaise are going to be with me for at least another week. Speedy recovery, brother!

--------------------------------

@Mr. Fox It's like we talked it up..... 3090ti vs 5090. The 5090 is two generations newer and fully 66 to 133%+ faster than a 3090ti, with a lot of the low end being down to an inability to fully utilize the 5090 even at 4k....it would be even worse with a 3090. The 5090 is just a beast. I am glad we were able to get ours at a somewhat sane (in the realm of 5090s) price...

---------------------------------

Zotac is first out of the gate letting us know massive price hikes are coming, specifically to the 5060 and 5090, and kind of throwing Nvidia under the bus, but they are the reason, so.....yeah.....

4 points

This leaderboard is set to Edmonton/GMT-07:00
