Posts: 466

Days Won: 4

Everything posted by Clamibot

-

I have that same Crucial Ballistix kit. It works great in my X170SM-G! I haven't tried doing any overclocking with it yet, I'm just using the XMP profile.

-

Ray Tracing on M18xR2 - RTX 3000 MXM Upgrade!

Clamibot replied to ssj92's topic in Alienware 18 and M18x

This is still a good thread to post your benchmark results on and ask questions, but an even better one would be this thread since it's far more active: -

Definitely check the pins, but it could also be due to mounting pressure. You have to get it just right, or certain things will go wonky with the system. If I tighten the CPU screws down too much on my X170, the system will no longer boot.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

AMD cards are good if all you're after is pure rasterization performance and you don't care about ray tracing, frame generation, or deep learning driven frame upscaling. They're also great if the particular suite of games you play has a performance advantage on AMD cards. About half my library does, so it was worth it for me to get my 6950 XT when I did. If the 7900 XTX were a few hundred dollars cheaper than it is now, I would've gotten that instead. Another upside is they use 8 pin power connectors, so no need to worry about your power connector melting. The caveats are various driver issues which still are not freaking fixed. Fortunately, only one of those affects me, and that's with anything VR related. Do not get an AMD card for VR unless your VR headset plugs in directly through a USB connection and DisplayPort. I do VR development, so I have to use an Oculus Rift with my desktop. If I use a Quest headset through Quest Link (wired or wireless), I get a black screen within 5-10 minutes and my desktop shuts off. If the only thing you do is play games with the card and your library has a performance advantage on AMD cards, then AMD graphics cards are for you. Edit: I forgot, there's one more caveat that affects me. I can't do video calls on my desktop either. The drivers irrecoverably crash within 5-10 minutes, so I get that black screen and my computer shuts off.

Yeah that REV-9 laptop is super badass. I would really like to see a complete disassembly of that laptop.

-

I'd absolutely love to have one of those as well! That'd be the one laptop that would get me back into laptops. Clevo's newest X270 and X370 models are abominations and are completely undeserving of the Xx70 moniker. I absolutely love my X170 and wish it had a proper successor.

-

That new MSI Titan laptop looks like a good laptop, but it's strange that they capped the combined power draw at 270 watts when they supply a 400 watt power supply with it. That doesn't make sense. On the other hand, if that power supply uses the same connector as the X170's does, that would be awesome as we could make use of that.

-

That's really bad since the X170 can cool a 480 watt combined load indefinitely without thermal throttling. It pains me to see the build quality of laptops going down the toilet. My X170 will definitely be my last laptop unless something good comes out by the time I eventually need a replacement. Fortunately, the loss of laptops from my arsenal of computers isn't much of an issue since my needs and wants are better served by a desktop + PC gaming handheld setup these days. It would be nice to keep having laptops, but again, not a requirement for me anymore.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Looks like this question hasn't been answered yet, and I'm qualified to answer as I own this laptop. I have never had this problem on the CPU side, but I had something similar happen on the GPU side when I first upgraded my graphics card from the original GTX 860M to a GTX 1060. From what I can gather, the laptop allocates power to its different components, and that allocation scales with the maximum output of your power supply. With my original 180 watt PSU, the GTX 1060 was clock limited and I could never get a power draw higher than 80 watts on the GPU. Once I got the 240 watt PSU, I was able to get the full 120 watt maximum power draw out of the GPU. So my guess is something similar may be happening for you on the CPU side. With a 180 watt power supply, you get 80 watts for the GPU, maybe 75 for the CPU (which may be why you're hitting that limit), and 25 watts for everything else. What PSU are you using with your Ranger? I use the 240 watt PSU and have been able to overclock my 4930MX to 4.5 GHz. It requires a significant overvolt (I think 75 mV, but it may have been more; I'll need to try it again as I haven't done that for a while), and the CPU drew 125 watts in the process when running Cinebench R15. I wasn't able to sustain that speed for very long before thermal throttling, but the power delivery is capable of the task.
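As a rough sketch of the guess above, here is the budget arithmetic written out. The split is an estimate based on observed limits, not documented firmware behavior, and the function name `remaining_budget` is hypothetical and only there to illustrate the sums.

```python
# Illustration only: the per-component caps are the poster's observations
# and guesses, not values read from the laptop's firmware.

def remaining_budget(psu_watts, gpu_watts, cpu_watts):
    """Return the watts left for everything else after GPU and CPU caps."""
    return psu_watts - gpu_watts - cpu_watts

# 180 W PSU: 80 W GPU cap (observed), 75 W CPU cap (guessed)
rest_180 = remaining_budget(180, 80, 75)

# 240 W PSU: 120 W GPU cap (observed); the CPU cap is unknown, so pass 0
# to see how much is left for the CPU plus everything else combined.
cpu_and_rest_240 = remaining_budget(240, 120, 0)

print(rest_180, cpu_and_rest_240)
```

If the allocation really does scale with the PSU rating, the 240 watt supply leaves noticeably more headroom for the CPU, which would fit with the 125 watt CPU draw mentioned above.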

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

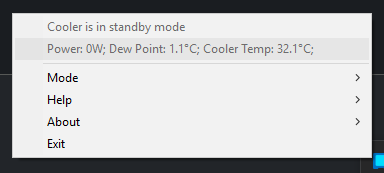

Today is a good day in Dallas to do some benchmarking with a sub ambient cooler. We got what looks like a quarter inch of snow and it's super cold outside. Also no wonder the air feels really dry.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Has anyone else encountered an issue where touch input on a touchscreen won't register if the screen is running below its native refresh rate? If so, how did you resolve it? The vast number of weirdo issues that pop up with hardware and software never ceases to amaze me. As a software engineer and game dev, I see these things happen all the time, and I expect them to pop up very frequently.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

@Mr. Fox Speaking of legal requirements, I think all motherboards present in all devices should be required by law to have a dual BIOS. Ones that don't should never be sold. I had a bit of a scare today when flashing a BIOS update on my Lenovo Legion Go. The device stupidly decided to shut off in the middle of the update, and the power button turned red. I got freaked out for a second but remembered I read somewhere before I got the device that it had a dual BIOS, so I powered it on and off a few times until something showed on the screen again, and then the update restarted and completed successfully the second time around. This is the first case of me ever seeing a dual BIOS in a handheld, which makes it pretty special in that regard. Had it been something like the Steam Deck or ROG Ally, I'd have been screwed. Good thing Lenovo had the foresight to include a dual BIOS in their handheld.

My 10900K in my X170 can do 5.2 GHz all core while consuming around 215-220 watts of power. This is specifically when playing Jedi Fallen Order, a pretty CPU heavy game. Cinebench R15 runs make that spike up to around 245-250 watts. For giggles, I did try to see exactly how far I could push it, and I can manage 5.3 GHz all core in Cinebench R15 with the power draw spiking to 275 watts at the maximum. The laptop's cooling system on the CPU side can't handle this for very long and therefore I have never been able to complete a full run at that speed. Pushing it any higher results in a crash. It won't go any higher, regardless of how low I get the temperature. The good news is the power delivery on this laptop is very robust, so if you can manage to cool the CPU and have an excellent sample, you can push it very far in this laptop.

I also acquired an even better sample from @Mr. Fox a while back that can do above 5.5 GHz all core as long as you keep the temperature below 60°C. Go any higher, and the system will crash regardless of how high you push the voltage. I did drop this sample in my X170 to see what it could do in a laptop, and that one did 5.4 GHz all core in Jedi Fallen Order at a slightly higher power draw (225 watts) than my first 10900K at 5.2 GHz all core (215-220 watts) in game.

I use this super sample in my desktop now with the Cooler Master MasterLiquid ML360 Sub-Zero cooler. The highest speed I've been able to achieve on this CPU so far with the TEC cooler on full blast is 5.7 GHz on 4 cores, and 5.6 GHz all core during Cinebench R15 runs. I don't remember what the power draw was at that speed, so I need to go measure that again. I just remember overwhelming my cooler after a while, but these speeds are definitely sustainable indefinitely for games. These are the results you can expect with super samples.

-

Yup. Mine has liquid metal plus the zTecpc mods, so it can cool a little over 200W sustained without the CPU thermal throttling. I definitely need that as pushing the CPU to the max + DXVK Async is the only way I can sustain 144 FPS in Jedi Fallen Order. This laptop is just awesome and it's a shame nobody has made a similar one ever since.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

My recommendation to you if you still want a portable form factor in something that resembles a laptop is to look into military style or industrial portable PC cases. You can get something that essentially looks like a laptop from the 1990s but can house desktop parts inside. They're expensive cases but are really cool. One was featured in a recent LinusTechTips video where they built their own laptop using such a case. This was the case they used: https://www.alibaba.com/product-detail/New-design-16-1-Inch-LCD_1600578225544.html Looks like it may even have enough space to squeeze an ATX motherboard into it based on the case dimensions. Note that you'll probably need to use a Flex ATX power supply with it. However, I wouldn't be surprised if someone managed to cram an SFX power supply into it. Have fun building your own *forever* upgradeable laptop! Edit: I did some more digging and the case officially fits up to a Micro ATX motherboard.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Happy new year everyone! I hope you all have an awesome fun filled day!

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

My real life usage experience so far is that the Optane SSD has done the following for me over a standard SSD:

- Much faster hibernation
- Much faster wake from hibernation
- Much faster loading times in open world games (Borderlands 3 loads 3X faster off the Optane 905P vs my Samsung 970 Evo Plus)
- Faster copying of large batches of small files
- Faster load times for Unity projects
- Faster webpage loading times
- Faster code compilation
- Faster game builds
- Slightly faster boot times
- Slightly more responsive OS

Optane SSDs greatly benefit games that do a lot of asset streaming because of the mad fast random read speeds. Now that's not the reason I got it, but I might as well put some games on my Optane SSD since I use my computers for both work and play. My workflow has also had some of its bottlenecks alleviated, so I can now spend more time programming rather than waiting. I do use 2 computers while working, but that incurs its own latency penalty when I need to sync the code I wrote between them.

In short, Optane is definitely worth it for a work computer or if you play a lot of open world games. I strongly recommend an Optane SSD to anyone who does software development like me, works with databases, or mainly plays open world games. I never want to go back to a standard SSD for work. It looks like it will be quite a long while before Optane SSDs are beaten in random reads, and these things are built to last! They can last a lifetime!

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Turns out the x4 slot was also running in x1 mode since my 4th M.2 slot shares bandwidth with the 4th PCIe slot😐 I disabled that M.2 slot and now have to move the drive that was installed there. My drive performance increased significantly after that change since now the PCIe slot is actually running in x4 mode. Apparently M.2 slots 2 and 3 will be disabled if I use SATA ports 5 and 6 as well. Some motherboards have wacky designs. This would be the last thing I expected out of my MSI Unify Z590. If they're gonna put the ports there, they should all be usable at once.

Edit: Thanks for the help everyone. While you guys didn't directly answer my question about the drivers, you gave me a strong push in the right direction and I was able to figure things out. If anyone else encounters suboptimal Optane SSD performance in the future, this is the fix:

1. Make sure your Optane SSD is connected directly to the CPU, not through the chipset. Check your motherboard manual to see which PCIe slots are connected through the chipset and which ones are connected directly to the CPU.
2. Make sure the motherboard doesn't do something wacky like limit the bandwidth (like in my case) because the slot shares bandwidth with another slot.
3. Install the Optane drivers last and restart your computer.

If your x4 slot is running in 4 lane mode, is connected directly to the CPU, and you have the Optane drivers installed, this will maximize the performance of your Optane SSD.
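For anyone who wants to check the lane count in software, here is a minimal sketch. It assumes a Linux system, where the kernel exposes `current_link_width` and `max_link_width` under `/sys/bus/pci/devices/<address>/`; it does not apply to Windows, which is what the posts above appear to be using. The function names `link_widths` and `is_link_degraded` are made up for illustration.

```python
from pathlib import Path

def link_widths(device_dir):
    """Read (current, maximum) PCIe lane counts from a sysfs device directory.

    device_dir is a path such as /sys/bus/pci/devices/<address>, where
    <address> is whatever PCI address your own drive shows up at.
    """
    d = Path(device_dir)
    current = int((d / "current_link_width").read_text().strip())
    maximum = int((d / "max_link_width").read_text().strip())
    return current, maximum

def is_link_degraded(device_dir):
    """True if the device negotiated fewer lanes than it is capable of."""
    current, maximum = link_widths(device_dir)
    return current < maximum
```

An x4 drive that reports a current width of 1 would match the x1 situation described above. This only covers the lane count; whether the slot hangs off the CPU or the chipset still has to come from the motherboard manual.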

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

The 1.5 TB 905P model I got cost $400. It's really expensive😅 I wanted to see how much of an impact it would have on my workflow since, from what I've seen, it should theoretically speed it up significantly. I spend a significant part of my workdays compiling code and waiting on builds to be made so I can test the games I develop. I wanted more time to actually do software engineering in a day and thus increase my productivity. I'm using the U.2 version as that was the cheapest. I have it connected to my PC through a Startech x4 U.2 to PCIe adapter. The AIC versions of the 905P are a lot more expensive, which is really silly. What is this Intel Optane Panel you speak of? Edit: Welp, I found out why the drive isn't performing as expected. The x4 PCIe slot on my motherboard is connected to the chipset, not directly to the CPU. I need to go set it to CPU mode first🤣 Ohh I'm a doofus.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

I'm using the 905P as an ordinary storage device. I cloned my OS disk to it. The immediate benefits that were apparent to me were faster load times for multiple programs at once, faster webpage loading, faster hibernation and wake from hibernation, and faster build times for games with Unity. I don't remember there being a BIOS option pertaining to Optane. I'll have to dig around and see if I find one.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

Hey guys, I recently got ahold of a 1.5 TB Optane 905P SSD for Christmas and have been loving it so far, except for one really strange issue I've been having. The drive is very fast in and of itself, but the dedicated Optane drivers significantly decrease performance compared to the default Windows storage device drivers (my game build times INCREASE by about 27.5%). According to individuals on the Level1Techs forums (which is where I got the driver from), the driver is supposed to significantly increase performance. For anyone on this forum that has experience with Optane, is there a specific driver version I should be using with the 905P to maximize its performance?
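For comparing driver setups, a figure like the one above can be computed from a few timed builds per configuration. This is only a sketch: the function name `percent_change` is hypothetical, and the timings in the usage note are made-up placeholders, not measurements from the post.

```python
from statistics import mean

def percent_change(baseline_seconds, new_seconds):
    """Percent change in mean build time relative to the baseline runs.

    Positive means the new configuration is slower, negative means faster.
    """
    base = mean(baseline_seconds)
    return (mean(new_seconds) - base) / base * 100.0
```

For example, `percent_change([200, 200], [255, 255])` compares two placeholder runs on the default driver against two on the Optane driver. Averaging several runs per configuration helps smooth out caching and background noise.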

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

MERRY CHRISTMAS EVERYONE!!! I hope you all have a stupendous day with your families and friends! This is my personal favorite holiday of the year. It truly is full of cheer.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

You're looking at prebuilts, right? In that case, you're gonna be paying a premium over building one yourself. A prebuilt desktop should still be less money than a laptop with the same processing power though. Maybe it's a pricing glitch in your region due to market factors and hopefully things return to normal soon.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

That's an anomaly, as laptops are typically more expensive than desktops for the same processing power. However, it also doesn't help that CPUs in different devices are typically misleadingly named. For example, a laptop i9 is equivalent to a desktop i7, and an ultrabook i9 is equivalent to a desktop i5 running at low power. A tablet i9 is equivalent to a desktop i3, and thus is wholly undeserving of the i9 moniker. Therefore, it can seem you're getting more processing power than you actually are from the laptop you buy.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Clamibot replied to Mr. Fox's topic in Desktop Hardware

I've seen some of those displays and they look absolutely gorgeous. It's really a shame that the laptops that are equipped with them are garbage, but my goodness the screens look amazing! I would like an OLED screen for my custom laptop build, but all portable OLED screens are currently very expensive. Perhaps they will have dropped in price by quite a bit once I actually get around to starting the build.