Everything posted by Aaron44126

-

I can say that my Precision 7560 is also domain-joined and I haven't experienced this issue with the latest Windows patch. ...Have you tried just disabling all of the Windows Hello stuff and then turning it on again? (I.e. remove the PIN and then set up a new one.) I figure it has to "cache" your AD credentials somehow (password hash gets sent in to authenticate you when you log in with your fingerprint or PIN) so maybe something got messed up with that.

-

I was going to check but it looks like that video has now been marked private... but you are right, I'd expect a touch/OLED to be glossy. That said, I have seen matte LCD touchscreens before, and I've never seen an OLED panel in a laptop at all (just in phones which are obviously glossy), so my expectations might be off.

Thanks. I got my order in on the first day, but I know @operator ordered before me (from Europe) and also has a late August date. We were previously speculating that maybe 7770 systems are a bit more ready to go than 7670, but I haven't seen any other early 7770's either. So, I'm a little bit worried that my estimate is inappropriately optimistic because it doesn't line up with everyone else's. But it did go to production status within 72 hours, and based on past experience, that means it should be here within two weeks or so.

- 973 replies

-

- dell precision 7770

- dell precision 7670

- (and 6 more)

-


Precision M4600 Owners Thread

Aaron44126 replied to Hertzian56's topic in Pro Max & Precision Mobile Workstation

Yes, all of these systems had a base option that was a plain DVD-ROM drive. You can install another drive (I've done it on my M6700). It needs to be an "ultra slim" drive; I can't remember the exact size but it's around 9.5mm or 9.7mm. The 12mm ones are too tall. You'll want one with a flat faceplate/bezel (some might have one that is rounded at the bottom to match the style of the laptop it came from) but it's actually pretty easy to snap the faceplate off and replace it too.

-

Curious why you guys selected A5500 over GeForce RTX 3080 Ti? GeForce will offer the same performance for cheaper in many cases. Do you use ISV/professional applications? (If so, it totally makes sense.) Did anyone else order from U.S. territory? I'm also wondering why I'm the only one with an early August ETA. I was looking back at the Precision 7X30 pre-release thread yesterday. Seems like a few early purchasers all got their systems around the same few days despite being in different countries. https://www.nbrchive.net/forum.notebookreview.com/threads/precision-7730-5530-7530-coffee-lake-pre-release.809278/page-30.html#post-10750185 I remember deliveries of last year's 7X60 systems being much more staggered out even among early purchasers. (Figured this was due to supply chain / global shipping issues, which are still a problem now but should be better than last year...)


-

I can confirm that the fingerprint scanner is working as expected for me after the latest Windows Update patch from Tuesday. Two systems: Precision M6700 (FIPS) and Precision 7560 (non-FIPS). Both running Windows 10 Enterprise LTSC 2021 (19044.1826). FIPS is a separate gold square in the palmrest on all models. Non-FIPS will either be a "swipe" style reader in the palmrest, or integrated into the power button. ...Is your Precision system attached to an Active Directory domain? Do you use a SmartCard? (If not, I do not think that the Microsoft article really applies.) It looks like the English language for this statement is "This option is currently unavailable." This might help you with Googling for a solution. I saw some articles like this which discuss resetting various things, checking group policy, etc. https://supertechman.com.au/how-to-fix-windows-hello-this-option-is-currently-unavailable/

-

If you want to use single-cable docking (no AC adapter connected to the system) — WD19DC / WD19DCS is your only real choice. (Note, 7770 might take a performance hit with only 210W of power available instead of 240W.) If you are willing to use dock cable + AC adapter plugged directly into the system — pick any dock you want (even third-party).


-

More chatter about "Windows 12" dropping in 2024, restarting the three-year cycle between major Windows releases. https://www.neowin.net/news/windows-12-microsoft-goes-back-to-releasing-new-windows-every-three-years/ https://www.zdnet.com/article/microsoft-said-to-be-ready-to-shake-up-the-windows-update-schedule-again/ (Will Windows "X" Enterprise LTSC 2024 be based on Windows 11, or Windows 12? Wouldn't be surprised if they don't even decide that internally until pretty late.)

-

RAID 1 is mirroring so it really only makes sense to do with two drives. If you want RAID 0 (striping / "one big drive") then you should be able to do it with three drives. Easy enough to set up RAID arrays after you receive the system. Intel's configurator is much more flexible than what you see playing with the Dell web site...
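To put rough numbers on the mirroring vs. striping difference, here is a minimal sketch. It is just generic RAID arithmetic, not anything taken from Intel's configurator; the function name `usable_capacity_gb` and the 1024 GB drive sizes are made up for illustration, and it ignores metadata overhead.

```python
def usable_capacity_gb(level, drive_sizes_gb):
    """Approximate usable capacity of a simple array (illustrative only)."""
    if len(drive_sizes_gb) < 2:
        raise ValueError("an array needs at least two drives")
    # Each member contributes only as much space as the smallest drive.
    smallest = min(drive_sizes_gb)
    if level == "RAID0":
        # Striping: data is spread across all members, so capacities add up.
        return smallest * len(drive_sizes_gb)
    if level == "RAID1":
        # Mirroring: every member holds the same data, so you get one drive's worth.
        return smallest
    raise ValueError(f"unsupported level: {level}")


# Hypothetical 1024 GB drives.
print(usable_capacity_gb("RAID0", [1024, 1024, 1024]))  # 3072
print(usable_capacity_gb("RAID1", [1024, 1024]))        # 1024
```

The trade-off is the usual one: RAID 0 gives you all of the space but no redundancy (lose one drive and the array is gone), while RAID 1 gives you one drive's worth of space with a full copy on the other.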


-

BIOS update 1.3.1 has been re-posted. It's not the same file posted previously; this one has a signature date of July 12. https://www.dell.com/support/home/en-us/drivers/driversdetails?driverid=16RVV


-

BIOS update 1.13.0.
- Fixed the issue where the system cannot detect the dock when you disable the External USB Port option in the BIOS setup.
- Fixed the issue where LAN port on the dock does not work when you connect a Thunderbolt 3 hard drive. This issue occurs when you disable the External USB Port option in the BIOS setup.

https://www.dell.com/support/home/en-us/drivers/driversdetails?driverid=TMMTG

-

OK, so they might be telling you when it is on the way from the China factory to a local shipping depot, whereas in my case that time will still be covered by "In production". ...I suspect machines built in China and going to the U.S. go pretty quickly via cargo plane (they probably wouldn't be able to meet their estimated arrival if they went by sea) but maybe that is not the case for all destinations... 🤔


-

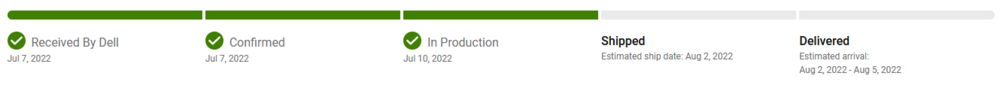

I'm a little bit curious why yours shows six segments on the order status page, and mine shows five. Do they have an extra status for some non-U.S. territories? The statuses that show on mine are:
- Order received by Dell
- Confirmed
- In production
- Shipped
- Delivered

(You should be able to see them all listed out if you view the same page in a desktop browser.)

In this case, "In production" also covers the time after the build is complete, when it is still making its way from the factory in China to a local shipping depot. They usually won't mark it "shipped" until they have a local tracking number for it. (Sometimes they ship directly from China via UPS or something, and in that case it will be marked shipped and you'll see progress from China when you track it.)

Holding out hope that it will arrive a bit early, before the end of July. Precision 7560's ordered at launch last year shipped after being in "in production" status for 15 days (and that was when global shipping lanes were more jammed up than now). I also ordered a Precision 7530 at launch in 2018 and it was received 20 days after launch. (I can't pull up the history on that one to see when it went from "confirmed" to "production" to "shipped".) This 7770 has second-day shipping so it won't be long from "shipped" to receipt.


-

Well, the Precision 7560's that we got last year came in individual boring plain brown cardboard shipping boxes, so if they really do have "slick" black boxes designed specifically for these systems (like they also do for XPS / Precision 5000), that's sort of cool. ...Not that it really matters that much what the box looks like, once you're past the first few minutes of having the system. [Edit] Noticed that they have a proper unboxing of the Precision 5470 featuring @Dell-Mano_G... They also have some for the new Latitude systems and it seems like they're using the same design ideas across the board.


-

Use the small business sales phone number. Or, just use the online chat (pick business sales). Click the blue "Contact us" button at the bottom right of the product pages to kick off the chat. (Even though it says 8am-1pm for the hours there, they've had someone available at all times that I have checked including during the evening.) I ended up going through a chat rep to place my initial order (from June that was cancelled). You can just tell them what model you're interested in and then go through the specs/options that you want, and they'll generate a quote to email over to you. You can finish the order by pressing the button at the top of the quote. Check the quote carefully before ordering (be it phone rep or chat rep) and make sure that everything is exactly right. Smaller things like whether or not SSD door is included, fingerprint reader, backlit keyboard, and so forth are things that they tend to get wrong for me...


-

See the PC World article if you haven't looked at it yet. The author was able to interview Tom Schnell, who is one of the key players at Dell in creating the CAMM "standard", and it seems that there was also at least some exchange with @Dell-Mano_G (Mano Gialusis). https://www.pcworld.com/article/693366/dell-defends-its-controversial-new-laptop-memory.html It is noted that Dell is working to get the CAMM "standard" in front of JEDEC for blessing. If JEDEC approves it then Dell would have to offer it to other laptop/memory makers under "reasonable and non-discriminatory" licensing terms. It seems like something has to be done about SODIMM, which will become more and more difficult to work with as DDR5 matures and speeds ramp up. There's also always a chance that what becomes the final standard is tweaked a bit from what we are seeing in the Precision 7X70 systems, so these modules and future modules might not be cross-compatible (assuming that CAMM becomes a JEDEC standard at all).


-

Not sure where to find the GeForce part number. On the quote, it says 490-BHOM; I think that is an order/item SKU code and not the actual part number. Maybe I'll be able to find the part number after the service tag is issued. I can say that I've had multiple engagements with sales reps and they've never had an issue "finding" the option to add to a quote. If you want to use the part number to order the GPU card separately from the system, you can try, but Dell doesn't allow that in most territories.

I ordered with Windows 11. I'm planning to use the stock Windows 11 install only for review benchmarks. I will use my own Windows 10 LTSC license/install for general use. (I have some major issues with Windows 11 that I have discussed before, in this thread and others. There's a link in my signature.) I'm planning a triple-boot setup with those two, plus Linux, but I will be spending almost all of my time in Windows 10 after getting the review out.

No SSD door for me. I don't see any practical need for it, I prefer the "look" of the system without it (...though you're not looking at the bottom of the system that often...), and I don't necessarily want it to be easier for someone to yank the drive from my system.

From pictures in the service manual, I don't think that Dell has done much to improve the SSD thermals. But, they did add DOO fans which should get some air circulating around inside of the chassis, and that could help reduce SSD temperatures a bit.


-

I did just pick up a "smart thermostat" a few weeks ago, which has the perk of being able to see the temperature and control the AC while away from the home. Not sure if that would really be helpful in your situation but maybe you could set it higher and then lower it while you are on the way home from work or something. I selected "Ecobee3 Lite" and I'm quite happy with it so far... Ecobee seems to be a leading brand and this was one of the few current models that I could find that has HomeKit support and does not have a microphone for Alexa / smart assistant support.

-

I've heard of this thing but haven't tried it. You can install it at your breaker box and it will help tell where your electricity is going. https://sense.com/

-

Well an easy one is, you could order through the web (not through a rep), and then come back next week and find the price has been lowered. Another one could be that Dell puts a new deal in place shortly after you order (i.e. "five years ProSupport for the price of four years" like they had in June) and you want to take advantage of that.


-

Seems like web prices have been updated, at least on the U.S. site. The system that I configured last week on launch day for $7,100 is now showing around $6,200. (i9/128GB RAM/A4500/512GB SSD/5 year ProSupport Plus/extras; same as the one that I bought through a rep, except with RTX A4500 instead of GeForce RTX 3080Ti.) If you buy a system and end up finding a better deal shortly afterwards, you can go to customer service and they should credit you the difference.


-

So, I'm going to be working on a "professional"-style review for the Precision 7770 after I get it. I've been planning on doing this for a while, even before NBR died. My intent is to publish it here on NotebookTalk.

I'm planning to have two parts to the review. The first part will follow a normal laptop/notebook review format and, in addition to some benchmarks, I'll be looking at things like available options, design & build, disk performance, fan behavior/noise, temperatures & max/average clock speeds, and so on. I'm picking up a "Kill-a-Watt" power meter, IR temperature sensor, and dB meter to help out with this. For the first part, I will specifically be running tests with the system in its stock/default configuration and not taking any special steps to push the performance up.

The second part may be published a little bit later and it will be more of a loosely structured grab bag where I take a look at things like the impact of Dell's thermal settings, Optimus on vs off, Windows 10 vs 11, dock behavior, undervolting CPU & GPU, what Linux support looks like, and whatever else I can think of.

In terms of benchmarks for the first part, I am looking at:

CPU
- Cinebench
- WPrime

GPU
- 3DMark (Fire Strike & Time Spy, and probably some others as well)
- Unigine Superposition
- GravityMark
- Final Fantasy XIV benchmark
- Shadow of the Tomb Raider benchmark

(Not apt to include SPECviewperf ... I'm getting a GeForce configuration, so performance in many of those tests will be bad compared to what you'd get with a pro-RTX GPU)

It's my first time attempting something like this so I'm looking for feedback as to what sorts of things you guys would like to see in a laptop review, if there is anything specific that you think I should take a look at or if there are any benchmarks that you would like to see run that are not included above. (The review will take some days/weeks to put together, so of course I will also be doing Q&A/AMA once the system comes in. I imagine that some other users receiving systems would be happy to participate in this as well.) Thanks!

-

There was the situation where it looked like 7770 would launch before 7670, a couple of weeks ago. They might have been more ready to go with that one.


-

Yes, the issue seems to be if you add a fingerprint reader, it forces you to add a smartcard reader, and that forces you to add the SSD door.
