Everything posted by electrosoft

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Astral installed.... This card is a CHONKER. Easily the largest GPU I've ever owned and it is HEAVY. I had to fish around for a sag device as I don't want to open up the other if I'm going to end up returning it. I just took out the card and the tentacle. Everything else is sealed and intact.

Absolutely beautiful card. I like the look of it just as much as the FE.

Initial WoW tests have it pulling ~537w and hitting 2857-2865mhz out of the box. Timespy has this thing pulling ~610w but temps are good. During the first test it was hitting 2900+ GPU out of the box and never went below 2857mhz on this dirty run after GPU install.

As expected, the fans are a big 'ole nothing burger. Either that or Asus has corrected them in the interim. I left it running playing WoW with audio muted (while testing for coil whine) and I sat there listening to them and just, "that's it?" Like I said, I've heard much louder and worse on many other cards. Is it there? Yes, but come on.....

Coil whine is on the same level as my MSI 4090 Liquid, which is super quiet. You need a silent room to hear it at all. With any level of audio it is non-existent. Not as quiet as my 5080 FE, which is basically silent, but as close as you can get.

Score another win for the EVGA 1600 P2.....

Preliminary quick tests in my "cap out" spot in WoW (gonna have to find a new spot now...boooo!):

- 5080 = 125fps GPU bound (99%) stock 2718mhz
- 4090 = 135fps GPU bound (99%) at OC 3135mhz
- 5090 = 184fps NOT GPU bound (92-95%) at stock 2857-2865mhz

Looks like the 9800X3D even at 4k Ultra RT at max has met its match.

Question now over the next week is.....is it worth it? -
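For anyone who wants the relative uplift rather than raw fps, here is a minimal sketch using only the three frame rates quoted in the post above. The helper name `uplift_pct` is made up for illustration. Keep in mind the 4090 figure was taken at an overclock and the 5090 was not GPU bound, so these percentages are a rough floor for that one test spot, not a general benchmark.

```python
# Frame rates quoted in the post above (WoW "cap out" spot, 4K Ultra RT).
fps = {"5080": 125, "4090": 135, "5090": 184}


def uplift_pct(new: float, old: float) -> float:
    """Percentage gain of `new` over `old` (hypothetical helper)."""
    return (new / old - 1.0) * 100.0


# Compare the 5090 against each of the other two cards.
for card in ("5080", "4090"):
    gain = uplift_pct(fps["5090"], fps[card])
    print(f"5090 vs {card}: +{gain:.1f}%")
```

On those numbers the 5090 lands at roughly +47% over the stock 5080 and +36% over the overclocked 4090.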

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Must MUST protect precious cargo on the way home!!!!! 🤣 In case anyone else wanted a GPU from my BB, here is their massive selection: **SCORE** -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I thought so too. Only thing I could see maybe doing is some thermal putty on the backplate for extra cooling but the overall look and everything is top notch. I initially thought the rubber gasket came with the astral but realized it did not. I wonder if it comes with the WB, but either way it is nice. One reason I was hesitant before pulling the trigger was the LM possibility. I'm sure Asus only hires the best bean counters 🤣 Not yet......but close. 🤑 What's the ETA on your Suprim? -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Spot on with that link to Reagan speaking about the pitfalls of tariffs and how to properly use them.

Look, the US has been here before. When we went to economic war with Japan in the late 80s/early 90s we were successful because it was not only us but other countries bringing balance to global trade and leveling out participation in the economy. Focusing on China only and making sure we have allies in the fight worldwide is the right approach if the goal is to thwart another country from achieving the economic dominance the US has enjoyed for decades. But this Liberation Day nonsense in tandem? No.

I will reiterate, I hope it succeeds, but all the signs are it is going to cause catastrophic damage and the vast majority will be a tax on citizens in the US and in other countries if they retaliate, which I expect can and will happen. I'm just not seeing the end game here, dropping an economic nuke on the world and not expecting retaliation.

I'm sure @Reciever is going to want to steer the convo back to tech (rightfully so), so this is all I'll say about the tariffs and how our hobby is going to get much more expensive sooner rather than later.

------

If more and more businesses, both small and large, start to use 5090s for AI server needs, that will compound pricing on top of everything. It would also harken back to mining farms and lots of GPUs being sold en masse. For 5090 flagship cards, it's a potential problem, but for all the cards underneath I expect supply to continue to flow for both AMD and Nvidia. If you're content with 5080 on down, you should be good to go soon.

Yeah, that 10900k was one of those CPUs. On the desktop, when pushed to 300w, the SP113 was clearly the winner, hitting 5.5+ pretty easily, but in the X170SM-G? I just kept comparing R23 runs going, "this can't be right" because it was running so much better than all the ones I tested before it and all the ones I tested after.

Yeah, you and @tps3443 run 14900hx chips while mine runs a 7950x3d. He has an Acer while you and I have MSI variants. I did swap out the stock drive for a heatsink-equipped 990 Pro 2TB. I'll have to check the memory temps.

I've been using this since 2016 and if I'm away for a while, I will pack it in my laptop bag. It works fantastic. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Astral disassembly and block. I thought the Astral used LM. Kind of glad to see it doesn't after the Matrix. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

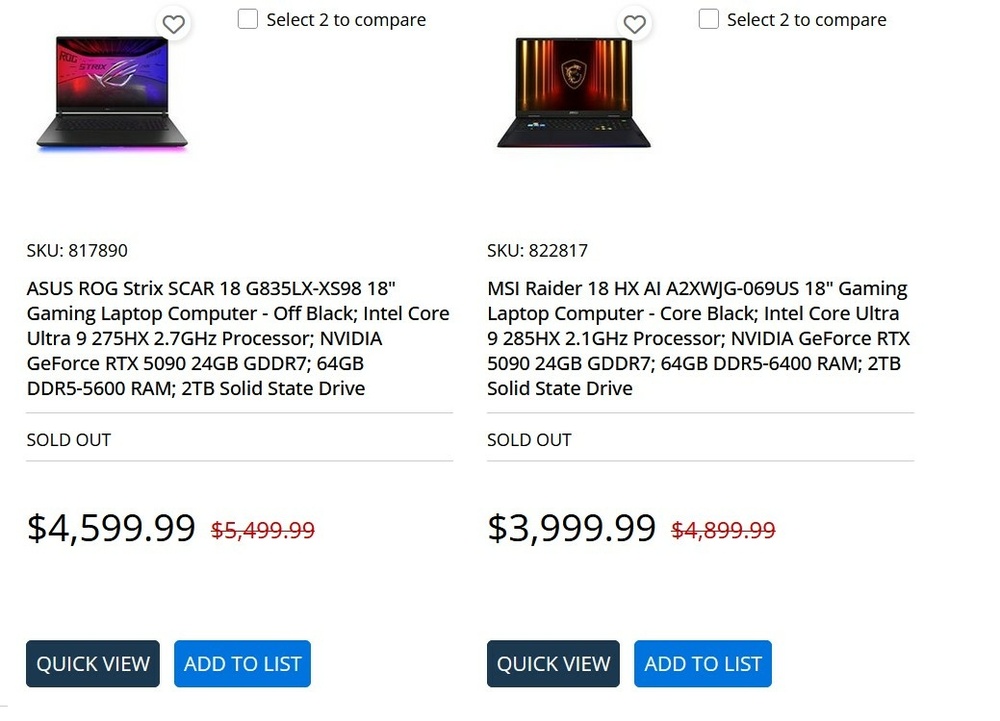

The sweeping tariffs announced today across basically ALL countries, along with increasing China's tariff rate to 54%, are mind boggling. The <$800 loophole is closing too. I wish we would just focus on China at the moment but alas.... "Liberation Day" 🤑🤮

With the way things are going, buy any hardware you want now if you're in the US, as much higher costs and a recession are almost guaranteed, along with a lot of US based businesses going under because US buyers can't or won't buy their goods at those costs. If this sticks, expect computer part prices to skyrocket in the US. I don't even know where GPUs are going price wise at this rate....

It's a game of chicken at this point and I'm not really sure China will blink, let alone the sheer amount of damage we are doing to our relationships with all the other countries in the world including those we call (or called) friends and allies.

As always, my main concern is making sure exemptions are placed on necessities required to exist. I really am not as concerned about the top end Lincoln Nautilus going from 80k to 120k+ as I am about the cost of necessities, from food to household items, jumping from $10 to $15 across several ranges that add up quickly for those barely getting by or even those slightly above that.

"Hey, tariffs have made the price of already unaffordable American made items cheaper than previously affordable but now extremely unaffordable imports....are we winning yet?"

Imagine in the USA, where ~11% are classified as poverty level and some studies show 50%+ live paycheck to paycheck, suddenly everything goes up in cost by 10% or more because of tariffs, which are known to be another form of taxation. I don't care how you sugar coat it and wrap it in false patriotism and puffery......tariffs = tax on citizens. Do you think companies are going to suck that up? Nope, they're going to pass as much of it as they can right along to consumers.

Trump is betting big with house money. I truly hope he knocks it out of the park, but if he doesn't, this could be catastrophic for the US.

With today's announcement of 54% tariffs on all imports from China and Liberation Day, these prices may seem cheap in a few months.....

Looks like it should be ready for pick up on Saturday now and I'm going to pick it up. Worst case scenario, I return it if it has too much coil whine and/or that 4th fan sounds like what @Papusan is proclaiming: Darth Vader's breathing system at the end of Return of the Jedi after the emperor tunes him up before taking a swan dive. If so, back it will go.....

$3999.99 isn't crazy out of range for the 18 Raider with the 285HX, 64GB 6400 memory, 5090, 2TB SSD and a mLED display though, but it's a heck of a lot more than I paid for mine. After tax that is ~$2k more than I paid. No thank you.

Nope, when I got home from Florida we got sick with Covid pretty quick and it sat in the bag till last Friday when I updated it for an out of town trip for a family member's wedding, where it handled gaming nicely, and now (lol) it's back in the bag again. I always use a set of old school "laptop balls" on all my primary laptops for keyboard angling and better cooling.

Next time I bust it out, I'll collect some before and after OC data from it. I know you asked me a week or so ago, but it was still in the bag.... -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Oh Nvidia......you rascally rabbit you! 5070ti is really shaping up to be the sweet spot for Nvidia when MSRP settles down to $749.99..... -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

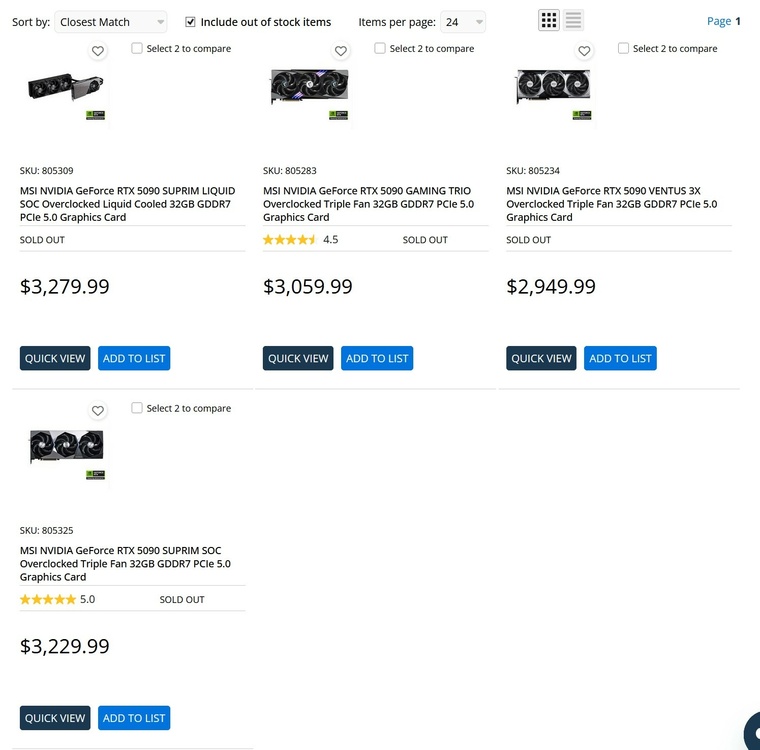

I'm looking forward to your results! I always follow the X170 threads (along with NH55 of course) to see what new results pop up. I just know when all was said and done, I tested that 10900k against many off the shelf variants and 4 specifically targeted binned chips including an SP113 I borrowed for a few days and it was still better for laptop use. It was better stock IHS than all the others including the delidded ones. It was just a great chip in the X170SM-G. Last cash grab as the market normalizes to a degree and all the heavy hitters move out. Hmmm, who was it that said everything would settle down a bit by the end of April? 🤣 FOMO makes people do crazy things. Finally the Apex is arriving. I think what we've seen is latching onto a very valid reason (tariffs) and using that as a means to increase prices as much as possible even beyond and trying to at least touch off of scalper prices to maximize profits with the ability to lower costs when needed. I will be curious to see when sales drastically slow and prices have to come back down, how that suddenly "works" with tariffs still in play. I have no problems with AIBs charging as much as they want. It is up to the consumers to say no. I know for many, even $3k-$4k still isn't much to them as they spend much MUCH more elsewhere on their hobbies and leisurely pursuits but still.... As for the FE, it is compact and elegant. The fact you can slap a full tilt 5090 in a SFF 2 slot design is pretty awesome. It is not for those who want to overclock but want full 5090 power out of the box that isn't ginormous. It does run warm but well within spec and doesn't throttle. It is also priced the best easily at $1999.99. I fully get why it exists but also why certain segments have no desire for it. Yup, the early adopters with better spending/worse common sense are drying up along with a steady trickle of inventory coming in. You also have scalpers slowing down as it is past the "easy money" stage of the program. 
Monitoring a few stock bots, other brands are bringing cards to the market somewhat regularly too but they are usually snapped up pretty fast (or were). The real question will be how the market and companies respond going forward. -------------- Remember the outlandish prices for 5090 laptops announced? Discounts already on MSI and Asus models. Nobody in their right mind is going to pay those prices. On another note, I didn't realize MSI went with the 285HX in their Raider. I thought only the Titan was getting it so that's nice to see. Basically no real difference real world but still.... Unfortunately the 9950X3D variant for MSI is still sitting at $5099.99 atm, but we will see.... -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

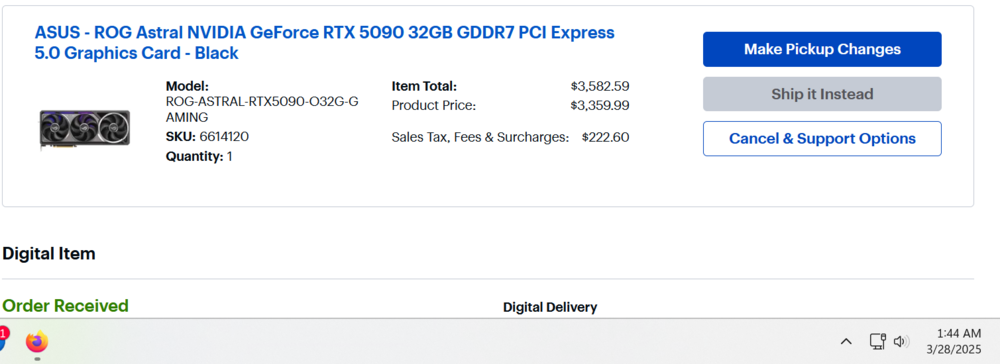

I'm so glad a board member picked it up especially @1610ftw. Hopefully he gives it a whirl in his X170SM-G to see how far it can flex especially compared to the SP101 I sold him that was my best sample I had including vs my golden sample like yours but your SP106 was rated higher I think than my LTX sample. --- And yeah, supply is trickling in steadily now but more importantly demand at those higher price points is waning fast. The fact those combo deals which aren't even that bad (Sub $3k w/ a z890 Motherboard) for a gigabyte shows something is going to have to give especially for perceived "B" tier cards. I'm thinking of cancelling my Astral 5090 pickup at the end of the week. I just am having problems stomaching a $3.5k 5090 purchase. I know it's an Astral and all that, but the price just seems crazy high. But on the other hand, Astrals have been binning pretty nicely overall but I know me and I'd be just as content with a $2k FE or a $2.2k PNY and keep it moving. I'll continue to mull it over mentally. 🤣 I could always just play with it and see if that justifies the purchase. I do have a 60 day return window but still that isn't realistic considering I have to pay for it within 30.... -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

What's in the box? (In my Brad Pitt from SE7EN voice)

---

5090 sales are definitely slowing down and everything else Nvidia is seriously slowing down.....

Gigabyte, Zotac and MSI combo deals on Newegg have been up all day, with some of the Gigabyte combos even sub $3k: the $2639 Windforce paired with a Z890 MB is still available for $2939. That includes a free Star Wars Outlaws Gold Edition key (~$109 value).

Like I said, pre-builts are available everywhere now for order. Upper end 5070, 5070ti and 5080 cards (aka the stupidly overpriced versions) are in stock on NE (shipped and sold from NE only) and some on BB too.

The real question is, with tariffs in play, will the prices remain the same, continue to go up (IE, MSI just raised their prices again) or fall? What is the actual breaking point?

9070xt's are all out of stock atm on NE (shipped and sold from NE only) and BB. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Absolutely awful deal no doubt about it. If I don't like it, it will be going right back. I have a 60 day return window. I wonder if you could have even picked up the LC on their site at the old price.....

- Astral $1649.99 vs $999.99 = ~65% markup over MSRP
- Astral $3359 vs $1999.99 = ~68% markup over MSRP

Ugh....

I agree. While I do like my 32" Alienware OLED, I find I still prefer the picture quality of my BenQ 10-bit 32" IPS display overall in every aspect, but it is capped at 60hz. Every time I'm working/tuning/building a system with it and launch some games for testing, it just reaffirms my love of its display. I might try one of BenQ's 10-bit 32" 144hz displays.

I have a few Asus Vivobooks here with Ultra 7 and Ultra 9 CPUs with 15.6" 1080p OLED displays and they're nice, but I always preferred the displays on my X170KM and SM along with even the P870TM I had. I thought those 144hz displays were great.

It is starting to show its age though. I moved my SP109 14900KS into the wife's system and I suspect that is where it will stay for the next 2-3 years or beyond. It's running great, boosting to 6.2 in WoW, temps under 70c and pulling under 1.4v within the Intel recommended limits coupled with a dialed in LLC and UV. Still a banger of a platform though.

---

Like above, OLED is nice, but I find myself really preferring the picture quality and colors (after both were calibrated) of my BenQ 32" 10-bit IPS display.

----

5090 demand is definitely leveling out, at least in regards to overpaying past MSRP and scalping. Pre-builts are in stock everywhere now and combo deals are lasting a lot longer on da egg, even simple combos like Gigabyte 5090+MB only.

But MSRP keeps creeping up...... MSI also looks to have raised prices across the board with the Liquid and Suprim now costing $3k+ in the US and even the damn Ventus clocking in at $2949.99. -
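The two markup figures in the post above check out. A minimal sketch of the arithmetic, using only the prices quoted there (the helper name `markup_pct` and the dictionary labels are just for illustration):

```python
def markup_pct(street: float, msrp: float) -> float:
    """Percentage that `street` price sits above `msrp` (hypothetical helper)."""
    return (street / msrp - 1.0) * 100.0


# (street price, MSRP) pairs exactly as quoted in the post.
quoted = {
    "Astral vs $999.99 MSRP": (1649.99, 999.99),
    "Astral vs $1999.99 MSRP": (3359.00, 1999.99),
}

for label, (street, msrp) in quoted.items():
    print(f"{label}: ~{markup_pct(street, msrp):.0f}% over MSRP")
```

Swap in whatever the current street price is to see how far a given card has drifted from MSRP.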

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Binning is Winning especially when you're trying to push beyond factory specs (which is really all you're guaranteed). -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

I listened to it with headphones and again, I'm just not seeing what all the uproar or whining is about, especially post vBIOS update. Compared to my Powercolor 7900xtx or 5700xt that is nothing. It truly isn't. Maybe it needs to be heard in person?

Maybe I'll pick up my shiny, new Astral on Thursday or Friday, slap it in, play some games and immediately go, "oh hell no!" and back it goes if it is that bad.

....OR I'll plug it in and run some tests/games and go, "People will whine and complain about just about anything these days..." 🤣

I guess we'll see soon enough.

-----

I was wondering, especially since at $3k+ that is no small bit of change.

You'll get no arguments from me @Papusan. Look at the crazy pricing over here..... -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Thursday! -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

After der8uer's video, I actually spent time hunting down YT videos of the card in action and even before the vBIOS update, it wasn't bad at all. Nobody complains in the forums about it either. I actually put headphones on to really listen and the overall sound profile of the Astral is better than half the cards I've owned in the past easily. ---- But having owned your LC for a bit now, are you overall happy with it or are you going to return it? -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Asus did address the harmonics issues with the fourth fan with a vBIOS update but I get your point. For a company that charges such an Asus tax, you would expect HOF levels of perfection and we're just not getting it. It's frustrating. What I find frustrating about Asus is they hit it out of the park with their GPU Tweak software, pin monitoring and, to a degree, their build quality, but then fail by cutting costs everywhere and charging so much more overall vs MSRP.

I would think it would be the ram, but it is TG which is usually pretty solid and it is rated at 8200, but still it *could* be the ram. It could also be your board, as alluded to before, being a 2DPC board that is stalling with issues at >=7600. I know the stupid $80 1DPC Asrock board bested my 2DPC Carbon pretty easily for 8000+.

Physics cannot be defeated. The reason the 4090 was so much better than the 3080ti mobile was the node shrink. Keeping the same node means there's only so much you can do with a 175w envelope and there was no way you were going to see a major performance bump. Then laptop makers decide to insanely bump prices into the stratosphere for the 5000 series...... -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Astral flexing some seriously nice clocks there @Papusan! I haven't seen a bum Astral (air or LC) yet really. I don't think I can resist the allure of my BB order. 🤣 @tps3443 what kind of clocks/scores are you getting with the 5090 FE in comparison? @jaybee83 can't wait to see some Suprim loving up in here! All roads lead to Rome. Our 2nd / terts are very similar, but my primary are based around 2200/6400 vs G2(4), nice! ---- @jaybee83 x870e crosshair Extreme if you want to upgrade and continue to address us peasants with Hero only 🤣 https://videocardz.com/pixel/asus-rog-crosshair-x870e-extreme-motherboard-has-been-pictured -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Yeah he is! Very nice @Papusan! -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

$999? Heck $1499.99 I would snatch up a 5090 with missing ROPS ASAP. Anything above that? Nope. $3150 is hilariously funny. I suspect they can't really compete against the 5070 and especially the 9070xt..... B580 is a unicorn in price:performance (assuming you have somewhat decent modern hardware). Nvidia is making money hand over fist and we know anything not AI is cutting into their potential overall profitability but they have to keep AMD and to a much lesser extent Intel honest. They know they can't just abandon the gamer market outright because AI could contract at any given time and they will need that gamer/prosumer market to still be intact and valid. Their $8600+ card is getting all that 5090ti love we will never see. Want the best for the next 2-3yrs? Suck it up and get a 5090. 😞 -

(Also posted in the X170KM thread) If someone is looking for a sweet 10900k sample (SP106), message @tps3443 here. He's selling his Golden Sample chip here: https://www.overclock.net/threads/10900k-golden-sample-1-of-200.1815617/?post_id=29448362#post-29448362 but you can also reach him here.

-

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

All it takes is ***ONE*** leak of the XOC vBIOS and we all win! 😁 Unless, like I said before, the vBIOS is tied directly to the software and individually encoded, upping security from last time.

We know @tps3443 is gonna end up getting an EK Block at a minimum and adding it into his loop. He might even spin up the CHILLAH!!! to really get it going. 🤣

And yeah, the pattern is prison rules are now in effect for 5090 AIBs.... 🤔

Unfortunately this has been going on for quite a while now. I can't fault the bean counters. It is their job. They just respond to market conditions while topping it off with as many cost cutting measures as they can get away with to continue to pad the bottom line while inching prices upward till they get their hand slapped. I've always been content as long as they at least also offer a few top end enthusiast parts while flooding the market with the other bits. Apex motherboards and Astral cards, at least from Asus, have some of those properties (along with the Asus tax).

----

And as expected, the 5090 mobile is going to be very underwhelming vs the 4090 mobile because, well, physics. Decent rationale as to why DLSS and FG matter in laptops because you can't slap a 575w part in a 175w envelope. He even touches on the older laptops and how thick and big they were that could handle more power and heat with their dual adapters and such:

Review of Razer and MSI: https://hothardware.com/reviews/nvidia-geforce-rtx-5090-mobile-gpu-preview -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

So I scored a Gigabyte 9070xt from BB that was delivered today. With discounts and rewards = $739 after tax. Not doing back flips over this one, but could be much worse. Can't wait to dig in on this one next week and run some tests with WoW in my known testing spots to see how it fares against the 5080 and 4090 data.

----

I also snagged an Astral 5090 on BB. Total price after rewards and discounts = $3403.46. Seriously on the fence about this one, lol.

If the 10850k is your best CPU, that has to be the benching rig CPU with hopefully decent (>=3600) DDR4 sticks. I can't see regressing to a 9700F for a benching rig but that's just me.

And it starts..... The march to an EK block..... 🤣 -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Used to do that and there was a massive 2c difference in temps for added noise. I actually removed them (left the bottom fans but they're unplugged) but I might put some 140mm back in as I find the AC 140mm run whisper quiet so why not? Remember before I had the 4090 LC in there. The top and back is so porous that even full tilt, I can stick my hand in the ambient air inside and feel barely any change. Main indicator is memory temps never go over 45c before or after but the 5080 doesn't spit out nearly as much heat as the 5090 FE. If I get an Astral or Suprim 5090 I might change my tune! And visually and dimension wise, the 5080 FE is identical to the 5090 FE. You can't tell the difference until you put them on a scale and/or notice the 5080 badge. -

*Official Benchmark Thread* - Post it here or it didn't happen :D

electrosoft replied to Mr. Fox's topic in Desktop Hardware

Let's be honest. Even $3k is too much..... but at this point you just knuckle down and get the card you want....

The tariffs are alive and well in the US and may continue to climb. The dollar is weaker right now. Couple those two things and we have the prices we have atm. The last holdout in the US, ironically, is Nvidia keeping their $999 and $1999 price points.

But tariffs aren't the only reason. Component shortages, demand and good old-fashioned greed also play into that equation, because other areas aren't experiencing price hikes as severe as GPUs:

- Motherboard prices are up too but nowhere near on the same scale as GPUs.
- CPU prices have held relatively the same since last year.
- Funny enough, last year there were predictions of SSD prices going through the roof but they either stayed about the same or went down.
- Memory prices are also about the same or have fallen in many areas.....

Before we go patting Nvidia on the back for holding steady with their pricing, AIBs also have razor thin margins on GPUs thanks to Nvidia's greed, so when AIBs are presented with a valid scenario to raise prices, why not raise them as much as possible as many times as possible?

"Greed....is good" - Michael Douglas in Wall Street