Everything posted by Mr. Fox

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

One always has to be very careful with Amazon and Newegg to only purchase products that are sold and shipped by them and not resellers. It's a roll of the dice what the return policy will be if you buy from a third party vendor selling in their marketplace.

One of the things that gives me pause is what anecdotally seems like a normalization of performance and limited ability to overclock enough to distinguish yourself on a leaderboard. If this is true, it kind of defeats the primary purpose of me wanting one. I'd never buy it with gaming being the primary purpose. I do that too little for it to matter and both of the GPUs I own are more than capable of providing a very gratifying experience when I do.

I also remind myself that it's not a one and done. I don't want air cooling and I don't want hybrid. So that means added cost for a waterblock and I don't want to use the crappy short pigtail so it means either a PSU upgrade or a fairly affordable Cablemod replacement. So, whatever I do requires additional costs to have what I want, how I want it.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

You're welcome. I am glad that you're getting results as good as I am. Be sure to post in the BIOS update thread. There are a lot of whiners with Classified boards complaining that their 4-slot mobos don't overclock the memory as well as the 2-slot Dark, Apex or Unify-X motherboards, and some are expecting their low budget Samsung B-die to overclock like Hynix, LOL.

What is your forum name at EVGA Community? It looks like there are 3 or 4 "Talons" over there and I can never figure out which one is you.

Some 4090 owners are having no-POST/BIOS freeze issues, some with black screen issues until Windows loads (no video in BIOS), with the GPU installed. Some kind of weird vBIOS firmware conflict. What GPU are you running? Have you had any issues on the Dark mobo with it?

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

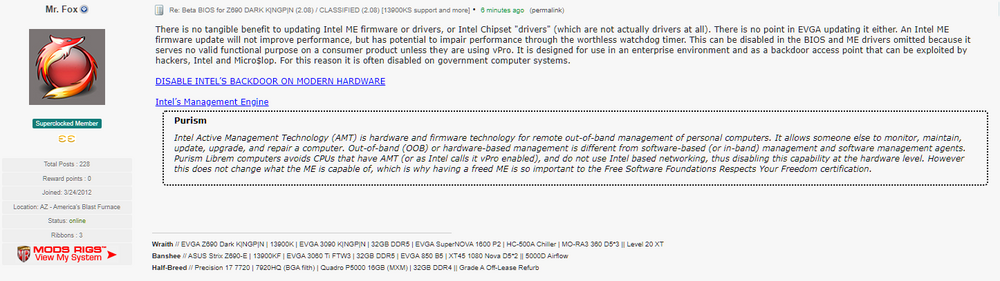

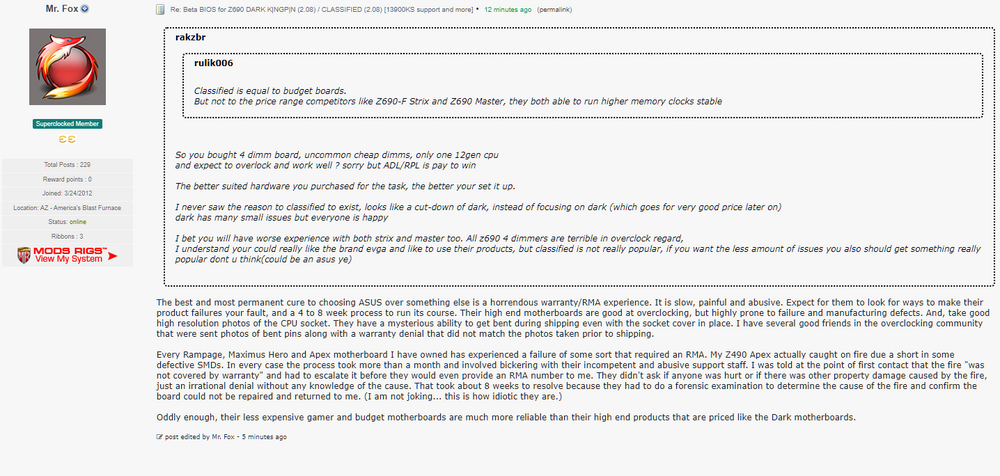

To build on the reality of consumer stupidity and ignorance, here is a repost of my comments in EVGA forum. People keep griping that EVGA is not updating IME or providing new ME drivers, which is utterly ridiculous. EVGA actually allows disabling Intel ME in the BIOS, but those people are oblivious to that benefit, which other OEMs do not offer. EVGA also leaves it unlocked so you can easily flash an old ME version if you wish to do that. Most OEMs do not allow it to be disabled/enabled in the BIOS at will, or downgraded to an older firmware version.

There is no tangible benefit to updating Intel ME firmware or drivers, or Intel Chipset "drivers" (which are not actually drivers at all). There is no point in EVGA updating it either. An Intel ME firmware update will not improve performance, but has potential to impair performance through the worthless watchdog timer. This can be disabled in the BIOS and ME drivers omitted because it serves no valid functional purpose on a consumer product unless they are using vPro. It is designed for use in an enterprise environment and as a backdoor access point that can be exploited by hackers, Intel and Micro$lop. For this reason it is often disabled on government computer systems.

DISABLE INTEL’S BACKDOOR ON MODERN HARDWARE

Intel’s Management Engine

At the end of the day you're better off with a bricked ME than a functional one. The behavior and stability of your computer will be unaffected, but it might be more secure.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I am feeling kind of proud of myself. I had several opportunities today to purchase "not from reseller/sold and shipped by" Zotac and MSI Suprim 4090 GPUs between $1700 and $1800 on Amazon and Newegg (including one just about 10 minutes ago). While I was tempted, I said no to checking out, emptied my shopping cart and walked away. 12 equal payments with zero interest wasn't enough to change my mind. These would have been easy payments, but when I considered the cost per month it seemed like more money than the degree of pleasure I would derive from having it. The lure was definitely very tempting though. I think I would be lucky to get 90 days worth of benching fun out of it before it no longer served any useful purpose for me. It is probably good that I do not have a Microcenter here or I would likely be doing a "free road test" right now knowing I could bench it a bit and get my money back if I changed my mind.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I can't remember now, and don't really want to. Maybe someone else knows and can give you some sound advice. That said, a P870 is a whole lot better than a P7XX.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Why not LTSC 2019 (1809)? It has less cancer. Just use a normal ISO, run the script to destroy/remove Defender, then use the CTT debloat script and you're good to go. It will cut your life-sucking services by about half and be similar to Windows 7 overhead. The modified ISO files are often very buggy and I don't use them. And the end product isn't any better than doing what I suggested.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

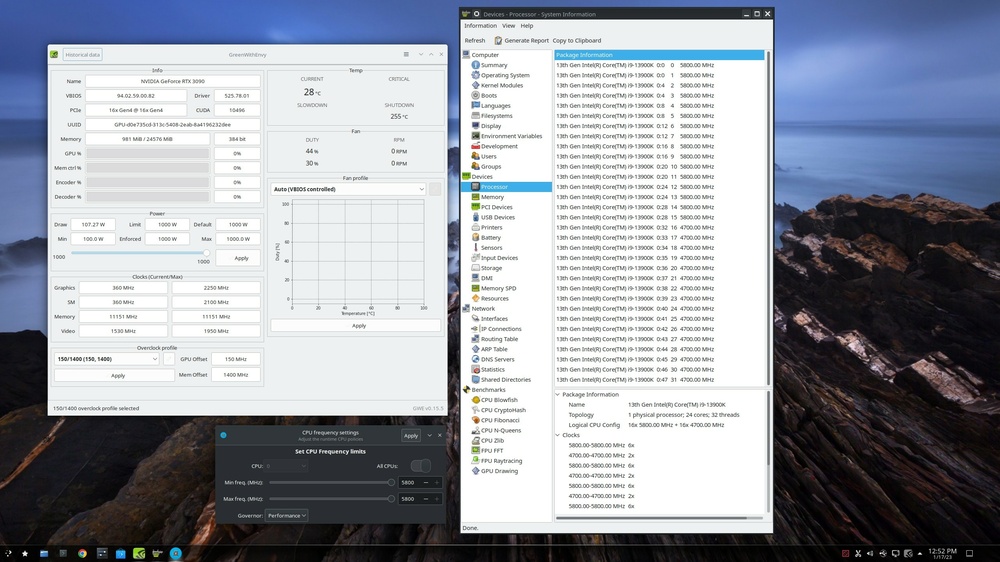

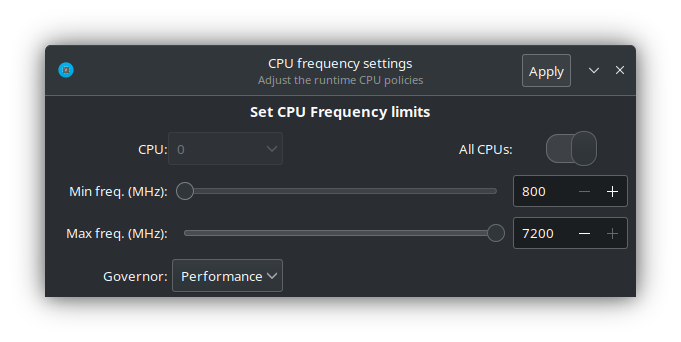

Limited to a great degree, but 99.9% of all overclocking is something I do in the BIOS anyway. I almost never change anything working within the OS like what was often necessary with a laptop due to lack of BIOS controls. The problem I have had on the Strix mobo was not with overclocking. It was Linux not being able to identify or report accurately on the CPU clock speeds. It was/is working perfectly on my EVGA Z690 Dark and not working at all on the ASUS Z690 because their implementation of ACPI is all buggered up and not talking to the Linux kernel. I am happy to have found a workaround for the incompetence of ASUS.

Given your occupation, what you would likely find even more amazing is the fact that, with very rare exception, almost every game title on Steam that is "Windows Only" runs flawlessly on Linux using Proton Experimental. In many cases the DX11/12 titles run better on Linux using Vulkan, even when it is "not supposed to work" it almost always does.

Green With Envy is better than MSI Afterburner. I do not know if there is an AMD solution for GPU overclocking, but it is very good for NVIDIA.

Things are finally starting to fall into place. I was beginning to think I would never get the ASUS motherboard to function correctly on Linux like my EVGA motherboard. ASUS is such a pain in the butt sometimes. CPU seems to be functioning correctly now. I used Linux all day long for work yesterday. Once I figure out how to get Micro$lop Office working correctly on Linux, I might finally be able to kick Windoze to the curb. Everything is just so much cleaner without a trashy OS.
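For anyone wanting to check whether Linux is reporting per-core clock speeds at all, here is a minimal sketch. It assumes the kernel exposes the standard cpufreq sysfs files (`scaling_cur_freq`, in kHz) under `/sys/devices/system/cpu`; the function name `read_core_mhz` is made up for illustration, and this is not necessarily how the workaround mentioned above was found or verified.

```python
from pathlib import Path


def read_core_mhz(cpu_root="/sys/devices/system/cpu"):
    """Return {core_name: MHz} for every core that exposes cpufreq data.

    Assumption: the kernel publishes scaling_cur_freq (in kHz) for each
    core. If no cpufreq driver is active, the result is simply empty.
    """
    speeds = {}
    pattern = "cpu[0-9]*/cpufreq/scaling_cur_freq"
    for freq_file in Path(cpu_root).glob(pattern):
        try:
            khz = int(freq_file.read_text().strip())
        except (OSError, ValueError):
            # Unreadable or malformed entry: skip this core.
            continue
        core_name = freq_file.parent.parent.name  # e.g. "cpu0"
        speeds[core_name] = khz / 1000
    return speeds


if __name__ == "__main__":
    speeds = read_core_mhz()
    if not speeds:
        print("No cpufreq data found; the kernel is not reporting clock speeds.")
    # Sort numerically by core index so cpu10 comes after cpu9.
    for core, mhz in sorted(speeds.items(), key=lambda kv: int(kv[0][3:])):
        print(f"{core}: {mhz:.0f} MHz")
```

An empty result on one board and a full list on another, with the same kernel and CPU, would at least be consistent with the firmware-side problem described above, though it does not prove the cause.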

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

The possibilities are limited only by their incompetence and lack of concern for the importance of producing a good product. This is 2023, so they rely on end user stupidity and the deception of frequent buggy patches and change only for the sake of change to be mistaken as something useful and beneficial. The exact opposite is true.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I do not need to even try to imagine this. It is my normal modus operandi. OS updates are especially unimportant and easily the fastest and most effective way of increasing the possibility that your system is going to be effed up in one or more ways.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Basically a rerun of the scenario between 12900K and 12900KS. Anyone planning to buy a 13th Gen i9 CPU that is not a KS would probably do well to limit their selection to 13900KF to avoid bottom-of-the-barrel silicon. The best 13900K samples will likely be branded as a 13900KS.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I do not like either of them, and the latest versions of them are less desirable than the versions they replace. In that respect, Winduhz 11 is a downgrade that is true to form and consistent with the way things are headed with Android and ChromeOS.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

No. Given current trends, things should probably be even worse than they already are, and they are already terrible. The current trends are not for the better.

Edit: I have spent the past 10 minutes thinking about it, and I am unable to identify anything meaningful that is better today than it was 2, 4 or 6 years ago. Literally everything that actually matters is trending undesirably now. That's the trend, so Winduhz 11 is in keeping with the trend.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I do, and most things are the same or worse than a recent version of Windows 10. Setting aside my general dislike of it, I do not believe you will find it useful for benching. When it comes to benching I will use whatever OS produces the highest scores, even if I do not like the OS. I haven't identified anything (yet) that Windows 11 is better at. I haven't gained performance in anything, but have lost some here and there. It's essentially a dumbed-down version of the latest W10 OS with an uglier and more inefficient GUI skin. For gaming, six of one, half dozen of the other. It doesn't matter. Unless you are struggling to hit 60, a variance of 5 to 10 FPS is irrelevant and within a normal margin of error.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I have found it difficult to manually select a font color that is easy to read regardless of the forum theme selected, so I leave the color selection on "auto" for that reason. What is easy to read on a dark background is not good on a light background, and vice versa. It is probably worse for those of us that are partially colorblind. Dark colors on a dark background and light colors on a light background are a challenge for me.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

When other people in your life are not into it, you take some flak for it, too. I have an addictive personality. When I enjoy something I want to binge on it, but I also get burnt out on it easily. My pattern is, I may go a year without playing anything. Then I will pick something up, like it a lot and play it for 20+ hours in one or two days on a weekend, then never touch it again, or anything else for months.

If I do not become mesmerized with it during the first 15-20 minutes then chances are great I will never touch it again. I think that part is fairly common. That could be why we do not see playable game demos as often as we used to. For me, the demo was usually enough to get as much out of it as I ever would, and convince me not to waste money on it. That, or I added it to my list of titles I liked well enough to buy whenever the price dropped to $20 or less. Many of them have never been installed, so I will have lots to keep me busy in retirement, LoL.

Many of the games I own were purchased only for an included benchmark, and many of the playable demos included a benchmark. That made buying them unnecessary. Some I can immediately tell from the benchmark that I would hate the game. Best examples here are the Final Fantasy benchmarks.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Silly, silly people. Always in search of a newer driver... because newer is always better. They will never learn because they have been taught wrong and still believe the crap that they were told. Somebody they thought was smarter than them, but wasn't, set them up for failure. What makes it so sad/stupid/messed up is they think they are "optimizing" their system, but what they are really doing is choking it and making it slower.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

The only thing we can count on at this point is the continuation of bad decisions by people who should not be making decisions. Black parts in a white case certainly look much better than white-on-white or white parts in a black case. White on white is the worst-looking combination. It has no character and is very boring.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

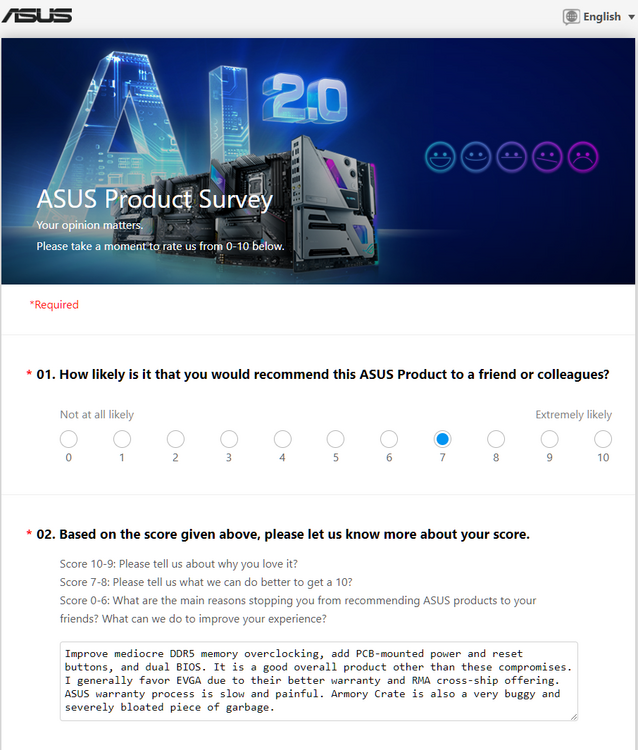

I think a lot of people that buy them just don't care about the color. White is only popular with the people that like it, and they are a minority. From what I understand (not sure how accurate the data is), black cases outsell white cases by like an 8 to 1 margin. ASUS seems to have some people with abnormal aesthetic preferences making decisions. They make what they like and if end users don't like it they don't care. I think white looks beautiful when it is brand new and belongs to someone else. As soon as it gets dirty, scuffed or scratched, or if I have to look at it for longer than a day or two I am ready to get rid of it. Every blemish screams "over here... look at me" LOL.

Try OpenRGB. It will probably work. It is what I use because the alternatives provided by the motherboard OEMs are pure dung even on a supported OS. ASUS Aura/Armoury Crate is beyond horrible. It is so bad it should be against the law. It makes most of the other digital cancer look like the neatest thing since sliced bread. It not only constantly malfunctions, it adds more performance-killing, resource-leeching bloat to a system than any other piece of garbage software that I have ever seen before.

The LanCool III is the first of the LanCool series that I like. I did not care for its predecessors. It looks wonderful. I wish they made a version of it that was three or four inches larger in each direction. I would have to have one if they did. It is certainly priced right for what you get... very good value for the money. I used to want the O11 Dynamic XL, but it has become so ordinary that I no longer like it. It still looks nice, but it is so common (including copycat cases) that it has lost distinction at this point.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

Just based on my personal preference, but dadgum... that is one hella butt-ugly GPU. I bet the kiddos just love the worthless card and tacky ring. I guess dreams are inclusive of nightmares, so by that measurement it could be my dream GPU, LOL.

Hmm... I scanned through his last few posts to see what I missed, but I am not spotting it. What happened with Brother @electrosoft? I hope nothing bad.

*Official Benchmark Thread* - Post it here or it didn't happen :D

Mr. Fox replied to Mr. Fox's topic in Desktop Hardware

I am always suspicious when I see unopened BNIB listings for computer parts that are deeply discounted. I tend to be cynical, but I always first assume they are stolen. (Entire truckloads of CPUs, GPUs, motherboards, laptops, printers, etc. being stolen is fairly common.) If they are not stolen, then what? Being suspicious of good deals from people you do not know that never have to look you in the eye, or interact with you in a forum like this, is the best approach. Scams used to be few and far between, but now they are status quo and as common as dirt.